
A hands-on guide to doing content analysis

Christen Erlingsson, Petra Brysiewicz


Corresponding author. [email protected]

Received 2017 Feb 21; Revised 2017 May 6; Accepted 2017 Aug 4; Issue date 2017 Sep.

This is an open access article under the CC BY-NC-ND license (http://creativecommons.org/licenses/by-nc-nd/4.0/).

There is growing recognition of the important role played by qualitative research and its usefulness in many fields, including the emergency care context in Africa. Novice qualitative researchers are often daunted by the prospect of qualitative data analysis and may therefore experience considerable difficulty in the data analysis process. Our objective with this manuscript is to provide a practical hands-on example of qualitative content analysis to aid novice qualitative researchers in their task.

Keywords: Qualitative research, Qualitative data analysis, Content analysis

African relevance

- Qualitative research is useful to deepen the understanding of the human experience.

- Novice qualitative researchers may benefit from this hands-on guide to content analysis.

- Practical tips and data analysis templates are provided to assist in the analysis process.

Introduction

There is growing recognition of the important role played by qualitative research and its usefulness in many fields, including emergency care research. An increasing number of health researchers are opting to use qualitative research approaches to explore and describe complex phenomena, provide textual accounts of individuals’ “life worlds”, and give voice to the vulnerable populations our patients so often represent. Many articles and books describe qualitative research methods and provide overviews of content analysis procedures [1], [2], [3], [4], [5], [6], [7], [8], [9], [10]. Some articles include step-by-step directions intended to clarify content analysis methodology. In our teaching experience, these directions are indeed very useful. However, qualitative researchers, especially novice researchers, often struggle to understand what is happening on and between steps, i.e., how the steps are actually taken.

As research supervisors of postgraduate health professionals, we often meet students who present brilliant ideas for qualitative studies that have the potential to fill current gaps in the literature. Typically, the suggested studies aim to explore human experience. Research questions exploring human experience are expediently studied by analysing textual data collected, e.g., in individual interviews, focus groups, documents, or documented participant observation. When reflecting on the proposed study aim together with the student, we often suggest content analysis methodology as the best fit for the study and the student, especially the novice researcher. The interview data are collected and the content analysis adventure begins. Students soon realise that data based on human experiences are complex, multifaceted and often carry meaning on multiple levels.

For many novice researchers, analysing qualitative data proves unexpectedly challenging and time-consuming. As they soon discover, there is no step-wise analysis process that can be applied to the data like a pattern cutter at a textile factory. They may become extremely annoyed and frustrated during the hands-on enterprise of qualitative content analysis.

The novice researcher may lament, “I’ve read all the methodology but don’t really know how to start and exactly what to do with my data!” They grapple with qualitative research terms and concepts, for example, the differences between meaning units, codes, categories and themes, and the increasing levels of abstraction from raw data to categories or themes. The content analysis adventure may now seem to be a chaotic undertaking. But life is messy, complex and utterly fascinating. Experiencing chaos during analysis is normal. Good advice for the qualitative researcher is to be open to the complexity in the data and to draw on one’s flow of creativity.

Inspired primarily by descriptions of “conventional content analysis” in Hsieh and Shannon [3], “inductive content analysis” in Elo and Kyngäs [5] and “qualitative content analysis of an interview text” in Graneheim and Lundman [1], we have written this paper to help the novice qualitative researcher navigate the uncertainty between the steps of qualitative content analysis. We will provide advice and practical tips, as well as data analysis templates, to ease frustration and, hopefully, to inspire readers to discover how this exciting methodology contributes to developing a deeper understanding of human experience and our professional contexts.

Overview of qualitative content analysis

Synopsis of content analysis

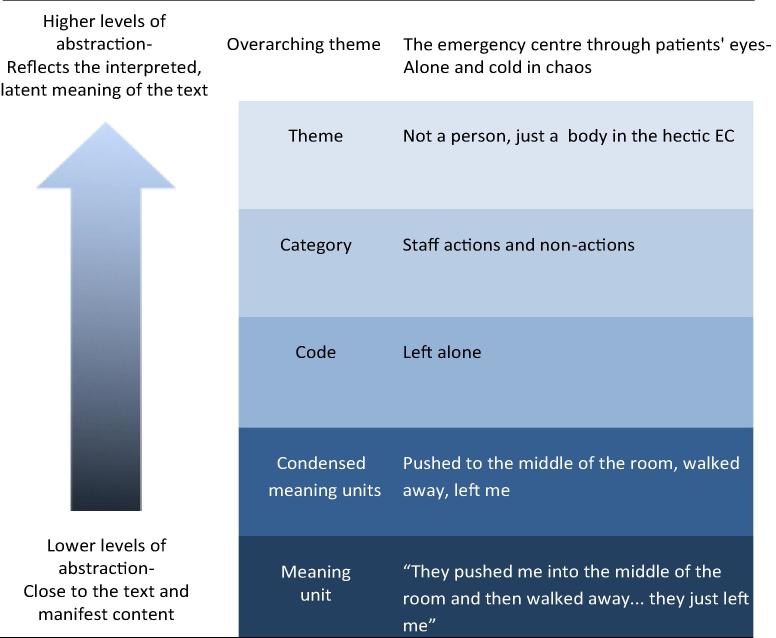

A common starting point for qualitative content analysis is transcribed interview text. The objective in qualitative content analysis is to systematically transform a large amount of text into a highly organised and concise summary of key results. Moving from the raw data of verbatim transcribed interviews to categories or themes is a process of further abstraction at each step of the analysis, from the manifest and literal content to latent meanings (Fig. 1 and Table 1).

Fig. 1. Example of analysis leading to higher levels of abstraction; from manifest to latent content.

Table 1. Glossary of terms as used in this hands-on guide to doing content analysis. *

* More information can be found in Refs. [1], [2], [3], [5].

The initial step is to read and re-read the interviews to get a sense of the whole, i.e., to gain a general understanding of what your participants are talking about. At this point you may already start to form ideas about the main points your participants are expressing. Next, divide the text into smaller parts, namely meaning units, and then condense these meaning units further while ensuring that the core meaning is retained. The next step is to label the condensed meaning units by formulating codes and then to group these codes into categories. Depending on the study’s aim and the quality of the collected data, you may choose categories as the highest level of abstraction for reporting results, or you can go further and create themes [1], [2], [3], [5], [8].
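
One possible way to keep track of this chain of abstraction is to store one record per meaning unit. The following is a minimal Python sketch and not part of the published templates; the class name `AnalysisRow` and its field names are assumptions made for illustration. The condensed meaning unit, code and category are taken from the coding choice discussed later in this guide, while the verbatim meaning unit is left as a placeholder.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AnalysisRow:
    """One row of an analysis table (hypothetical structure, for illustration)."""
    meaning_unit: str                # verbatim text from the transcript
    condensed: str                   # shortened text, core meaning retained
    code: Optional[str] = None       # descriptive label for the condensation
    category: Optional[str] = None   # group of codes (manifest content)
    theme: Optional[str] = None      # optional higher level (latent content)

    def level(self) -> str:
        """Return the highest level of abstraction reached so far."""
        if self.theme:
            return "theme"
        if self.category:
            return "category"
        if self.code:
            return "code"
        return "condensed meaning unit"


row = AnalysisRow(
    meaning_unit="<verbatim transcript text goes here>",  # placeholder, not real data
    condensed="Ambulance staff looked worried about all the blood",
    code="In the ambulance",
    category="Reliving the rescue",
)
print(row.level())  # category
```

Leaving `code`, `category` and `theme` optional reflects that rows are filled in gradually and revisited, and that categories may be the highest level reported.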

Content analysis as a reflective process

You must mould the clay of the data, tapping into your intuition while maintaining a reflective understanding of how your own previous knowledge is influencing your analysis, i.e., your pre-understanding. In qualitative methodology, it is imperative to vigilantly maintain an awareness of one’s pre-understanding so that this does not influence analysis and/or results. This is the difficult balancing task of keeping a firm grip on one’s assumptions, opinions, and personal beliefs, and not letting them unconsciously steer your analysis process while simultaneously, and knowingly, utilising one’s pre-understanding to facilitate a deeper understanding of the data.

Content analysis, as in all qualitative analysis, is a reflective process. There is no “step 1, 2, 3, done!” linear progression in the analysis. This means that identifying and condensing meaning units, coding, and categorising are not one-time events. It is a continuous process of coding and categorising then returning to the raw data to reflect on your initial analysis. Are you still satisfied with the length of meaning units? Do the condensed meaning units and codes still “fit” with each other? Do the codes still fit into this particular category? Typically, a fair amount of adjusting is needed after the first analysis endeavour. For example: a meaning unit might need to be split into two meaning units in order to capture an additional core meaning; a code modified to more closely match the core meaning of the condensed meaning unit; or a category name tweaked to most accurately describe the included codes. In other words, analysis is a flexible reflective process of working and re-working your data that reveals connections and relationships. Once condensed meaning units are coded it is easier to get a bigger picture and see patterns in your codes and organise codes in categories.

Content analysis exercise

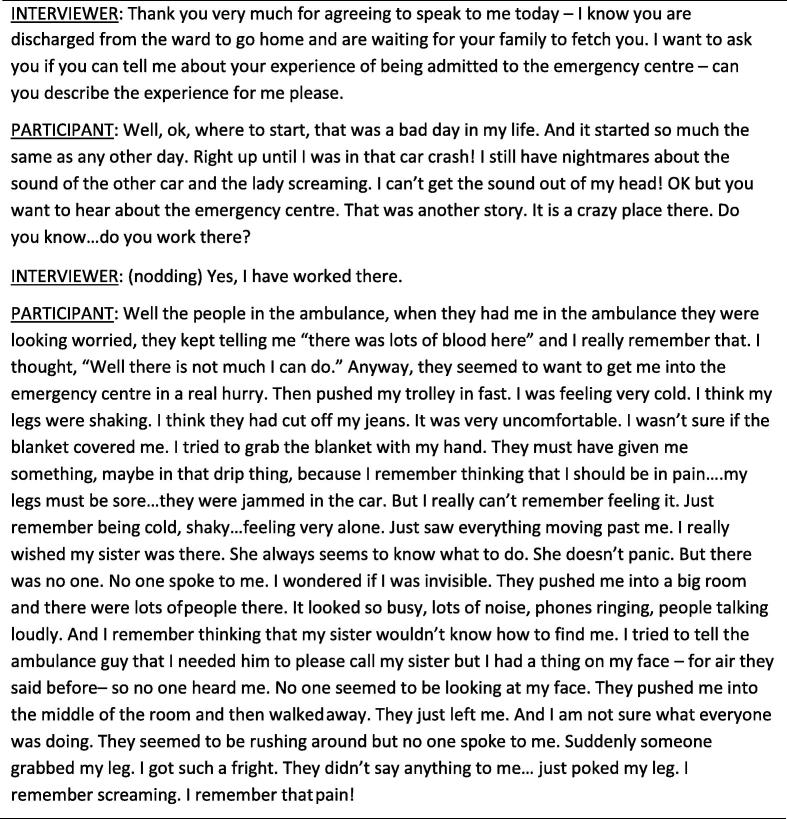

The synopsis above is representative of analysis descriptions in many content analysis articles. Although correct, such method descriptions still do not provide much support for the novice researcher during the actual analysis process. To provide guidance and direction for the novice, the following sections present a practical example of doing the actual work of content analysis. This practical example is based on a transcribed interview excerpt that was part of a study that aimed to explore patients’ experiences of being admitted into the emergency centre (Fig. 2).

Fig. 2. Excerpt from interview text exploring “Patient’s experience of being admitted into the emergency centre”.

This content analysis exercise provides instructions, tips, and advice to support the content analysis novice in a) familiarising oneself with the data and the hermeneutic spiral, b) dividing up the text into meaning units and subsequently condensing these meaning units, c) formulating codes, and d) developing categories and themes.

Familiarising oneself with the data and the hermeneutic spiral

An important initial phase in the data analysis process is to read and re-read the transcribed interview while keeping your aim in focus. Write down your initial impressions. Embrace your intuition. What is the text talking about? What stands out? How did you react while reading the text? What message did the text leave you with? In this analysis phase, you are gaining a sense of the text as a whole.

You may ask why this is important. During analysis, you will be breaking down the whole text into smaller parts. Returning to your notes with your initial impressions will help you see if your “parts” analysis is matching up with your first impressions of the “whole” text. Are your initial impressions visible in your analysis of the parts? Perhaps you need to go back and check for different perspectives. This is what is referred to as the hermeneutic spiral or hermeneutic circle. It is the process of comparing the parts to the whole to determine whether impressions of the whole verify the analysis of the parts in all phases of analysis. Each part should reflect the whole and the whole should be reflected in each part. This concept will become clearer as you start working with your data.

Dividing up the text into meaning units and condensing meaning units

You have now read the interview a number of times. Keeping your research aim and question clearly in focus, divide up the text into meaning units. The identified meaning units are then condensed further while keeping the central meaning intact (Table 2). The condensation should be a shortened version of the same text that still conveys the essential message of the meaning unit. Sometimes the meaning unit is already so compact that no further condensation is required. Some content analysis sources warn researchers against short meaning units, claiming that this can lead to fragmentation [1]. However, our personal experience as research supervisors has shown us that a greater problem for the novice is basing analysis on meaning units that are too large and include many meanings, which are then lost in the condensation process.

Table 2. Suggestion for how the exemplar interview text can be divided into meaning units and condensed meaning units (condensations are in parentheses).

Formulating codes

The next step is to develop codes that are descriptive labels for the condensed meaning units (Table 3). Codes concisely describe the condensed meaning unit and are tools to help researchers reflect on the data in new ways. Codes make it easier to identify connections between meaning units. At this stage of analysis you are still keeping very close to your data with very limited interpretation of content. You may adjust, re-do, re-think, and re-code until you get to the point where you are satisfied that your choices are reasonable. Just as in the initial phase of getting to know your data as a whole, it is also good to write notes during coding on your impressions and reactions to the text.

Table 3. Suggestions for coding of condensed meaning units.

Feeling helpless? Resigned, Powerless? “In god’s hands”? What do you think?

Worried? Feeling lost? Distraught? What do you think?

Developing categories and themes

The next step is to sort codes into categories that answer the questions who, what, when or where? One does this by comparing codes and appraising them to determine which codes seem to belong together, thereby forming a category. In other words, a category consists of codes that appear to deal with the same issue, i.e., manifest content visible in the data with limited interpretation on the part of the researcher. Category names are most often short and factual sounding.

In data that is rich with latent meaning, analysis can be carried on to create themes. In our practical example, we have continued the process of abstracting data to a higher level, from category to theme level, and developed three themes as well as an overarching theme (Table 4). Themes express underlying meaning, i.e., latent content, and are formed by grouping two or more categories together. Themes answer questions such as why, how, in what way or by what means? Therefore, theme names include verbs, adverbs and adjectives and are very descriptive or even poetic.

Table 4. Suggestion for organisation of coded meaning units into categories and themes.
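
The sorting of codes into categories, and of categories into themes, can be recorded in a simple lookup structure once the researcher has made the decisions. The sketch below is illustrative only: the helper `group_by_parent` is a hypothetical name, and apart from the pair "In the ambulance" / "Reliving the rescue" (taken from the practical example in this guide) all labels are placeholders rather than real results. The software only stores the groupings; deciding what belongs together remains the researcher's task.

```python
from collections import defaultdict


def group_by_parent(pairs):
    """Group (child, parent) pairs into {parent: [children]}, keeping first-seen order."""
    grouped = defaultdict(list)
    for child, parent in pairs:
        if child not in grouped[parent]:
            grouped[parent].append(child)
    return dict(grouped)


# (code, category) decisions; only the first pair comes from the guide's example.
code_to_category = [
    ("In the ambulance", "Reliving the rescue"),
    ("Code B", "Category 1"),  # placeholder
    ("Code C", "Category 1"),  # placeholder
]

# (category, theme) decisions; the theme name is a placeholder.
category_to_theme = [
    ("Reliving the rescue", "Theme X"),
    ("Category 1", "Theme X"),
]

categories = group_by_parent(code_to_category)
themes = group_by_parent(category_to_theme)

# Themes are described as grouping two or more categories, so flag any that do not.
thin_themes = [name for name, members in themes.items() if len(members) < 2]

print(categories)
print(themes)
print(thin_themes)  # []
```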

Some reflections and helpful tips

Understand your pre-understandings

While conducting qualitative research, it is paramount that the researcher remains vigilant against bias during analysis. In other words, have you remained aware of your pre-understandings, i.e., your own personal assumptions, professional background, and previous experiences and knowledge? For example, did you zero in on particular aspects of the interview on account of your profession (as an emergency doctor, emergency nurse, pre-hospital professional, etc.)? Did you assume the patient’s gender? Did your assumptions affect your analysis? How about aspects of culpability; did you assume that this patient was at fault or that this patient was a victim in the crash? Did this affect how you analysed the text?

Staying aware of one’s pre-understandings is exactly as difficult as it sounds. But, it is possible and it is requisite. Focus on putting yourself and your pre-understandings in a holding pattern while you approach your data with an openness and expectation of finding new perspectives. That is the key: expect the new and be prepared to be surprised. If something in your data feels unusual, is different from what you know, atypical, or even odd – don’t by-pass it as “wrong”. Your reactions and intuitive responses are letting you know that here is something to pay extra attention to, besides the more comfortable condensing and coding of more easily recognisable meaning units.

Use your intuition

Intuition is a great asset in qualitative analysis and not to be dismissed as “unscientific”. Intuition results from tacit knowledge. Just as tacit knowledge is a hallmark of great clinicians [11], [12], it is also an invaluable tool in analysis work [13]. Literally, take note of your gut reactions and intuitive guidance and remember to write these down! These notes often form a framework of possible avenues for further analysis and are especially helpful as you lift the analysis to higher levels of abstraction: from meaning units to condensed meaning units, to codes, to categories and then to the highest level of abstraction in content analysis, themes.

Aspects of coding and categorising hard-to-place data

All too often, the novice gets overwhelmed by interview material that deals with the general subject matter of the interview, but doesn’t seem to answer the research question. Don’t be too quick to consider such text as off topic or dross [6] . There is often data that, although not seeming to match the study aim precisely, is still important for illuminating the problem area. This can be seen in our practical example about exploring patients’ experiences of being admitted into the emergency centre. Initially the participant is describing the accident itself. While not directly answering the research question, the description is important for understanding the context of the experience of being admitted into the emergency centre. It is very common that participants will “begin at the beginning” and prologue their narratives in order to create a context that sets the scene. This type of contextual data is vital for gaining a deepened understanding of participants’ experiences.

In our practical example, the participant begins by describing the crash and the rescue, i.e., experiences leading up to and prior to admission to the emergency centre. That is why we have chosen in our analysis to code the condensed meaning unit “Ambulance staff looked worried about all the blood” as “In the ambulance” and place it in the category “Reliving the rescue”. We did not choose to include this meaning unit in the categories specifically about admission to the emergency centre itself. Do you agree with our coding choice? Would you have chosen differently?

Another common problem for the novice is deciding how to code condensed meaning units when the unit can be labelled in several different ways. At this point researchers usually groan and wish they had thought to ask one of those classic follow-up questions like “Can you tell me a little bit more about that?” We have examples of two such coding conundrums in the exemplar, as can be seen in Table 3 (codes we conferred on) and Table 4 (codes we reached consensus on). Do you agree with our choices or would you have chosen different codes? Our best advice is to go back to your impressions of the whole and lean into your intuition when choosing codes that are most reasonable and best fit your data.

A typical problem area during categorisation, especially for the novice researcher, is overlap between content in more than one initial category, i.e., codes included in one category also seem to be a fit for another category. Overlap between initial categories is very likely an indication that the jump from code to category was too big, a problem not uncommon when the data is voluminous and/or very complex. In such cases, it can be helpful to first sort codes into narrower categories, so-called subcategories. Subcategories can then be reviewed for possibilities of further aggregation into categories. In the case of a problematic coding, it is advantageous to return to the meaning unit and check if the meaning unit itself fits the category or if you need to reconsider your preliminary coding.
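
Overlap between initial categories can be listed mechanically before deciding whether to introduce subcategories or revisit the coding. The following is a minimal sketch under stated assumptions: the function name `overlapping_codes` is hypothetical and the category and code labels are placeholders, not data from the exemplar.

```python
def overlapping_codes(categories):
    """Return {code: sorted list of categories} for codes placed in two or more categories."""
    seen = {}
    for category, codes in categories.items():
        for code in set(codes):  # ignore duplicates within one category
            seen.setdefault(code, set()).add(category)
    return {code: sorted(cats) for code, cats in seen.items() if len(cats) > 1}


# Placeholder labels for illustration only.
initial_categories = {
    "Category 1": ["Code A", "Code B"],
    "Category 2": ["Code B", "Code C"],
}

overlaps = overlapping_codes(initial_categories)
print(overlaps)  # {'Code B': ['Category 1', 'Category 2']}
```

A code that shows up here is a prompt to return to the meaning unit itself, not an error to be resolved automatically.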

It is not uncommon to be faced by thorny problems such as these during coding and categorisation. Here we would like to reiterate how valuable it is to have fellow researchers with whom you can discuss and reflect, in order to reach consensus on the best way forward in your data analysis. It is really advantageous to compare your analysis with meaning units, condensations, coding and categorisations done by another researcher on the same text. Have you identified the same meaning units? Do you agree on coding? Do you see similar patterns in the data? Do you concur on categories? Sometimes referred to as “researcher triangulation,” this is actually a key element in qualitative analysis and an important component when striving to ensure trustworthiness in your study [14]. Unlike quantitative research, which controls variables, qualitative research is about seeking out variations. Collaborating with others during analysis lets you tap into multiple perspectives and often makes it easier to see variations in the data, thereby enhancing the quality of your results as well as contributing to the rigor of your study. It is important to note that it is not necessary to force consensus in the findings; one can embrace these variations in interpretation and use them to capture the richness in the data.
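
When two researchers have coded the same text, a side-by-side listing of where their codes differ gives a concrete agenda for discussion. The sketch below is an assumption-laden illustration: the function `compare_coding` and the meaning-unit identifiers (`MU1`, `MU2`, `MU3`) are hypothetical, and which researcher chose which label is invented. The code labels themselves echo candidate codes mentioned in this guide.

```python
def compare_coding(coder_a, coder_b):
    """Compare two {meaning_unit_id: code} mappings.

    Returns (differing, only_one):
      differing - units coded by both researchers but with different codes
      only_one  - units that only one of the researchers identified or coded
    """
    shared = sorted(set(coder_a) & set(coder_b))
    differing = {
        unit: (coder_a[unit], coder_b[unit])
        for unit in shared
        if coder_a[unit] != coder_b[unit]
    }
    only_one = sorted(set(coder_a) ^ set(coder_b))
    return differing, only_one


# Invented assignments, for illustration only.
coder_a = {"MU1": "In the ambulance", "MU2": "Feeling helpless", "MU3": "Worried"}
coder_b = {"MU1": "In the ambulance", "MU2": "Powerless"}

differing, only_one = compare_coding(coder_a, coder_b)
print(differing)  # {'MU2': ('Feeling helpless', 'Powerless')}
print(only_one)   # ['MU3']
```

The output is deliberately a list of items to talk through rather than an agreement score, in keeping with the point that consensus need not be forced.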

Yet there are times when neither openness, pre-understanding, intuition, nor researcher triangulation does the job; for example, when one is analysing an interview and is simply confused about how to code certain meaning units. At such times, there are a variety of options. A good starting place is to re-read all the interviews through the lens of this specific issue and actively search for other similar types of meaning units you might have missed. Another way to handle this is to conduct further interviews with specific queries that hopefully shed light on the issue. A third option is to have a follow-up interview with the same person and ask them to explain.

Additional tips

It is important to remember that in a typical project there are several interviews to analyse. Codes found in a single interview serve as a starting point as you then work through the remaining interviews coding all material. Form your categories and themes when all project interviews have been coded.

When submitting an article with your study results, it is a good idea to create a table or figure providing a few key examples of how you progressed from the raw data of meaning units, to condensed meaning units, coding, categorisation, and, if included, themes. Providing such a table or figure supports the rigor of your study [1] and is an element greatly appreciated by reviewers and research consumers.

During the analysis process, it can be advantageous to write down your research aim and questions on a sheet of paper that you keep nearby as you work. Frequently referring to your aim can help you keep focused and on track during analysis. Many find it helpful to colour code their transcriptions and write notes in the margins.

Having access to qualitative analysis software can be very helpful in organising and retrieving analysed data. Just remember, a computer does not analyse the data. As Jennings [15] has stated, “… it is ‘peopleware,’ not software, that analyses.” A major drawback is that qualitative analysis software can be prohibitively expensive. One way forward is to use table templates such as we have used in this article. (Three analysis templates, Templates A, B, and C, are provided as supplementary online material.) Additionally, the “find” function in word processing programmes such as Microsoft Word (Redmond, WA USA) facilitates locating key words, e.g., in transcribed interviews, meaning units, and codes.
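
For researchers who keep transcripts as plain text, the same kind of keyword lookup can be approximated with a few lines of Python. This is a minimal sketch, not a substitute for analysis: the function name `find_keyword`, the interview labels and the sample lines are all invented placeholders.

```python
import re


def find_keyword(transcripts, keyword):
    """Return (interview, line number, line) for each line containing the whole word."""
    pattern = re.compile(r"\b" + re.escape(keyword) + r"\b", re.IGNORECASE)
    hits = []
    for name, text in transcripts.items():
        for number, line in enumerate(text.splitlines(), start=1):
            if pattern.search(line):
                hits.append((name, number, line.strip()))
    return hits


# Invented placeholder text, not real interview data.
transcripts = {
    "interview_1": "First line.\nThe ambulance arrived.\n",
    "interview_2": "Nothing relevant here.",
}

hits = find_keyword(transcripts, "Ambulance")
print(hits)  # [('interview_1', 2, 'The ambulance arrived.')]
```

As with the word processor's "find" function, this only locates text; deciding what the located passages mean is still the researcher's work.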

Lessons learnt/key points

From our experience with content analysis we have learnt a number of important lessons that may be useful for the novice researcher. They are:

- A method description is a guideline supporting analysis and trustworthiness. Don’t get caught up too rigidly following steps. Reflexivity and flexibility are just as important. Remember that a method description is a tool helping you in the process of making sense of your data by reducing a large amount of text to distil key results.

- It is important to maintain a vigilant awareness of one’s own pre-understandings in order to avoid bias during analysis and in results.

- Use and trust your own intuition during the analysis process.

- If possible, discuss and reflect together with other researchers who have analysed the same data. Be open and receptive to new perspectives.

- Understand that it is going to take time. Even if you are quite experienced, each set of data is different and all require time to analyse. Don’t expect to have all the data analysis done over a weekend. It may take weeks. You need time to think, reflect and then review your analysis.

- Keep reminding yourself how excited you have felt about this area of research and how interesting it is. Embrace it with enthusiasm!

- Let it be chaotic – have faith that some sense will start to surface. Don’t be afraid and think you will never get to the end – you will… eventually!

Peer review under responsibility of African Federation for Emergency Medicine.

Supplementary data associated with this article can be found, in the online version, at http://dx.doi.org/10.1016/j.afjem.2017.08.001 .

Contributor Information

Christen Erlingsson, Email: [email protected].

Petra Brysiewicz, Email: [email protected].

Appendix A. Supplementary data

References

- 1. Graneheim U.H., Lundman B. Qualitative content analysis in nursing research: concepts, procedures, and measures to achieve trustworthiness. Nurse Educ Today. 2004;24:105–112. doi: 10.1016/j.nedt.2003.10.001.

- 2. Mayring P. Qualitative content analysis. Forum Qual Soc Res. 2000;1(2) http://www.qualitative-research.net/fqs/

- 3. Hsieh H.F., Shannon S. Three approaches to qualitative content analysis. Qual Health Res. 2005;15(9):1277–1288. doi: 10.1177/1049732305276687.

- 4. Schilling J. On the pragmatics of qualitative assessment: designing the process for content analysis. Eur J Psychol Assess. 2006;22(1):28–37.

- 5. Elo S., Kyngäs H. The qualitative content analysis process. J Adv Nurs. 2007;62(1):107–115. doi: 10.1111/j.1365-2648.2007.04569.x.

- 6. Burnard P., Gill P., Stewart K., Treasure E., Chadwick B. Analysing and presenting qualitative data. Brit Dent J. 2008;204(8):429–432. doi: 10.1038/sj.bdj.2008.292.

- 7. Berg B., Lune H. Qualitative research methods for the social sciences. 8th ed. Pearson Education, Inc.; Upper Saddle River, NJ: 2012.

- 8. Erlingsson C., Brysiewicz P. Orientation among multiple truths: an introduction to qualitative research. Afr J Emerg Med. 2013;3:92–99.

- 9. Krippendorff K. Content analysis: an introduction to its methodology. Sage; Thousand Oaks, CA: 2013.

- 10. Vaismoradi M., Turunen H., Bondas T. Content analysis and thematic analysis: implications for conducting a qualitative descriptive study. Nurs Health Sci. 2013;15:398–405. doi: 10.1111/nhs.12048.

- 11. Mattingly C. What is clinical reasoning? Am J Occup Ther. 1991;45(11):979–986. doi: 10.5014/ajot.45.11.979.

- 12. Henry S. Recognizing tacit knowledge in medical epistemology. Theor Med Bioeth. 2006;27:187–213. doi: 10.1007/s11017-006-9005-x.

- 13. Swanwick K. Qualitative research: the relationship of intuition and analysis. Bull Council Res Music Educ. 1994;122:57–69.

- 14. Carter N., Bryant-Lukosius D., DiCenso A., Blythe J., Neville A.J. The use of triangulation in qualitative research. Oncol Nurs Forum. 2014;41(5):545–547. doi: 10.1188/14.ONF.545-547.

- 15. Jennings B.M. Qualitative analysis: a case of software or ‘Peopleware?’. Res Nurs Health. 2007;30:483–484. doi: 10.1002/nur.20238.


Content Analysis: An Introduction to Its Methodology

- Edition: Fourth Edition

- By: Klaus Krippendorff

- Publisher: SAGE Publications, Inc.

- Publication year: 2019

- Online pub date: February 01, 2022

- Discipline: Communication and Media Studies

- Methods: Content analysis, Research questions, Sampling

- DOI: https://doi.org/10.4135/9781071878781

- Keywords: attitudes, coincidence, language, mass media, newspapers, population, software

- Print ISBN: 9781506395661

- Online ISBN: 9781071878781


What matters in people’s social lives? What motivates and inspires our society? How do we enact what we know? Since the first edition was published in 1980, Content Analysis has helped shape and define the field. In the Fourth Edition, Klaus Krippendorff introduces readers to the most current method of analyzing the textual fabric of contemporary society. Students and scholars will learn to treat data not as physical events but as communications that are created and disseminated to be seen, read, interpreted, enacted, and reflected upon according to the meanings they have for their recipients. Interpreting communications as texts in the contexts of their social uses distinguishes content analysis from other empirical methods of inquiry. Organized into three parts, Content Analysis first examines the conceptual aspects of content analysis, then discusses components such as unitizing and sampling, and concludes by showing readers how to trace the analytical paths and apply evaluative techniques. The Fourth Edition has been completely revised to offer readers the most current techniques and research on content analysis, including new information on reliability and social media. Readers will also gain practical advice and experience for teaching academic and commercial researchers how to conduct content analysis.

Available with Perusall, an eBook platform intended to make it easier to prepare for class. Perusall features social annotation tools that allow students and instructors to collaboratively mark up and discuss their SAGE textbook.

Front Matter

- Preface to the Fourth Edition

- Introduction

- History

- Conceptual Foundation

- Uses and Inferences

- The Logic of Content Analysis Designs

- Unitizing

- Sampling

- Recording/Coding

- Data Languages

- Analytical Constructs

- Analytical/Representational Techniques

- Computer Aids

- Reliability

- Validity

- A Practical Guide

Back Matter

- About the Author
