Reliability vs. Validity in Research | Difference, Types and Examples

Published on July 3, 2019 by Fiona Middleton. Revised on June 22, 2023.

Reliability and validity are concepts used to evaluate the quality of research. They indicate how well a method, technique, or test measures something. Reliability is about the consistency of a measure, and validity is about the accuracy of a measure.

It’s important to consider reliability and validity when you are creating your research design, planning your methods, and writing up your results, especially in quantitative research. Failing to do so can lead to several types of research bias and seriously affect your work.

Reliability and validity are closely related, but they mean different things. A measurement can be reliable without being valid. However, if a measurement is valid, it is usually also reliable.

What is reliability?

Reliability refers to how consistently a method measures something. If the same result can be consistently achieved by using the same methods under the same circumstances, the measurement is considered reliable.

What is validity?

Validity refers to how accurately a method measures what it is intended to measure. If research has high validity, that means it produces results that correspond to real properties, characteristics, and variations in the physical or social world.

High reliability is one indicator that a measurement is valid. If a method is not reliable, it probably isn’t valid.

If the thermometer shows different temperatures each time, even though you have carefully controlled conditions to ensure the sample’s temperature stays the same, the thermometer is probably malfunctioning, and therefore its measurements are not valid.

However, reliability on its own is not enough to ensure validity. Even if a test is reliable, it may not accurately reflect the real situation.
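To make the distinction concrete, here is a minimal sketch in Python. The readings, the true temperature, and the helper name `summarize` are all invented for illustration; the spread of repeated readings stands in for reliability, and the gap between their average and the true value stands in for accuracy.

```python
from statistics import mean, stdev

def summarize(readings, true_value):
    """Return (spread, bias) for repeated readings of one quantity.

    spread: sample standard deviation (small = consistent, i.e. reliable)
    bias:   mean reading minus the true value (near zero = accurate on average)
    """
    spread = stdev(readings)
    bias = mean(readings) - true_value
    return spread, bias

TRUE_TEMP = 25.0  # hypothetical true sample temperature, degrees Celsius

# Hypothetical repeated readings from two thermometers.
consistent_but_off = [27.1, 27.0, 26.9, 27.0, 27.1]  # reliable, but not valid
erratic = [22.0, 29.5, 24.0, 28.5, 23.0]             # not reliable

for name, readings in [("consistent_but_off", consistent_but_off),
                       ("erratic", erratic)]:
    spread, bias = summarize(readings, TRUE_TEMP)
    print(f"{name}: spread={spread:.2f}, bias={bias:+.2f}")
```

The first thermometer agrees with itself almost perfectly yet sits about two degrees too high, so it is reliable without being valid. The second scatters so widely that no single reading can be trusted, whatever its average happens to be.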

Validity is harder to assess than reliability, but it is even more important. To obtain useful results, the methods you use to collect data must be valid: the research must be measuring what it claims to measure. This ensures that your discussion of the data and the conclusions you draw are also valid.

Reliability can be estimated by comparing different versions of the same measurement. Validity is harder to assess, but it can be estimated by comparing the results to other relevant data or theory. Methods of estimating reliability and validity are usually split up into different types.

Types of reliability

Different types of reliability can be estimated through various statistical methods.

Types of validity

The validity of a measurement can be estimated based on three main types of evidence. Each type can be evaluated through expert judgement or statistical methods.

To assess the validity of a cause-and-effect relationship, you also need to consider internal validity (the design of the experiment) and external validity (the generalizability of the results).

The reliability and validity of your results depend on creating a strong research design, choosing appropriate methods and samples, and conducting the research carefully and consistently.

Ensuring validity

If you use scores or ratings to measure variations in something (such as psychological traits, levels of ability or physical properties), it’s important that your results reflect the real variations as accurately as possible. Validity should be considered in the very earliest stages of your research, when you decide how you will collect your data.

- Choose appropriate methods of measurement

Ensure that your method and measurement technique are high quality and targeted to measure exactly what you want to know. They should be thoroughly researched and based on existing knowledge.

For example, to collect data on a personality trait, you could use a standardized questionnaire that is considered reliable and valid. If you develop your own questionnaire, it should be based on established theory or findings of previous studies, and the questions should be carefully and precisely worded.

- Use appropriate sampling methods to select your subjects

To produce valid and generalizable results, clearly define the population you are researching (e.g., people from a specific age range, geographical location, or profession). Ensure that you have enough participants and that they are representative of the population. Failing to do so can lead to sampling bias and selection bias.

Ensuring reliability

Reliability should be considered throughout the data collection process. When you use a tool or technique to collect data, it’s important that the results are precise, stable, and reproducible.

- Apply your methods consistently

Plan your method carefully to make sure you carry out the same steps in the same way for each measurement. This is especially important if multiple researchers are involved.

For example, if you are conducting interviews or observations, clearly define how specific behaviors or responses will be counted, and make sure questions are phrased the same way each time. Failing to do so can lead to errors such as omitted variable bias or information bias.

- Standardize the conditions of your research

When you collect your data, keep the circumstances as consistent as possible to reduce the influence of external factors that might create variation in the results.

For example, in an experimental setup, make sure all participants are given the same information and tested under the same conditions, preferably in a properly randomized setting. Failing to do so can lead to a placebo effect, Hawthorne effect, or other demand characteristics. If participants can guess the aims or objectives of a study, they may attempt to act in more socially desirable ways.

It’s appropriate to discuss reliability and validity in various sections of your thesis, dissertation, or research paper. Showing that you have taken them into account in planning your research and interpreting the results makes your work more credible and trustworthy.

Middleton, F. (2023, June 22). Reliability vs. Validity in Research | Difference, Types and Examples. Scribbr. Retrieved April 7, 2024, from https://www.scribbr.com/methodology/reliability-vs-validity/

Validity & Reliability In Research

A Plain-Language Explanation (With Examples)

By: Derek Jansen (MBA) | Expert Reviewer: Kerryn Warren (PhD) | September 2023

Validity and reliability are two related but distinctly different concepts within research. Understanding what they are and how to achieve them is critically important to any research project. In this post, we’ll unpack these two concepts as simply as possible.

Overview: Validity & Reliability

- The big picture

- Validity 101

- Reliability 101

- Key takeaways

First, The Basics…

First, let’s start with a big-picture view and then we can zoom in to the finer details.

Validity and reliability are two incredibly important concepts in research, especially within the social sciences. Both validity and reliability have to do with the measurement of variables and/or constructs – for example, job satisfaction, intelligence, productivity, etc. When undertaking research, you’ll often want to measure these types of constructs and variables and, at the simplest level, validity and reliability are about ensuring the quality and accuracy of those measurements.

As you can probably imagine, if your measurements aren’t accurate or there are quality issues at play when you’re collecting your data, your entire study will be at risk. Therefore, validity and reliability are very important concepts to understand (and to get right). So, let’s unpack each of them.

What Is Validity?

In simple terms, validity (here, specifically “construct validity”) is all about whether a research instrument accurately measures what it’s supposed to measure.

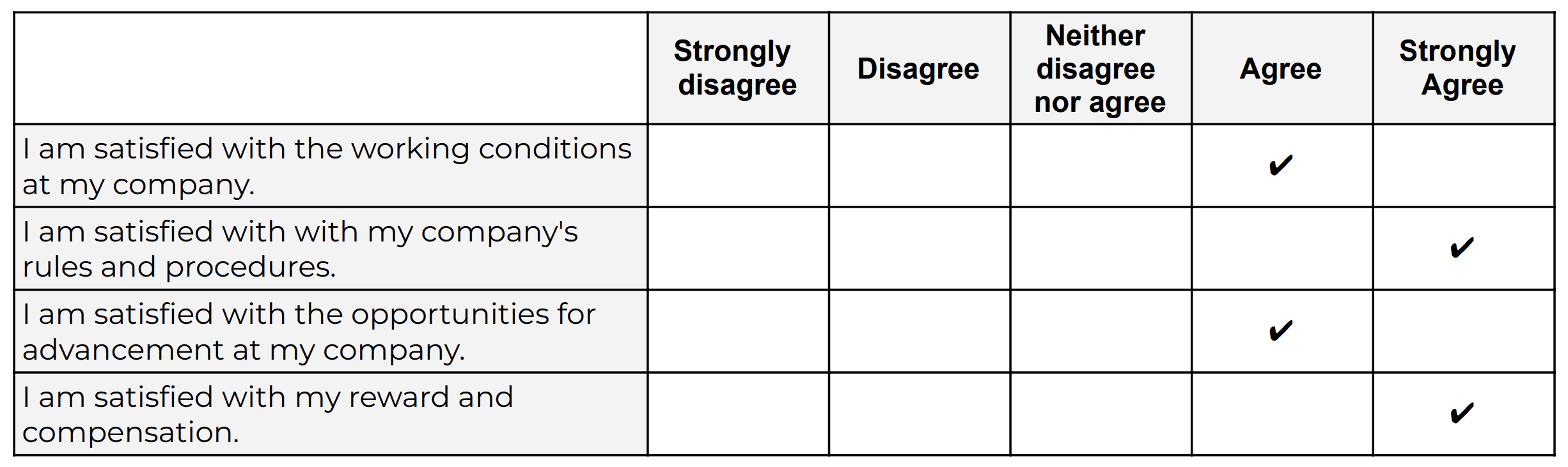

For example, let’s say you have a set of Likert scales that are supposed to quantify someone’s level of overall job satisfaction. If this set of scales focused purely on only one dimension of job satisfaction, say pay satisfaction, this would not be a valid measurement, as it only captures one aspect of the multidimensional construct. In other words, pay satisfaction alone is only one contributing factor toward overall job satisfaction, and therefore it’s not a valid way to measure someone’s job satisfaction.

Oftentimes in quantitative studies, the way in which the researcher or survey designer interprets a question or statement can differ from how the study participants interpret it. Given that respondents don’t have the opportunity to ask clarifying questions when taking a survey, it’s easy for these sorts of misunderstandings to crop up. Naturally, if the respondents are interpreting the question in the wrong way, the data they provide will be pretty useless. Therefore, ensuring that a study’s measurement instruments are valid – in other words, that they are measuring what they intend to measure – is incredibly important.

There are various types of validity and we’re not going to go down that rabbit hole in this post, but it’s worth quickly highlighting the importance of making sure that your research instrument is tightly aligned with the theoretical construct you’re trying to measure. In other words, you need to pay careful attention to how the key theories within your study define the thing you’re trying to measure – and then make sure that your survey presents it in the same way.

For example, sticking with the “job satisfaction” construct we looked at earlier, you’d need to clearly define what you mean by job satisfaction within your study (and this definition would of course need to be underpinned by the relevant theory). You’d then need to make sure that your chosen definition is reflected in the types of questions or scales you’re using in your survey. Simply put, you need to make sure that your survey respondents are perceiving your key constructs in the same way you are. Or, even if they’re not, that your measurement instrument is capturing the necessary information that reflects your definition of the construct at hand.

What Is Reliability?

As with validity, reliability is an attribute of a measurement instrument – for example, a survey, a weight scale or even a blood pressure monitor. But while validity is concerned with whether the instrument is measuring the “thing” it’s supposed to be measuring, reliability is concerned with consistency and stability. In other words, reliability reflects the degree to which a measurement instrument produces consistent results when applied repeatedly to the same phenomenon, under the same conditions.

As you can probably imagine, a measurement instrument that achieves a high level of consistency is naturally more dependable (or reliable) than one that doesn’t – in other words, it can be trusted to provide consistent measurements . And that, of course, is what you want when undertaking empirical research. If you think about it within a more domestic context, just imagine if you found that your bathroom scale gave you a different number every time you hopped on and off of it – you wouldn’t feel too confident in its ability to measure the variable that is your body weight 🙂

It’s worth mentioning that reliability also extends to the person using the measurement instrument. For example, if two researchers use the same instrument (let’s say a measuring tape) and they get different measurements, there’s likely an issue in terms of how one (or both) of them are using the measuring tape. So, when you think about reliability, consider both the instrument and the researcher as part of the equation.

As with validity, there are various types of reliability and various tests that can be used to assess the reliability of an instrument. A popular one that you’ll likely come across for survey instruments is Cronbach’s alpha, which is a statistical measure that quantifies the degree to which items within an instrument (for example, a set of Likert scales) measure the same underlying construct. In other words, Cronbach’s alpha indicates how closely related the items are and whether they consistently capture the same concept.
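If you’d like to see what sits behind that number, here is a rough sketch of the standard Cronbach’s alpha formula, alpha = k/(k-1) × (1 − sum of item variances / variance of total scores), where k is the number of items. The function name `cronbach_alpha` and the response data are made up for illustration; in a real project you would more likely rely on a statistics package than on hand-rolled code.

```python
from statistics import variance

def cronbach_alpha(scores):
    """Cronbach's alpha for a list of respondents' item scores.

    scores: one row per respondent, one column per item,
            e.g. Likert responses coded 1-5.
    """
    k = len(scores[0])  # number of items
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Sample variance of each item across respondents.
    item_variances = [variance([row[i] for row in scores]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in scores])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)

# Hypothetical data: 5 respondents answering a 4-item scale.
responses = [
    [4, 5, 4, 5],
    [2, 2, 3, 2],
    [5, 4, 5, 5],
    [3, 3, 3, 4],
    [1, 2, 1, 2],
]

print(f"alpha = {cronbach_alpha(responses):.3f}")
```

Values closer to 1 suggest that the items move together. Keep in mind that a high alpha speaks to consistency only; it says nothing on its own about whether the items measure the right construct.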

Recap: Key Takeaways

Alright, let’s quickly recap to cement your understanding of validity and reliability:

- Validity is concerned with whether an instrument (e.g., a set of Likert scales) is measuring what it’s supposed to measure.

- Reliability is concerned with whether that measurement is consistent and stable when measuring the same phenomenon under the same conditions.

In short, validity and reliability are both essential to ensuring that your data collection efforts deliver high-quality, accurate data that help you answer your research questions. So, be sure to always pay careful attention to the validity and reliability of your measurement instruments when collecting and analysing data. As the adage goes, “rubbish in, rubbish out” – make sure that your data inputs are rock-solid.

How to Determine the Validity and Reliability of an Instrument By: Yue Li

Validity and reliability are two important factors to consider when developing and testing any instrument (e.g., content assessment test, questionnaire) for use in a study. Attention to these considerations helps to ensure the quality of your measurement and of the data collected for your study.

Understanding and Testing Validity

Validity refers to the degree to which an instrument accurately measures what it intends to measure. Three common types of validity for researchers and evaluators to consider are content, construct, and criterion validities.

- Content validity indicates the extent to which items adequately measure or represent the content of the property or trait that the researcher wishes to measure. Subject matter expert review is often a good first step in instrument development to assess content validity, in relation to the area or field you are studying.

- Construct validity indicates the extent to which a measurement method accurately represents a construct (e.g., a latent variable or phenomenon that can’t be measured directly, such as a person’s attitude or belief) and produces an observation distinct from that which is produced by a measure of another construct. Common methods to assess construct validity include, but are not limited to, factor analysis, correlation tests, and item response theory models (including the Rasch model).

- Criterion-related validity indicates the extent to which the instrument’s scores correlate with an external criterion (i.e., usually another measurement from a different instrument) either at present (concurrent validity) or in the future (predictive validity). A common measurement of this type of validity is the correlation coefficient between two measures.
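As an illustration of the correlation coefficient mentioned for criterion-related validity, the sketch below computes Pearson’s r between scores on a new instrument and scores on an established one. The helper `pearson_r` and both score lists are hypothetical; this is a minimal example under those assumptions, not a prescribed procedure.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two paired score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long lists with at least 2 values")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    # Sum of cross-products of deviations from the means.
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    # Square roots of the sums of squared deviations.
    dev_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    dev_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cross / (dev_x * dev_y)

# Hypothetical scores for the same six participants.
new_instrument = [12, 15, 9, 20, 17, 11]
established_instrument = [30, 36, 25, 44, 40, 27]

r = pearson_r(new_instrument, established_instrument)
print(f"concurrent validity estimate: r = {r:.2f}")
```

The same coefficient can be applied to scores from two administrations of one instrument, in which case it serves as an estimate of stability over time rather than of criterion-related validity.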

Often, when developing, modifying, and interpreting the validity of a given instrument, researchers and evaluators do not view or test each type of validity individually. Instead, they test for evidence of several different forms of validity collectively (e.g., see Samuel Messick’s work regarding validity).

Understanding and Testing Reliability

Reliability refers to the degree to which an instrument yields consistent results. Common measures of reliability include internal consistency, test-retest, and inter-rater reliabilities.

- Internal consistency reliability looks at the consistency of the score of individual items on an instrument, with the scores of a set of items, or subscale, which typically consists of several items to measure a single construct. Cronbach’s alpha is one of the most common methods for checking internal consistency reliability. Group variability, score reliability, number of items, sample sizes, and difficulty level of the instrument also can impact the Cronbach’s alpha value.

- Test-retest reliability measures the correlation between scores from one administration of an instrument to another, usually within an interval of 2 to 3 weeks. Unlike pre-post tests, no treatment occurs between the first and second administrations of the instrument, so that test-retest reliability can be assessed. A similar type of reliability, called alternate forms, involves using slightly different forms or versions of an instrument to see if different versions yield consistent results.

- Inter-rater reliability checks the degree of agreement among raters (i.e., those completing items on an instrument). Common situations where more than one rater is involved may occur when more than one person conducts classroom observations, uses an observation protocol or scores an open-ended test, using a rubric or other standard protocol. Kappa statistics, correlation coefficients, and intra-class correlation (ICC) coefficient are some of the commonly reported measures of inter-rater reliability.
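To show how one of the kappa statistics mentioned above works, here is a small sketch of Cohen’s kappa for two raters assigning categorical codes. The function name `cohen_kappa` and the classroom-observation codes are assumptions made for this example. Kappa compares the observed agreement with the agreement expected by chance, given how often each rater uses each category.

```python
from collections import Counter

def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who each coded the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must code the same non-empty item set")
    n = len(rater_a)
    # Proportion of items on which the two raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal category frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / n ** 2
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)

# Hypothetical codes from two observers rating ten classroom intervals.
observer_1 = ["on", "off", "on", "on", "off", "on", "off", "off", "on", "on"]
observer_2 = ["on", "off", "on", "off", "off", "on", "off", "on", "on", "on"]

print(f"kappa = {cohen_kappa(observer_1, observer_2):.2f}")
```

In this made-up data set the observers agree on 8 of 10 intervals, but kappa is noticeably lower than 0.8 because a fair share of that agreement would be expected by chance alone.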

Developing a valid and reliable instrument usually requires multiple iterations of piloting and testing, which can be resource intensive. Therefore, when available, I suggest using already established valid and reliable instruments, such as those published in peer-reviewed journal articles. However, even when using these instruments, you should re-check validity and reliability, using the methods of your study and your own participants’ data, before running additional statistical analyses. This process will confirm that the instrument performs as intended in your study with the population you are studying, even when your purpose and population are identical to those for which the instrument was initially developed. Below are a few additional, useful readings to further inform your understanding of validity and reliability.

Resources for Understanding and Testing Reliability

- American Educational Research Association, American Psychological Association, & National Council on Measurement in Education. (1985). Standards for educational and psychological testing. Washington, DC: Authors.

- Bond, T. G., & Fox, C. M. (2001). Applying the Rasch model: Fundamental measurement in the human sciences. Mahwah, NJ: Lawrence Erlbaum.

- Cronbach, L. (1990). Essentials of psychological testing. New York, NY: Harper & Row.

- Carmines, E., & Zeller, R. (1979). Reliability and validity assessment. Beverly Hills, CA: Sage Publications.

- Messick, S. (1987). Validity. ETS Research Report Series, 1987: i–208. doi: 10.1002/j.2330-8516.1987.tb00244.x

- Liu, X. (2010). Using and developing measurement instruments in science education: A Rasch modeling approach. Charlotte, NC: Information Age.

Uncomplicated Reviews of Educational Research Methods

- Instrument, Validity, Reliability

Part I: The Instrument

Instrument is the general term that researchers use for a measurement device (survey, test, questionnaire, etc.). To help distinguish between instrument and instrumentation, consider that the instrument is the device and instrumentation is the course of action (the process of developing, testing, and using the device).

Instruments fall into two broad categories, researcher-completed and subject-completed, distinguished by those instruments that researchers administer versus those that are completed by participants. Researchers choose which type of instrument, or instruments, to use based on the research question. Examples are listed below:

Usability refers to the ease with which an instrument can be administered, interpreted by the participant, and scored/interpreted by the researcher. Example usability problems include:

- Students are asked to rate a lesson immediately after class, but there are only a few minutes before the next class begins (problem with administration).

- Students are asked to keep self-checklists of their after school activities, but the directions are complicated and the item descriptions confusing (problem with interpretation).

- Teachers are asked about their attitudes regarding school policy, but some questions are worded poorly which results in low completion rates (problem with scoring/interpretation).

Validity and reliability concerns (discussed below) will help alleviate usability issues. For now, we can identify five usability considerations:

- How long will it take to administer?

- Are the directions clear?

- How easy is it to score?

- Do equivalent forms exist?

- Have any problems been reported by others who used it?

It is best to use an existing instrument, one that has been developed and tested numerous times, such as can be found in the Mental Measurements Yearbook. We will turn to why next.

Part II: Validity

Validity is the extent to which an instrument measures what it is supposed to measure and performs as it is designed to perform. It is rare, if not nearly impossible, for an instrument to be 100% valid, so validity is generally measured in degrees. As a process, validation involves collecting and analyzing data to assess the accuracy of an instrument. There are numerous statistical tests and measures to assess the validity of quantitative instruments, which generally involve pilot testing. The remainder of this discussion focuses on external validity and content validity.

External validity is the extent to which the results of a study can be generalized from a sample to a population. Establishing external validity for an instrument, then, follows directly from sampling. Recall that a sample should be an accurate representation of a population, because the total population may not be available. An instrument that is externally valid helps obtain population generalizability, or the degree to which a sample represents the population.

Content validity refers to the appropriateness of the content of an instrument. In other words, do the measures (questions, observation logs, etc.) accurately assess what you want to know? This is particularly important with achievement tests. Consider that a test developer wants to maximize the validity of a unit test for 7th grade mathematics. This would involve taking representative questions from each of the sections of the unit and evaluating them against the desired outcomes.

Part III: Reliability

Reliability can be thought of as consistency. Does the instrument consistently measure what it is intended to measure? Reliability cannot be calculated exactly; it can only be estimated. There are four general estimators that you may encounter in reading research:

- Inter-Rater/Observer Reliability: The degree to which different raters/observers give consistent answers or estimates.

- Test-Retest Reliability: The consistency of a measure evaluated over time.

- Parallel-Forms Reliability: The reliability of two tests constructed the same way, from the same content.

- Internal Consistency Reliability: The consistency of results across items, often measured with Cronbach’s Alpha.

Relating Reliability and Validity

Reliability is directly related to the validity of the measure. There are several important principles. First, a test can be considered reliable, but not valid. Consider the SAT, used as a predictor of success in college. It is a reliable test (students who retake it tend to earn similar scores), though only a moderately valid indicator of success (college lacks the structured environment of class attendance, parent-regulated study, and sleeping habits, each of which is holistically related to success).

Second, validity is more important than reliability. Using the above example, college admissions may consider the SAT a reliable test, but not necessarily a valid measure of other quantities colleges seek, such as leadership capability, altruism, and civic involvement. The combination of these aspects, alongside the SAT, is a more valid measure of the applicant’s potential for graduation, later social involvement, and generosity (alumni giving) toward the alma mater.

Finally, the most useful instrument is both valid and reliable. Proponents of the SAT argue that it is both. It is a moderately reliable predictor of future success and a moderately valid measure of a student’s knowledge in Mathematics, Critical Reading, and Writing.

Part IV: Validity and Reliability in Qualitative Research

Thus far, we have discussed instrumentation as related to mostly quantitative measurement. Establishing validity and reliability in qualitative research can be less precise, though participant/member checks, peer evaluation (another researcher checks the researcher’s inferences based on the instrument; Denzin & Lincoln, 2005), and multiple methods (keyword: triangulation) are convincingly used. Some qualitative researchers reject the concept of validity due to the constructivist viewpoint that reality is unique to the individual and cannot be generalized. These researchers argue for a different standard for judging research quality. For a more complete discussion of trustworthiness, see Lincoln and Guba’s (1985) chapter.

About Research Rundowns

Research Rundowns was made possible by support from the Dewar College of Education at Valdosta State University .

Reliability and Validity – Definitions, Types & Examples

Published by Alvin Nicolas on August 16, 2021. Revised on October 26, 2023.

A researcher must test the collected data before drawing any conclusions. Every research design needs to address reliability and validity, because together they determine the quality of the research.

What is Reliability?

Reliability refers to the consistency of the measurement. Reliability shows how trustworthy the score of the test is. If the collected data shows the same results after being tested using various methods and sample groups, the information is reliable. Reliability is necessary for valid results, but it does not guarantee them.

Example: If you weigh yourself on a weighing scale several times throughout the day and get the same result each time, the results are considered reliable, as they were obtained through repeated measures.

Example: A teacher gives students a math test and repeats it the next week with the same questions. If the students get the same scores, then the reliability of the test is high.

What is Validity?

Validity refers to the accuracy of the measurement. Validity shows how suitable a specific test is for a particular situation. If the results are accurate according to the researcher’s situation, explanation, and prediction, then the research is valid.

If the method of measuring is accurate, then it’ll produce accurate results. A reliable method is not necessarily valid. In contrast, if a method is not reliable, it’s not valid.

Example: Your weighing scale shows different results each time you weigh yourself within a day even after handling it carefully, and weighing before and after meals. Your weighing machine might be malfunctioning. It means your method had low reliability. Hence you are getting inaccurate or inconsistent results that are not valid.

Example: Suppose a questionnaire is distributed among a group of people to check the quality of a skincare product, and the same questionnaire is repeated with many groups. If you get the same response from various participants, it means the questionnaire has high reliability, which is a precondition for validity but does not prove it on its own.

Most of the time, validity is difficult to measure even though the process of measurement is reliable. It isn’t easy to interpret the real situation.

Example: If the weighing scale shows the same result, let’s say 70 kg each time, even though your actual weight is 55 kg, then the weighing scale is malfunctioning. Because it shows consistent results, it is reliable, but the results are not accurate. It means the method has high reliability but low validity.

Internal Vs. External Validity

One of the key features of randomised designs is that they have significantly high internal and external validity.

Internal validity is the ability to draw a causal link between your treatment and the dependent variable of interest. It means the observed changes should be due to the experiment conducted, and any external factor should not influence the variables.

Example: external factors such as age, level, height, and grade.

External validity is the ability to identify and generalise your study outcomes to the population at large. The relationship between the study’s situation and the situations outside the study is considered external validity.

Threats to Internal Validity

Threats to External Validity

How to Assess Reliability and Validity?

Reliability can be measured by comparing the consistency of the procedure and its results. There are various methods to measure validity and reliability. Reliability can be measured through various statistical methods depending on the type of reliability, as explained below:

Types of Reliability

Types of Validity

As we discussed above, the reliability of a measurement alone cannot determine its validity. Validity is difficult to measure even if the method is reliable. The following types of tests are conducted for measuring validity.

How to Increase Reliability?

- Use an appropriate questionnaire to measure the competency level.

- Ensure a consistent environment for participants

- Make the participants familiar with the criteria of assessment.

- Train the participants appropriately.

- Analyse the research items regularly to avoid poor performance.

How to Increase Validity?

Ensuring validity is also not an easy job. Some practical steps to help ensure validity are given below:

- Minimising reactivity should be the first concern.

- The Hawthorne effect should be reduced.

- The respondents should be motivated.

- The intervals between the pre-test and post-test should not be lengthy.

- Dropout rates should be kept as low as possible.

- The inter-rater reliability should be ensured.

- Control and experimental groups should be matched with each other.

How to Implement Reliability and Validity in your Thesis?

According to experts, it is helpful to address the concepts of reliability and validity explicitly in your work. These concepts are used especially often in theses and dissertations. The method for implementation is given below:

Frequently Asked Questions

What is reliability and validity in research?

Reliability in research refers to the consistency and stability of measurements or findings. Validity relates to the accuracy and truthfulness of results, measuring what the study intends to. Both are crucial for trustworthy and credible research outcomes.

What is validity?

Validity in research refers to the extent to which a study accurately measures what it intends to measure. It ensures that the results are truly representative of the phenomena under investigation. Without validity, research findings may be irrelevant, misleading, or incorrect, limiting their applicability and credibility.

What is reliability?

Reliability in research refers to the consistency and stability of measurements over time. If a study is reliable, repeating the experiment or test under the same conditions should produce similar results. Without reliability, findings become unpredictable and lack dependability, potentially undermining the study’s credibility and generalisability.

What is reliability in psychology?

In psychology, reliability refers to the consistency of a measurement tool or test. A reliable psychological assessment produces stable and consistent results across different times, situations, or raters. It ensures that an instrument’s scores are not due to random error, making the findings dependable and reproducible in similar conditions.

What is test retest reliability?

Test-retest reliability assesses the consistency of measurements taken by a test over time. It involves administering the same test to the same participants at two different points in time and comparing the results. A high correlation between the scores indicates that the test produces stable and consistent results over time.

How to improve reliability of an experiment?

- Standardise procedures and instructions.

- Use consistent and precise measurement tools.

- Train observers or raters to reduce subjective judgments.

- Increase sample size to reduce random errors.

- Conduct pilot studies to refine methods.

- Repeat measurements or use multiple methods.

- Address potential sources of variability.

What is the difference between reliability and validity?

Reliability refers to the consistency and repeatability of measurements, ensuring results are stable over time. Validity indicates how well an instrument measures what it’s intended to measure, ensuring accuracy and relevance. While a test can be reliable without being valid, a valid test must inherently be reliable. Both are essential for credible research.

Are interviews reliable and valid?

Interviews can be both reliable and valid, but they are susceptible to biases. The reliability and validity depend on the design, structure, and execution of the interview. Structured interviews with standardised questions improve reliability. Validity is enhanced when questions accurately capture the intended construct and when interviewer biases are minimised.

Are IQ tests valid and reliable?

IQ tests are generally considered reliable, producing consistent scores over time. Their validity, however, is a subject of debate. While they effectively measure certain cognitive skills, whether they capture the entirety of “intelligence” or predict success in all life areas is contested. Cultural bias and over-reliance on tests are also concerns.

Are questionnaires reliable and valid?

Questionnaires can be both reliable and valid if well-designed. Reliability is achieved when they produce consistent results over time or across similar populations. Validity is ensured when questions accurately measure the intended construct. However, factors like poorly phrased questions, respondent bias, and lack of standardisation can compromise their reliability and validity.

Reliability Vs Validity

Reliability and validity are two important concepts in research that are used to evaluate the quality of measurement instruments or research studies.

Reliability

Reliability refers to the degree to which a measurement instrument or research study produces consistent and stable results over time, across different observers or raters, or under different conditions.

In other words, reliability is the extent to which a measurement instrument or research study produces results that are free from random error. A reliable measurement instrument or research study should produce similar results each time it is used or conducted, regardless of who is using it or conducting it.

Validity

Validity, on the other hand, refers to the degree to which a measurement instrument or research study accurately measures what it is supposed to measure or tests what it is supposed to test.

In other words, validity is the extent to which a measurement instrument or research study measures or tests what it claims to measure or test. A valid measurement instrument or research study should produce results that accurately reflect the concept or construct being measured or tested.

Difference Between Reliability Vs Validity

Here’s a comparison table that highlights the differences between reliability and validity:

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

- J Family Med Prim Care

- v.4(3); Jul-Sep 2015

Validity, reliability, and generalizability in qualitative research

Lawrence Leung

1 Department of Family Medicine, Queen's University, Kingston, Ontario, Canada

2 Centre of Studies in Primary Care, Queen's University, Kingston, Ontario, Canada

In general practice, qualitative research contributes as significantly as quantitative research, in particular regarding psycho-social aspects of patient-care, health services provision, policy setting, and health administrations. In contrast to quantitative research, qualitative research as a whole has been constantly critiqued, if not disparaged, by the lack of consensus for assessing its quality and robustness. This article illustrates with five published studies how qualitative research can impact and reshape the discipline of primary care, spiraling out from clinic-based health screening to community-based disease monitoring, evaluation of out-of-hours triage services to provincial psychiatric care pathways model and finally, national legislation of core measures for children's healthcare insurance. Fundamental concepts of validity, reliability, and generalizability as applicable to qualitative research are then addressed with an update on the current views and controversies.

Nature of Qualitative Research versus Quantitative Research

The essence of qualitative research is to make sense of and recognize patterns among words in order to build up a meaningful picture without compromising its richness and dimensionality. Like quantitative research, qualitative research aims to seek answers for questions of “how, where, when, who and why” with a perspective to build a theory or refute an existing theory. Unlike quantitative research which deals primarily with numerical data and their statistical interpretations under a reductionist, logical and strictly objective paradigm, qualitative research handles nonnumerical information and their phenomenological interpretation, which inextricably tie in with human senses and subjectivity. While human emotions and perspectives from both subjects and researchers are considered undesirable biases confounding results in quantitative research, the same elements are considered essential and inevitable, if not treasurable, in qualitative research as they invariably add extra dimensions and colors to enrich the corpus of findings. However, the issue of subjectivity and contextual ramifications has fueled incessant controversies regarding yardsticks for quality and trustworthiness of qualitative research results for healthcare.

Impact of Qualitative Research upon Primary Care

In many ways, qualitative research contributes significantly, if not more so than quantitative research, to the field of primary care at various levels. Five qualitative studies are chosen to illustrate how various methodologies of qualitative research helped in advancing primary healthcare, from novel monitoring of chronic obstructive pulmonary disease (COPD) via mobile-health technology,[ 1 ] informed decision for colorectal cancer screening,[ 2 ] triaging out-of-hours GP services,[ 3 ] evaluating care pathways for community psychiatry[ 4 ] and finally prioritization of healthcare initiatives for legislation purposes at national levels.[ 5 ] With the recent advances of information technology and mobile connecting device, self-monitoring and management of chronic diseases via tele-health technology may seem beneficial to both the patient and healthcare provider. Recruiting COPD patients who were given tele-health devices that monitored lung functions, Williams et al. [ 1 ] conducted phone interviews and analyzed their transcripts via a grounded theory approach, identified themes which enabled them to conclude that such mobile-health setup and application helped to engage patients with better adherence to treatment and overall improvement in mood. Such positive findings were in contrast to previous studies, which opined that elderly patients were often challenged by operating computer tablets,[ 6 ] or, conversing with the tele-health software.[ 7 ] To explore the content of recommendations for colorectal cancer screening given out by family physicians, Wackerbarth, et al. [ 2 ] conducted semi-structure interviews with subsequent content analysis and found that most physicians delivered information to enrich patient knowledge with little regard to patients’ true understanding, ideas, and preferences in the matter. These findings suggested room for improvement for family physicians to better engage their patients in recommending preventative care. 
Faced with various models of out-of-hours triage services for GP consultations, Egbunike et al. [ 3 ] conducted thematic analysis on semi-structured telephone interviews with patients and doctors in various urban, rural and mixed settings. They found that the efficiency of triage services remained a prime concern from both users and providers, among issues of access to doctors and unfulfilled/mismatched expectations from users, which could arouse dissatisfaction and legal implications. In UK, a care pathways model for community psychiatry had been introduced but its benefits were unclear. Khandaker et al. [ 4 ] hence conducted a qualitative study using semi-structure interviews with medical staff and other stakeholders; adopting a grounded-theory approach, major themes emerged which included improved equality of access, more focused logistics, increased work throughput and better accountability for community psychiatry provided under the care pathway model. Finally, at the US national level, Mangione-Smith et al. [ 5 ] employed a modified Delphi method to gather consensus from a panel of nominators which were recognized experts and stakeholders in their disciplines, and identified a core set of quality measures for children's healthcare under the Medicaid and Children's Health Insurance Program. These core measures were made transparent for public opinion and later passed on for full legislation, hence illustrating the impact of qualitative research upon social welfare and policy improvement.

Overall Criteria for Quality in Qualitative Research

Given the diverse genera and forms of qualitative research, there is no consensus for assessing any piece of qualitative research work. Various approaches have been suggested, the two leading schools of thoughts being the school of Dixon-Woods et al. [ 8 ] which emphasizes on methodology, and that of Lincoln et al. [ 9 ] which stresses the rigor of interpretation of results. By identifying commonalities of qualitative research, Dixon-Woods produced a checklist of questions for assessing clarity and appropriateness of the research question; the description and appropriateness for sampling, data collection and data analysis; levels of support and evidence for claims; coherence between data, interpretation and conclusions, and finally level of contribution of the paper. These criteria foster the 10 questions for the Critical Appraisal Skills Program checklist for qualitative studies.[ 10 ] However, these methodology-weighted criteria may not do justice to qualitative studies that differ in epistemological and philosophical paradigms,[ 11 , 12 ] one classic example will be positivistic versus interpretivistic.[ 13 ] Equally, without a robust methodological layout, rigorous interpretation of results advocated by Lincoln et al. [ 9 ] will not be good either. Meyrick[ 14 ] argued from a different angle and proposed fulfillment of the dual core criteria of “transparency” and “systematicity” for good quality qualitative research. In brief, every step of the research logistics (from theory formation, design of study, sampling, data acquisition and analysis to results and conclusions) has to be validated if it is transparent or systematic enough. In this manner, both the research process and results can be assured of high rigor and robustness.[ 14 ] Finally, Kitto et al. 
[ 15 ] epitomized six criteria for assessing overall quality of qualitative research: (i) Clarification and justification, (ii) procedural rigor, (iii) sample representativeness, (iv) interpretative rigor, (v) reflexive and evaluative rigor and (vi) transferability/generalizability, which also double as evaluative landmarks for manuscript review to the Medical Journal of Australia. Same for quantitative research, quality for qualitative research can be assessed in terms of validity, reliability, and generalizability.

Validity

Validity in qualitative research means “appropriateness” of the tools, processes, and data. Whether the research question is valid for the desired outcome, the choice of methodology is appropriate for answering the research question, the design is valid for the methodology, the sampling and data analysis is appropriate, and finally the results and conclusions are valid for the sample and context. In assessing validity of qualitative research, the challenge can start from the ontology and epistemology of the issue being studied, e.g. the concept of “individual” is seen differently between humanistic and positive psychologists due to differing philosophical perspectives:[ 16 ] Where humanistic psychologists believe “individual” is a product of existential awareness and social interaction, positive psychologists think the “individual” exists side-by-side with formation of any human being. Set off in different pathways, qualitative research regarding the individual's wellbeing will be concluded with varying validity. Choice of methodology must enable detection of findings/phenomena in the appropriate context for it to be valid, with due regard to culturally and contextually variable. For sampling, procedures and methods must be appropriate for the research paradigm and be distinctive between systematic,[ 17 ] purposeful[ 18 ] or theoretical (adaptive) sampling[ 19 , 20 ] where the systematic sampling has no a priori theory, purposeful sampling often has a certain aim or framework and theoretical sampling is molded by the ongoing process of data collection and theory in evolution. For data extraction and analysis, several methods were adopted to enhance validity, including 1 st tier triangulation (of researchers) and 2 nd tier triangulation (of resources and theories),[ 17 , 21 ] well-documented audit trail of materials and processes,[ 22 , 23 , 24 ] multidimensional analysis as concept- or case-orientated[ 25 , 26 ] and respondent verification.[ 21 , 27 ]

Reliability

In quantitative research, reliability refers to exact replicability of the processes and the results. In qualitative research with diverse paradigms, such definition of reliability is challenging and epistemologically counter-intuitive. Hence, the essence of reliability for qualitative research lies with consistency.[ 24 , 28 ] A margin of variability for results is tolerated in qualitative research provided the methodology and epistemological logistics consistently yield data that are ontologically similar but may differ in richness and ambience within similar dimensions. Silverman[ 29 ] proposed five approaches in enhancing the reliability of process and results: Refutational analysis, constant data comparison, comprehensive data use, inclusive of the deviant case and use of tables. As data were extracted from the original sources, researchers must verify their accuracy in terms of form and context with constant comparison,[ 27 ] either alone or with peers (a form of triangulation).[ 30 ] The scope and analysis of data included should be as comprehensive and inclusive with reference to quantitative aspects if possible.[ 30 ] Adopting the Popperian dictum of falsifiability as essence of truth and science, attempted to refute the qualitative data and analytes should be performed to assess reliability.[ 31 ]

Generalizability

Most qualitative research studies, if not all, are meant to study a specific issue or phenomenon in a certain population or ethnic group, of a focused locality in a particular context, hence generalizability of qualitative research findings is usually not an expected attribute. However, with rising trend of knowledge synthesis from qualitative research via meta-synthesis, meta-narrative or meta-ethnography, evaluation of generalizability becomes pertinent. A pragmatic approach to assessing generalizability for qualitative studies is to adopt same criteria for validity: That is, use of systematic sampling, triangulation and constant comparison, proper audit and documentation, and multi-dimensional theory.[ 17 ] However, some researchers espouse the approach of analytical generalization[ 32 ] where one judges the extent to which the findings in one study can be generalized to another under similar theoretical, and the proximal similarity model, where generalizability of one study to another is judged by similarities between the time, place, people and other social contexts.[ 33 ] Thus said, Zimmer[ 34 ] questioned the suitability of meta-synthesis in view of the basic tenets of grounded theory,[ 35 ] phenomenology[ 36 ] and ethnography.[ 37 ] He concluded that any valid meta-synthesis must retain the other two goals of theory development and higher-level abstraction while in search of generalizability, and must be executed as a third level interpretation using Gadamer's concepts of the hermeneutic circle,[ 38 , 39 ] dialogic process[ 38 ] and fusion of horizons.[ 39 ] Finally, Toye et al. [ 40 ] reported the practicality of using “conceptual clarity” and “interpretative rigor” as intuitive criteria for assessing quality in meta-ethnography, which somehow echoed Rolfe's controversial aesthetic theory of research reports.[ 41 ]

Food for Thought

Despite various measures to enhance or ensure quality of qualitative studies, some researchers opined from a purist ontological and epistemological angle that qualitative research is not a unified, but ipso facto diverse field,[ 8 ] hence any attempt to synthesize or appraise different studies under one system is impossible and conceptually wrong. Barbour argued from a philosophical angle that these special measures or “technical fixes” (like purposive sampling, multiple-coding, triangulation, and respondent validation) can never confer the rigor as conceived.[ 11 ] In extremis, Rolfe et al. opined from the field of nursing research, that any set of formal criteria used to judge the quality of qualitative research are futile and without validity, and suggested that any qualitative report should be judged by the form it is written (aesthetic) and not by the contents (epistemic).[ 41 ] Rolfe's novel view is rebutted by Porter,[ 42 ] who argued via logical premises that two of Rolfe's fundamental statements were flawed: (i) “The content of research report is determined by their forms” may not be a fact, and (ii) that research appraisal being “subject to individual judgment based on insight and experience” will mean those without sufficient experience of performing research will be unable to judge adequately – hence an elitist's principle. From a realism standpoint, Porter then proposes multiple and open approaches for validity in qualitative research that incorporate parallel perspectives[ 43 , 44 ] and diversification of meanings.[ 44 ] Any work of qualitative research, when read by the readers, is always a two-way interactive process, such that validity and quality has to be judged by the receiving end too and not by the researcher end alone.

In summary, the three gold criteria of validity, reliability and generalizability apply in principle to assess quality for both quantitative and qualitative research, what differs will be the nature and type of processes that ontologically and epistemologically distinguish between the two.

Source of Support: Nil.

Conflict of Interest: None declared.

- Volume 18, Issue 3

- Validity and reliability in quantitative studies

- Roberta Heale 1 ,

- Alison Twycross 2

- 1 School of Nursing, Laurentian University , Sudbury, Ontario , Canada

- 2 Faculty of Health and Social Care , London South Bank University , London , UK

- Correspondence to : Dr Roberta Heale, School of Nursing, Laurentian University, Ramsey Lake Road, Sudbury, Ontario, Canada P3E2C6; rheale{at}laurentian.ca

https://doi.org/10.1136/eb-2015-102129

Evidence-based practice includes, in part, implementation of the findings of well-conducted quality research studies. So being able to critique quantitative research is an important skill for nurses. Consideration must be given not only to the results of the study but also the rigour of the research. Rigour refers to the extent to which the researchers worked to enhance the quality of the studies. In quantitative research, this is achieved through measurement of the validity and reliability. 1

Types of validity

The first category is content validity. This category looks at whether the instrument adequately covers all the content that it should with respect to the variable. In other words, does the instrument cover the entire domain related to the variable, or construct, it was designed to measure? In an undergraduate nursing course with instruction about public health, an examination with content validity would cover all the content in the course with greater emphasis on the topics that had received greater coverage or more depth. A subset of content validity is face validity, where experts are asked their opinion about whether an instrument measures the concept intended.

Construct validity refers to whether you can draw inferences about test scores related to the concept being studied. For example, if a person has a high score on a survey that measures anxiety, does this person truly have a high degree of anxiety? In another example, a test of knowledge of medications that requires dosage calculations may instead be testing maths knowledge.

There are three types of evidence that can be used to demonstrate a research instrument has construct validity:

Homogeneity—meaning that the instrument measures one construct.

Convergence—this occurs when the instrument measures concepts similar to that of other instruments. Although if there are no similar instruments available this will not be possible to do.

Theory evidence—this is evident when behaviour is similar to theoretical propositions of the construct measured in the instrument. For example, when an instrument measures anxiety, one would expect to see that participants who score high on the instrument for anxiety also demonstrate symptoms of anxiety in their day-to-day lives. 2

The final measure of validity is criterion validity. A criterion is any other instrument that measures the same variable. Correlations can be conducted to determine the extent to which the different instruments measure the same variable. Criterion validity is measured in three ways:

Convergent validity—shows that an instrument is highly correlated with instruments measuring similar variables.

Divergent validity—shows that an instrument is poorly correlated to instruments that measure different variables. In this case, for example, there should be a low correlation between an instrument that measures motivation and one that measures self-efficacy.

Predictive validity—means that the instrument should have high correlations with future criteria. 2 For example, a score of high self-efficacy related to performing a task should predict the likelihood of a participant completing the task.

Reliability

Reliability relates to the consistency of a measure. A participant completing an instrument meant to measure motivation should have approximately the same responses each time the test is completed. Although it is not possible to give an exact calculation of reliability, an estimate of reliability can be achieved through different measures. The three attributes of reliability are outlined in table 2. How each attribute is tested for is described below.

Attributes of reliability

Homogeneity (internal consistency) is assessed using item-to-total correlation, split-half reliability, Kuder-Richardson coefficient and Cronbach's α. In split-half reliability, the results of a test, or instrument, are divided in half. Correlations are calculated comparing both halves. Strong correlations indicate high reliability, while weak correlations indicate the instrument may not be reliable. The Kuder-Richardson test is a more complicated version of the split-half test. In this process the average of all possible split half combinations is determined and a correlation between 0–1 is generated. This test is more accurate than the split-half test, but can only be completed on questions with two answers (eg, yes or no, 0 or 1). 3
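
As a rough illustration of split-half reliability, the sketch below splits each participant's answers into odd-numbered and even-numbered items and correlates the two half-scores. The odd/even split, the helper names and the yes/no data are assumptions made for this example. They are not taken from the article, and other ways of halving a test are equally possible.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


def split_half_r(scores):
    """Correlate odd-item and even-item half-scores (one row per participant)."""
    first_half = [sum(row[0::2]) for row in scores]   # items 1, 3, ...
    second_half = [sum(row[1::2]) for row in scores]  # items 2, 4, ...
    return pearson_r(first_half, second_half)


# Hypothetical answers: 5 participants x 4 yes/no items (1 = yes, 0 = no).
answers = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
    [1, 1, 0, 1],
]

print(round(split_half_r(answers), 3))
```

A different way of halving the same items can give a different correlation, which is the limitation that averaging over all possible splits is meant to address.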

Cronbach's α is the most commonly used test to determine the internal consistency of an instrument. In this test, the average of all correlations in every combination of split-halves is determined. Instruments with questions that have more than two responses can be used in this test. The Cronbach's α result is a number between 0 and 1. An acceptable reliability score is one that is 0.7 and higher. 1 , 3
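
For readers who want to see the calculation, here is a minimal sketch of Cronbach's α using the standard variance-based formula, α = k/(k−1) × (1 − Σ item variances / variance of total scores), where k is the number of items. This formula is the usual textbook form and is not quoted from the article. The function name and the Likert-style data are hypothetical.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of rows, one row of item scores per participant."""
    k = len(scores[0])  # number of items
    item_variances = [variance([row[i] for row in scores]) for i in range(k)]
    total_variance = variance([sum(row) for row in scores])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical responses: 5 participants x 3 items on a 1-5 scale.
responses = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 5, 5],
    [2, 2, 3],
    [4, 4, 4],
]

alpha = cronbach_alpha(responses)
print(round(alpha, 3))
print("acceptable" if alpha >= 0.7 else "below the 0.7 threshold")
```

With this made-up data the items move together closely, so α comes out well above the 0.7 threshold mentioned above.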

Stability is tested using test–retest and parallel or alternate-form reliability testing. Test–retest reliability is assessed when an instrument is given to the same participants more than once under similar circumstances. A statistical comparison is made between participant's test scores for each of the times they have completed it. This provides an indication of the reliability of the instrument. Parallel-form reliability (or alternate-form reliability) is similar to test–retest reliability except that a different form of the original instrument is given to participants in subsequent tests. The domain, or concepts being tested are the same in both versions of the instrument but the wording of items is different. 2 For an instrument to demonstrate stability there should be a high correlation between the scores each time a participant completes the test. Generally speaking, a correlation coefficient of less than 0.3 signifies a weak correlation, 0.3–0.5 is moderate and greater than 0.5 is strong. 4
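
The test-retest comparison can be sketched as a correlation between two administrations of the same instrument, labelled with the cut-offs quoted above (below 0.3 weak, 0.3 to 0.5 moderate, above 0.5 strong). The scores and the function names are invented for illustration.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


def correlation_strength(r):
    """Label a correlation using the 0.3 / 0.5 cut-offs quoted in the text."""
    size = abs(r)
    if size < 0.3:
        return "weak"
    if size <= 0.5:
        return "moderate"
    return "strong"


# Hypothetical scores for the same 5 participants at two points in time.
first_sitting = [12, 15, 9, 20, 17]
second_sitting = [13, 14, 10, 19, 18]

r = pearson_r(first_sitting, second_sitting)
print(round(r, 2), correlation_strength(r))
```

The same two functions would apply to parallel-form reliability by passing scores from the two versions of the instrument instead of two sittings.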

Equivalence is assessed through inter-rater reliability. This test includes a process for qualitatively determining the level of agreement between two or more observers. A good example of the process used in assessing inter-rater reliability is the scores of judges for a skating competition. The level of consistency across all judges in the scores given to skating participants is the measure of inter-rater reliability. An example in research is when researchers are asked to give a score for the relevancy of each item on an instrument. Consistency in their scores relates to the level of inter-rater reliability of the instrument.

Determining how rigorously the issues of reliability and validity have been addressed in a study is an essential component in the critique of research, and it influences the decision about whether to implement the study findings in nursing practice. In quantitative studies, rigour is determined through an evaluation of the validity and reliability of the tools or instruments utilised in the study. A good quality research study will provide evidence of how all these factors have been addressed. This will help you to assess the validity and reliability of the research and help you decide whether or not you should apply the findings in your area of clinical practice.

- Lobiondo-Wood G ,

- Shuttleworth M

- Laerd Statistics. Determining the correlation coefficient. 2013. https://statistics.laerd.com/premium/pc/pearson-correlation-in-spss-8.php

Twitter Follow Roberta Heale at @robertaheale and Alison Twycross at @alitwy

Competing interests None declared.

Validity and reliability of measurement instruments used in research

Affiliation.

- 1 Department of Pharmaceutical Outcomes and Policy, College of Pharmacy, University of Florida, Gainesville, FL 32610, USA.

- PMID: 19020196

- DOI: 10.2146/ajhp070364

Purpose: Issues related to the validity and reliability of measurement instruments used in research are reviewed.

Summary: Key indicators of the quality of a measuring instrument are the reliability and validity of the measures. The process of developing and validating an instrument is in large part focused on reducing error in the measurement process. Reliability estimates evaluate the stability of measures, internal consistency of measurement instruments, and interrater reliability of instrument scores. Validity is the extent to which the interpretations of the results of a test are warranted, which depends on the particular use the test is intended to serve. The responsiveness of the measure to change is of interest in many of the applications in health care where improvement in outcomes as a result of treatment is a primary goal of research. Several issues may affect the accuracy of data collected, such as those related to self-report and secondary data sources. Self-report of patients or subjects is required for many of the measurements conducted in health care, but self-reports of behavior are particularly subject to problems with social desirability biases. Data that were originally gathered for a different purpose are often used to answer a research question, which can affect the applicability to the study at hand.

Conclusion: In health care and social science research, many of the variables of interest and outcomes that are important are abstract concepts known as theoretical constructs. Using tests or instruments that are valid and reliable to measure such constructs is a crucial component of research quality.

Measuring Reliability and Validity of Evaluation Instruments

How do you know if your evaluation instrument is “good”? Or if the instrument you find on CSEdResearch.org is a decent one to use in your study?

Evaluation instruments (like surveys, questionnaires, and interview protocols) can go through their own evaluation to assess whether or not they have evidence of reliability or validity. In the Filters section on the Evaluation Instruments page, you can find a category called Assessed where you can include instruments in your search that have been previously shown to have evidence of reliability and validity. So, what do these measures mean? And, what is the difference between them?

Evaluation instruments are often designed to measure the impact of outreach activities, curriculum, and other interventions in computing education. But how do you know if these evaluation instruments actually measure what they say they are measuring? We gain confidence in these instruments by assessing evidence of their reliability and validity.

Instruments with evidence of reliability yield the same results each time they are administered. Let’s say that you created an evaluation instrument in computing education research, and you gave it to the same group of high school students four times at (nearly) the same time. If the instrument were reliable, you would expect the results of these tests to be the same, statistically speaking.

Instruments with evidence of validity are those that have been checked in one or more ways to determine whether or not the instrument measures what it is supposed to measure. So, if your instrument is designed to measure whether or not parental support of high school students taking computer science courses is positively correlated with their grades in these courses, then statistical tests and other steps can be taken to ensure that the instrument does exactly that.

Those are still very broad definitions. Let’s break it down some more. But before we do, there is one very important caveat.

Evidence of reliability and/or validity is assessed for a specific demographic in a particular setting. Using an instrument that has evidence of reliability and/or validity does not mean that the evidence applies to your usage of the instrument. It can, however, provide greater confidence than an instrument that has no evidence of validity or reliability. And, if you are able to find an instrument that has evidence of validity with a population similar to your own (e.g. Hispanic students in an urban middle school), this can provide even greater confidence.

Now, let’s take a look at what each of these terms means and how they can be measured.

Questionnaires are among the most widely used tools for collecting data, especially in social science research. The main objective of a questionnaire in research is to obtain relevant information in the most reliable and valid manner. The accuracy and consistency of a survey or questionnaire, known as validity and reliability respectively, therefore form a significant aspect of research methodology. New researchers are often unsure how to select and carry out the proper type of validity test for their research instrument (questionnaire/survey).

1. Introduction

Validity explains how well the collected data covers the actual area of investigation [ 1 ] . Validity basically means “measure what is intended to be measured” [ 2 ] .

2. Face Validity

Face validity is a subjective judgment on the operationalization of a construct. Face validity is the degree to which a measure appears to be related to a specific construct, in the judgment of non-experts such as test takers and representatives of the legal system. That is, a test has face validity if its content simply looks relevant to the person taking the test. It evaluates the appearance of the questionnaire in terms of feasibility, readability, consistency of style and formatting, and the clarity of the language used.

In other words, face validity refers to researchers’ subjective assessments of the presentation and relevance of the measuring instrument as to whether the items in the instrument appear to be relevant, reasonable, unambiguous and clear [ 3 ] .

To examine face validity, a dichotomous scale can be used with the categorical options “Yes” and “No”, which indicate a favourable and an unfavourable item respectively. A favourable item is one that is objectively structured and can be positively classified under the thematic category. The collected data are then analysed using Cohen’s Kappa Index (CKI) to determine the face validity of the instrument. DM. et al. [ 4 ] recommended a minimally acceptable Kappa of 0.60 for inter-rater agreement. Unfortunately, face validity is arguably the weakest form of validity, and many would suggest that it is not a form of validity in the strictest sense of the word.
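For two raters, Cohen's kappa compares the observed proportion of agreement with the agreement expected by chance: κ = (p_o − p_e) / (1 − p_e). The sketch below applies that formula to Yes/No ratings. The ratings and the function name are hypothetical, and the two-rater setup is an assumption, since the text does not say how many raters are involved.

```python
from collections import Counter

def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who rated the same items.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement
    and p_e is the agreement expected by chance from each rater's
    category proportions. Undefined when p_e equals 1 (both raters
    always use the same single category).
    """
    n = len(rater_a)
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    categories = set(counts_a) | set(counts_b)
    p_expected = sum((counts_a[c] / n) * (counts_b[c] / n) for c in categories)
    return (p_observed - p_expected) / (1 - p_expected)

# Hypothetical Yes/No judgements by two raters on 10 questionnaire items.
rater_a = ["Yes", "Yes", "No", "Yes", "No", "Yes", "Yes", "No", "Yes", "Yes"]
rater_b = ["Yes", "Yes", "No", "Yes", "Yes", "Yes", "Yes", "No", "No", "Yes"]

kappa = cohen_kappa(rater_a, rater_b)
print(f"kappa = {kappa:.3f}, meets 0.60 minimum: {kappa >= 0.60}")
```

In this example the raters agree on 8 of 10 items, yet kappa falls below the 0.60 minimum quoted above because much of that agreement would be expected by chance.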

3. Content Validity

Content validity is defined as “the degree to which items in an instrument reflect the content universe to which the instrument will be generalized” (Straub, Boudreau et al. [ 5 ] ). In the field of IS, it is highly recommended to assess content validity while a new instrument is being developed. In general, content validity involves evaluating a new survey instrument to ensure that it includes all the items that are essential to a particular construct domain and eliminates undesirable ones [ 6 ] . The judgemental approach to establishing content validity involves literature reviews followed by evaluation by expert judges or panels. This approach requires researchers to be present with the experts in order to facilitate validation. However, it is not always possible to have many experts on a particular research topic at one location, which limits validation of a survey instrument when experts are located in different geographical areas (Choudrie and Dwivedi [ 7 ] ). In contrast, a quantitative approach allows researchers to send content validity questionnaires to experts working at different locations, so distance is not a limitation. To assess content validity, the following steps are followed:

1. An exhaustive literature review is conducted to extract the related items.

2. A content validity survey is generated, in which each item is assessed using a three-point scale (not necessary, useful but not essential, and essential).

3. The survey is sent to experts in the same field of research.

4. The content validity ratio (CVR) is then calculated for each item using Lawshe's (1975) method [ 8 ] .

5. Items that are not significant at the critical level are eliminated. The critical level of the Lawshe method is explained in the following.
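Step 4 can be illustrated with Lawshe's ratio, commonly written as CVR = (n_e − N/2) / (N/2), where n_e is the number of experts rating an item "essential" and N is the total number of experts. The sketch below computes it for one item. The panel of ten experts and their ratings are hypothetical. The critical value used in step 5 depends on panel size and is not reproduced here.

```python
def content_validity_ratio(ratings):
    """Lawshe's CVR for one item.

    `ratings` holds one rating per expert, each being "not necessary",
    "useful but not essential" or "essential".
    CVR = (n_essential - N/2) / (N/2), ranging from -1 to +1.
    """
    n_experts = len(ratings)
    n_essential = sum(1 for rating in ratings if rating == "essential")
    half = n_experts / 2
    return (n_essential - half) / half

# Hypothetical panel: 9 of 10 experts rate the item as essential.
ratings = ["essential"] * 9 + ["useful but not essential"]
cvr = content_validity_ratio(ratings)
print(f"CVR = {cvr:.2f}")
```

A CVR of 0 means exactly half of the panel rated the item essential, and positive values mean more than half did.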

4. Construct Validity

If a relationship is causal, what are the particular cause and effect behaviours or constructs involved in the relationship? Construct validity refers to how well you translated or transformed a concept, idea, or behaviour that is a construct into a functioning and operating reality, the operationalization. Construct validity has two components: convergent and discriminant validity.

4.1 Discriminant Validity

Discriminant validity is the extent to which latent variable A discriminates from other latent variables (e.g., B, C, D). Discriminant validity means that a latent variable is able to account for more variance in the observed variables associated with it than (a) measurement error or similar external, unmeasured influences, or (b) other constructs within the conceptual framework. If this is not the case, then the validity of the individual indicators and of the construct is questionable (Fornell and Larcker [ 9 ] ). In brief, discriminant validity (or divergent validity) tests whether constructs that should have no relationship are, in fact, unrelated.

4.2 Convergent Validity

Convergent validity, a parameter often used in sociology, psychology, and other behavioural sciences, refers to the degree to which two measures of constructs that theoretically should be related are in fact related. In brief, convergent validity tests whether constructs that are expected to be related are, in fact, related.

To verify construct validity (discriminant and convergent validity), a factor analysis can be conducted using principal component analysis (PCA) with the varimax rotation method (Koh and Nam [ 9 ] , Wee and Quazi, [ 10 ] ). Items with loadings above 0.40, the minimum recommended value in research, are considered for further analysis, and items with cross-loadings above 0.40 should be deleted. The factor analysis results then satisfy the criteria of construct validity, including both discriminant validity (loading of at least 0.40, no cross-loading of items above 0.40) and convergent validity (eigenvalues of 1, loading of at least 0.40, items that load on posited constructs) (Straub et al., [ 11 ] ). There are also other methods to test convergent and discriminant validity.
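Once rotated loadings are available from whatever statistics package ran the PCA, the 0.40 screening rule above is straightforward to apply. The sketch below assumes the loadings have already been computed and does not perform the factor analysis itself. The loading values, item names and function name are hypothetical.

```python
def screen_items(loadings, threshold=0.40):
    """Split items into retained and dropped lists using the loading rule.

    `loadings` maps each item name to its rotated loadings, one per factor.
    An item is retained when its largest absolute loading is at least
    `threshold` and none of its other loadings exceeds `threshold`.
    """
    retained, dropped = [], []
    for item, values in loadings.items():
        magnitudes = sorted((abs(v) for v in values), reverse=True)
        primary, cross_loadings = magnitudes[0], magnitudes[1:]
        if primary >= threshold and all(c <= threshold for c in cross_loadings):
            retained.append(item)
        else:
            dropped.append(item)
    return retained, dropped

# Hypothetical rotated loadings for 4 items on 2 factors.
loadings = {
    "q1": [0.72, 0.10],
    "q2": [0.65, 0.45],  # cross-loads above 0.40 on the second factor
    "q3": [0.31, 0.22],  # no loading reaches 0.40
    "q4": [0.05, 0.81],
}
retained, dropped = screen_items(loadings)
print("retained:", retained)
print("dropped:", dropped)
```

The eigenvalue criterion and the check that items load on their posited constructs are separate steps that this sketch does not cover.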

5. Criterion Validity

Criterion or concrete validity is the extent to which a measure is related to an outcome. It measures how well one measure predicts an outcome for another measure. A test has this type of validity if it is useful for predicting performance or behavior in another situation (past, present, or future).