Unpacking in Python: Beyond Parallel Assignment

- Introduction

Unpacking in Python refers to an operation that assigns the values of an iterable to a tuple (or list) of variables in a single assignment statement. As a complement, the term packing applies when we collect several values in a single variable using the iterable unpacking operator, *.

Historically, Python developers have generically referred to this kind of operation as tuple unpacking . However, since this Python feature has turned out to be quite useful and popular, it's been generalized to all kinds of iterables. Nowadays, a more modern and accurate term would be iterable unpacking .

In this tutorial, we'll learn what iterable unpacking is and how we can take advantage of this Python feature to make our code more readable, maintainable, and pythonic.

Additionally, we'll cover some practical examples of how to use iterable unpacking in the context of assignment operations, for loops, function definitions, and function calls.

- Packing and Unpacking in Python

Python allows a tuple (or list ) of variables to appear on the left side of an assignment operation. Each variable in the tuple can receive one value (or more, if we use the * operator) from an iterable on the right side of the assignment.

For historical reasons, Python developers used to call this tuple unpacking. However, since this feature has been generalized to all kinds of iterables, a more accurate term is iterable unpacking, and that's what we'll call it in this tutorial.

Unpacking operations have been quite popular among Python developers because they can make our code more readable and elegant. Let's take a closer look at unpacking in Python and see how this feature can improve our code.

- Unpacking Tuples

In Python, we can put a tuple of variables on the left side of an assignment operator ( = ) and a tuple of values on the right side. The values on the right will be automatically assigned to the variables on the left according to their position in the tuple . This is commonly known as tuple unpacking in Python. Check out the following example:
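A minimal sketch of this kind of assignment, using the names a, b, and c and illustrative values:

```python
# Tuple unpacking: each value on the right goes to the variable
# in the same position on the left.
(a, b, c) = (1, 2, 3)
print(a, b, c)  # 1 2 3
```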

When we put tuples on both sides of an assignment operator, a tuple unpacking operation takes place. The values on the right are assigned to the variables on the left according to their relative position in each tuple . As you can see in the above example, a will be 1 , b will be 2 , and c will be 3 .

To create a tuple object, we don't need to use a pair of parentheses () as delimiters. This also works for tuple unpacking, so the following syntaxes are equivalent:
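The equivalent spellings can be sketched like this (the values are illustrative):

```python
# Parentheses are optional on either side of the assignment.
(a, b, c) = 1, 2, 3
a, b, c = (1, 2, 3)
a, b, c = 1, 2, 3
print(a, b, c)  # 1 2 3
```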

Since all these variations are valid Python syntax, we can use any of them, depending on the situation. Arguably, the last syntax is more commonly used when it comes to unpacking in Python.

When we are unpacking values into variables using tuple unpacking, the number of variables on the left side tuple must exactly match the number of values on the right side tuple . Otherwise, we'll get a ValueError .

For example, in the following code, we use two variables on the left and three values on the right. This will raise a ValueError telling us that there are too many values to unpack:
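A small sketch of that failure, catching the exception so the message can be inspected (the exact wording of the message can vary slightly between Python versions):

```python
try:
    a, b = 1, 2, 3  # two targets, three values
except ValueError as error:
    message = str(error)
    print("ValueError:", message)  # message mentions "too many values to unpack"
```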

Note: The only exception to this is when we use the * operator to pack several values in one variable as we'll see later on.

On the other hand, if we use more variables than values, then we'll get a ValueError but this time the message says that there are not enough values to unpack:
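The opposite case can be sketched the same way (again, the exact message text may differ between Python versions):

```python
try:
    a, b, c = 1, 2  # three targets, two values
except ValueError as error:
    message = str(error)
    print("ValueError:", message)  # message mentions "not enough values to unpack"
```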

If we use a different number of variables and values in a tuple unpacking operation, then we'll get a ValueError . That's because Python needs to unambiguously know what value goes into what variable, so it can do the assignment accordingly.

- Unpacking Iterables

The tuple unpacking feature got so popular among Python developers that the syntax was extended to work with any iterable object. The only requirement is that the iterable yields exactly one item per variable in the receiving tuple (or list ).

Check out the following examples of how iterable unpacking works in Python:
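As an illustration, here is a short sketch with a string, a list, a generator expression, and a dictionary on the right side (all names and values are made up for the example):

```python
a, b, c = "123"                           # strings yield characters
x, y, z = [1, 2, 3]                       # lists yield their items
g1, g2, g3 = (n ** 2 for n in range(3))   # generators yield lazily: 0, 1, 4

my_dict = {"one": 1, "two": 2, "three": 3}
k1, k2, k3 = my_dict                      # iterating a dict yields its keys
v1, v2, v3 = my_dict.values()             # .values() yields the values

print(a, b, c)     # 1 2 3
print(g1, g2, g3)  # 0 1 4
print(k1, k2, k3)  # one two three
```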

When it comes to unpacking in Python, we can use any iterable on the right side of the assignment operator. The left side can be filled with a tuple or with a list of variables. Check out the following example in which we use a tuple on the right side of the assignment statement:

It works the same way if we use a range() object:

Even though this is valid Python syntax, it's not commonly used in real code and may be a little bit confusing for beginner Python developers.
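Both cases, a list of targets fed from a tuple and from range(), can be sketched as follows (illustrative names and values):

```python
# A list of target variables on the left, a tuple on the right.
[a, b, c] = 1, 2, 3
print(a, b, c)  # 1 2 3

# The same with a range object as the source iterable.
[x, y, z] = range(3)
print(x, y, z)  # 0 1 2
```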

Finally, we can also use set objects in unpacking operations. However, since sets are unordered collections, the order of the assignments can be unpredictable and can lead to subtle bugs. Check out the following example:
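A minimal sketch with a set of strings. No expected output is given on purpose, because the iteration order of this set can change from one run to the next:

```python
# The three names always receive the three elements,
# but which name gets which element is not guaranteed.
a, b, c = {"a", "b", "c"}
print(a, b, c)
```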

If we use sets in unpacking operations, then the final order of the assignments can be quite different from what we want and expect. So, it's best to avoid using sets in unpacking operations unless the order of assignment isn't important to our code.

- Packing With the * Operator

The * operator is known, in this context, as the tuple (or iterable) unpacking operator . It extends the unpacking functionality to allow us to collect or pack multiple values in a single variable. In the following example, we pack a tuple of values into a single variable by using the * operator:
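For instance, a starred target named a (as in the discussion that follows) can be sketched as:

```python
# The trailing comma makes the left side a one-element tuple of targets.
*a, = 1, 2
print(a)  # [1, 2]
```

Note that the packed values always end up in a list, even though the right side is a tuple.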

For this code to work, the left side of the assignment must be a tuple (or a list ). That's why we use a trailing comma. This tuple can contain as many variables as we need. However, it can only contain one starred expression .

We can form a starred expression using the unpacking operator, *, along with a valid Python identifier, just like the *a in the above code. The rest of the variables in the left side tuple are called mandatory variables because they must be filled with concrete values; otherwise, we'll get an error. Here's how this works in practice.

Packing the trailing values in b :

Packing the starting values in a :

Packing one value in a because b and c are mandatory:

Packing no values in a ( a defaults to [] ) because b , c , and d are mandatory:

Supplying no value for a mandatory variable ( e ), so an error occurs:
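The five cases above can be sketched together as follows. The snapshot variables (trailing, leading, and so on) are only there to keep each result visible and are not part of the technique:

```python
a, *b = 1, 2, 3
trailing = (a, b)            # (1, [2, 3])

*a, b = 1, 2, 3
leading = (a, b)             # ([1, 2], 3)

*a, b, c = 1, 2, 3
one_value = (a, b, c)        # ([1], 2, 3)

*a, b, c, d = 1, 2, 3
no_values = (a, b, c, d)     # ([], 1, 2, 3)

try:
    *a, b, c, d, e = 1, 2, 3  # four mandatory variables, only three values
except ValueError as error:
    missing = str(error)
    print("ValueError:", missing)

print(trailing, leading, one_value, no_values)
```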

Packing values in a variable with the * operator can be handy when we need to collect the elements of a generator in a single variable without using the list() function. In the following examples, we use the * operator to pack the elements of a generator expression and a range object into an individual variable:

In these examples, the * operator packs the elements in gen and ran into g and r, respectively. With this syntax, we avoid the need to call list() to create a list of values from a range object, a generator expression, or a generator function.
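A sketch of both cases, using the names gen, ran, g, and r (the generator expression itself is an illustrative choice):

```python
gen = (2 ** x for x in range(10))
*g, = gen
print(g)  # [1, 2, 4, 8, 16, 32, 64, 128, 256, 512]

ran = range(10)
*r, = ran
print(r)  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
```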

Notice that we can't use the unpacking operator, * , to pack multiple values into one variable without adding a trailing comma to the variable on the left side of the assignment. So, the following code won't work:
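Because this is a syntax error, it is raised before any code runs. The sketch below therefore compiles the offending line from a string so that the error can be caught and shown:

```python
source = "*r = range(10)"  # no trailing comma after the starred target

try:
    compile(source, "<example>", "exec")
except SyntaxError as error:
    rejected = True
    print("SyntaxError:", error.msg)
else:
    rejected = False

# With the trailing comma, the assignment is accepted.
*r, = range(10)
print(r)  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
```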

If we try to use the * operator to pack several values into a single variable, then we need to use the singleton tuple syntax. For example, to make the above example work, we just need to add a comma after the variable r, like in *r, = range(10).

- Using Packing and Unpacking in Practice

Packing and unpacking operations can be quite useful in practice. They can make your code clear, readable, and pythonic. Let's take a look at some common use-cases of packing and unpacking in Python.

- Assigning in Parallel

One of the most common use-cases of unpacking in Python is what we can call parallel assignment . Parallel assignment allows you to assign the values in an iterable to a tuple (or list ) of variables in a single and elegant statement.

For example, let's suppose we have a database about the employees in our company and we need to assign each item in the list to a descriptive variable. If we ignore how iterable unpacking works in Python, we may find ourselves writing code like this:
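A sketch of the index-based approach, assuming a hypothetical employee record with a name, an age, and a job title:

```python
# Hypothetical record; the fields and values are made up for illustration.
employee = ["John Doe", 40, "Software Engineer"]

name = employee[0]
age = employee[1]
job = employee[2]

print(name, age, job)  # John Doe 40 Software Engineer
```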

Even though this code works, the index handling can be clumsy, hard to type, and confusing. A cleaner, more readable, and pythonic solution can be coded as follows:
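With unpacking, the same assignment for a hypothetical employee record might look like this:

```python
# Hypothetical record; the fields and values are made up for illustration.
employee = ["John Doe", 40, "Software Engineer"]

name, age, job = employee

print(name, age, job)  # John Doe 40 Software Engineer
```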

Using unpacking in Python, we can solve the problem of the previous example with a single, straightforward, and elegant statement. This tiny change would make our code easier to read and understand for newcomer developers.

- Swapping Values Between Variables

Another elegant application of unpacking in Python is swapping values between variables without using a temporary or auxiliary variable. For example, let's suppose we need to swap the values of two variables a and b . To do this, we can stick to the traditional solution and use a temporary variable to store the value to be swapped as follows:
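The traditional approach can be sketched like this (the starting values are illustrative):

```python
a = 100
b = 200

temp = a  # keep the old value of a
a = b
b = temp

print(a, b)  # 200 100
```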

This procedure takes three steps and a new temporary variable. If we use unpacking in Python, then we can achieve the same result in a single and concise step:
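A sketch of the one-step swap, with the same illustrative starting values:

```python
a = 100
b = 200

# The right side is evaluated first, then unpacked into a and b.
a, b = b, a

print(a, b)  # 200 100
```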

In statement a, b = b, a , we're reassigning a to b and b to a in one line of code. This is a lot more readable and straightforward. Also, notice that with this technique, there is no need for a new temporary variable.

- Collecting Multiple Values With *

When we're working with some algorithms, there may be situations in which we need to split the values of an iterable or a sequence into chunks of values for further processing. The following example shows how to use a list and slicing operations to do so:
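A sketch of the slicing approach, assuming a four-element list named seq and the names first, body, and last:

```python
seq = [1, 2, 3, 4]

# The slice bounds are tied to the current length of seq.
first, body, last = seq[0], seq[1:3], seq[-1]

print(first, body, last)  # 1 [2, 3] 4
```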

Even though this code works as we expect, dealing with indices and slices can be a little bit annoying, difficult to read, and confusing for beginners. It has also the drawback of making the code rigid and difficult to maintain. In this situation, the iterable unpacking operator, * , and its ability to pack several values in a single variable can be a great tool. Check out this refactoring of the above code:
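A sketch of the refactored version, assuming the same four-element seq:

```python
seq = [1, 2, 3, 4]

# The starred target collects whatever lies between the first and last items.
first, *body, last = seq

print(first, body, last)  # 1 [2, 3] 4
```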

The line first, *body, last = seq does the magic here. The iterable unpacking operator, *, collects the elements in the middle of seq in body. This makes our code more readable, maintainable, and flexible. You may be thinking, why more flexible? Well, suppose that seq changes its length down the road and you still need to collect the middle elements in body. In this case, since we're using unpacking in Python, no changes are needed for our code to work. Check out this example:
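For instance, with a longer (illustrative) seq the same line keeps working unchanged:

```python
seq = [1, 2, 3, 4, 5, 6]

first, *body, last = seq

print(first, body, last)  # 1 [2, 3, 4, 5] 6
```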

If we were using sequence slicing instead of iterable unpacking in Python, then we would need to update our indices and slices to correctly catch the new values.

The use of the * operator to pack several values in a single variable can be applied in a variety of configurations, provided that Python can unambiguously determine what element (or elements) to assign to each variable. Take a look at the following examples:
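A few such configurations, sketched with range(5) as an illustrative source and made-up target names:

```python
*head, a, b = range(5)
print(head, a, b)  # [0, 1, 2] 3 4

x, *body, y = range(5)
print(x, body, y)  # 0 [1, 2, 3] 4

m, n, *tail = range(5)
print(m, n, tail)  # 0 1 [2, 3, 4]
```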

We can move the * operator in the tuple (or list) of variables to collect the values according to our needs. The only condition is that Python can determine which variable to assign each value to.

It's important to note that we can't use more than one starred expression in the assignment. If we do so, then we'll get a SyntaxError as follows:

If we use two or more * in an assignment statement, then we'll get a SyntaxError telling us that more than one starred expression was found. This happens because Python can't unambiguously determine what value (or values) we want to assign to each variable.
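Since the error is raised at compile time, the sketch below compiles the statement from a string so that the SyntaxError can be caught and printed (the exact message depends on the Python version):

```python
source = "*a, *b = range(5)"  # two starred targets in one assignment

try:
    compile(source, "<example>", "exec")
except SyntaxError as error:
    rejected = True
    print("SyntaxError:", error.msg)
else:
    rejected = False
```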

- Dropping Unneeded Values With *

Another common use-case of the * operator is to use it with a dummy variable name to drop some useless or unneeded values. Check out the following example:

For a more insightful example of this use-case, suppose we're developing a script that needs to determine the Python version we're using. To do this, we can use the sys.version_info attribute . This attribute returns a tuple containing the five components of the version number: major , minor , micro , releaselevel , and serial . But we just need major , minor , and micro for our script to work, so we can drop the rest. Here's an example:

Now, we have three new variables with the information we need. The rest of the information is stored in the dummy variable _, which can be ignored by our program. This can make clear to newcomer developers that we don't want to (or need to) use the information stored in _ because this character has no apparent meaning.

Note: In an interactive session, the Python interpreter uses the underscore character _ to store the result of the last evaluated expression. So, in that context, using this character to identify dummy variables can be ambiguous.

- Returning Tuples in Functions

Python functions can return several values separated by commas. Since we can define tuple objects without using parentheses, this kind of operation can be interpreted as returning a tuple of values. If we code a function that returns multiple values, then we can perform iterable packing and unpacking operations with the returned values.

Check out the following example in which we define a function to calculate the square and cube of a given number:
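A sketch of such a function; the name powers is an assumption made for this example:

```python
def powers(number):
    """Return the square and the cube of number as a tuple."""
    return number ** 2, number ** 3

result = powers(2)        # the whole tuple
square, cube = powers(2)  # unpacked into two names
*packed, = powers(2)      # packed into a list

print(result)        # (4, 8)
print(square, cube)  # 4 8
print(packed)        # [4, 8]
```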

If we define a function that returns comma-separated values, then we can do any packing or unpacking operation on these values.

- Merging Iterables With the * Operator

Another interesting use-case for the unpacking operator, * , is the ability to merge several iterables into a final sequence. This functionality works for lists, tuples, and sets. Take a look at the following examples:
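A sketch with the names my_tuple, my_list, my_set, and my_str; the values are illustrative:

```python
my_tuple = (1, 2, 3)
my_list = [1, 2, 3]
my_set = {1, 2, 3}
my_str = "123"

merged_tuple = (0, *my_tuple, 4)
merged_list = [0, *my_list, 4]
merged_set = {0, *my_set, 4}

# Several iterables merged into one list in a single display.
combined = [*my_set, *my_list, *my_tuple, *range(1, 4), *my_str]

print(merged_tuple)  # (0, 1, 2, 3, 4)
print(merged_list)   # [0, 1, 2, 3, 4]
print(combined)
```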

We can use the iterable unpacking operator, * , when defining sequences to unpack the elements of a subsequence (or iterable) into the final sequence. This will allow us to create sequences on the fly from other existing sequences without calling methods like append() , insert() , and so on.

The last two examples show that this is also a more readable and efficient way to concatenate iterables. Instead of writing list(my_set) + my_list + list(my_tuple) + list(range(1, 4)) + list(my_str) we just write [*my_set, *my_list, *my_tuple, *range(1, 4), *my_str] .

- Unpacking Dictionaries With the ** Operator

In the context of unpacking in Python, the ** operator is called the dictionary unpacking operator. The use of this operator was extended by PEP 448. Now, we can use it several times in a single function call and inside dictionary displays. (PEP 448 did not add unpacking inside comprehensions.)

A basic use-case for the dictionary unpacking operator is to merge multiple dictionaries into one final dictionary with a single expression. Let's see how this works:
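A minimal sketch with two illustrative dictionaries:

```python
numbers = {"one": 1, "two": 2, "three": 3}
letters = {"a": "A", "b": "B", "c": "C"}

# Both dictionaries are unpacked into a new dictionary display.
combination = {**numbers, **letters}

print(combination)
# {'one': 1, 'two': 2, 'three': 3, 'a': 'A', 'b': 'B', 'c': 'C'}
```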

If we use the dictionary unpacking operator inside a dictionary display, then we can unpack dictionaries and combine them to create a final dictionary that includes the key-value pairs of the original dictionaries, just like we did in the above code.

An important point to note is that, if the dictionaries we're trying to merge have repeated or common keys, then the values of the right-most dictionary will override the values of the left-most dictionary. Here's an example:
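A sketch using the names letters and vowels, with illustrative contents:

```python
letters = {"a": "A", "b": "B", "c": "C"}
vowels = {"a": "a", "e": "e", "i": "i", "o": "o", "u": "u"}

# "a" appears in both; the value from vowels (the right-most) wins.
merged = {**letters, **vowels}

print(merged["a"])  # a
print(merged)
```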

Since the a key is present in both dictionaries, the value that prevails comes from vowels, which is the right-most dictionary. This happens because Python adds the key-value pairs from left to right. If, in the process, Python finds keys that already exist, then the interpreter updates those keys with the new value. That's why the value of the a key is lowercase in the above example.

- Unpacking in For-Loops

We can also use iterable unpacking in the context of for loops. When we run a for loop, the loop assigns one item of its iterable to the target variable in every iteration. If the item to be assigned is an iterable, then we can use a tuple of target variables. The loop will unpack the iterable at hand into the tuple of target variables.

As an example, let's suppose we have a file containing data about the sales of a company as follows:

From this table, we can build a list of three-element tuples. Each tuple will contain the name of the product, the price, and the sold units. With this information, we want to calculate the income of each product. To do this, we can use a for loop like this:
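A sketch of the index-based loop. The sales figures below are hypothetical stand-ins chosen for the example, and the incomes dictionary is only there to keep the results:

```python
# Hypothetical data: (product, price, sold units).
sales = [("Pencil", 0.25, 1500), ("Notebook", 1.5, 550), ("Eraser", 0.75, 1000)]

incomes = {}
for item in sales:
    incomes[item[0]] = item[1] * item[2]
    print(f"Income for {item[0]} is: {item[1] * item[2]}")
```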

This code works as expected. However, we're using indices to get access to individual elements of each tuple . This can be difficult to read and to understand by newcomer developers.

Let's take a look at an alternative implementation using unpacking in Python:
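A sketch of the unpacking version, using hypothetical sales figures and descriptive target names chosen for the example:

```python
# Hypothetical data: (product, price, sold units).
sales = [("Pencil", 0.25, 1500), ("Notebook", 1.5, 550), ("Eraser", 0.75, 1000)]

incomes = {}
for product, price, sold_units in sales:
    incomes[product] = price * sold_units
    print(f"Income for {product} is: {price * sold_units}")
```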

We're now using iterable unpacking in our for loop. This makes our code way more readable and maintainable because we're using descriptive names to identify the elements of each tuple . This tiny change will allow a newcomer developer to quickly understand the logic behind the code.

It's also possible to use the * operator in a for loop to pack several items in a single target variable:
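A minimal sketch with the target names first and rest; the input sequences are illustrative and the seen list only records what each iteration produced:

```python
seen = []
for first, *rest in [(1, 2, 3), (4, 5, 6, 7)]:
    seen.append((first, rest))
    print("First:", first)
    print("Rest:", rest)
```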

In this for loop, we're catching the first element of each sequence in first . Then the * operator catches a list of values in its target variable rest .

Finally, the structure of the target variables must agree with the structure of the iterable. Otherwise, we'll get an error. Take a look at the following example:
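A sketch of a matching and a mismatched loop over the same illustrative data:

```python
data = [((1, 2), 2), ((2, 3), 3)]

# The nested target (a, b), c mirrors the shape of each item.
matched = []
for (a, b), c in data:
    matched.append((a, b, c))
print(matched)  # [(1, 2, 2), (2, 3, 3)]

# Three flat targets do not match items that hold only two elements.
try:
    for a, b, c in data:
        print(a, b, c)
except ValueError as error:
    message = str(error)
    print("ValueError:", message)
```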

In the first loop, the structure of the target variables, (a, b), c, agrees with the structure of the items in the iterable, ((1, 2), 2). In this case, the loop works as expected. In contrast, the second loop uses a structure of target variables that doesn't agree with the structure of the items in the iterable, so the loop fails and raises a ValueError.

- Packing and Unpacking in Functions

We can also use Python's packing and unpacking features when defining and calling functions. This is a quite useful and popular use-case of packing and unpacking in Python.

In this section, we'll cover the basics of how to use packing and unpacking in Python functions either in the function definition or in the function call.

Note: For a more insightful and detailed material on these topics, check out Variable-Length Arguments in Python with *args and **kwargs .

- Defining Functions With * and **

We can use the * and ** operators in the signature of Python functions. This will allow us to call the function with a variable number of positional arguments (*), a variable number of keyword arguments (**), or both. Let's consider the following function:

The above function requires at least one argument called required. It can accept a variable number of positional and keyword arguments as well. In this case, the * operator collects or packs extra positional arguments in a tuple called args and the ** operator collects or packs extra keyword arguments in a dictionary called kwargs. Both args and kwargs are optional and automatically default to () and {}, respectively.
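A sketch of such a function. The name func and the call arguments are assumptions, and returning the three values is an addition made here so the result can be inspected:

```python
def func(required, *args, **kwargs):
    print(required)
    print(args)
    print(kwargs)
    return required, args, kwargs

only_required = func("Welcome to...")
with_extras = func("Welcome to...", 1, 2, 3, site="StackAbuse.com")
```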

Even though the names args and kwargs are widely used by the Python community, they're not a requirement for these techniques to work. The syntax just requires * or ** followed by a valid identifier. So, if you can give meaningful names to these arguments, then do it. That will certainly improve your code's readability.

- Calling Functions With * and **

When calling functions, we can also benefit from the use of the * and ** operators to unpack collections of arguments into separate positional or keyword arguments, respectively. This is the inverse of using * and ** in the signature of a function. In the signature, the operators mean collect or pack a variable number of arguments in one identifier. In the call, they mean unpack an iterable into several arguments.

Here's a basic example of how this works:
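A sketch of such a call. The function name, its parameters, and the dictionary contents are assumptions made for this example:

```python
def func(welcome, to, site):
    message = f"{welcome} {to} {site}"
    print(message)
    return message

# The list supplies the positional arguments, the dict the keyword argument.
greeting = func(*["Welcome", "to"], **{"site": "StackAbuse.com"})
```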

Here, the * operator unpacks sequences like ["Welcome", "to"] into positional arguments. Similarly, the ** operator unpacks dictionaries into arguments whose names match the keys of the unpacked dictionary.

We can also combine this technique and the one covered in the previous section to write quite flexible functions. Here's an example:
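A sketch that combines packing in the signature with unpacking in the call (the names and values are illustrative):

```python
def func(required, *args, **kwargs):
    return required, args, kwargs

# Unpacked at the call site, then packed again by the signature.
result = func("Welcome to...", *(1, 2, 3), **{"site": "StackAbuse.com"})

print(result)
# ('Welcome to...', (1, 2, 3), {'site': 'StackAbuse.com'})
```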

The use of the * and ** operators, when defining and calling Python functions, will give them extra capabilities and make them more flexible and powerful.

Iterable unpacking turns out to be a pretty useful and popular feature in Python. This feature allows us to unpack an iterable into several variables. On the other hand, packing consists of catching several values into one variable using the unpacking operator, * .

In this tutorial, we've learned how to use iterable unpacking in Python to write more readable, maintainable, and pythonic code.

With this knowledge, we are now able to use iterable unpacking in Python to solve common problems like parallel assignment and swapping values between variables. We're also able to use this Python feature in other structures like for loops, function calls, and function definitions.


Leodanis is an industrial engineer who loves Python and software development. He is a self-taught Python programmer with 5+ years of experience building desktop applications with PyQt.


© 2013- 2024 Stack Abuse. All rights reserved.


Parallel Processing in Python – A Practical Guide with Examples

- October 31, 2018

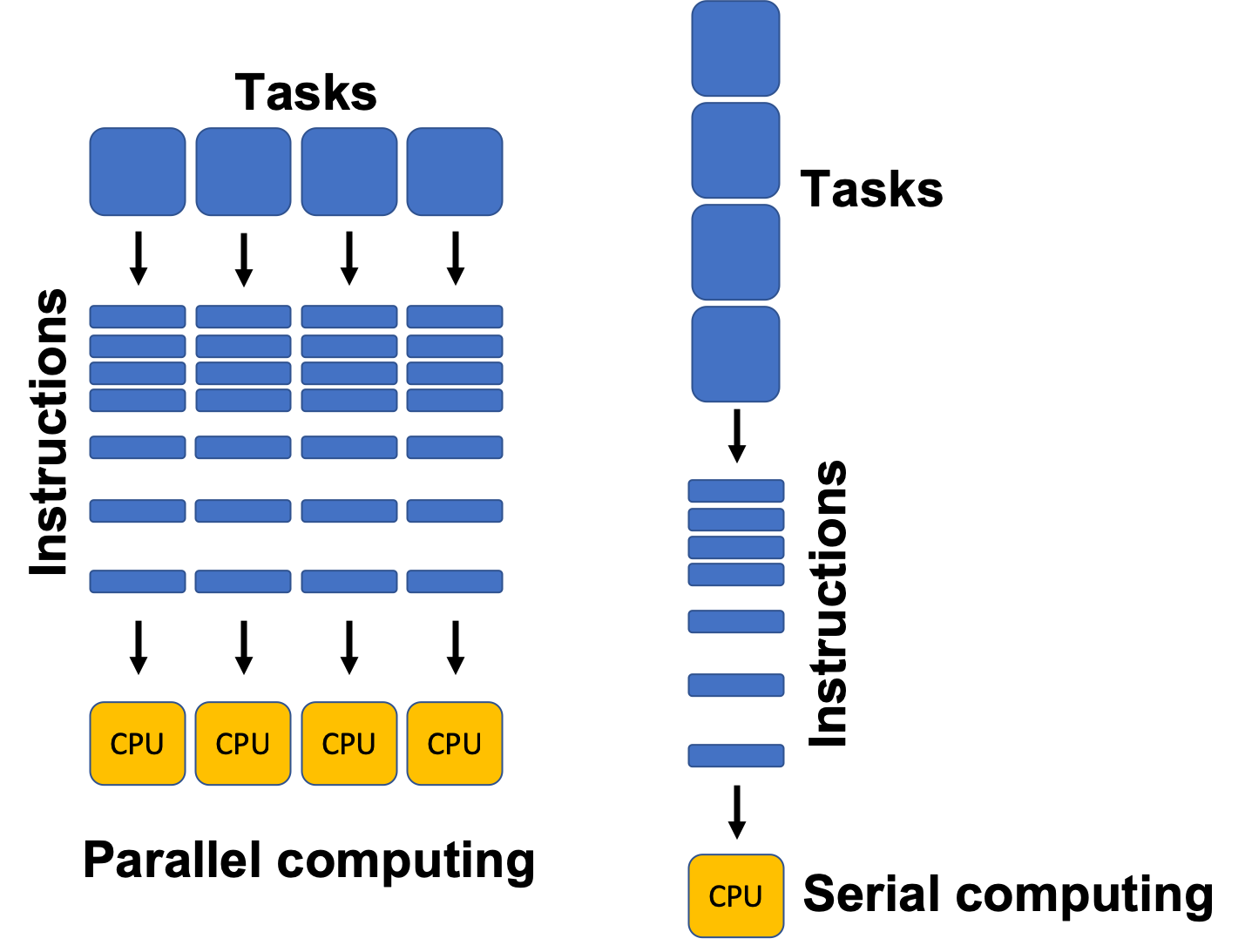

- Selva Prabhakaran

Parallel processing is a mode of operation where the task is executed simultaneously in multiple processors in the same computer. It is meant to reduce the overall processing time. In this tutorial, you’ll understand the procedure to parallelize any typical logic using python’s multiprocessing module.

1. Introduction

Parallel processing is a mode of operation where the task is executed simultaneously in multiple processors in the same computer. It is meant to reduce the overall processing time.

However, there is usually a bit of overhead when communicating between processes which can actually increase the overall time taken for small tasks instead of decreasing it.

In Python, the multiprocessing module is used to run independent parallel processes by using subprocesses (instead of threads).

It allows you to leverage multiple processors on a machine (both Windows and Unix), which means the processes run in completely separate memory locations. By the end of this tutorial you will know:

- How to structure the code and understand the syntax to enable parallel processing using multiprocessing

- How to implement synchronous and asynchronous parallel processing

- How to parallelize a Pandas DataFrame

- How to solve 3 different use cases with the multiprocessing.Pool() interface

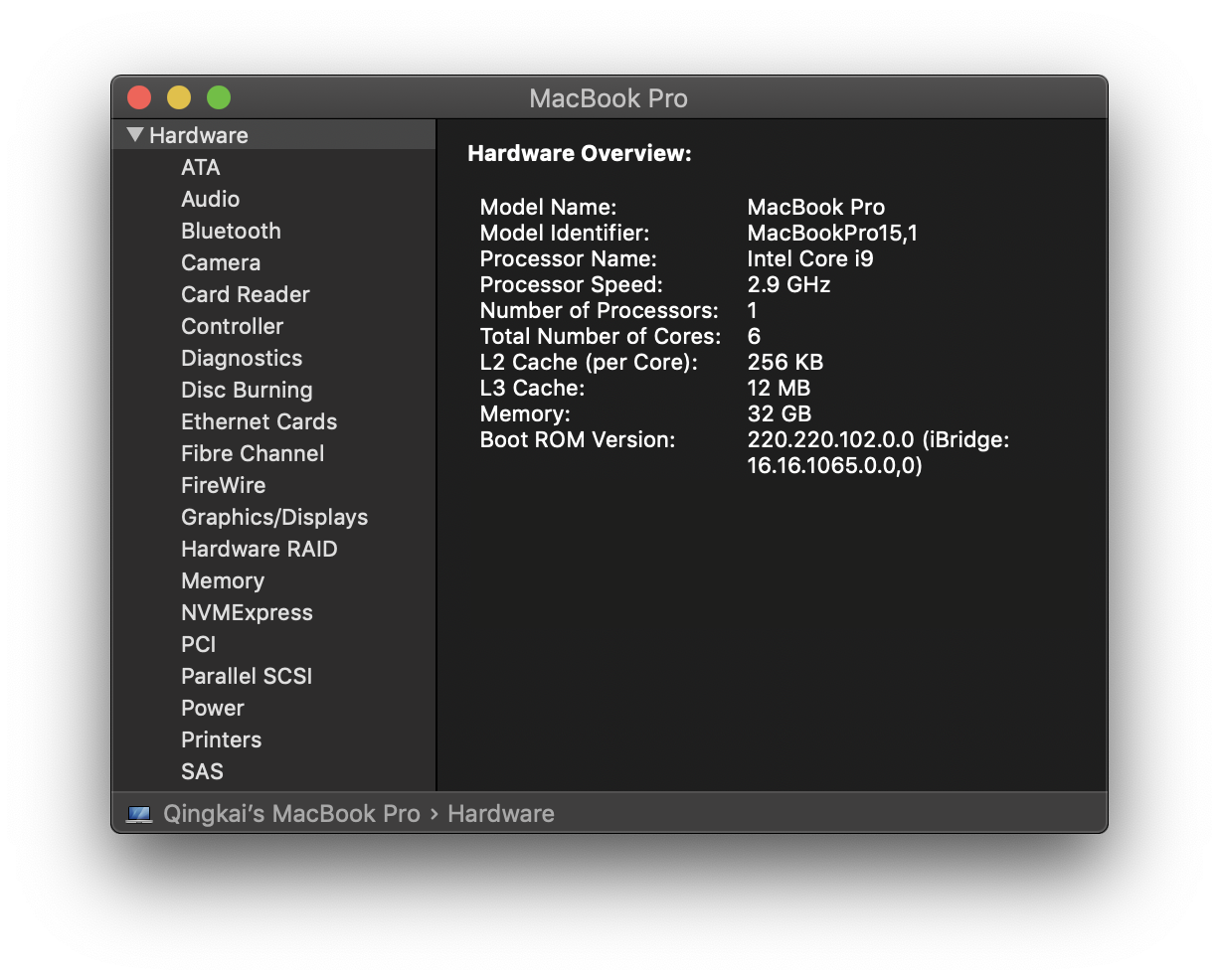

2. How many maximum parallel processes can you run?

The number of processes that can truly run at the same time is limited by the number of processors in your computer; you can start more, but they will take turns on the available cores. If you don’t know how many processors are present in the machine, the cpu_count() function in multiprocessing will show it.
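A minimal sketch; the printed number depends on the machine:

```python
import multiprocessing as mp

n_cpus = mp.cpu_count()
print("Number of processors:", n_cpus)
```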

3. What is Synchronous and Asynchronous execution?

In parallel processing, there are two types of execution: Synchronous and Asynchronous.

In synchronous execution, the processes are completed in the same order in which they were started. This is achieved by locking the main program until the respective processes are finished.

Asynchronous execution, on the other hand, doesn’t involve locking. As a result, the order of results can get mixed up, but the work usually gets done quicker.

There are 2 main objects in multiprocessing to implement parallel execution of a function: the Pool class and the Process class.

- Pool class, synchronous execution: Pool.map() and Pool.starmap(), Pool.apply()

- Pool class, asynchronous execution: Pool.map_async() and Pool.starmap_async(), Pool.apply_async()

- Process class

Let’s take up a typical problem and implement parallelization using the above techniques.

In this tutorial, we stick to the Pool class, because it is most convenient to use and serves most common practical applications.

4. Problem Statement: Count how many numbers exist between a given range in each row

The first problem is: Given a 2D matrix (or list of lists), count how many numbers are present between a given range in each row. We will work on the list prepared below.
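As a stand-in for that list, the sketch below builds a small matrix of random digits with the standard library. The size (10 rows of 5 values), the value range, and the seed are all assumptions made for illustration:

```python
import random

random.seed(100)  # fixed seed so repeated runs build the same matrix

# 10 rows, each with 5 integers between 0 and 9 (inclusive).
data = [[random.randint(0, 9) for _ in range(5)] for _ in range(10)]

for row in data[:3]:
    print(row)
```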

Solution without parallelization

Let’s see how long it takes to compute it without parallelization.

For this, we apply the function howmany_within_range() (written below) to each row; it checks how many numbers lie within the range and returns the count.
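A sketch of the serial solution. The function body, the inclusive bounds, the range 4 to 8, and the three sample rows are assumptions made for this example:

```python
def howmany_within_range(row, minimum, maximum):
    """Count the numbers in row that lie between minimum and maximum (inclusive)."""
    count = 0
    for n in row:
        if minimum <= n <= maximum:
            count += 1
    return count

# Hypothetical sample rows.
data = [[1, 5, 9, 4, 8], [0, 2, 3, 7, 6], [4, 4, 9, 1, 0]]

results = [howmany_within_range(row, minimum=4, maximum=8) for row in data]
print(results)  # [3, 2, 2]
```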

5. How to parallelize any function?

The general way to parallelize any operation is to take a particular function that should be run multiple times and make it run in parallel on different processors.

To do this, you initialize a Pool with n processors and pass the function you want to parallelize to one of Pool’s parallelization methods.

multiprocessing.Pool() provides the apply() , map() and starmap() methods to make any function run in parallel.

So what’s the difference between apply() and map() ?

Both apply and map take the function to be parallelized as the main argument.

But the difference is that apply() takes an args argument holding the parameters to pass to the ‘function-to-be-parallelized’, whereas map() takes only one iterable as an argument and calls the function once per item.

So, map() is really more suitable for simpler iterable operations, but it does the job faster.

We will get to starmap() once we see how to parallelize howmany_within_range() function with apply() and map() .

5.1. Parallelizing using Pool.apply()

Let’s parallelize the howmany_within_range() function using multiprocessing.Pool() .
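A sketch with Pool.apply(), assuming a pool of 2 worker processes, an inclusive range of 4 to 8, and three hypothetical sample rows. The pool is created under the usual main guard so that worker processes can import the module safely:

```python
import multiprocessing as mp

def howmany_within_range(row, minimum, maximum):
    """Count the numbers in row that lie between minimum and maximum (inclusive)."""
    return sum(1 for n in row if minimum <= n <= maximum)

def main():
    # Hypothetical sample rows.
    data = [[1, 5, 9, 4, 8], [0, 2, 3, 7, 6], [4, 4, 9, 1, 0]]
    with mp.Pool(processes=2) as pool:
        # apply() takes the function plus an args tuple and waits for the result.
        results = [pool.apply(howmany_within_range, args=(row, 4, 8)) for row in data]
    print(results)
    return results

if __name__ == "__main__":
    main()
```

Because apply() blocks until each call returns, the calls in this loop are issued one after another; the sketch shows the calling convention rather than a speed-up.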

5.2. Parallelizing using Pool.map()

Pool.map() accepts only one iterable as argument.

So as a workaround, I modify the howmany_within_range function by setting defaults for the minimum and maximum parameters, creating a new howmany_within_range_rowonly() function that needs only a row as input, so map() can be given the iterable of rows.

I know this is not a nice usecase of map() , but it clearly shows how it differs from apply() .
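A sketch with Pool.map(). The default bounds (4 and 8), the pool size, and the sample rows are assumptions made for this example:

```python
import multiprocessing as mp

def howmany_within_range_rowonly(row, minimum=4, maximum=8):
    """Count the numbers in row within the default range (inclusive)."""
    return sum(1 for n in row if minimum <= n <= maximum)

def main():
    # Hypothetical sample rows.
    data = [[1, 5, 9, 4, 8], [0, 2, 3, 7, 6], [4, 4, 9, 1, 0]]
    with mp.Pool(processes=2) as pool:
        # map() passes each row as the single argument of the function.
        results = pool.map(howmany_within_range_rowonly, data)
    print(results)
    return results

if __name__ == "__main__":
    main()
```

The list returned by map() follows the order of the input rows.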

5.3. Parallelizing using Pool.starmap()

In the previous example, we had to redefine the howmany_within_range function so that a couple of parameters take default values.

Using starmap() , you can avoid doing this.

How you ask?

Like Pool.map(), Pool.starmap() also accepts only one iterable as argument, but in starmap(), each element in that iterable is also an iterable.

You can provide the arguments to the ‘function-to-be-parallelized’ in the same order in this inner iterable element; they will in turn be unpacked during execution.

So effectively, Pool.starmap() is like a version of Pool.map() that accepts arguments.
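A sketch with Pool.starmap(), again assuming 2 worker processes, the range 4 to 8, and hypothetical sample rows:

```python
import multiprocessing as mp

def howmany_within_range(row, minimum, maximum):
    """Count the numbers in row that lie between minimum and maximum (inclusive)."""
    return sum(1 for n in row if minimum <= n <= maximum)

def main():
    # Hypothetical sample rows.
    data = [[1, 5, 9, 4, 8], [0, 2, 3, 7, 6], [4, 4, 9, 1, 0]]
    with mp.Pool(processes=2) as pool:
        # Each inner tuple is unpacked into (row, minimum, maximum).
        results = pool.starmap(howmany_within_range, [(row, 4, 8) for row in data])
    print(results)
    return results

if __name__ == "__main__":
    main()
```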

6. Asynchronous Parallel Processing

The asynchronous equivalents apply_async(), map_async() and starmap_async() let you execute the processes in parallel asynchronously; that is, the next process can start as soon as the previous one finishes, without regard for the starting order.

As a result, with apply_async() and a callback there is no guarantee that the results will arrive in the same order as the input. (The lists returned by map_async() and starmap_async() still follow the input order.)

6.1 Parallelizing with Pool.apply_async()

apply_async() is very similar to apply(), except that you usually provide a callback function that tells how the computed results should be stored.

However, a caveat with apply_async() is that the order of numbers in the result can get jumbled up, which indicates that the processes did not complete in the order in which they were started.

A workaround for this is to define a new howmany_within_range2() that accepts and returns the iteration number (i) as well, and then sort the final results.
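A sketch of this workaround. The callback name collect_result, the pool size, the range 4 to 8, and the sample rows are assumptions made for this example:

```python
import multiprocessing as mp

def howmany_within_range2(i, row, minimum, maximum):
    """Return (i, count) so the result can be matched to its input row."""
    count = sum(1 for n in row if minimum <= n <= maximum)
    return (i, count)

def main():
    # Hypothetical sample rows.
    data = [[1, 5, 9, 4, 8], [0, 2, 3, 7, 6], [4, 4, 9, 1, 0]]
    results = []

    def collect_result(result):
        # Runs in the parent process each time a worker finishes a task.
        results.append(result)

    pool = mp.Pool(processes=2)
    for i, row in enumerate(data):
        pool.apply_async(howmany_within_range2, args=(i, row, 4, 8), callback=collect_result)
    pool.close()
    pool.join()  # wait until every task and callback has finished

    # Restore the input order using the iteration number.
    results.sort(key=lambda pair: pair[0])
    counts = [count for _, count in results]
    print(counts)
    return counts

if __name__ == "__main__":
    main()
```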

It is possible to use apply_async() without providing a callback function.

Only that, if you don’t provide a callback, then you get a list of pool.ApplyResult objects which contain the computed output values from each process.

From this, you need to use the pool.ApplyResult.get() method to retrieve the desired final result.
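A sketch of the callback-free variant, with the same assumed function, pool size, and sample rows:

```python
import multiprocessing as mp

def howmany_within_range2(i, row, minimum, maximum):
    """Return (i, count) so the result can be matched to its input row."""
    count = sum(1 for n in row if minimum <= n <= maximum)
    return (i, count)

def main():
    # Hypothetical sample rows.
    data = [[1, 5, 9, 4, 8], [0, 2, 3, 7, 6], [4, 4, 9, 1, 0]]
    with mp.Pool(processes=2) as pool:
        # Each call returns a result object immediately.
        result_objects = [
            pool.apply_async(howmany_within_range2, args=(i, row, 4, 8))
            for i, row in enumerate(data)
        ]
        # get() waits for the value; the list keeps the submission order.
        counts = [r.get()[1] for r in result_objects]
    print(counts)
    return counts

if __name__ == "__main__":
    main()
```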

6.2 Parallelizing with Pool.starmap_async()

You saw how apply_async() works.

Can you imagine and write up an equivalent version for starmap_async and map_async ?

The implementation is below anyways.
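One possible sketch with starmap_async(), using the same assumed function, pool size, and sample rows as before:

```python
import multiprocessing as mp

def howmany_within_range2(i, row, minimum, maximum):
    """Return (i, count) so the result can be matched to its input row."""
    count = sum(1 for n in row if minimum <= n <= maximum)
    return (i, count)

def main():
    # Hypothetical sample rows.
    data = [[1, 5, 9, 4, 8], [0, 2, 3, 7, 6], [4, 4, 9, 1, 0]]
    with mp.Pool(processes=2) as pool:
        # starmap_async() returns at once; get() waits for the full list.
        async_result = pool.starmap_async(
            howmany_within_range2, [(i, row, 4, 8) for i, row in enumerate(data)]
        )
        counts = [count for _, count in async_result.get()]
    print(counts)
    return counts

if __name__ == "__main__":
    main()
```

A map_async() version would look the same, with a single-argument function and the rows themselves as the iterable.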

7. How to Parallelize a Pandas DataFrame?

So far you’ve seen how to parallelize a function by making it work on lists.

But when working in data analysis or machine learning projects, you might want to parallelize Pandas Dataframes, which are the most commonly used objects (besides numpy arrays) to store tabular data.

When it comes to parallelizing a DataFrame , you can make the function-to-be-parallelized to take as an input parameter:

- one row of the dataframe

- one column of the dataframe

- the entire dataframe itself

The first 2 can be done using the multiprocessing module itself.

But for the last one, that is parallelizing on an entire dataframe, we will use the pathos package that uses dill for serialization internally.

First, let’s create a sample dataframe and see how to do row-wise and column-wise parallelization.

Something like using DataFrame.apply() on a user-defined function, but in parallel.

We have a dataframe. Let’s apply the hypotenuse function on each row, but running 4 processes at a time.

To do this, we exploit the df.itertuples(name=False) .

By setting name=False , you are passing each row of the dataframe as a simple tuple to the hypotenuse function.

That was an example of row-wise parallelization.

Let’s also do a column-wise parallelization.

For this, I use df.iteritems() (named df.items() in current pandas; iteritems() was removed in pandas 2.0) to pass an entire column as a series to the sum_of_squares function.

Now comes the third part – Parallelizing a function that accepts a Pandas Dataframe, NumPy Array, etc. Pathos follows the multiprocessing style of: Pool > Map > Close > Join > Clear.

Check out the pathos docs for more info.

Thanks to notsoprocoder for this contribution based on pathos.

If you are familiar with pandas dataframes but want to get hands-on and master it, check out these pandas exercises .

8. Exercises

Problem 1: Use Pool.apply() to get the row wise common items in list_a and list_b .

9. Conclusion

Hope you were able to solve the above exercises, congratulations if you did! In this post, we saw the overall procedure and various ways to implement parallel processing using the multiprocessing module. The procedure described above is pretty much the same even if you work on larger machines with many more processors, where you may reap the real speed benefits of parallel processing. Happy coding and I’ll see you in the next one!


© Machinelearningplus. All rights reserved.

Machine Learning A-Z™: Hands-On Python & R In Data Science

Free sample videos:.

A Guide to Python Multiprocessing and Parallel Programming

Share this article

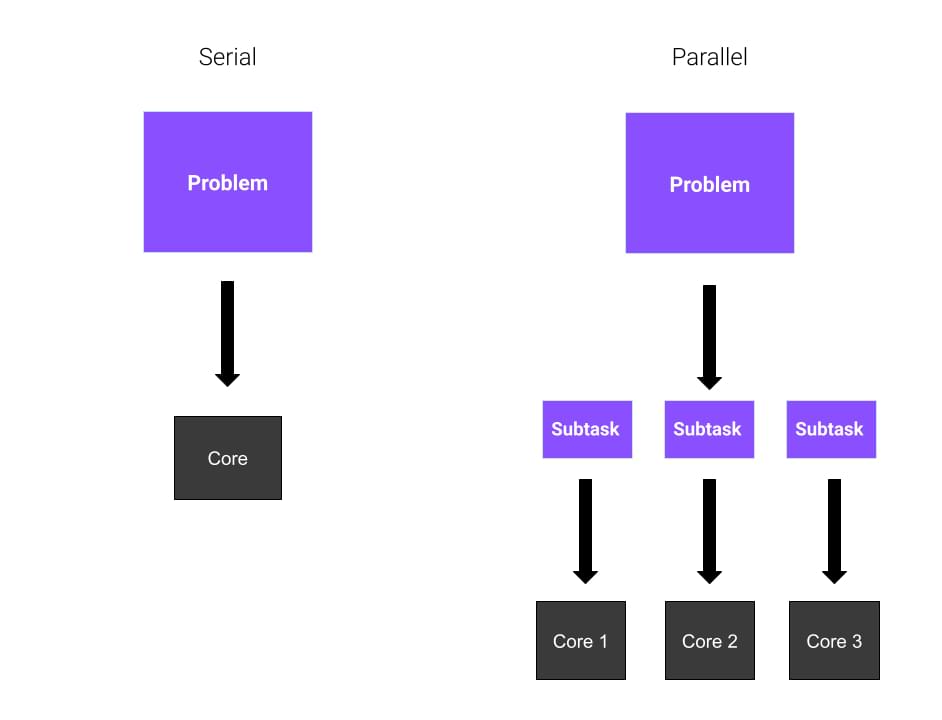

An Introduction to Parallelism


Speeding up computations is a goal that everybody wants to achieve. What if you have a script that could run ten times faster than its current running time? In this article, we’ll look at Python multiprocessing and a library called multiprocessing . We’ll talk about what multiprocessing is, its advantages, and how to improve the running time of your Python programs by using parallel programming.

Okay, so let’s go!

Before we dive into Python code, we have to talk about parallel computing , which is an important concept in computer science.

Usually, when you run a Python script, your code at some point becomes a process, and the process runs on a single core of your CPU. But modern computers have more than one core, so what if you could use more cores for your computations? It turns out that your computations will be faster.

Let’s take this as a general principle for now, but later on, in this article, we’ll see that this isn’t universally true.

Without getting into too many details, the idea behind parallelism is to write your code in such a way that it can use multiple cores of the CPU.

To make things easier, let’s look at an example.

Imagine you have a huge problem to solve, and you’re alone. You need to calculate the square root of eight different numbers. What do you do? Well, you don’t have many options. You start with the first number, and you calculate the result. Then, you go on with the others.

What if you have three friends good at math willing to help you? Each of them will calculate the square root of two numbers, and your job will be easier because the workload is distributed equally between your friends. This means that your problem will be solved faster.

Okay, so all clear? In these examples, each friend represents a core of the CPU. In the first example, the entire task is solved sequentially by you. This is called serial computing . In the second example, since you’re working with four cores in total, you’re using parallel computing . Parallel computing involves the usage of parallel processes or processes that are divided among multiple cores in a processor.

We’ve established what parallel programming is, but how do we use it? Well, we said before that parallel computing involves the execution of multiple tasks among multiple cores of the processor, meaning that those tasks are executed simultaneously. There are a few questions that you should consider before approaching parallelization. For example, are there any other optimizations that could speed up our computations?

For now, let’s take for granted that parallelization is the best solution for you. There are mainly three models in parallel computing:

- Perfectly parallel . The tasks can be run independently, and they don’t need to communicate with each other.

- Shared memory parallelism . Processes (or threads) need to communicate, so they share a global address space.

- Message passing . Processes need to share messages when needed.

In this article, we’ll illustrate the first model, which is also the simplest.

One way to achieve parallelism in Python is by using the multiprocessing module . The multiprocessing module allows you to create multiple processes, each of them with its own Python interpreter. For this reason, Python multiprocessing accomplishes process-based parallelism.

You might have heard of other libraries, like threading , which also comes built-in with Python, but there are crucial differences between them. The multiprocessing module creates new processes, while threading creates new threads.

In the next section, we’ll look at the advantages of using multiprocessing.

Here are a few benefits of multiprocessing:

- better usage of the CPU when dealing with CPU-intensive tasks

- more control over a child compared with threads

- easy to code

The first advantage is related to performance. Since multiprocessing creates new processes, you can make much better use of the computational power of your CPU by dividing your tasks among the other cores. Most processors are multi-core processors nowadays, and if you optimize your code you can save time by solving calculations in parallel.

The second advantage looks at an alternative to multiprocessing, which is multithreading. Threads are not processes though, and this has its consequences. If you create a thread, it’s dangerous to kill it or even interrupt it as you would do with a normal process. Since the comparison between multiprocessing and multithreading isn’t in the scope of this article, I encourage you to do some further reading on it.

The third advantage of multiprocessing is that it’s quite easy to implement, given that the task you’re trying to handle is suited for parallel programming.

We’re finally ready to write some Python code!

We’ll start with a very basic example and we’ll use it to illustrate the core aspects of Python multiprocessing. In this example, we’ll have two processes:

- The parent process. There’s only one parent process, which can have multiple children.

- The child process. This is spawned by the parent. Each child can also have new children.

We’re going to use the child process to execute a certain function. In this way, the parent can go on with its execution.

A simple Python multiprocessing example

Here’s the code we’ll use for this example:

In this snippet, we’ve defined a function called bubble_sort(array) . This function is a really naive implementation of the Bubble Sort sorting algorithm. If you don’t know what it is, don’t worry, because it’s not that important. The crucial thing to know is that it’s a function that does some work.

The Process class

From multiprocessing , we import the class Process . This class represents an activity that will be run in a separate process. Indeed, you can see that we’ve passed a few arguments:

- target=bubble_sort , meaning that our new process will run the bubble_sort function

- args=([1,9,4,52,6,8,4],) , which is the array passed as argument to the target function

Once we’ve created an instance of the Process class, we only need to start the process. This is done by writing p.start() . At this point, the process is started.

Before we exit, we need to wait for the child process to finish its computations. The join() method waits for the process to terminate.
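The listing this walkthrough refers to isn't shown above, so here is a minimal sketch that matches the description. The body of bubble_sort is my own assumption of what a naive implementation looks like (the return value is added only so the function is easy to check); the Process arguments are the ones quoted in the text.

```python
from multiprocessing import Process


def bubble_sort(array):
    """Naive in-place bubble sort; prints and returns the sorted list."""
    swapped = True
    while swapped:
        swapped = False
        for i in range(len(array) - 1):
            if array[i] > array[i + 1]:
                # Swap neighbours that are out of order.
                array[i], array[i + 1] = array[i + 1], array[i]
                swapped = True
    print("Array sorted:", array)
    return array


if __name__ == "__main__":
    # The child process runs bubble_sort on its own copy of the list.
    p = Process(target=bubble_sort, args=([1, 9, 4, 52, 6, 8, 4],))
    p.start()  # launch the child process
    p.join()   # wait for the child to finish before the parent exits
```

The `if __name__ == "__main__":` guard matters here: on platforms that start children by re-importing the main module, it keeps the child from trying to spawn processes of its own.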

In this example, we’ve created only one child process. As you may guess, we can create more child processes by creating more instances of the Process class.

The Pool class

What if we need to create multiple processes to handle more CPU-intensive tasks? Do we always need to start and wait explicitly for termination? The solution here is to use the Pool class.

The Pool class allows you to create a pool of worker processes, and in the following example, we’ll look at how we can use it. This is our new example:

In this code snippet, we have a cube(x) function that, despite its name, simply takes an integer and returns its square root. Easy, right?

Then, we create an instance of the Pool class, without specifying any arguments. By default, the Pool class creates one worker process per CPU core. Next, we run the map method with a few arguments.

The map method applies the cube function to every element of the iterable we provide, which, in this case, is a list of every number from 10 to N .

The huge advantage of this is that the calculations on the list are done in parallel!
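Since the snippet itself isn't reproduced above, here's a rough sketch of what it could look like. The value of N is a placeholder I picked, and the range bounds are my reading of "every number from 10 to N"; the function keeps the name cube from the text even though it computes a square root.

```python
import math
from multiprocessing import Pool

N = 100_000  # placeholder size, chosen only for illustration


def cube(x):
    # Name kept from the article; the function actually returns a square root.
    return math.sqrt(x)


if __name__ == "__main__":
    # Pool() with no arguments starts one worker per available CPU core.
    with Pool() as pool:
        # map splits the iterable into chunks and hands them to the workers.
        result = pool.map(cube, range(10, N))
    print("Program finished!", len(result), "values computed")
```

Using the pool as a context manager means the workers are cleaned up automatically when the block ends.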

Creating multiple processes and doing parallel computations is not necessarily more efficient than serial computing. For tasks that aren’t CPU-intensive, serial computation can be faster than parallel computation. For this reason, it’s important to understand when you should use multiprocessing, and that depends on the tasks you’re performing.

To convince you of this, let’s look at a simple example:

This snippet is based on the previous example. We’re solving the same problem, which is calculating the square root of N numbers, but in two ways. The first one involves the usage of Python multiprocessing, while the second one doesn’t. We’re using the perf_counter() function from the time module to measure the elapsed time.
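A comparison along these lines could be sketched as follows. The helper names (run_serial, run_parallel, timed) and the two sizes are hypothetical, and the timings you get will depend entirely on your machine, so no output is shown.

```python
import math
import time
from multiprocessing import Pool


def cube(x):
    # Same misnamed helper as before: returns the square root of x.
    return math.sqrt(x)


def run_serial(n):
    """Compute the square roots one after another in this process."""
    return [cube(x) for x in range(10, n)]


def run_parallel(n):
    """Compute the same values with a pool of worker processes."""
    with Pool() as pool:
        return pool.map(cube, range(10, n))


def timed(func, n):
    """Return (elapsed_seconds, result) for func(n)."""
    start = time.perf_counter()
    result = func(n)
    return time.perf_counter() - start, result


if __name__ == "__main__":
    for n in (10_000, 500_000):  # hypothetical small and large workloads
        t_parallel, _ = timed(run_parallel, n)
        t_serial, _ = timed(run_serial, n)
        print(f"N={n}: parallel {t_parallel:.4f}s, serial {t_serial:.4f}s")
```

Timing both versions in the same run makes it easier to see where the cost of starting workers and moving data between processes stops paying off.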

On my laptop, I get this result:

As you can see, there’s more than one second difference. So in this case, multiprocessing is better.

Let’s change something in the code, like the value of N . Let’s lower it to N=10000 and see what happens.

This is what I get now:

What happened? It seems that multiprocessing is now a bad choice. Why?

The overhead introduced by splitting the computations between the processes is too much compared to the task solved. You can see how much difference there is in terms of time performances.

In this article, we’ve talked about the performance optimization of Python code by using Python multiprocessing.

First, we briefly introduced what parallel computing is and the main models for using it. Then, we started talking about multiprocessing and its advantages. In the end, we saw that parallelizing the computations is not always the best choice and the multiprocessing module should be used for parallelizing CPU-bound tasks. As always, it’s a matter of considering the specific problem you’re facing and evaluating the pros and cons of the different solutions.

I hope you’ve found learning about Python multiprocessing as useful as I did.

What Is the Main Advantage of Using Multiprocessing in Python?

The primary advantage of using multiprocessing in Python is that it allows for the execution of multiple processes simultaneously. This is particularly beneficial when working with CPU-intensive tasks, as it enables the program to utilize multiple cores of the CPU, thereby significantly improving the speed and efficiency of the program. Unlike threading, multiprocessing does not suffer from the Global Interpreter Lock (GIL) in Python, which means that each process can run independently without being affected by other processes. This makes multiprocessing a powerful tool for parallel programming in Python.

How Does the Multiprocessing Module in Python Work?

The multiprocessing module in Python works by creating a new process for each task that needs to be executed concurrently. Each process has its own Python interpreter and memory space, which means that it can run independently of other processes. The multiprocessing module provides a number of classes and functions that make it easy to create and manage these processes. For example, the Process class is used to create a new process, while the Pool class is used to manage a pool of worker processes.

What Is the Difference Between Multiprocessing and Multithreading in Python?

The main difference between multiprocessing and multithreading in Python lies in how they handle tasks. While multiprocessing creates a new process for each task, multithreading creates a new thread within the same process. This means that while multiprocessing can take full advantage of multiple CPU cores, multithreading is limited by the Global Interpreter Lock (GIL) in Python, which allows only one thread to execute at a time. However, multithreading can still be useful for I/O-bound tasks, where the program spends most of its time waiting for input/output operations to complete.

How Can I Share Data Between Processes in Python?

Sharing data between processes in Python can be achieved using the multiprocessing module’s shared memory mechanisms. These include the Value and Array classes, which allow for the creation of shared variables and arrays respectively. Because these objects live in shared memory, a change made in one process is visible to the others. However, updates such as incrementing a counter are not atomic, so concurrent writes should be protected with locks or other synchronization primitives provided by the multiprocessing module. Ordinary Python objects, by contrast, are copied into each process, and changes to them are not reflected elsewhere.
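As an illustration, here is a small sketch of a counter shared through Value and guarded by its built-in lock. The function names and the worker/iteration counts are made up for the example.

```python
from multiprocessing import Process, Value


def add_many(counter, times):
    """Increment the shared counter `times` times, holding its lock each time."""
    for _ in range(times):
        # get_lock() returns the lock that Value creates by default;
        # without it, concurrent "+= 1" updates could be lost.
        with counter.get_lock():
            counter.value += 1


def run(workers=4, times=1000):
    counter = Value("i", 0)  # "i" = C int stored in shared memory
    procs = [Process(target=add_many, args=(counter, times))
             for _ in range(workers)]
    for p in procs:
        p.start()
    for p in procs:
        p.join()
    return counter.value


if __name__ == "__main__":
    print("Final counter value:", run())
```

The lock is the important part: reading the value, adding one, and writing it back are separate steps, so two processes can otherwise overwrite each other's update.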

What Are the Potential Pitfalls of Using Multiprocessing in Python?

While multiprocessing in Python can greatly improve the speed and efficiency of your program, it also comes with its own set of challenges. One of the main pitfalls is the increased complexity of your code. Managing multiple processes can be more complex than managing a single-threaded program, especially when it comes to handling shared data and synchronizing processes. Additionally, creating a new process is more resource-intensive than creating a new thread, which can lead to increased memory usage. Finally, not all tasks are suitable for parallelization, and in some cases, the overhead of creating and managing multiple processes can outweigh the potential performance gains.

How Can I Handle Exceptions in Multiprocessing in Python?

Handling exceptions in multiprocessing in Python can be a bit tricky, as exceptions that occur in child processes do not automatically propagate to the parent process. However, the multiprocessing module provides several ways to handle exceptions. One way is to use the Process class’s is_alive() method to check if a process is still running. If the method returns False, it means that the process has terminated, which could be due to an exception. Another way is to use the Process class’s exitcode attribute, which can provide more information about why a process terminated.
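A minimal sketch of the exitcode approach, assuming a hypothetical worker function called risky that fails on negative input:

```python
from multiprocessing import Process


def risky(x):
    """Hypothetical worker: raises on negative input."""
    if x < 0:
        raise ValueError("negative input")
    return x


if __name__ == "__main__":
    p = Process(target=risky, args=(-1,))
    p.start()
    p.join()
    # After join(), is_alive() is False. An exitcode of 0 means a clean exit;
    # a non-zero exitcode means the child ended abnormally, for example
    # because of an uncaught exception.
    print("alive:", p.is_alive(), "exitcode:", p.exitcode)
    if p.exitcode != 0:
        print("Child process failed")
```

Note that the exit code only tells you that something went wrong, not what. If the parent needs the actual exception, one option is to catch it inside the worker and send it back explicitly, for instance through a Queue.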

Can I Use Multiprocessing with Other Python Libraries?

Yes, you can use multiprocessing with other Python libraries. However, it’s important to note that not all libraries are designed to be used in a multiprocessing environment. Some libraries may not be thread-safe or may not support concurrent execution. Therefore, it’s always a good idea to check the documentation of the library you’re using to see if it supports multiprocessing.

How Can I Debug a Multiprocessing Program in Python?

Debugging a multiprocessing program in Python can be challenging, as traditional debugging tools may not work as expected in a multiprocessing environment. However, there are several techniques you can use to debug your program. One way is to use print statements or logging to track the execution of your program. Another way is to use the pdb module’s set_trace() function to set breakpoints in your code. You can also use specialized debugging tools that support multiprocessing, such as the multiprocessing module’s log_to_stderr() function, which allows you to log the activity of your processes to the standard error.

Can I Use Multiprocessing in Python on Different Operating Systems?

Yes, you can use multiprocessing in Python on different operating systems. The multiprocessing module is part of the standard Python library, which means it’s available on all platforms that support Python. However, the behavior of the multiprocessing module may vary slightly between different operating systems due to differences in how they handle processes. Therefore, it’s always a good idea to test your program on the target operating system to ensure it works as expected.
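One concrete source of these differences is the start method, that is, how child processes get created. A quick way to see what a given platform offers is sketched below; the helper name describe_start_methods is made up for the example.

```python
import multiprocessing as mp


def describe_start_methods():
    """Return (available start methods, the one currently in use)."""
    return mp.get_all_start_methods(), mp.get_start_method()


if __name__ == "__main__":
    available, current = describe_start_methods()
    print("Available start methods:", available)
    print("Start method in use:", current)
```

Running this on each target system is a cheap first check before deeper testing.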

What are some best practices for using multiprocessing in Python?

Some best practices for using multiprocessing in Python include:

- Avoid sharing data between processes whenever possible, as this can lead to complex synchronization issues.

- Use the Pool class to manage your worker processes, as it provides a higher-level interface that simplifies the process of creating and managing processes.

- Always clean up your processes by calling the Process class’s join() method, which ensures that the process has finished before the program continues.

- Handle exceptions properly to prevent your program from crashing unexpectedly.

- Test your program thoroughly to ensure it works correctly in a multiprocessing environment.


technically a blog

Parallel assignment: a python idiom cleverly optimized.

Friday, May 15, 2020

Every programmer has had to swap variables. It's common in real programs and it's a frequently used example when people want to show off just how nice and simple Python is. Python handles this case very nicely and efficiently. But how Python handles it efficiently is not always clear, so we'll have to dive into how the runtime works and disassemble some code to see what's happening. For this post, we're going to focus on CPython. This will probably be handled differently in every runtime, so PyPy and Jython will have different behavior, and probably will have similarly cool things going on!

Before we dive into disassembling some Python code (which isn't scary, I promise), let's make sure we're on the same page of what we're talking about. Here's the common example of how you would do it in a language that's Not As Great As Python:

Okay, so we've all seen that, what's the point, I'm closing the tab now. Well now we get to the part that people trumpet as evidence of Python's great brevity. Look, Python can do it in one line!
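For reference, here's a sketch of both approaches side by side, written in Python for both so they can be run together. The function names are made up for illustration.

```python
def swap_with_temp(x, y):
    """The classic three-line swap using a temporary variable."""
    temp = x
    x = y
    y = temp
    return x, y


def swap_parallel(x, y):
    """The one-line swap using parallel assignment."""
    x, y = y, x
    return x, y


print(swap_with_temp(1, 2))
print(swap_parallel(1, 2))
```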

This method is known as parallel assignment , and is present in languages like Ruby, as well. This method lets you avoid a few lines of code while improving readability, because now we can quickly see that we're doing a swap, rather than having to look through the lines carefully to ensure the swap is ordered correctly. And, we might even save some memory, depending on how this is implemented! If you followed the link to the Wikipedia article about parallel assignment, you'll see the following:

This is shorthand for an operation involving an intermediate data structure: in Python, a tuple; in Ruby, an array.

A very similar statement is made in Effective Python (a great book to read together as a team, by the way!), where the author states that a tuple is made for the right-hand side, then unpacked into the left-hand side.

This makes sense, but it isn't the whole story, which gets far more fascinating. But first, we need to know a little about how the Python runtime works.

Inside the Python runtime (remember that we're talking about CPython specifically, not Python-the-spec), there's a virtual machine and the runtime compiles code into bytecode which is then run on that virtual machine. Python ships with a disassembler you can use, and it provides handy documentation listing all the available bytecode instructions . Another thing to note is that Python's VM is stack based. That means that instead of having fixed registers, it simply has a memory stack. Each time you load a variable, it pushes onto the stack; and you can pop off the stack. Now, let's use the disassembler to take a look at how Python is actually handling this swapping business!

First, let's disassemble the "standard" swap. We define this inside a function, because we have to pass a module or a function into the disassembler.

This doesn't do anything useful, because it just swaps them in place. We didn't even declare the variables anywhere, so this has no chance of ever actually running. But, because Python is a beautiful language, we can go ahead and disassemble this anyway! If you've defined that in your Python session, you can then import dis and go ahead and disassemble it:

Stepping through this, you can see that first we have a LOAD_FAST of x which puts x onto the top of the stack. Then STORE_FAST pops the top of the stack and stores it into temp . This general pattern repeats three times, once per line of the swap. Then, at the end, we load in the return value ( None ) and return it. Okay, so this is about what we'd expect. Barring some really fancy compiler tricks, this is analogous to what I'd expect in any compiled language.

So let's take a look at the version that is idiomatic.

Once again, this isn't doing anything useful, and Python miraculously lets us disassemble this thing that would never even run. Let's see what we get this time:

And here is where we see the magic. First, we LOAD_FAST twice onto the stack. If we just go off the language spec, we'd now expect to form an intermediate tuple (the BUILD_TUPLE command is what does this, and from its absence we know that we aren't building a tuple here the way you would with x = (1,2) ). On the contrary, you see... ROT_TWO ! This is a cool instruction which takes the top two elements of the stack and "rotates" them (a math term for changing the order of things, kind of shifting everyone along with one moving from the front to the back). Then we STORE_FAST again, twice, to put it back into the variables.
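If you want to check this on your own interpreter, here's a small sketch using the dis module. The helper opnames and the comparison function build_pair are my own additions. The exact instruction names depend on the CPython version: ROT_TWO is what CPython 3.10 and earlier show, while 3.11 and later replaced the ROT_* instructions, so the listing will look different there. What the sketch checks is only the absence of BUILD_TUPLE.

```python
import dis


def swap_parallel(x, y):
    # The idiomatic swap under inspection.
    x, y = y, x


def build_pair(x, y):
    # For contrast: this really does build a tuple object.
    pair = (x, y)
    return pair


def opnames(func):
    """Return the list of bytecode instruction names for func."""
    return [ins.opname for ins in dis.get_instructions(func)]


dis.dis(swap_parallel)  # print the full disassembly
print("BUILD_TUPLE in swap:", "BUILD_TUPLE" in opnames(swap_parallel))
print("BUILD_TUPLE in build_pair:", "BUILD_TUPLE" in opnames(build_pair))
```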

The question now might be, "why do we even need ROT_TWO ? Why can't we simply change the order we store them to achieve the same effect?" This is because of how Python has defined its semantics . In Python, variables on the lefthand side of an expression are stored in order from left to right. The righthand side also has these left-to-right semantics. This matters in cases like assigning to both an index and a list:

If you didn't define the semantics, the result above would be ambiguous: will a be [0, 10] or [10, 0] after running this? It will be [0, 10] because we assign from left to right. Similar semantics apply on the righthand side for the comma operator, and the end result is that we have to do something in the middle to ensure we adhere to these semantics by changing the order of the stack.
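Here's a small sketch of that kind of assignment. The starting values are my own choice, picked so the outcome matches the [0, 10] result described above.

```python
a = [0, 0]
i = 0

# The right-hand side is evaluated first. Then the targets are assigned
# left to right: i becomes 1, and only then is a[i] (now a[1]) set to 10.
i, a[i] = 1, 10

print(i, a)  # 1 [0, 10]
```

If the targets were assigned right to left instead, a[0] would have been set while i was still 0, giving [10, 0].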

So, at the end of the day, there you have it. Parallel assignment, or swapping without another variable, does not use any extra honest-to-goodness tuples or anything under the hood in Python. It does it through a clever optimization with rotating the top of the stack!

Update 5/16 : I made a few edits to make the article clearer and avoid distracting from the content by implying/stating that people were wrong, and making certain things clearer (focus on CPython, focus on implementation vs. spec, etc.).


Unpacking in Python

Author: Aditya Raj Last Updated: May 31, 2023

Python provides us with the packing and unpacking operator to convert one iterable object to another easily. In this article, we will discuss the unpacking operator in Python with different examples.

What is the Unpacking Operator in Python?


The unpacking operator in Python is used to unpack an iterable object into individual elements. It is represented by an asterisk sign * and has the following syntax.

Here,

- The iterable_object variable represents an iterable object such as a list, tuple, set, or a Python dictionary .

- After execution of the above statement, the elements of iterable_object are unpacked. Then, we can use the packing operation to create other iterable objects.

To understand this, consider the following example.

In the above example, the * operator unpacks myList . Then, we created a set from the unpacked elements.
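A minimal sketch of this, assuming a six-element myList (the actual values in the original example aren't shown here):

```python
myList = [1, 2, 3, 4, 5, 6]  # assumed contents, for illustration only

# The * operator unpacks myList, and the braces pack the elements into a set.
mySet = {*myList}
print(mySet)

# The same idea works for other containers, e.g. a tuple.
myTuple = (*myList,)
print(myTuple)
```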

Remember that you cannot use the unpacking operator to assign the elements of the iterable object to individual elements. If you do so, the program will run into an error. You can observe this in the following example.

In this example, we have tried to assign elements from myList to six variables using the * operator. Hence, the program runs into SyntaxError exception.
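Because a SyntaxError is raised when the code is compiled, before anything runs, the sketch below passes the offending statement to compile() as a string so that the error can be caught and inspected. The variable names are assumptions based on the description.

```python
source = "a, b, c, d, e, f = *myList"

try:
    # Compilation alone is enough to trigger the error; nothing is executed.
    compile(source, "<example>", "exec")
    error = None
except SyntaxError as exc:
    error = exc

print(type(error).__name__, "-", error.msg)
```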

Instead of using the * operator, you can unpack an iterable object into multiple variables using parallel assignment. For this, you can use the following syntax.

In the above statement, variables var1 , var2 , var3 , var4 till varN are individual variables. After execution of the statement, all the variables are initialized with elements from iterable_object as shown below.

In the above example, we have six elements in myList . Hence, we have unpacked the list into six variables a , b , c , d , e , and f .
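A sketch of this, again assuming a six-element myList with made-up values:

```python
myList = [1, 2, 3, 4, 5, 6]  # assumed contents

# One variable per element, matched by position.
a, b, c, d, e, f = myList
print(a, b, c, d, e, f)
```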

In the above syntax, the number of variables must be equal to the number of elements in the iterable_object . Otherwise, the program will run into error.

For instance, if the number of variables on the left-hand side is less than the number of elements in the iterable object, the program will run into a ValueError exception saying that there are too many values to unpack. You can observe this in the following example.

In the above code, you can observe that there are six elements in the list but we have only five variables. Due to this, the program runs into a Python ValueError exception saying that there are too many values to unpack.

In a similar manner, if the number of variables on the left side of the assignment operator is greater than the number of elements in the iterable object, the program will run into a ValueError exception saying that there are not enough values to unpack. You can observe this in the following example.

In the above example, there are seven variables on the left-hand side and only six elements in the list. Due to this, the program runs into a ValueError exception saying that there aren’t enough values to unpack.
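Both error cases can be reproduced with a short sketch like the one below (list contents assumed). The messages are collected rather than left to crash the script, so the two cases can be compared.

```python
myList = [1, 2, 3, 4, 5, 6]  # assumed contents
messages = []

try:
    a, b, c, d, e = myList           # five variables, six elements
except ValueError as exc:
    messages.append(str(exc))

try:
    a, b, c, d, e, f, g = myList     # seven variables, six elements
except ValueError as exc:
    messages.append(str(exc))

for message in messages:
    print("ValueError:", message)
```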

When we have fewer variables than elements in the iterable object, we can use the * operator to unpack the iterable object using the following syntax.

In the above syntax, if there are more than N elements in the iterable_object , the first N elements are assigned to the variables var1 to varN . The rest of the elements are packed in a list and assigned to the variable var . You can observe this in the following example.

In the above example, we have six elements in the list. On the left hand side, we have four variables with the last variable containing the * sign. You can observe that the three variables are assigned individual elements whereas the variable containing the * operator gets all the remaining elements in a list.
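Sketched in code, with assumed list contents and variable names:

```python
myList = [1, 2, 3, 4, 5, 6]  # assumed contents

# a, b and c take the first three elements; *d collects the rest in a list.
a, b, c, *d = myList
print(a, b, c, d)  # 1 2 3 [4, 5, 6]
```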

Now, let us move the variable containing the * operator to the start of the expression as shown below.

In this case, if there are more than N elements in the iterable_object , the last N elements are assigned to the variables var1 to varN . The remaining elements from the start are assigned to variable var in a list. You can observe this in the following example.
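A sketch with the starred variable first (list contents and names assumed):

```python
myList = [1, 2, 3, 4, 5, 6]  # assumed contents

# b, c and d take the last three elements; *a collects everything before them.
*a, b, c, d = myList
print(a, b, c, d)  # [1, 2, 3] 4 5 6
```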

We can also put the variable containing the * operator in between the variables on the left-hand side of the assignment operator. For example, consider the following syntax.

In the above syntax, there are M variables on the left-hand side of var and N-M variables on the right-hand side of var . Now, if the object iterable_object has more than N elements,

- First M elements of iterable_object are assigned to the variables var1 to varM .

- The last N-M elements in iterable_object are assigned to the variables varM+1 to varN .

- The rest of the elements in the middle are assigned to the variable var as a list.

You can observe this in the following example.
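A sketch with the starred variable in the middle, using assumed list contents and two plain variables before it and one after:

```python
myList = [1, 2, 3, 4, 5, 6]  # assumed contents

# a and b take the first two elements, d takes the last one,
# and *c collects whatever is left in the middle.
a, b, *c, d = myList
print(a, b, c, d)  # 1 2 [3, 4, 5] 6
```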

In this article, we discussed how to use the unpacking operator in Python. The unpacking operation works the same in lists, sets, and tuples. In dictionaries, only the keys of the dictionary are unpacked when using the unpacking operator.
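To illustrate the point about dictionaries, here is a short sketch with a made-up dictionary:

```python
myDict = {"a": 1, "b": 2, "c": 3}  # hypothetical dictionary

# Unpacking with * yields the keys only.
keys = [*myDict]
print(keys)  # ['a', 'b', 'c']

# Parallel assignment also assigns the keys, not the values.
x, y, z = myDict
print(x, y, z)  # a b c
```

To unpack the values instead, you can unpack myDict.values() in the same way.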


I hope you enjoyed reading this article. Stay tuned for more informative articles.

Happy Learning!


Parallelization tutorial

Training materials for parallelization with Python, R, Julia, MATLAB and C/C++, including use of the GPU with Python and Julia. See the top menu for pages specific to each language.


This project is maintained by berkeley-scf , the UC Berkeley Statistical Computing Facility


Parallel processing in Python

Python provides a variety of functionality for parallelization, including threaded operations (in particular for linear algebra), parallel looping and map statements, and parallelization across multiple machines. For the CPU, this material focuses on Python’s ipyparallel package and JAX, with some discussion of Dask and Ray. For the GPU, the material focuses on PyTorch and JAX, with a bit of discussion of CuPy.

Note that all of the looping-based functionality discussed here applies only if the iterations/loops of your calculations can be done completely separately and do not depend on one another. This scenario is called an embarrassingly parallel computation. So coding up the evolution of a time series or a Markov chain is not possible using these tools. However, bootstrapping, random forests, simulation studies, cross-validation and many other statistical methods can be handled in this way.

2 Threading

The BLAS is the library of basic linear algebra operations (written in Fortran or C). A fast BLAS can greatly speed up linear algebra relative to the default BLAS on a machine. Some fast BLAS libraries are

- Intel’s MKL ; may be available for educational use for free

- OpenBLAS ; open source and free

- vecLib for Macs; provided with your Mac

In addition to being fast when used on a single core, all of these BLAS libraries are threaded - if your computer has multiple cores and there are free resources, your linear algebra will use multiple cores, provided your installed Python is linked against the threaded BLAS installed on your machine.

To use a fast, threaded BLAS, one approach is to use the Anaconda/Miniconda Python distribution. When you install numpy and scipy, these should be automatically linked against a fast, threaded BLAS (MKL). More generally, simply installing numpy from PyPI should make use of OpenBLAS .

Threading in Python is limited to linear algebra (provided Python is linked against a threaded BLAS), unless you are using Dask, JAX, or various other packages. Python has something called the Global Interpreter Lock that interferes with threading in Python (but not in threaded linear algebra packages called by Python).

Here’s some linear algebra in Python that will use threading if numpy is linked against a threaded BLAS, though I don’t compare the timing for different numbers of threads here.

If you watch the Python process via the top command, you should see CPU usage above 100% if Python is linking to a threaded BLAS.

In general, threaded code will detect the number of cores available on a machine and make use of them. However, you can also explicitly control the number of threads available to a process.

For most threaded code (that is, code based on the OpenMP protocol), the number of threads can be set via the OMP_NUM_THREADS environment variable. Note that under some circumstances you may need to use VECLIB_MAXIMUM_THREADS if on an (older, Intel-based) Mac or MKL_NUM_THREADS if numpy/scipy are linked against MKL.

For example, to set it for four threads in bash, do this before starting your Python session.
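If changing the shell environment is inconvenient (e.g., in a notebook), a commonly used alternative is to set the variable from within Python before numpy is first imported. This is only a sketch: it assumes the thread count is read when the BLAS library loads, and whether the variable is honored depends on which BLAS numpy is linked against.

```python
import os

# This must run before numpy (or any other BLAS/OpenMP-backed library) is first
# imported, because the thread count is typically read when the library loads.
os.environ["OMP_NUM_THREADS"] = "4"

import numpy as np  # picks up the setting only if numpy was not already imported

x = np.ones((200, 200))
y = x @ x  # linear algebra that would use up to four threads with a threaded BLAS
```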

Alternatively, you can set OMP_NUM_THREADS as you invoke your job, e.g.,
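In a shell this is usually done by prefixing the command with the assignment, as in `OMP_NUM_THREADS=4 python job.py` (where `job.py` is a hypothetical script name). The same idea can be sketched from Python by launching the job with a modified copy of the environment. Here a one-line child process stands in for a real job and simply reports the value it sees.

```python
import os
import subprocess
import sys

# Copy the current environment and override the thread count for the child only.
env = dict(os.environ, OMP_NUM_THREADS="4")

# Stand-in for a real job script: the child just prints the value it was given.
child_code = "import os; print(os.environ['OMP_NUM_THREADS'])"
result = subprocess.run(
    [sys.executable, "-c", child_code],
    env=env,
    capture_output=True,
    text=True,
    check=True,
)
print(result.stdout.strip())
```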

3 Basic parallelized loops/maps/apply using ipyparallel

3.1.1 Starting the workers

First we’ll cover IPython Parallel (i.e., the ipyparallel package) functionality, which allows one to parallelize on a single machine (discussed here) or across multiple machines (see next section). In later sections, I’ll discuss other packages that can be used for parallelization.

First we need to start our workers. As of ipyparallel version 7, we can start the workers from within Python.

3.1.2 Testing our workers

Let’s verify that things seem set up ok and we can interact with all our workers:

dview stands for a ‘direct view’, which is an interface to our cluster that allows us to ‘manually’ send tasks to the workers.

3.1.3 Parallelized machine learning example: setup

Now let’s see an example of how we can use our workers to run code in parallel.

We’ll carry out a statistics/machine learning prediction method (random forest regression) with leave-one-out cross-validation, parallelizing over different held out data.

First let’s set up packages, data and our main function on the workers:

3.1.4 Parallelized machine learning example: execution

Now let’s set up a “load-balanced view”. With this type of interface, one submits the tasks and the controller decides how to divide up the tasks, ideally achieving good load balancing. A load-balanced computation is one that keeps all the workers busy throughout the computation.

3.1.5 Starting the workers outside Python

One can also start the workers outside of Python. This was required in older versions of ipyparallel, before version 7.

Now in Python, we can connect to the running workers:

Finally, stop the workers.

One can also use ipyparallel across multiple nodes, though getting the worker processes started is a bit more involved.

If we are using the SLURM scheduling software, here’s how we start up the worker processes:

At this point you should be able to connect to the running cluster using the syntax seen for single-node usage.

Warning: Be careful to set the sleep period long enough that the controller starts before you try to start the workers, and that the workers start before you try to connect to them from within Python.

After doing your computations and quitting your main Python session, shut down the cluster of workers:

To start the engines outside of Slurm (provided all machines share a filesystem), you should be able to ssh to each machine and run ipengine & once for each worker process you want to start. In some, but not all, cases (depending on how the network is set up) you may not need the --location flag, but if you do, it should be set to the name of the machine you’re working on, e.g., by using the HOST environment variable. Here we start all the workers on a single other machine, “other_host”:

4 Dask and Ray

Dask and Ray are powerful packages for parallelization that allow one to parallelize tasks in similar fashion to ipyparallel. But they also provide additional useful functionality: Dask allows one to work with large datasets that are split up across multiple processes on (potentially) multiple nodes, providing Spark/Hadoop-like functionality. Ray allows one to develop complicated apps that execute in parallel using the notion of actors.

For more details on using distributed datasets with Dask, see this Dask tutorial. For more details on Ray’s actors, please see the Ray documentation.

There are various ways to do parallel loops in Dask, as discussed in detail in this Dask tutorial.

Here’s an example of doing it with “delayed” calculations set up via list comprehension. First we’ll start workers on a single machine. One can also start workers on multiple machines, as discussed in the tutorial linked to just above.

Now we’ll execute a set of tasks in parallel by wrapping the function of interest in dask.delayed to set up lazy evaluation that will be done in parallel using the workers already set up with the ‘processes’ scheduler above.

Execution only starts when we call dask.compute .
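Here is a minimal sketch of the delayed pattern. The task function `simulate_mean` is hypothetical. Note one deliberate difference from the setup described above: I pass the threaded scheduler directly to `dask.compute` so that the snippet runs as-is in any context, whereas the ‘processes’ scheduler is what you would use to get separate worker processes.

```python
import dask
import numpy as np

def simulate_mean(seed, n=10_000):
    # Hypothetical task: the mean of n standard normal draws, with a per-task seed.
    rng = np.random.default_rng(seed)
    return rng.normal(size=n).mean()

# Nothing is computed here; each call just records a task to be run later.
tasks = [dask.delayed(simulate_mean)(seed) for seed in range(8)]

# Execution happens now. The threaded scheduler is an assumption made for
# portability; swap in scheduler="processes" to use worker processes.
results = dask.compute(*tasks, scheduler="threads")
print(len(results))
```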

Note that we set a separate seed for each task to try to ensure independent random numbers between tasks, but Section 5 discusses better ways to do this.

We’ll start up workers on a single machine. To run across multiple machines, see this tutorial or the Ray documentation.

To run a computation in parallel, we decorate the function of interest with the @ray.remote decorator:

5 Random number generation (RNG) in parallel

The key thing when thinking about random numbers in a parallel context is that you want to avoid having the same ‘random’ numbers occur on multiple processes. On a computer, random numbers are not actually random but are generated as a sequence of pseudo-random numbers designed to mimic true random numbers. The sequence is finite (but very long) and eventually repeats itself. When one sets a seed, one is choosing a position in that sequence to start from. Subsequent random numbers are based on that subsequence. All random numbers can be generated from one or more random uniform numbers, so we can just think about a sequence of values between 0 and 1.

The worst thing that could happen is that one sets things up in such a way that every process is using the same sequence of random numbers. This could happen if you mistakenly set the same seed in each process, e.g., using rng = np.random.default_rng(1) or np.random.seed(1) in Python for every worker.
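The following sketch mimics that mistake without any actual parallelization: three “workers” (here just three function calls, with the hypothetical name `worker_draws`) each set the same seed and therefore produce identical draws.

```python
import numpy as np

def worker_draws(seed, n=3):
    # Each 'worker' creates its own generator from the seed it is given.
    rng = np.random.default_rng(seed)
    return rng.uniform(size=n)

# The mistake: every worker uses seed 1, so every worker gets the same numbers.
same = [worker_draws(1) for _ in range(3)]
print(np.array_equal(same[0], same[1]) and np.array_equal(same[1], same[2]))
```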

The naive approach is to use a different seed for each process. For example, if your processes are numbered id = 1, 2, ..., p, with id unique to each process, you would set the seed on each process to the value of id. This is likely not to cause problems, but raises the danger that two (or more) subsequences might overlap. For an algorithm with dependence on the full subsequence, such as an MCMC, this probably won’t cause big problems (though you likely wouldn’t know if it did), but for something like simple simulation studies, some of your ‘independent’ samples could be exact replicates of a sample on another process. Given the period length of the default generator in Python, this is actually quite unlikely, but it is a bit sloppy.

To avoid this problem, the key is to use an algorithm that ensures sequences that do not overlap.

In recent versions of numpy there has been attention paid to this problem and there are now multiple approaches to getting high-quality random number generation for parallel code.

One approach is to generate one random seed per task such that the blocks of random numbers avoid overlapping with high probability, as implemented in numpy’s SeedSequence approach.
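A minimal sketch of the spawning step on its own, without any parallel backend: a single top-level seed is turned into one child seed per task, and each task builds its own generator from its child seed. The function `task` is hypothetical.

```python
import numpy as np

n_tasks = 4
ss = np.random.SeedSequence(1)    # single top-level seed for the whole computation
child_seeds = ss.spawn(n_tasks)   # one child SeedSequence per task

def task(child_seed, n=3):
    # Each task creates its own generator from the child seed it was sent.
    rng = np.random.default_rng(child_seed)
    return rng.normal(size=n)

draws = [task(s) for s in child_seeds]
```

In a parallel setting, one would pass `child_seeds` as the argument being mapped over, so that each task receives its own child seed.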

Here we use that approach within the context of an ipyparallel load-balanced view.

A second approach is to advance the state of the random number generator as if a large number of random numbers had been drawn.
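A minimal sketch of this idea using numpy’s PCG64 bit generator, whose `jumped` method returns a new bit generator with the state moved far ahead in the sequence. Task `i` gets the base state jumped `i` times, so the tasks draw from widely separated blocks of the same underlying sequence. The seed and number of tasks are arbitrary choices.

```python
import numpy as np

seed = 1
n_tasks = 4

# jumped(i) returns a new bit generator whose state is as if i very large
# blocks of random numbers had already been drawn from the base generator.
rngs = [np.random.Generator(np.random.PCG64(seed).jumped(i)) for i in range(n_tasks)]

draws = [rng.normal(size=3) for rng in rngs]
```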

Note that above, I’ve done everything at the level of the computational tasks. One could presumably do this at the level of the workers, but one would need to figure out how to maintain the state of the generator from one task to the next for any given worker.

6 Using the GPU via PyTorch

Python is the go-to language used to run computations on a GPU. Some of the packages that can easily offload computations to the GPU include PyTorch, TensorFlow, JAX, and CuPy. (Of course PyTorch and TensorFlow are famously used for deep learning, but they’re also general numerical computing packages.) We’ll discuss some of these.

There are a few key things to remember about using a GPU:

- Transferring data between the CPU and the GPU takes time. If possible, generate the data on the GPU or keep the data on the GPU when carrying out a sequence of operations.

- GPU calculations often default to 32-bit floating point numbers. This can affect speed comparisons between CPU and GPU if one doesn’t compare operations with the same types of floating point numbers.

- GPU operations are often asynchronous. In the examples below, note syntax that ensures the operation is done before timing concludes (e.g., cuda.synchronize for PyTorch and block_until_ready for JAX).
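The timing pattern in the last point can be sketched as follows, assuming PyTorch is installed. The code falls back to the CPU when no CUDA GPU is available, in which case no synchronization is needed. The matrix size is an arbitrary small value.

```python
import time
import torch

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

n = 500  # illustrative size
x = torch.randn(n, n, device=device)  # 32-bit floats by default, created on the device

if device.type == "cuda":
    torch.cuda.synchronize()   # make sure the setup work has finished
t0 = time.perf_counter()
y = x @ x
if device.type == "cuda":
    torch.cuda.synchronize()   # wait for the asynchronous GPU computation to finish
elapsed = time.perf_counter() - t0

print(f"{elapsed:.4f} seconds on {device.type}")
```

Without the second synchronize call, the timer on a GPU could stop before the multiplication has actually finished.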

Note that for this section, I’m pasting in the output when running the code separately on a machine with a GPU because this document is generated on a machine without a GPU.

By default PyTorch will use 32-bit numbers.

So we achieved a speedup of about 100-fold over a single CPU core using an A100 GPU in this case.

Let’s consider the time for copying data to the GPU:

This suggests that the time in copying the data is similar to that for doing the matrix multiplication.

We can generate data on the GPU like this:
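A minimal sketch contrasting the two options, assuming PyTorch is installed (with a fallback to the CPU when no CUDA GPU is present, in which case the two options are equivalent):

```python
import torch

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Option 1: create the data on the CPU, then copy it to the device (a transfer).
x_cpu = torch.randn(1000, 1000)
x_copied = x_cpu.to(device)

# Option 2: create the data directly on the device (no transfer needed).
x_dev = torch.randn(1000, 1000, device=device)

print(x_copied.device, x_dev.device)
```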

Here we’ll consider using the GPU for vectorized calculations. We’ll compare using numpy, CPU-based PyTorch, and GPU-based PyTorch, again with 32-bit numbers.

So using the GPU speeds things up by 150-fold (compared to numpy) and 250-fold (compared to CPU-based PyTorch).

One can also have PyTorch “fuse” the operations in the loop, which avoids having the different vectorized operations in myfun being done in separate loops under the hood. For an overview of loop fusion, see this discussion in the context of Julia.

To fuse the operations, we need to have the function in a module. In this case I defined myfun_torch in myfun_torch.py, and we need to compile the code using torch.jit.script.

So that seems to give a 2-3 fold speedup compared to without loop fusion.

One can also use PyTorch to run computations on the GPU that comes with Apple’s M2 chips.

The “backend” is called “MPS” (Metal Performance Shaders), where “M” stands for “Metal”, which is what Apple calls its GPU framework.

So there is about a two-fold speedup, which isn’t impressive compared to the speedup on a standard GPU.

Let’s see how much time is involved in transferring the data.

So it looks like the transfer time is pretty small compared to the computation time (and to the savings involved in using the M2 GPU).

7 Using JAX (for CPU and GPU)

You can think of JAX as a version of numpy enabled to use the GPU (or automatically parallelize on CPU threads) and provide automatic differentiation.

One can also use just-in-time (JIT) compilation with JAX. Behind the scenes, the instructions are compiled to machine code for different backends (e.g., CPU and GPU) using XLA.

Let’s first consider running a vectorized calculation using JAX on the CPU, which will use multiple threads, each thread running on a separate CPU core on our computer.

There’s a nice speedup compared to numpy.

Since JAX will often execute computations asynchronously (in particular when using the GPU), the block_until_ready invocation ensures that the computation finishes before we stop timing.
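A minimal sketch of such a timing comparison, assuming JAX is installed. The function body used for `myfun_np` and `myfun_jnp` is a hypothetical stand-in for a vectorized calculation, and the array size is arbitrary. I don’t show timings, since they depend on the machine.

```python
import time
import numpy as np
import jax.numpy as jnp

def myfun_np(x):
    # Hypothetical vectorized calculation using numpy.
    return np.exp(x) + 3 * np.sin(x)

def myfun_jnp(x):
    # The same calculation using JAX's numpy-like interface.
    return jnp.exp(x) + 3 * jnp.sin(x)

n = 1_000_000
x_np = np.random.default_rng(1).normal(size=n).astype(np.float32)  # 32-bit for comparability
x_jax = jnp.array(x_np)

t0 = time.perf_counter()
y_np = myfun_np(x_np)
t_np = time.perf_counter() - t0

t0 = time.perf_counter()
y_jax = myfun_jnp(x_jax).block_until_ready()  # wait for the computation to finish
t_jax = time.perf_counter() - t0

print(f"numpy: {t_np:.4f} s, JAX: {t_jax:.4f} s")
```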

By default the JAX floating point type is 32-bit so we forced the use of 32-bit numbers for numpy for comparability. One could have JAX use 64-bit numbers like this:
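A minimal sketch, assuming JAX is installed. The configuration flag should be set at startup, before any JAX arrays are created.

```python
import jax

# Enable 64-bit floating point numbers; do this before creating any arrays.
jax.config.update("jax_enable_x64", True)

import jax.numpy as jnp

x = jnp.array([1.2, 3.5, 4.2])
print(x.dtype)
```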

Next let’s consider JIT compiling it, which should fuse the vectorized operations and avoid temporary objects. The JAX docs have a nice discussion of when JIT compilation will be beneficial.
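A minimal sketch of JIT compiling a function, assuming JAX is installed. The body of `myfun` is a hypothetical stand-in for a vectorized calculation. The first call triggers compilation, so any timing should be done on a later call.

```python
import jax
import jax.numpy as jnp

def myfun(x):
    # Hypothetical vectorized calculation with several elementwise operations.
    return jnp.exp(x) + 3 * jnp.sin(x)

myfun_jit = jax.jit(myfun)

x = jnp.linspace(0.0, 1.0, 1000)

_ = myfun_jit(x).block_until_ready()  # first call: traces and compiles the function
y = myfun_jit(x).block_until_ready()  # later calls reuse the compiled code
```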

So that gives another almost 2x speedup.

Linear algebra in JAX will use multiple threads (as discussed for numpy). Here we’ll compare 64-bit calculation, since matrix decompositions sometimes need more precision.

So here the matrix multiplication is slower using JAX with 64-bit numbers but the Cholesky is a bit faster. If one uses 32-bit numbers, JAX is faster for both (not shown).

In general, the JAX speedups are not huge, which is not surprising given both approaches are using multiple threads to carry out the linear algebra. At the least it indicates one can move a numpy workflow to JAX without worrying about losing the threaded BLAS speed of numpy.

Getting threaded CPU computation automatically is nice, but the real benefit of JAX comes in offloading computations to the GPU (and in providing automatic differentiation, not discussed in this tutorial). If a GPU is available and a GPU-enabled JAX is installed, JAX will generally try to use the GPU.

Note my general comments about using the GPU in the PyTorch section.

We’ll just repeat the experiments we ran earlier comparing numpy- and JAX-based calculations, but on a machine with an A100 GPU.

So that gives a speedup of more than 100x.

JIT compilation helps a bit (about 2x).

Finally, here’s the linear algebra example on the GPU.

Again we get a very impressive speedup.

As discussed elsewhere in this tutorial, it takes time to transfer data to and from the GPU, so it’s best to generate values on the GPU and keep objects on the GPU when possible.

Also, JAX arrays are designed to be manipulated as whole objects, rather than by manipulating individual values.

We can use JAX’s vmap to automatically vectorize a map operation. Unlike numpy’s vectorize or apply_along_axis, which are just handy syntax (“syntactic sugar”) and don’t actually speed anything up (because the looping is still done in Python), vmap actually vectorizes the loop. Behind the scenes it generates a vectorized version of the code that can run in parallel on CPU or GPU.
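A minimal sketch of vmap, assuming JAX is installed. The function `row_stat` is hypothetical and is written to operate on a single one-dimensional row; vmap turns it into a function that operates on all rows of a matrix at once. The explicit Python loop is included only to check that the two give the same answer.

```python
import jax
import jax.numpy as jnp

def row_stat(row):
    # Hypothetical calculation written for a single 1-d row.
    return jnp.sum(row ** 2) / (1.0 + jnp.max(row))

x = jnp.arange(12.0).reshape(3, 4)

batched = jax.vmap(row_stat)  # maps over the leading axis (the rows) by default
out = batched(x)

# Equivalent result from an explicit Python loop over the rows.
loop = jnp.stack([row_stat(r) for r in x])
print(out.shape)
```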