Social Work Supervisor resume examples for 2024

A social work supervisor's resume should highlight their ability to manage and support a team of social workers while also providing direct social services to clients. According to Samson Chama, Professor at Alabama A&M University, "social workers who are novice to technology will need to motivate and reinvent themselves by willingly acquiring knowledge and skills in latest cutting-edge technology." This includes proficiency in relevant software packages and the ability to work effectively from any point of reference. Michael Heron, Assistant Professor at Saginaw Valley State University, emphasizes the importance of critical thinking, interpersonal communication, and being proactive, while Lynette Reitz, MSW Program Director and Associate Professor at Montclair State University, stresses the importance of demonstrating field experience, cultural sensitivity, empowerment, and dependability.

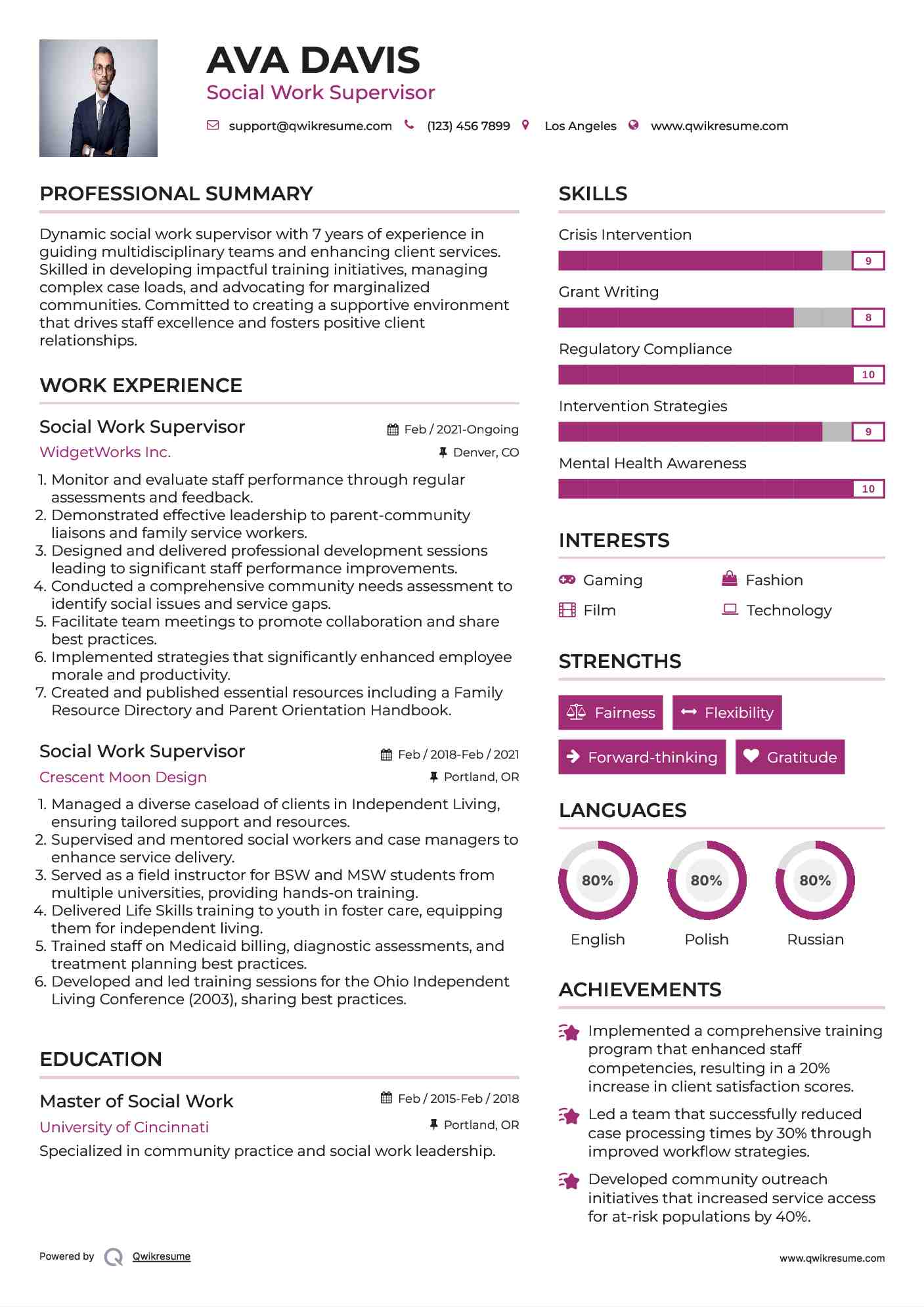

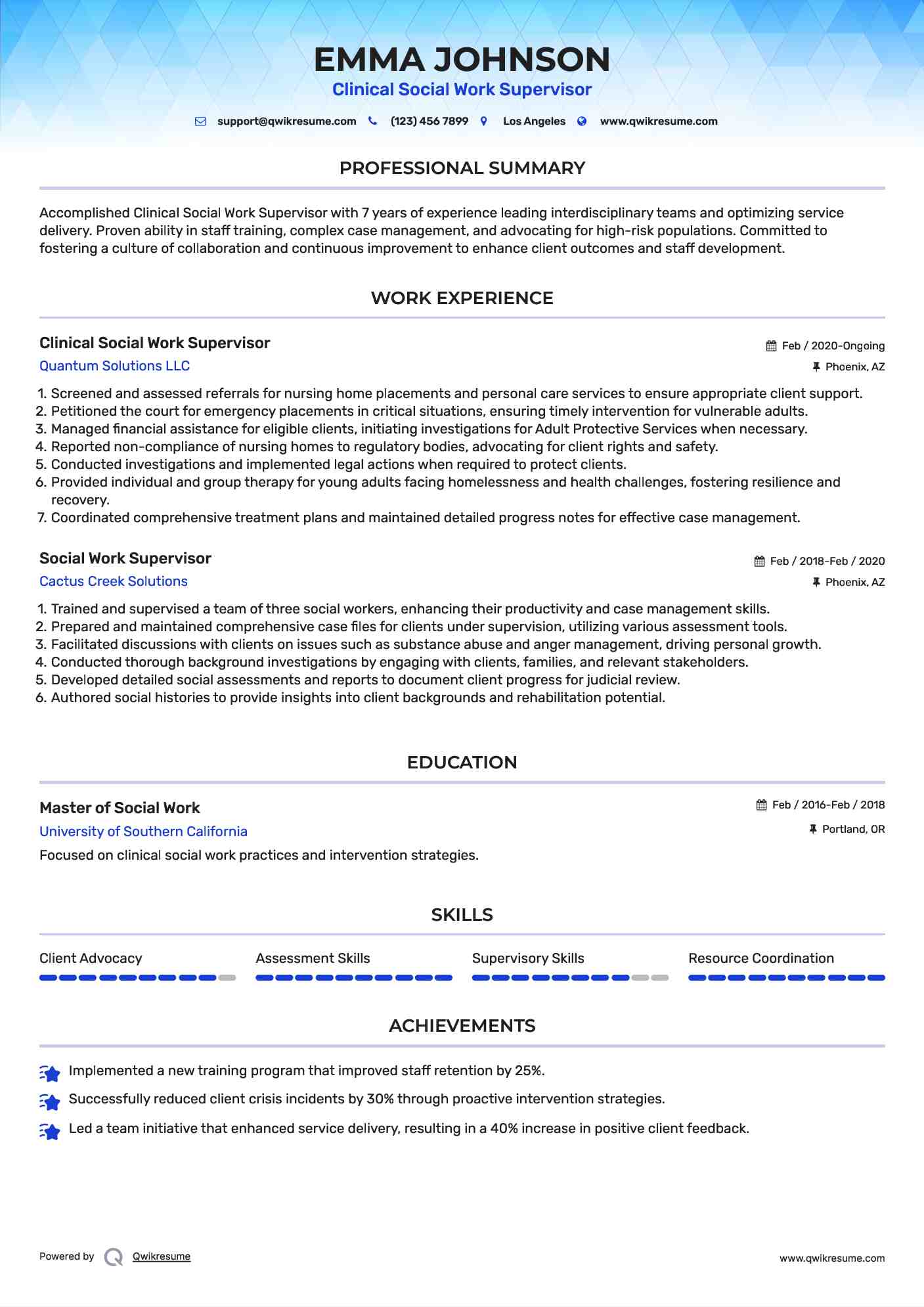

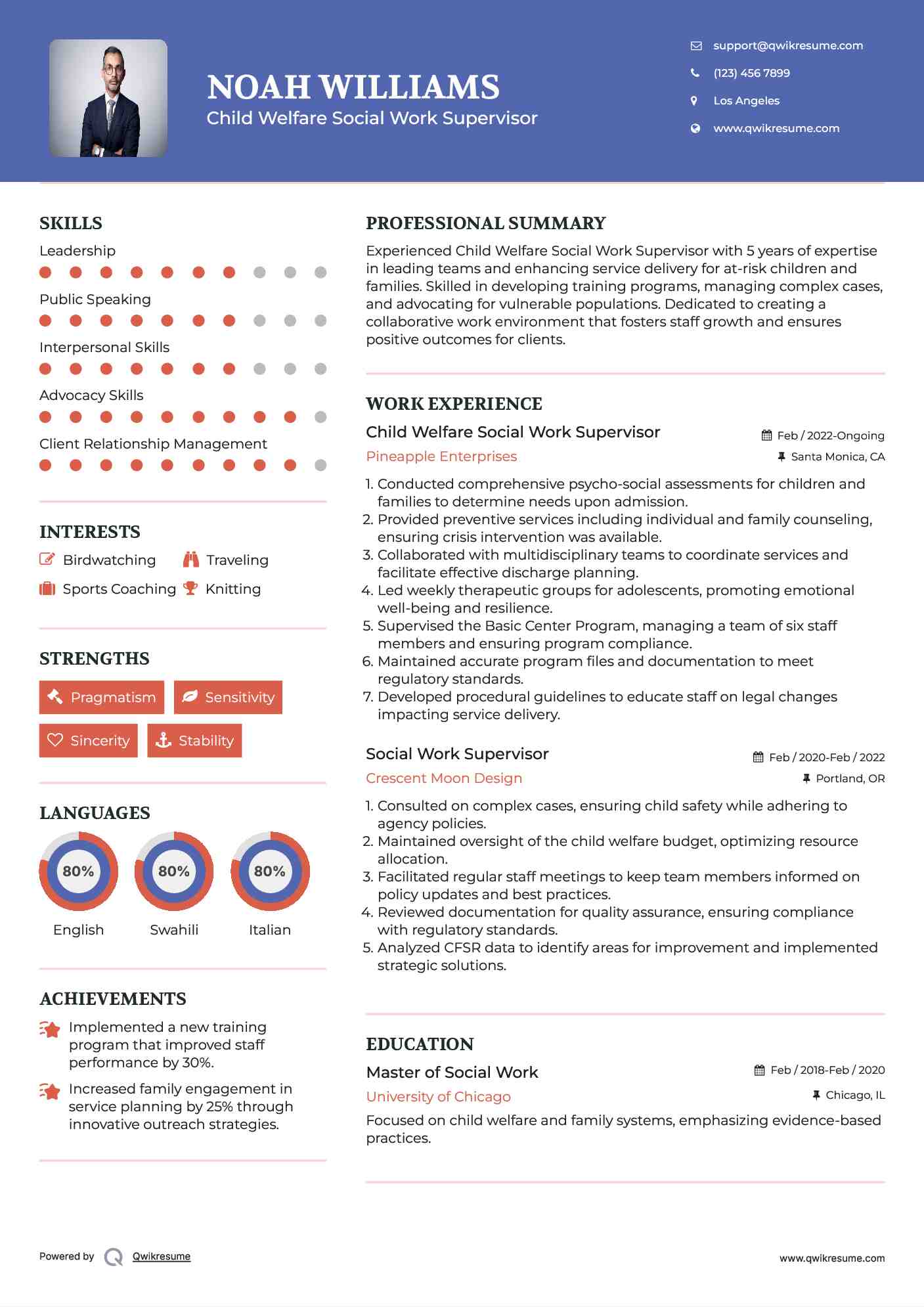

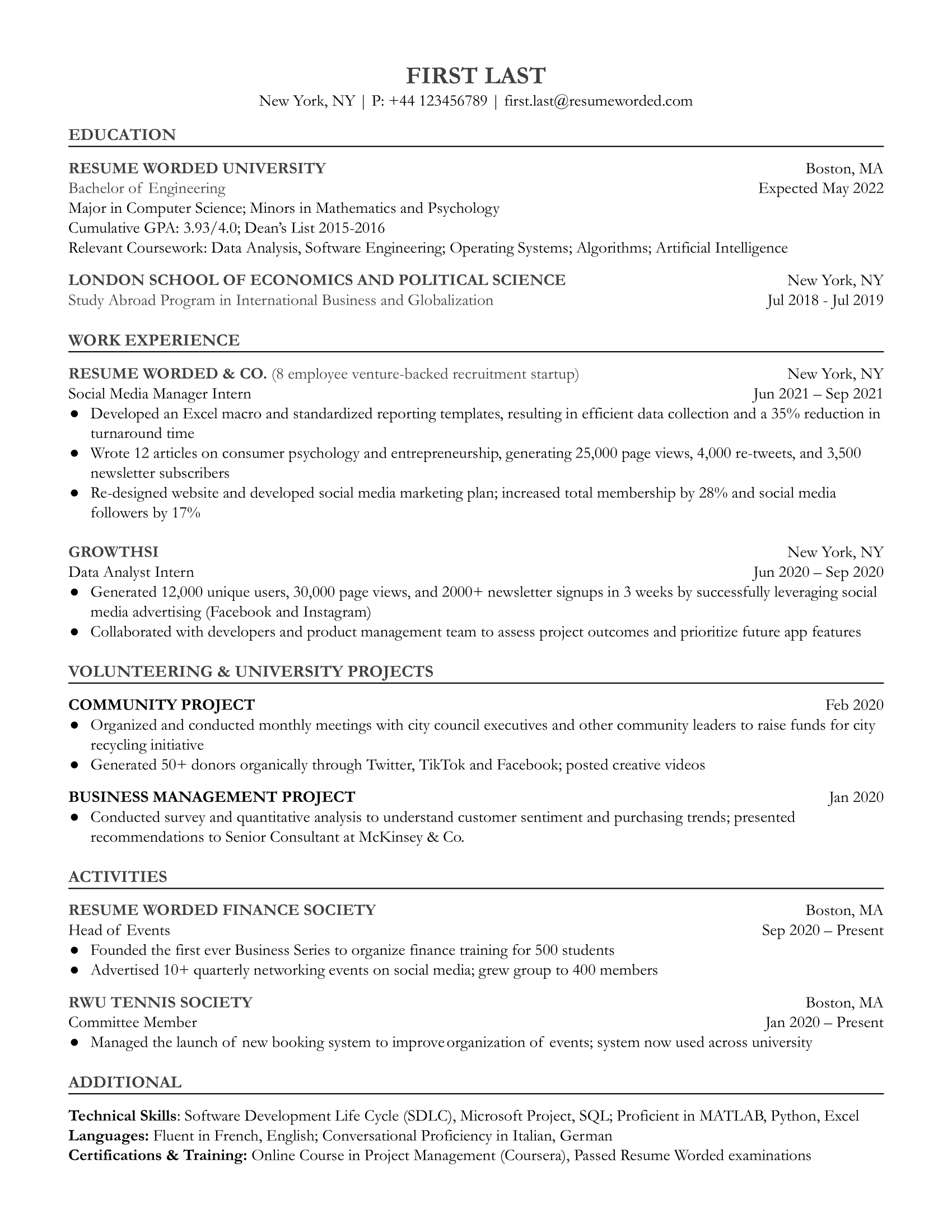

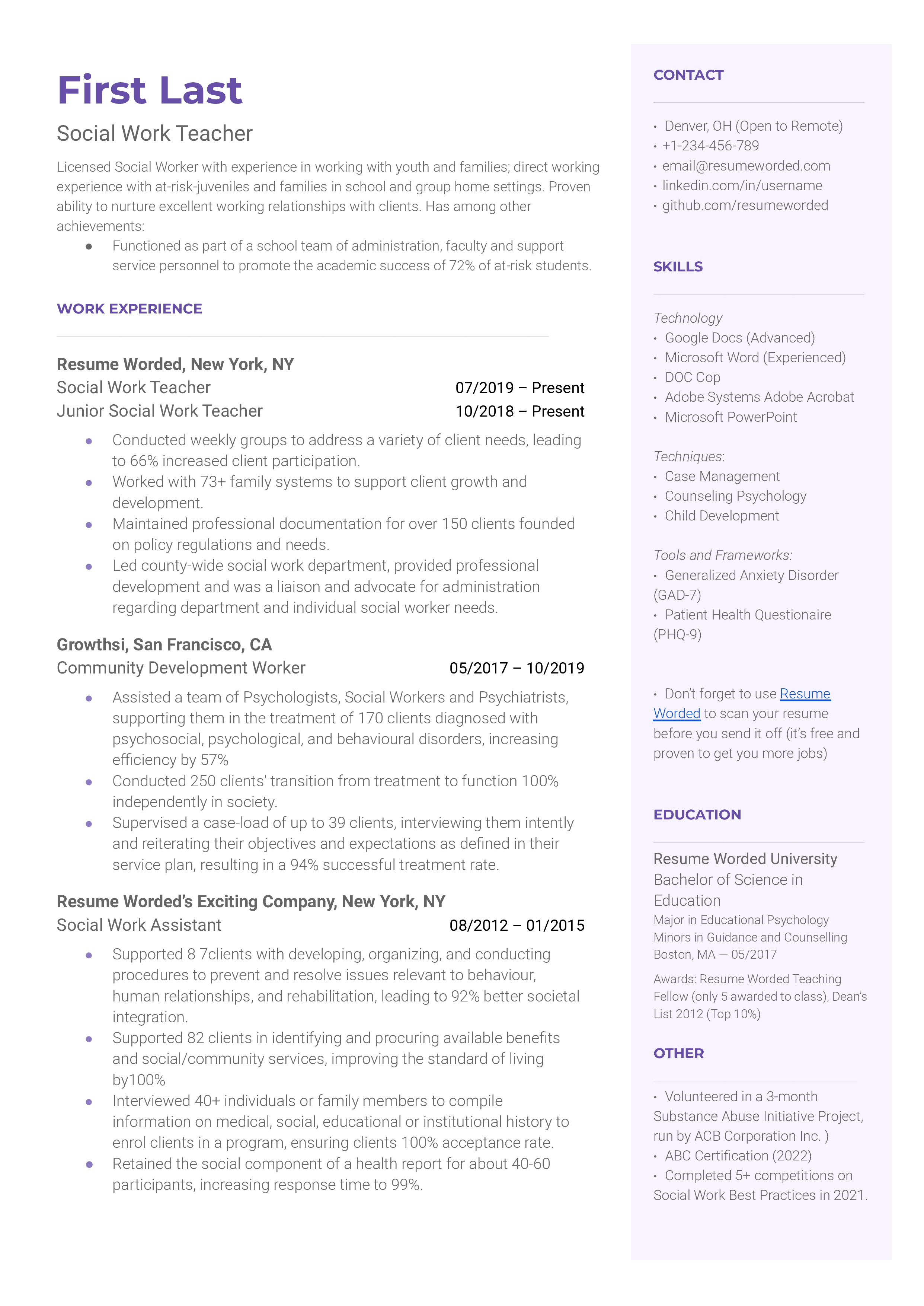

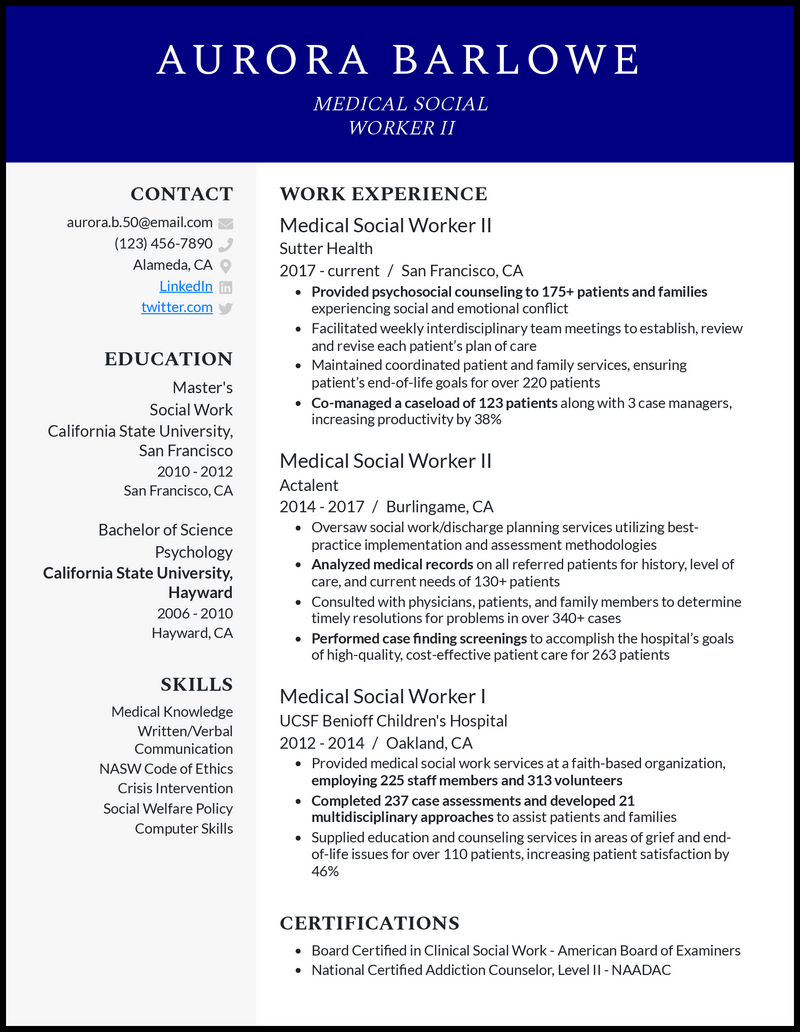

Social Work Supervisor resume example

How to format your social work supervisor resume:

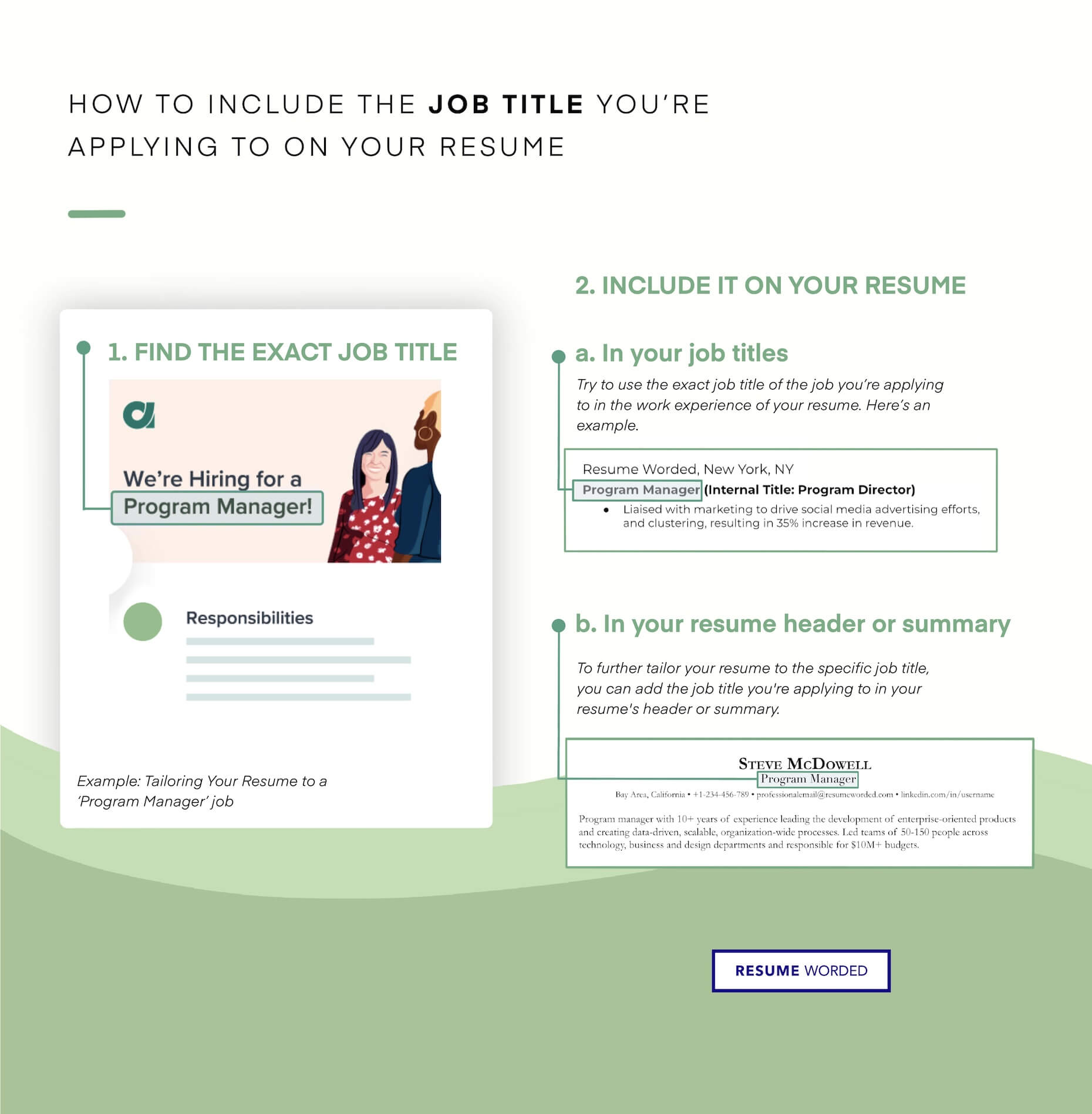

- Use the same job title on your resume as the one in the job posting for the social work supervisor role.

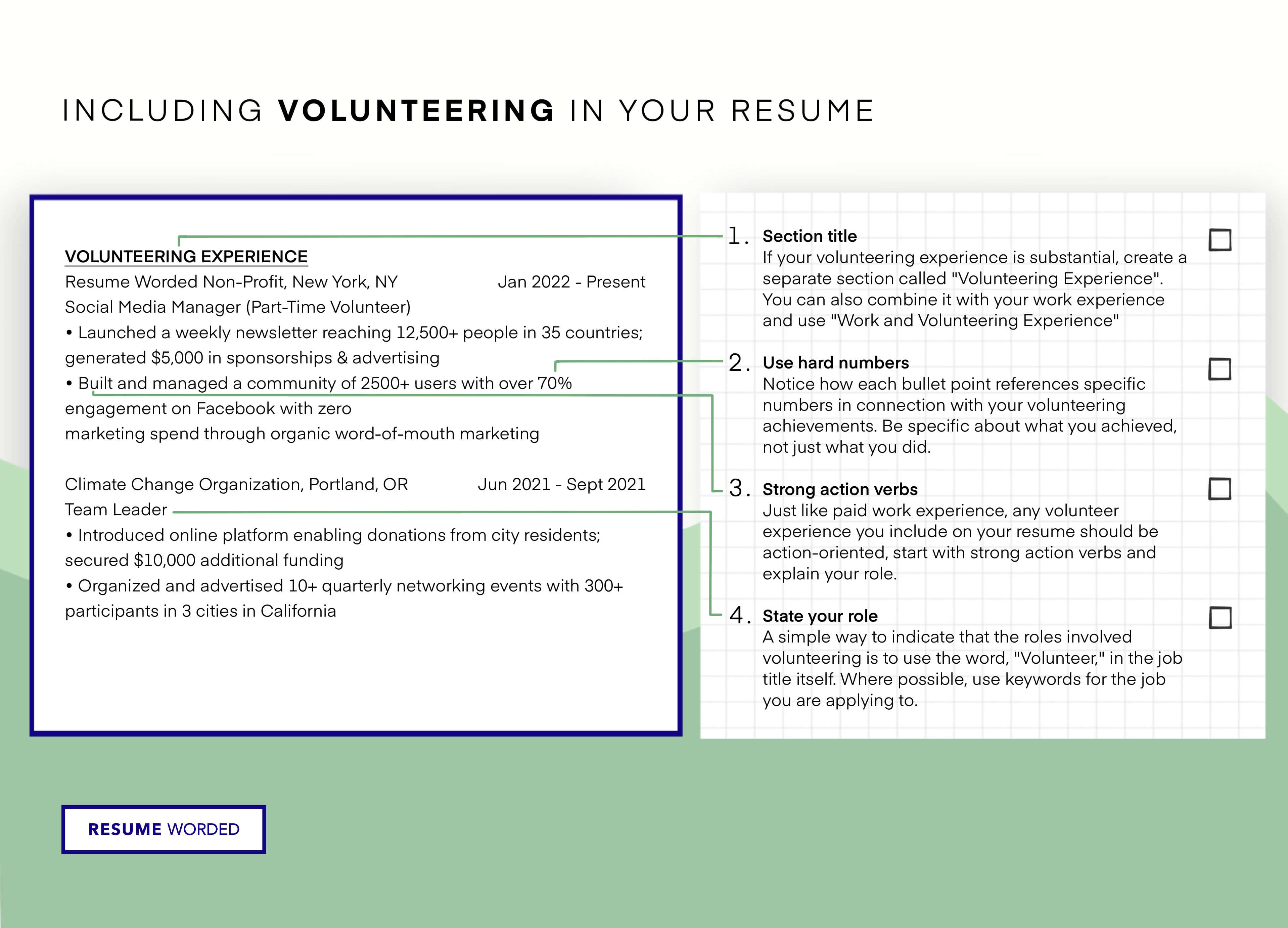

- Highlight your accomplishments in your work experience section, rather than just listing your responsibilities as a social work supervisor. Consider using bullet points to make your achievements stand out.

- Aim to fit your resume on one page, focusing on the most relevant and impressive experiences and achievements for the social work supervisor role. Consider using a clear and concise writing style to make the most of the space you have.

Choose from 10+ customizable social work supervisor resume templates

Choose from a variety of easy-to-use social work supervisor resume templates and get expert advice from Zippia’s AI resume writer along the way. With pre-approved templates, you can rest assured that the structure and format of your social work supervisor resume are top-notch. Choose a template with the colors, fonts, and text sizes that are appropriate for your industry.

Social Work Supervisor resume format and sections

1. Add contact information to your social work supervisor resume

Social Work Supervisor Resume Contact Information Example # 1

Dhruv Johnson

[email protected] | 333-111-2222 | www.linkedin.com/in/dhruv-johnson

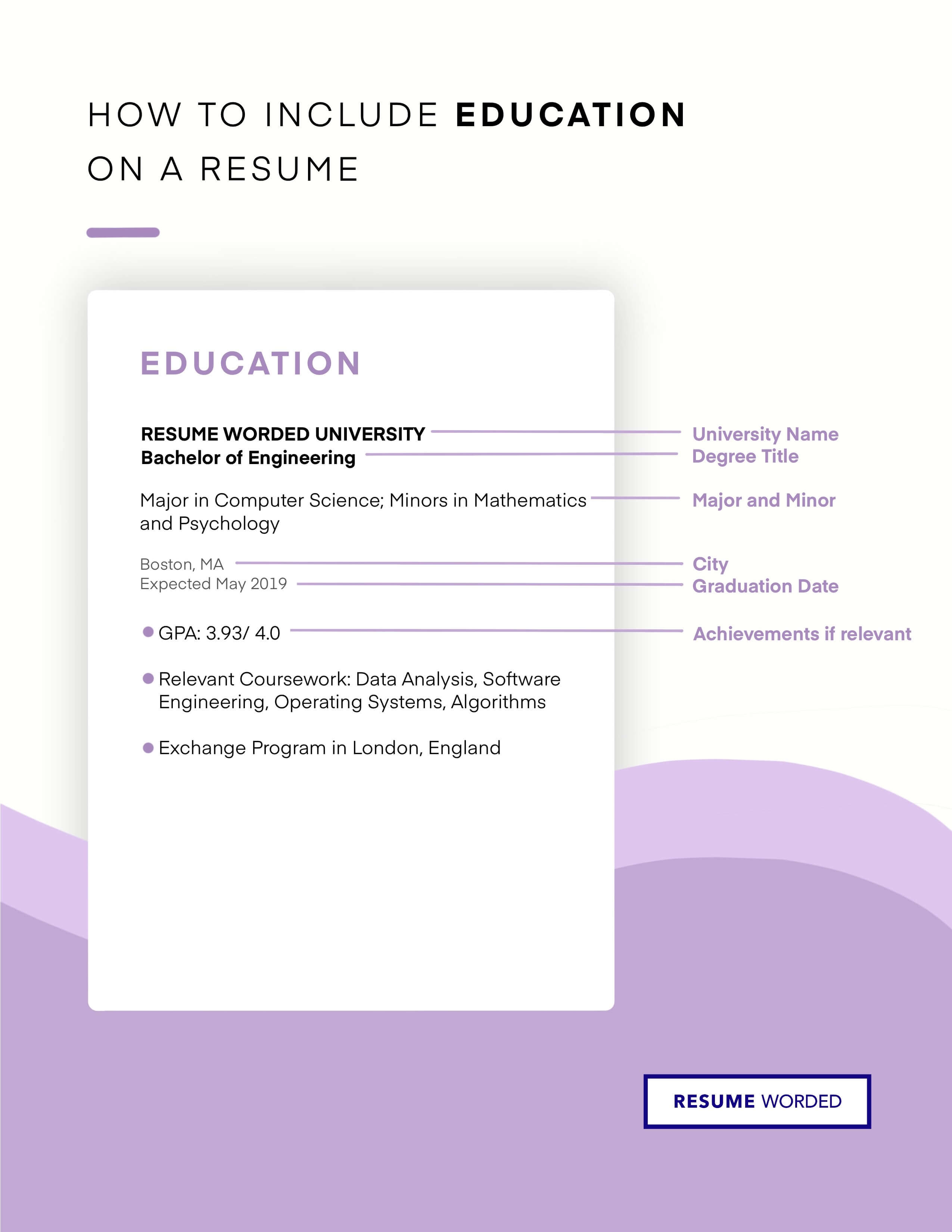

2. Add relevant education to your social work supervisor resume

Your resume's education section should include:

- The name of your school

- The date you graduated (Month Year or Year alone are both appropriate)

- The name of your degree

If you graduated more than 15 years ago, you should consider dropping your graduation date to avoid age discrimination.

Optional subsections for your education section include:

- Academic awards (Dean's List, Latin honors, etc.)

- GPA (if you're a recent graduate and your GPA was 3.5+)

- Extra certifications

- Academic projects (thesis, dissertation, etc.)

Other tips to consider when writing your education section include:

- If you're a recent graduate, you might opt to place your education section above your experience section

- The more work experience you get, the shorter your education section should be

- List your education in reverse chronological order, with your most recent and highest-ranking degrees first

- If you haven't graduated yet, you can add an "Expected graduation date" to the entry for that school


Social Work Supervisor Resume Relevant Education Example # 1

Bachelor's Degree in Social Work 2007 - 2010

University of North Carolina at Greensboro Greensboro, NC

Social Work Supervisor Resume Relevant Education Example # 2

Master's Degree in Social Work 2000 - 2001

3. Next, create a social work supervisor skills section on your resume

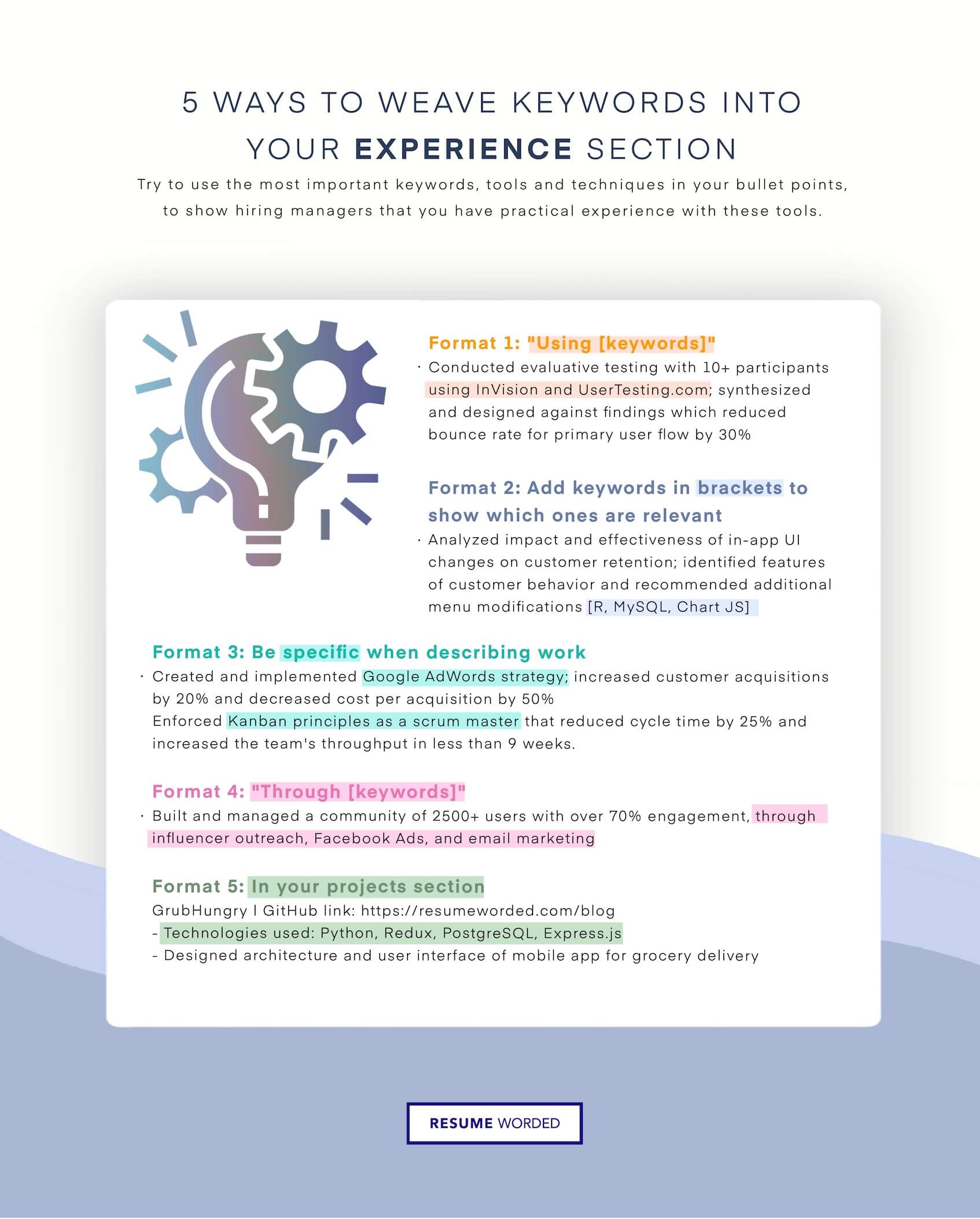

Your resume's skills section should include the most important keywords from the job description, as long as you actually have those skills. If you haven't started your job search yet, you can look over resumes to get an idea of what skills are the most important.

Here are some tips to keep in mind when writing your resume's skills section:

- Include 6-12 skills, in bullet point form

- List mostly hard skills; soft skills are hard to test

- Emphasize the skills that are most important for the job

Hard skills are generally more important to hiring managers because they relate to on-the-job knowledge and specific experience with a certain technology or process.

Soft skills are also valuable, as they're highly transferable and make you a great person to work alongside, but they're hard to prove on a resume.

Examples of skills to include on a social work supervisor resume

Clinical supervision refers to how practicing clinicians, such as social workers and nurses, receive professional and moral support from experienced colleagues. The practice aims to strengthen their ability to make sound decisions that protect the client's well-being.

Community resources are the people, places, and services in a community that improve residents' day-to-day lives. Individuals, sites or buildings, and public assistance programs can all count as community resources.

Protective services are services offered to vulnerable individuals (or their legal representatives) to protect them against potential abuse, violence, or neglect. They are meant to keep an individual safe and prevent them from falling victim to crime or exploitation. Such services include social casework, state-appointed witness protection, home care, legal assistance, and day care.

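DHS often refers to a state or county Department of Human Services in social work job postings. At the federal level, it also stands for the U.S. Department of Homeland Security, whose responsibilities include immigration enforcement.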

NYC stands for New York City.

Top Skills for a Social Work Supervisor

- Social Work, 31.2%

- Patients, 6.3%

- Child Welfare, 5.4%

- Other Skills, 51.6%

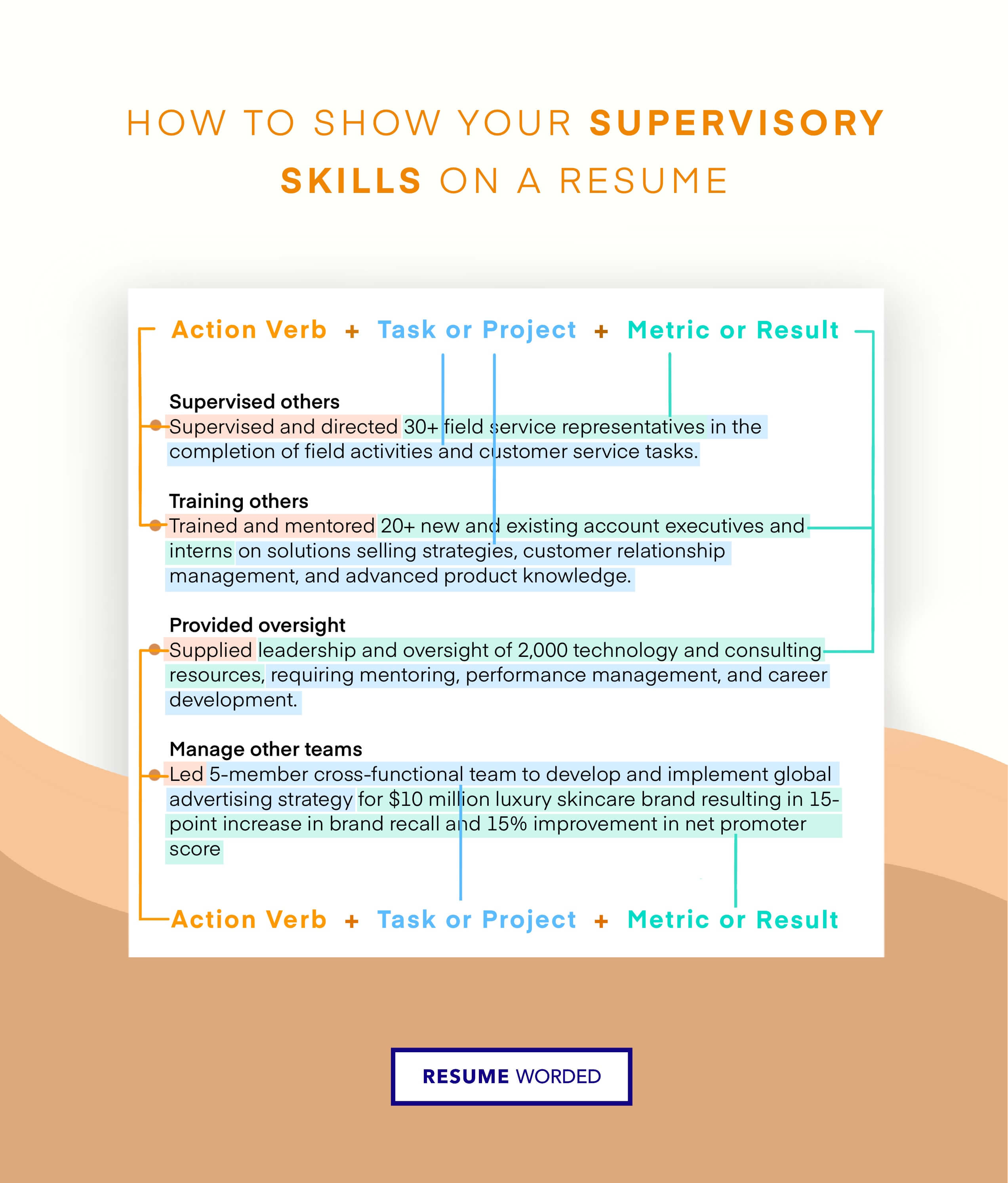

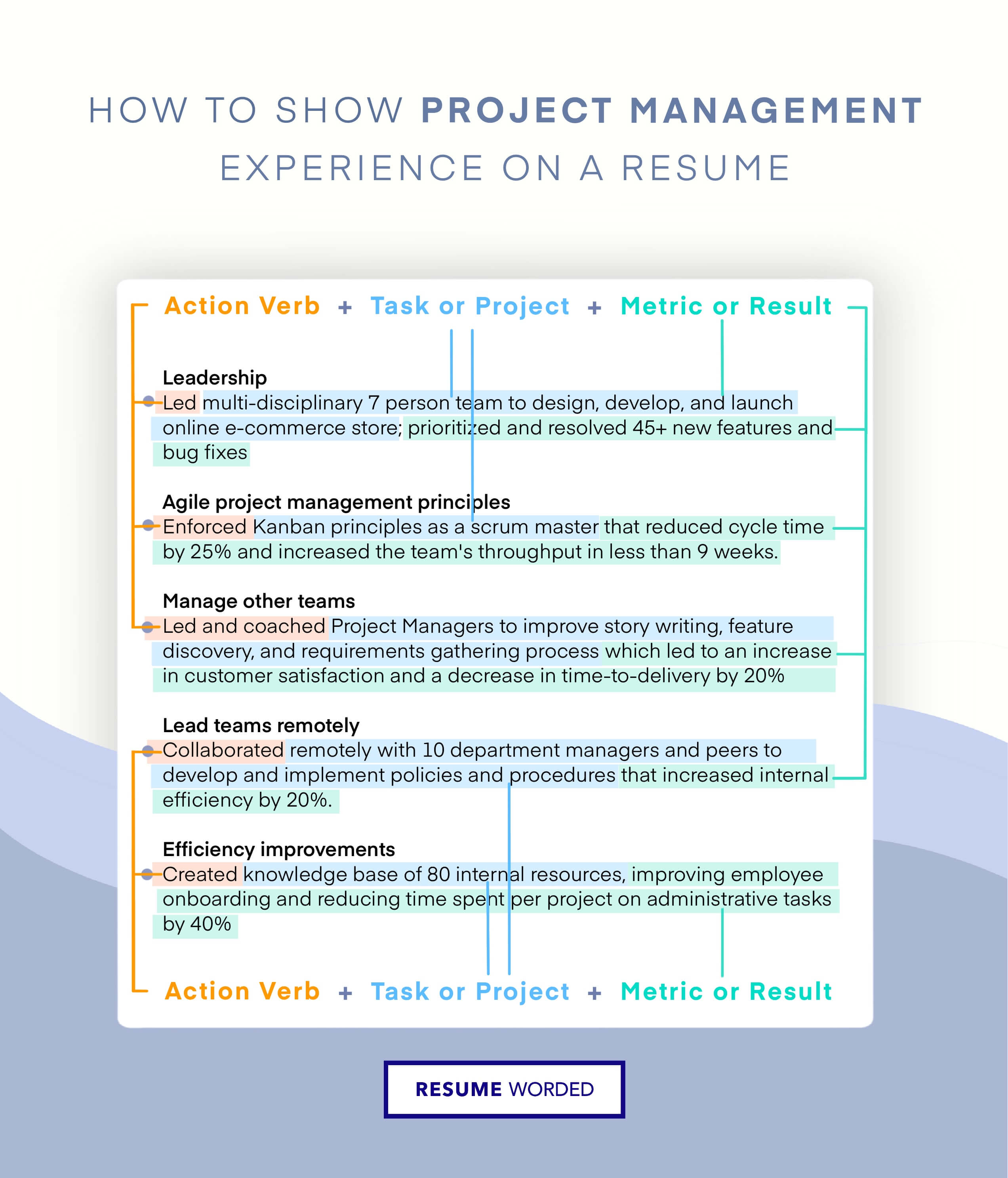

4. List your social work supervisor experience

The most important part of any resume for a social work supervisor is the experience section. Recruiters and hiring managers expect to see your experience listed in reverse chronological order, meaning that you should begin with your most recent experience and then work backwards.

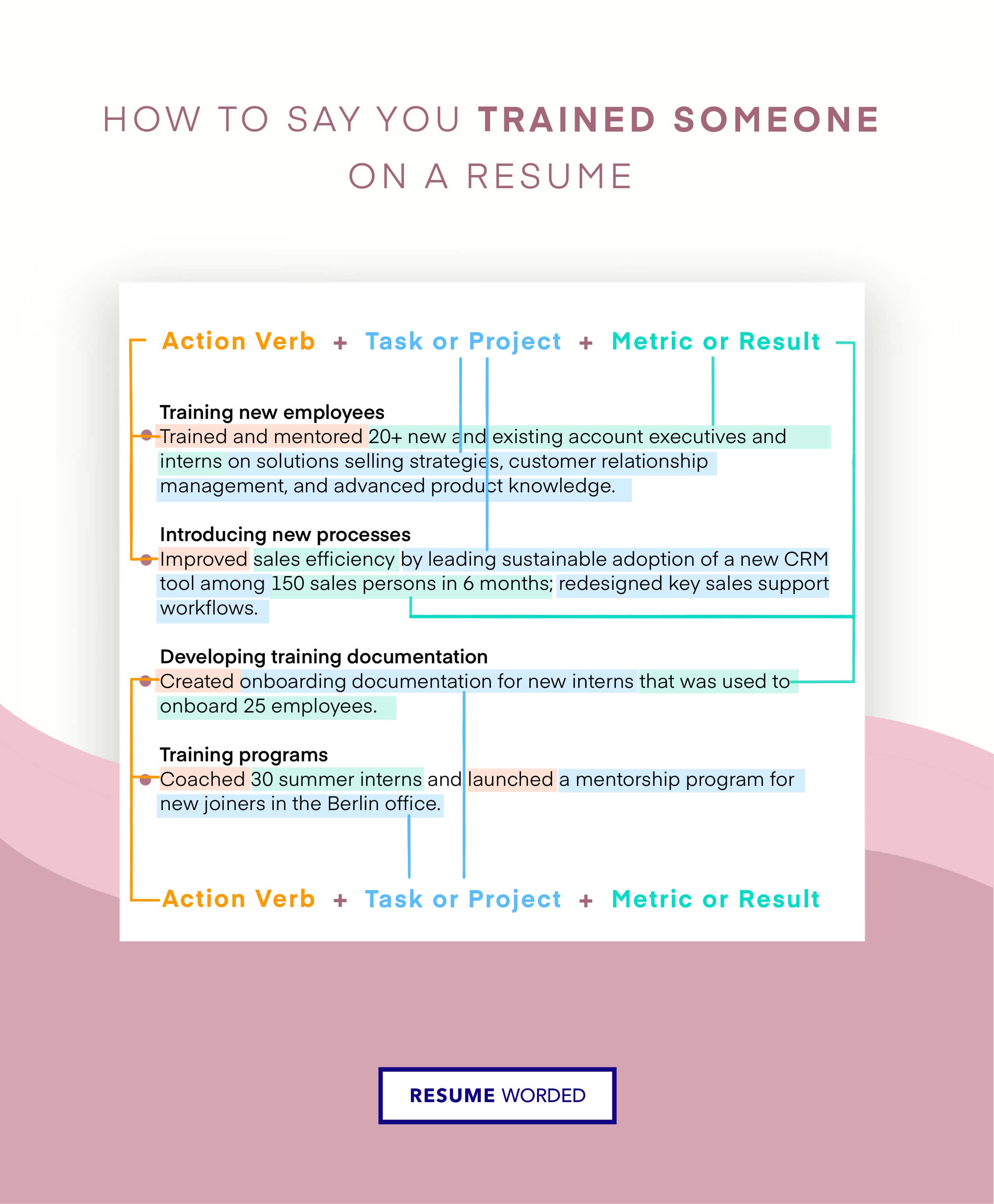

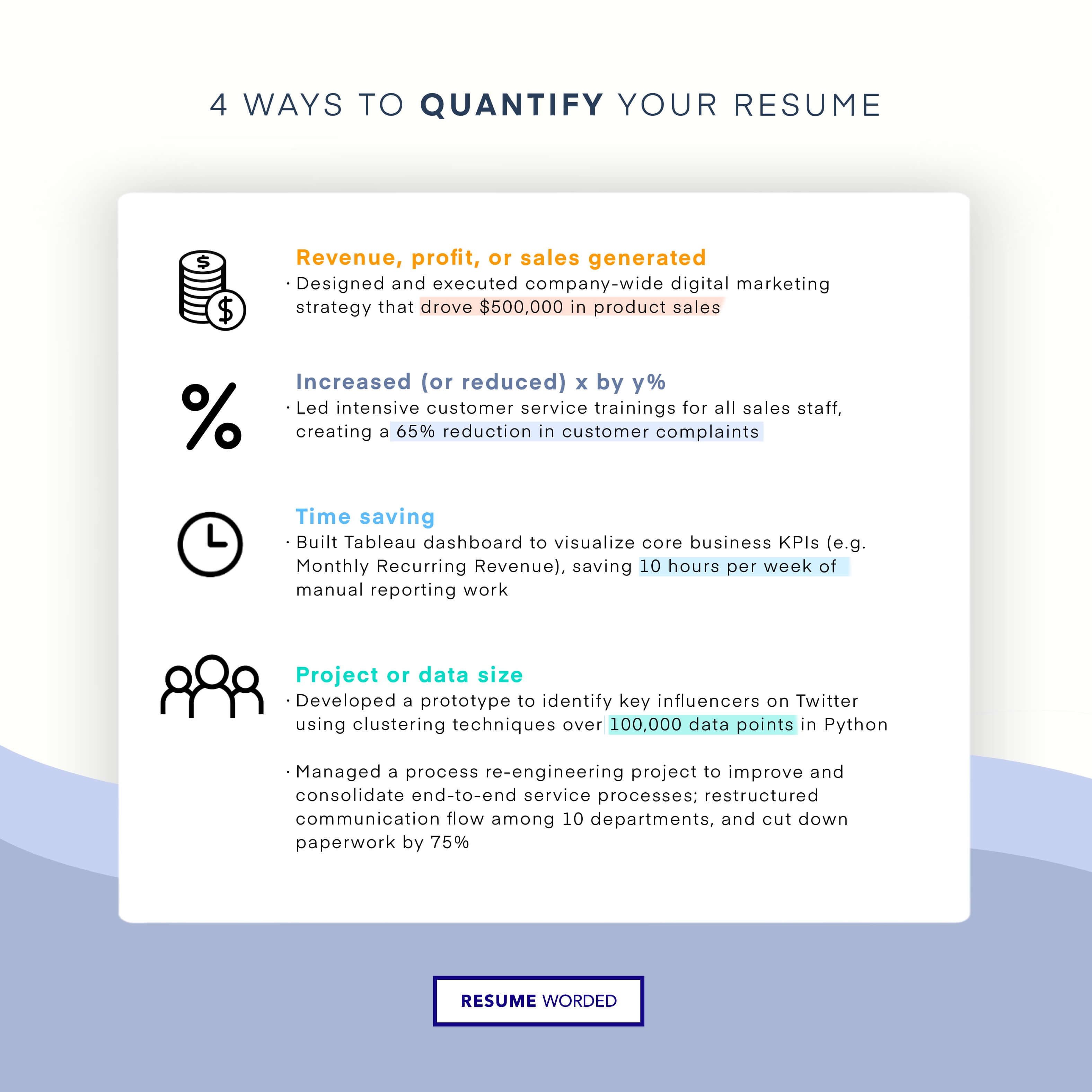

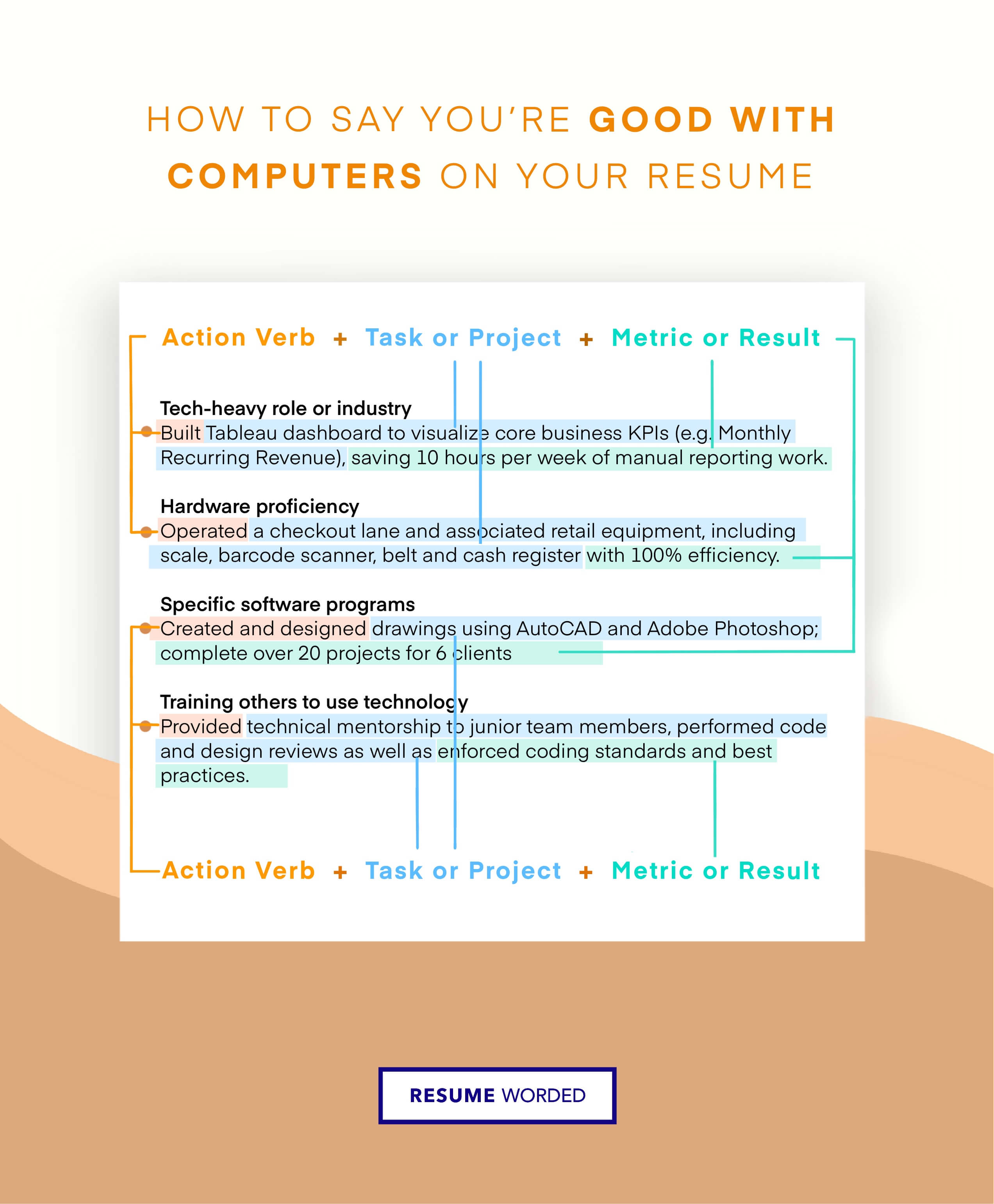

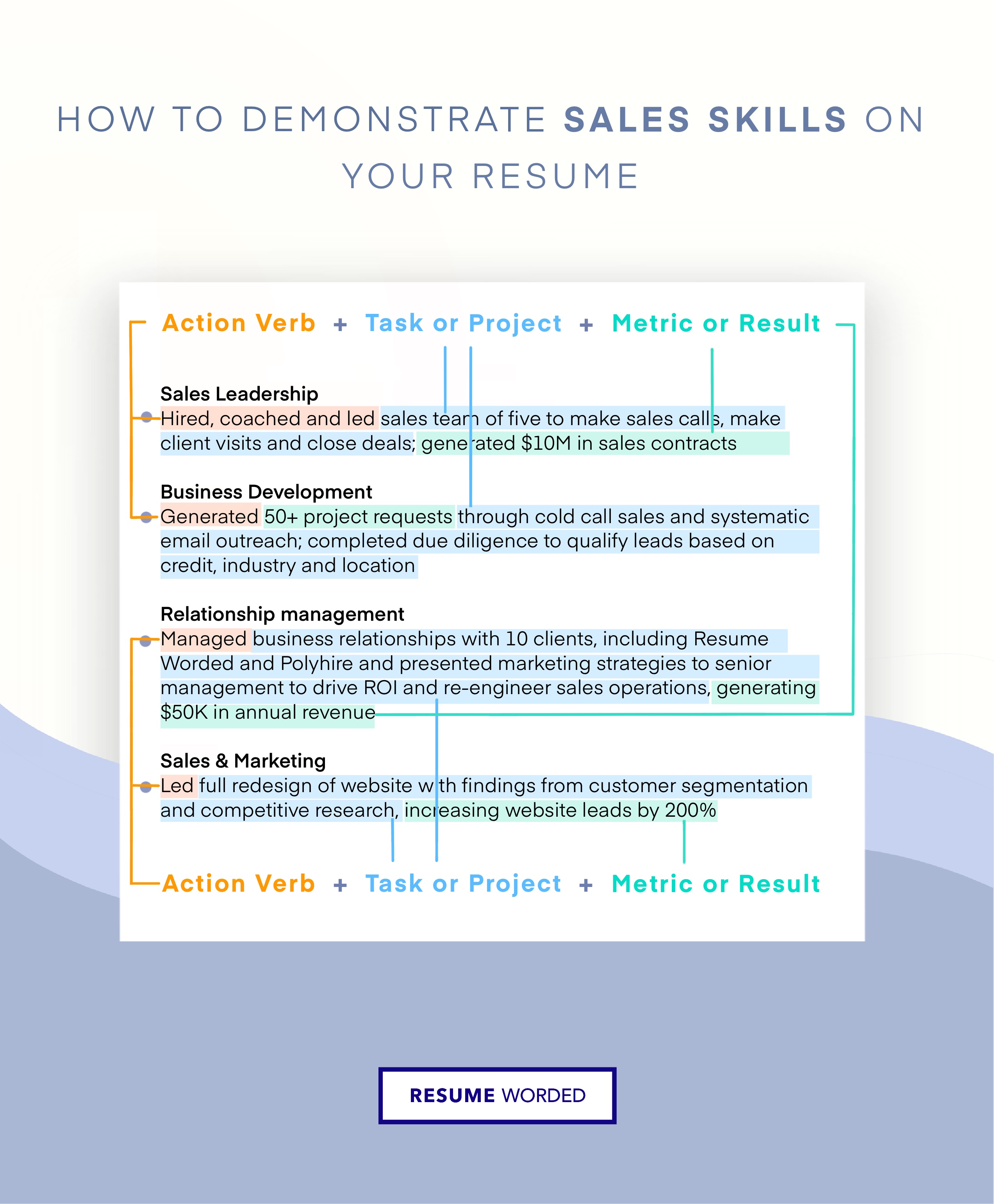

Don't just list your job duties below each job entry. Instead, make sure most of your bullet points discuss impressive achievements from your past positions. Whenever you can, use numbers to contextualize your accomplishments for the hiring manager reading your resume.

It's okay if you can't include exact percentages or dollar figures. Even without them, there's a big difference between saying "Managed a team of social work supervisors" and "Managed a team of 6 social work supervisors over a 9-month project."

Most importantly, make sure that the experience you include is relevant to the job you're applying for. Use the job description to ensure that each bullet point on your resume is appropriate and helpful.

- Provided and oversaw weekly clinical and administrative supervision to social work staff and MSW student interns.

- Provided direct supervision and guidance to student interns pursuing MSW degrees and supervised their field placement.

- Counseled parents and conducted parenting workshops.

- Ensured that Support Coordination Managers were following policies and procedures.

- Provided comprehensive case management to DCFS intact families by assessing family functioning.

- Provided intensive, in-home counseling to families that had one or more children at risk of out-of-home placement.

- Established client communication by co-facilitating group and individual therapy sessions.

- Provided services to low-risk families brought to the attention of DCFS because of abuse and/or neglect.

- Received training in Therapeutic Crisis Intervention, CPR/First Aid, and Basic Water Rescue.

- Provided evidence-based in-home counseling services to foster care and community children throughout Palm Beach County.

- Seasoned LCSW with experience in a fast-paced inpatient behavioral health setting.

- Marketed facility with area hospitals and discharge planners.

- Co-supervised and actively mentored matriculating Clinical Track MSW students from Rutgers University.

- Provided clinical supervision for LCSW social workers and MSW social work interns.

- Served on the hospital staff oncology committee.

- Provided individual and family counseling to children, adolescents, and adults in an outpatient setting.

- Fast-paced psychology office offering individual, couples, and family counseling.

- Maintained documentation and communication with outside workers and families.

- Supervised graduate interns for LPC and LMFT internships/licensure.

- Provided sexual abuse-specific group and individual counseling to children and adolescents using the Trauma-Focused Cognitive Behavioral Therapy (TF-CBT) treatment model.

5. Highlight social work supervisor certifications on your resume

Specific social work supervisor certifications can be a powerful tool to show employers you've developed the appropriate skills.

If you have any of these certifications, make sure to put them on your social work supervisor resume:

- Certification in Forensic Social Work (CFSW)

- Diplomate in Clinical Social Work (DCSW)

- Academy of Certified Social Workers Credential (ACSW)

- Certified Professional - Human Resource (IPMA-CP)

6. Finally, add a social work supervisor resume summary or objective statement

A resume summary statement consists of 1-3 sentences at the top of your social work supervisor resume that quickly summarizes who you are and what you have to offer. The summary statement should include your job title, years of experience (if it's 3+), and an impressive accomplishment, if you have space for it.

Remember to emphasize skills and experiences that feature in the job description.

Common social work supervisor resume skills

- Social Work

- Child Welfare

- Social Services

- Crisis Intervention

- Clinical Supervision

- Community Resources

- Protective Services

- Foster Care

- Substance Abuse

- Discharge Planning

- Rehabilitation

- Staff Development

- Mental Health

- Professional Development

- Child Abuse

- Psychosocial Assessments

- Group Therapy

- Clinical Services

- Service Delivery

- Mental Illness

- Intake Assessments

- Direct Services

- Direct Supervision

- Staff Training

- Community Agencies

- Staff Performance

- In-Service Training

- Performance Evaluations

- Crisis Management

- Group Supervision

- Court Reports

- Family Therapy

- Individual Supervision

- Administrative Supervision

- Program Development

- Individual Therapy

- Child Protective

- Therapeutic Services

- Law Enforcement

- Domestic Violence

- Early Intervention


Updated April 25, 2024

Editorial Staff

The Zippia Research Team has spent countless hours reviewing resumes, job postings, and government data to determine what goes into getting a job in each phase of life. Professional writers and data scientists comprise the Zippia Research Team.


Social Work Supervisor Resume Samples

A Social Work Supervisor is hired to oversee, monitor, and supervise the work of less-experienced social workers. Common tasks listed on a Social Work Supervisor resume include providing administrative and clinical support to social workers; ensuring that mission objectives are met; locating, securing, and implementing funding; setting up training sessions for social workers; and providing direct clinical supervision to social workers or staff members.

Skills that can strengthen this resume include excellent advocacy and coaching skills, familiarity with child development and family systems, familiarity with counseling and various therapy techniques, the ability to provide personalized service, and familiarity with clinical issues and stress management concepts. A bachelor's or master's degree in Social Work or Psychology is common among job seekers.


Social Work Supervisor Resume

Headline : A compassionate and intelligent clinical social worker who has worked in a wide variety of social work environments, as a direct service provider and therapist to families and individuals.

Skills : Individual, Family Therapy, CBT, EMR, Management.

Description :

- Provided comprehensive, intensive services to prevent foster care placement by reducing risk through individual, family, and group counseling.

- Counseled clients during crises, conferences, and when a worker was not available.

- Guided, directed, and advised workers in dealing with presenting problems and high-risk issues.

- Documented case progression and issues using various computerized data management systems.

- Regularly reviewed charts, notes, and case reports, providing edits, guidance, and direction.

- Participated in regular meetings with upper management for program planning and review.

- Wrote staff evaluations and detailed supervision summaries, per ACS requirements.

Social Work Supervisor II Resume

Headline : Proven leader able to work under challenging conditions, maintain and support professional staff, successfully raise funds, obtain grants, secure programs, and manage them with positive outcomes.

Skills : Microsoft Office, Management.

- Facilitated Mental Health and Intensive In-Home Assessments.

- Provided individual and group supervision to QMHPs, counselors, and license-eligible clinicians.

- Facilitated weekly Clinical Case Staffing and Treatment Team Meetings.

- Trained counselors/clinicians on various therapeutic interventions and treatment modalities.

- Reviewed and edited ISPs, progress notes, discharge summaries, Medicaid extensions, and other agency documentation.

- Monitored daily counselor/clinician activities, home visits, and case management.

- Provided clinical assessments and Trauma-Focused Cognitive Behavioral Therapy for Medicaid-eligible children and youth.

Social Work Supervisor/Analyst Resume

Headline : Highly qualified professional with strong interpersonal skills and the ability to work collaboratively as a team leader and team player. Demonstrates sound judgment, decision-making, and problem-solving skills.

Skills : Microsoft Office, Management.

- Provided sound clinical supervision to a diverse staff of licensed social workers and psychologists.

- Provided positive leadership to parent/community liaisons and family service workers.

- Effectively planned and presented professional development for staff and parenting workshops for parents with significant results.

- Prepared a community needs assessment of community populations and social issues.

- Coordinated federal and state education audits with no findings.

- Dramatically improved employee morale and productivity.

- Developed and published a Family Resource Directory, a Parent Orientation Handbook, and a Student Recruitment/Enrollment Guide.

Social Work Supervisor/Executive Resume

Headline : Supervises the work of a unit of social workers providing social services; plans, develops, and provides training on social service principles and programs.

Skills : Supervising, Management.

- Provided direct supervision for master's-level social workers. Received and maintained CPR certification.

- Responsible for monitoring the productivity and quality of work of those supervised.

- Represented the social work program on multidisciplinary committees within the prison.

- Maintained an active caseload for offenders in the mental health section of the prison.

- Provided individual and group counseling to offenders on the caseload.

- Responsible for providing crisis intervention for other offenders throughout the prison.

- Developed and taught social skills and anger management groups within the prison population.

Social Work Supervisor I Resume

Objective : A self-starter and team player with excellent organizational skills, supervisory skills, and initiative, as well as excellent written and verbal communication skills. Experienced in starting up programs.

Skills : Trained in AF-CBT, Solution-Focused Brief Therapy.

- Worked closely with Program Director on the general functioning of the Prevention Program.

- Provided ongoing supervision of caseworkers. Maintained intake, supervisory, and casework logs.

- Monitored staff to ensure that all FTCs, FASPs, and other required paperwork were completed in a timely fashion.

- Implemented and integrated the principles and procedures of Solution-Based Casework.

- Facilitated case consultation and offered support and guidance to ensure staff adhered to Solution-Based Casework.

- Integrated the practices of SBC into every supervisory session.

- Participated in the continued SBC training, coaching and SBC verification process.

Social Work Supervisor/Representative Resume

Summary : Experienced in helping people and eager to continue serving others in the community. Worked as a Rehabilitation Counselor, helping clients remove barriers to employment.

Skills : Management, MS-Office.

- Screened referrals for nursing home placement and personal care services.

- Petitioned the court for emergency placement hearings in severe situations and made placements for adults.

- Paid bills for eligible incoming clients. Initiated investigations of Adult Protective Service reports.

- Reported nursing homes to state offices and licensing agencies.

- Obtained a disposition for each investigation and implemented legal action if necessary.

- Provided individual and group therapy for homeless young adults diagnosed with HIV.

- Coordinated quarterly treatment plans and monthly and weekly progress notes for a caseload.

Headline : Seeking a position in a progressive company where initiative and accomplishments will be the basis for stability, advancement, and growth while broadening my knowledge.

Skills : Communication Skills, Management.

- Ensured compliance with local, state, and federal rules and regulations.

- Tracked programmatic performance targets and other reporting measures.

- Submitted reports to program funders as required. Made program modifications as directed by the Director of Foster Care Services.

- Attended all Interdisciplinary Team Meetings for Children's Services.

- Attended trial and final discharge conferences for foster care cases.

- Coordinated family enrichment activities as budgets allow.

- Recruited, appointed, developed, and evaluated staff for all Foster Care vacancies.

Lead Social Work Supervisor Resume

Summary : To establish a long-term career in a company where I may utilize my Social Work Supervisor professional skills and knowledge to be an effective Associate Program Manager and inspiration to those around me.

Skills : Supervising Skills, MS-Office.

- Performed psychosocial assessments of the child and family upon admission.

- Provided needed preventive services to youth and families, including, but not limited to, individual and family counseling and crisis intervention.

- Collaborated with other team members to ensure coordinated service and discharge planning.

- Conducted weekly therapeutic groups with adolescents. Oversaw day-to-day operations of the Basic Center Program and supervised a staff of six.

- Guaranteed that program files were complete and accurate.

- Ensured program goals and outcomes were met.

- Created several step-by-step procedures to help employees understand changes in the law.

Associate Social Work Supervisor Resume

Headline : Qualified, licensed social worker with several years of experience and specialized training in supervision.

Skills : Management, Communication Skills.

- Coordinated services and home visits for foster/birth parents.

- Monitored children's adjustment to foster homes.

- Managed unit work routines, distributed assignments, enforced schedules, and authorized the allocation of available resources.

- Attended and participated in clinical meetings, case management, and unit meetings.

- Interviewed and counseled adoptive foster parents on adoption procedures and policies.

- Provided comprehensive discharge planning for children returning to their parents.

- As Case Manager, monitored children's health, school performance, and adjustment in foster homes.

Sr. Social Work Supervisor Resume

Summary : Strong planning, organizing, and leadership skills. Proactive mindset with a consistent approach to handling daily operational matters of a social and administrative nature.

Skills : Computer Skills (Windows, MS Office), Self-motivated.

- Supervision of subordinate Social Workers and oversight of Agency policies and procedures.

- Management of the day-to-day operations of the section.

- Assignment and monitoring of cases for service delivery in compliance with statutory guidelines and regulations.

- Performance of employee evaluations, including recommendations for personnel and disciplinary actions.

- Development of statistical and qualitative reports.

- Maintenance of court-mandated caseload ratios.

- Reviewed written work of lower-level co-workers for grammar, content, and format.

Summary : To be considered for a part-time or per diem position where social work education will be utilized in communicating with clients and linking them with the proper resources.

- Assisted with screening and interviewing job applicants and social work students, and with orienting and training new employees and students.

- Coordinated scheduling and monitored work assignments for employees.

- Evaluated employees' job performance using the standards and format of the Performance Appraisal Review System.

- Monitored the casework services of supervisees.

- Provided consultation and supervision to supervisees.

- Provided basic social services to patients. Provided on-call coverage for supervisees.

- Received Social Worker of the Year twice and Employee of the Year once.


Resume Guide for Social Workers

Resumes act as the first point of contact between a social worker and his/her potential employer, so it’s critical for resume writers to detail their professional experience and qualifications as effectively as possible. Aspiring social work employees should use their resumes to showcase their certifications or licensures, plus their supervised clinical hours and work experience. Social work resumes should also prove that a candidate is qualified to work with clients and has experience interacting with the people who will be in their care. On top of that, job applicants should tailor their resumes to fit the position for which they are applying.

How to Write a Social Work Resume

- Do Your Research: It’s important for a resume to reflect the skills and work experience required by the position in question. Before drafting their social work resume, applicants should research their potential new employer to learn which skills it values, and tweak the resume as needed to highlight those skills.

- Write Down the Key Points: Start with an outline to organize the resume’s main points. Social work resumes should address a candidate’s strengths and weaknesses, and include details on how to overcome the specified weaknesses.

- Format Your Resume: These resumes should be professional and easy to read. Start with an online search for sample resume formats, choose an appropriate design, and go from there. Headlines should separate resume categories (work experience, education, and skills, for example). Arrange the categories to reflect the employer’s priorities.

Types of Resumes for Social Workers

Resumes provide an overview of a job candidate’s skills and experience, and there are many ways to organize this information. Some resumes highlight work history first, while others focus on educational credentials. Applicants can alter the format of their resume to best suit the position in question. For example, employers who work closely with the community might prefer to see a resume highlighting experience with outreach programs, while recruiters hiring for clinical positions might be more interested in educational background. The three primary resume formats are as follows:

- Reverse-Chronological: Reverse-chronological resumes start with the applicant’s most recent work experience, and work down to the oldest. This format is ideal for candidates with recent work experience in their desired field.

- Functional: Functional resumes focus primarily on practical experience and skills, and less on job history. This format works best for candidates who are changing careers, have taken time away from work, or are just entering the workforce. Volunteer and coursework experience may be included on a functional resume.

- Combination: A combination resume incorporates aspects of both the reverse-chronological and functional formats. Combination resumes typically lead with a description of qualifications and related skills, followed by a breakdown of work experience.

Required vs. Preferred Qualifications

In job postings, employers often list required qualifications and preferred qualifications separately. Required qualifications are deal-breakers when it comes to being considered for a position, while preferred qualifications give candidates some bonus points in the hiring process. Applicants who have some of the position’s preferred qualifications should make sure to include them on their resume, but even those without the preferred qualifications should still apply if they are otherwise qualified for the role.

What Should I Include on a Social Work Resume?

Education and Training: Social work careers require some level of higher education. Some social work positions only require a bachelor’s, but many call for a master’s degree. Any social work application must show that the candidate has met the minimum education requirements. It should also detail their prior training and certifications. Applicants who earned a high GPA in college may include this information on their resume, but in most cases GPA information isn’t necessary.

Experience: Applicants with relevant job experience should list it in reverse-chronological order, including the dates of employment, the number of clients seen, the types of treatment offered, and the general client population. Candidates should also break down each job’s specific duties. Use positive adjectives and action verbs to describe these duties, and highlight how they pertain to the position in question. If a job posting includes a list of required and preferred qualifications, address those points in this section. Use the job listing’s specific wording in case applications are reviewed by a resume-reading robot. Applicants lacking relevant job experience should follow the functional resume format to highlight their other skills and qualifications.

Skills: Applicants can devote a resume section to their personal skills, which exist outside of work or education experience. It’s important to note how those personal skills can apply to a career in social work. Candidates can use this section to note unique skills that might set their resume apart from the others.

Licensure, Certifications: Most social work positions require some form of certification or licensure, usually at the state level. In many cases, a license is required to qualify for social work positions, while certifications might be optional. Either way, it’s crucial to include all licenses and certifications when applying for a social work job, and beneficial to include their expiration and renewal dates, as well.

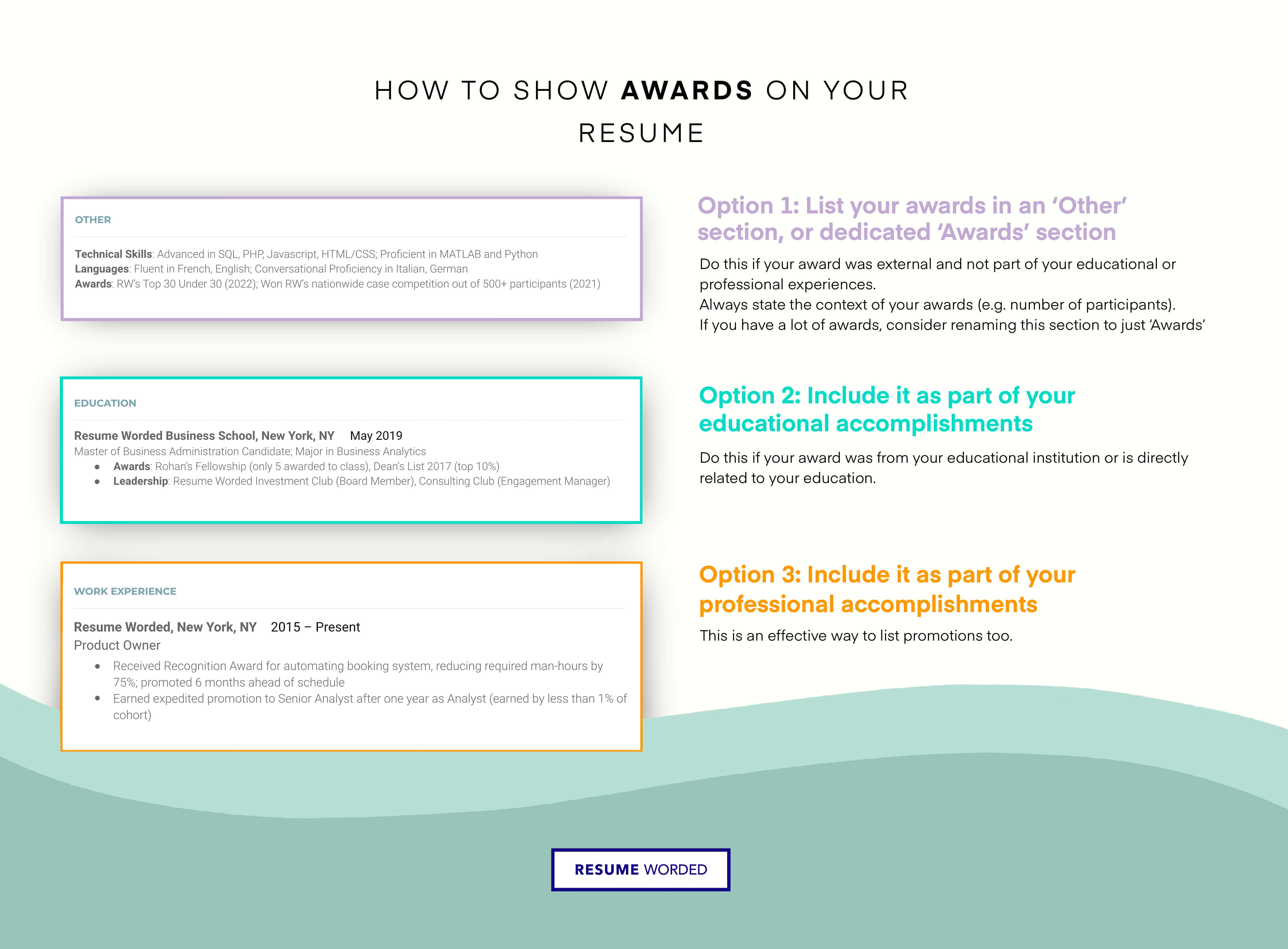

Awards, Accomplishments, Affiliations: Members of professional organizations should list their affiliations in this section. Applicants should also list their awards or accomplishments that relate to the job in question.

Volunteer Work: Hiring managers take notice of candidates who donate their time, especially if they volunteer in the same field as their desired career. Applicants with social work volunteer experience would boost their resume by including information on their duties and responsibilities. Applicants who lack professional social work experience might include volunteer social work on their resume instead.


What Should I Put on My Social Work Resume If I Don’t Have Any Experience?

Some social work careers require a minimum of a bachelor’s degree, meaning many applicants have only education, internships, and volunteer work under their belt. Entry-level social work resumes should include educational credentials, certificates, and licenses. Hiring managers might also take interest in unrelated work experience — for example, retail or service industry experience could indicate an ability to work well in high-pressure situations.

What Is a Resume-Reading Robot?

What Is an ATS?

Applicant tracking systems (ATS) aim to streamline the recruitment process when employers receive an influx of mixed-quality applications. An ATS simplifies the candidate-screening process by scanning resumes for keywords and phrases that match the job description in question. This helps narrow down the application pile for hiring managers. Some ATS systems are even advanced enough to search a candidate’s social media profiles for relevant information.

Tips for Outsmarting an ATS

- Simple Headers: Chances are that any ATS will be looking for straightforward terms such as “education,” “skills,” and “professional experience.” Use these terms to boost the chances that a bot will store your information for employer review.

- Clean Format: ATS systems are more likely to select resumes with simple layouts and basic fonts. Some bots automatically reject certain fonts or added graphics. To be safe, avoid graphics and utilize common fonts such as Arial, Tahoma, or Verdana.

- Keywords/Phrases: Job postings often include descriptions of duties and applicant qualifications. These blurbs may use the same terms the employer’s ATS system is looking for, so work them into your resume when possible.

- Industry-Specific Jargon: Research terminology that’s commonly used in your desired position and work it into your resume. Industry jargon may make its way into an ATS system’s screening process.

Resume Writing Tips for Social Workers

- Tailor Your Resume: In applying to any job, it’s important to list your most relevant skills and experience first, to tailor your resume to the position in question.

- Save Your Resume Under a Professional Name: To play it safe and keep things professional, follow the format: “Firstlast_specialty_resume.doc” when you save your resume. This format makes sure an employer can clearly make out that the document in question is your resume.

- Make It Easy to Read: Social work resumes should stick to basic fonts such as Arial, Verdana, and Tahoma. These fonts are sans-serif and easily readable.

- Include a Cover Letter: Cover letters offer applicants a chance to express their personality and writing skills, and allow them to explain how seemingly irrelevant work experience might translate to a career in social work.

- Keep It to One Page: Hiring managers prefer resumes that are short and sweet, especially if they have received a pile of applications. If you can’t fit all your relevant work experience on one page, consider including only the most valuable experience. Resumes with a clinical focus may be up to two pages long, since clinical positions usually call for more extensive experience.

Common Mistakes Social Workers Make on Their Resumes

- Typos: Proofread your resume for typos and grammatical errors, which could come off as laziness or lack of attention to detail.

- Including Personal Information: Remember to include an email address and phone number so the hiring manager can contact you if necessary. A home address, however, might not be necessary.

- Including Salary Information: It’s generally inadvisable to include current or previous salaries on a resume. Prospective employers may ask for salary information later in the hiring process.

- Using Nicknames: Nicknames are considered unprofessional on a resume. Employees may use them after the hiring process, but during recruitment, all documents (including the resume) should use their legal name.

- Using an Unprofessional Email Address: Professional email addresses should consist of your first and last name or initials.

- First-Person Pronouns: Avoid overusing first-person pronouns, since the nature of a resume assumes all information included pertains to the candidate in question.

- Unprofessional Voicemail: If you include your phone number on your resume, make sure your outgoing voicemail message is professional and includes your full name.

Social Work Resume Samples

Applicants can use online resources as a tool for formatting their resumes. Make sure to choose a template that highlights your most relevant experience in the most accessible way possible. The examples below may help applicants who are struggling for ideas:

Sample 1: This social work resume sample is in reverse-chronological format and includes work experience and education. It also opens with a short summary.

Sample 2: The summary provided in this resume focuses more on the candidate’s goals than on their skills. This social work resume sample fuses the functional format with the reverse-chronological format, highlighting both skills and work experience.

Sample 3: The resume in this sample features certifications and licensure prominently, placing key skills at the bottom. This format is ideal for highlighting verifiable credentials, work experience, and education.


Resume Worded | Resume Skills (© 2024 Resume Worded)

Skill Profile: Social Work Supervisor

Improve your resume's success rate by using these social work supervisor skills and keywords.

Social Work Supervisor Resume Keywords and Skills (Hard Skills)

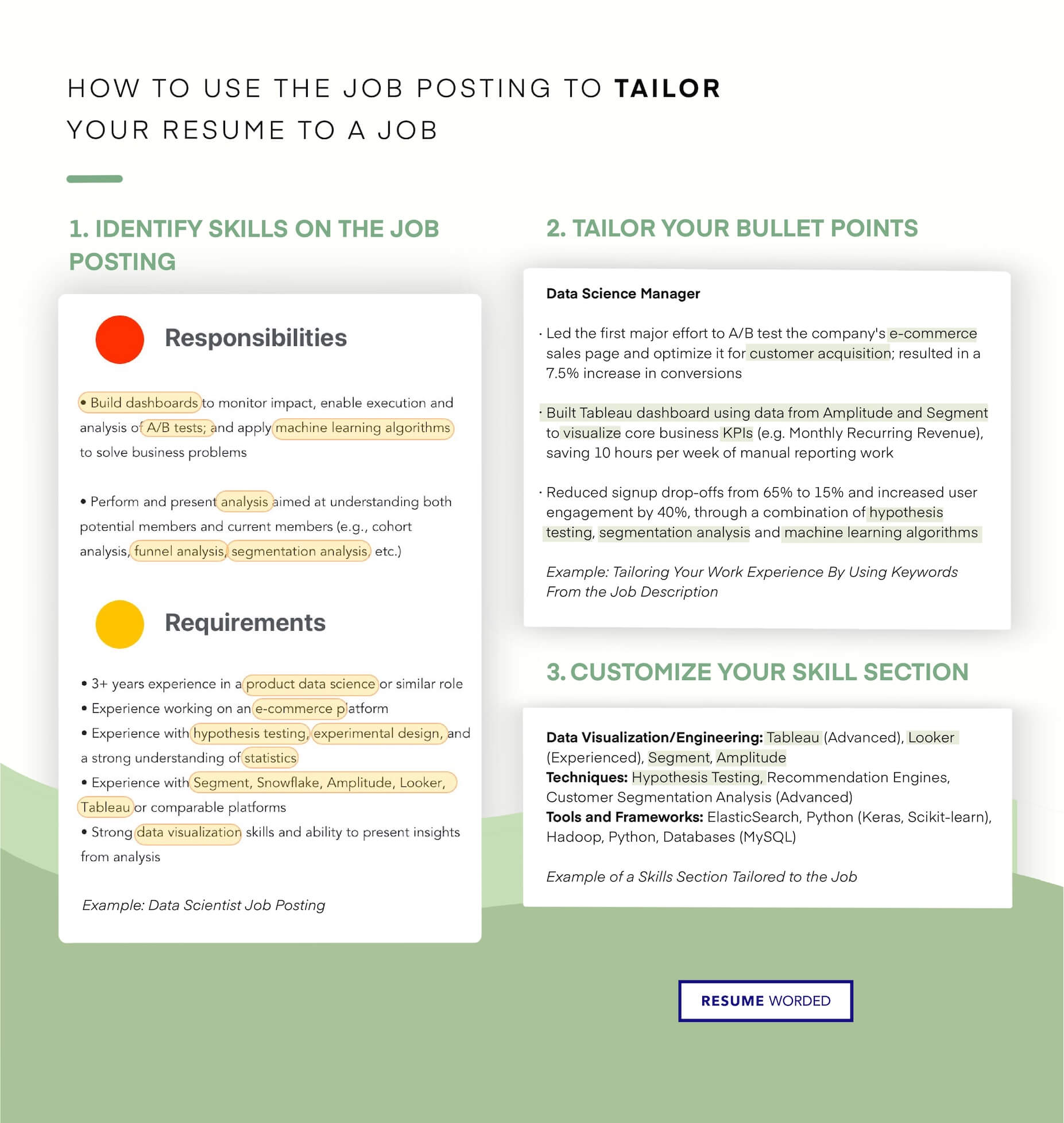

Here are the keywords and skills that appear most frequently on recent Social Work Supervisor job postings. In other words, these are the skills most sought after by recruiters and hiring managers. See the sample resume examples below for how to include them on your resume. Remember that every job is different: instead of including all of these keywords on your resume, identify those that are most relevant to the job you're applying to.

- Social Services

- Case Management

- Crisis Intervention

- Mental Health

- Psychotherapy

- Clinical Supervision

- Group Therapy


- Mental Health Counseling

- Child Welfare

- Behavioral Health

- Program Development

- Social Work

- Community Outreach

- Family Therapy

- Cognitive Behavioral Therapy (CBT)

- Nonprofit Organizations

- Motivational Interviewing

- Psychosocial

- Medical Social Work

Resume Skills: Therapeutic Techniques

- Cognitive Behavioral Therapy

- Psycho-Education

- Cognitive Behavioural Therapy

- Mindfulness

- Dialectical Behavior Therapy

- Trauma-Informed Care


Resume Skills: Digital Outreach

- Digital marketing

- Social Media Management

- CRM software (HubSpot, Salesforce)

- A/B Testing

- Google Analytics

Resume Skills: Community Organization

- Event planning

- Public Speaking

- Coalition building

- Program management

- Fundraising

Resume Skills: Policy & Research

- Public policy analysis

- Survey design

- Advocacy strategies

- Data management and analysis (SPSS, Excel)

- Report Writing

- Legislative and public policy research

Resume Skills: Languages

- Spanish (Fluent)

- American Sign Language

- Sign Language

Resume Skills: Software & Tools

- MS Office Suite

- Google Workspace

- Electronic Health Records (EHRs)

- Salesforce CRM

Resume Skills: Regulations & Frameworks

- HIPAA Compliance

- Child Protective Services (CPS)

- IEP Procedures

Resume Skills: Supervision

- Programme Development

- Leadership Development

- Conflict Resolution

- Decision Making

- Quality Management

Resume Skills: Software

- Microsoft Office

- Electronic Health Records

- Project Management Software

- Virtual Meeting Platforms

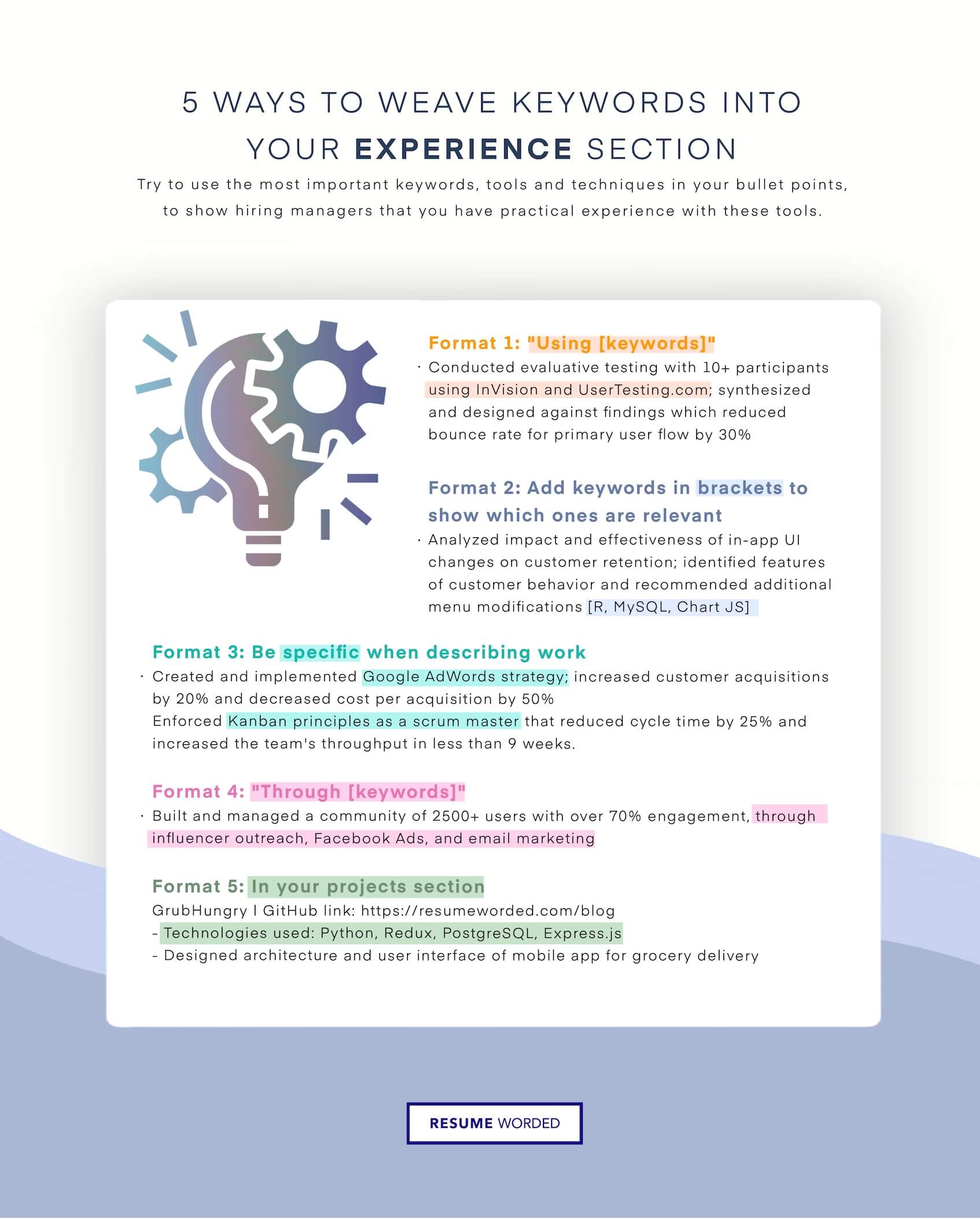

Where on my resume do I add these buzzwords? Add keywords directly into your resume's work experiences, education, or projects. Alternatively, you can include a Skills section that lists your technical skills in order of proficiency. Only include these technical skills or keywords on your resume if you actually have experience with them.


Sample Social Work Supervisor Resume Examples: How To Include These Skills

Add keywords directly into your resume's work experiences, education, or skills section, as shown in the examples below. Use these examples as inspiration.


How do I add skills to a Social Work Supervisor resume?

Go through the Social Work Supervisor posting you're applying to, and identify hard skills the company is looking for. For example, skills like Psychotherapy, Group Therapy and Mental Health Counseling are possible skills. These are skills you should try to include on your resume.

Add other common skills from your industry - such as Crisis Intervention, Mental Health and Case Management - into your resume if they're relevant.

Incorporate skills - like Psychosocial, Social Work and Program Development - into your work experience too. This shows hiring managers that you have practical experience with these tools, techniques and skills.

Specifically on Social Work Supervisor resumes, you should highlight examples of where you supervised others. Recruiters want to see whether you have experience supervising and overseeing teams of similar size.

Try to add the exact job title, Social Work Supervisor, somewhere into your resume to get past resume screeners. See the infographic for how to do this.

Word Cloud for Social Work Supervisor Skills & Keywords

The following word cloud highlights the most popular keywords that appear on Social Work Supervisor job descriptions. The bigger the word, the more frequently it shows up on employers' job postings. If you have experience with these keywords, include them on your resume.

Social Work Supervisor Resume Templates

Here are examples of proven resumes in related jobs and industries, approved by experienced hiring managers. Use them as inspiration when you're writing your own resume. You can even download and edit the resume template in Google Docs.

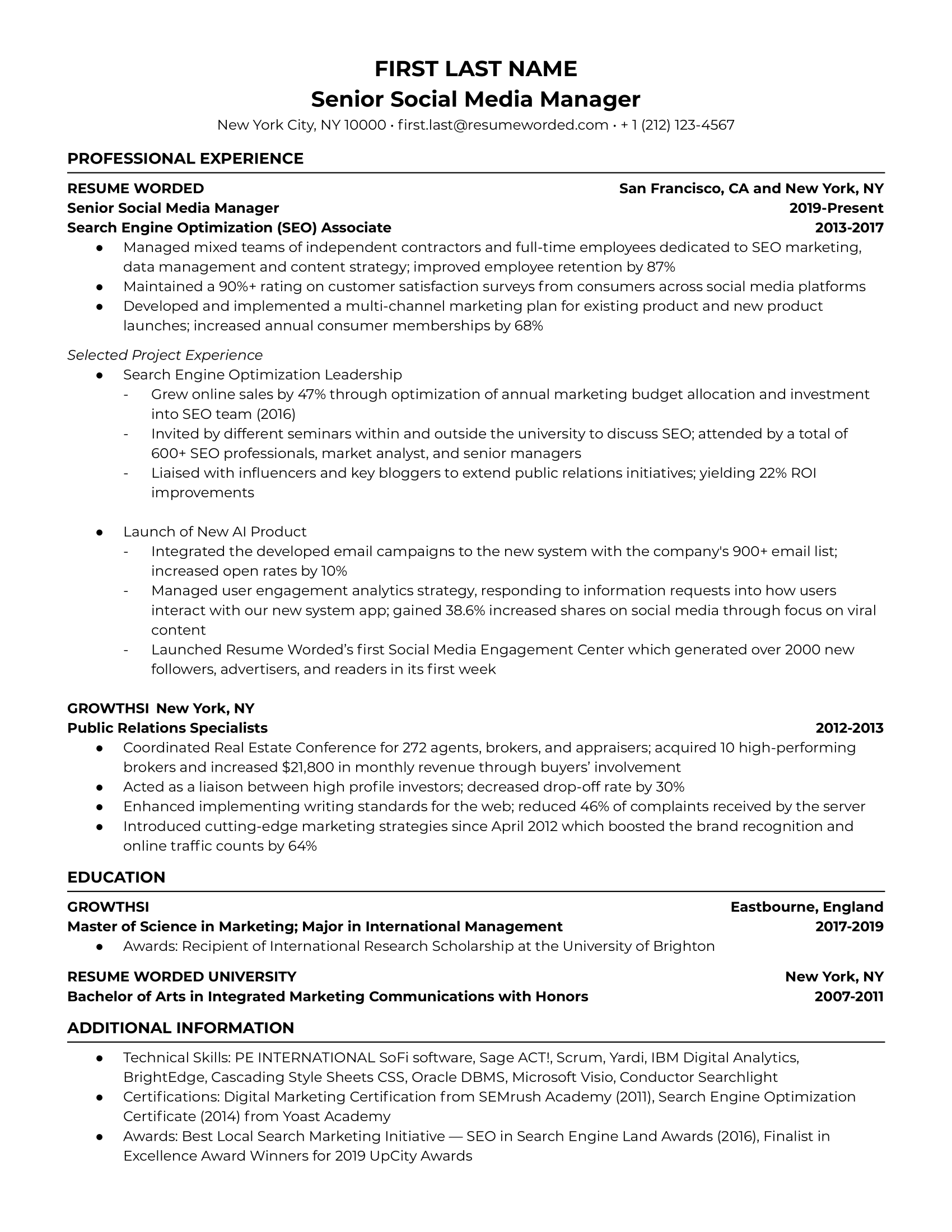

Resume Example Senior Social Media Manager


As a senior social media manager, you’ll likely be leading and managing a team of employees on top of your social media projects. The applicant notes that they “Improved employee retention by 87%” while managing both contractors and full-time employees. This shows the hiring manager that the applicant has expertise in both social media and management.

Tips on why this template works

Selected social media project experience

If you’re looking for a senior-level role, most other applicants will have plenty of experience in the social media space, just like you. This template sets the applicant apart by focusing on select projects where their skills stand out. In this example, the template details their leadership in boosting online sales with SEO and highlights a successful product launch. When selecting project experience, we suggest choosing projects where your skills and experience align closely with the keywords and responsibilities outlined in the job posting.

Skills, certifications and awards in social media

Managing social media campaigns at the senior level requires deep expertise and technical skills. This resume lists the applicant’s skills and certifications in various social media academies and analytics software, demonstrating their extensive knowledge in this area.

Resume Example Entry Level Social Media Manager

Many students seeking their first entry level job may feel like they have little to no experience in their desired industry, or that they don’t stand a chance among the sea of applicants. If you feel that way, don’t worry -- everyone starts somewhere. Having a strong academic history or experience in relevant projects can effectively demonstrate your strong analytical skills, or even just an interest in social media that many recruiters often look for at the entry level.

Previous experience in social media management

Even though this template doesn’t detail an extensive work history, the applicant demonstrates their interest in social media with their previous work as a social media manager intern. Additionally, their other activities and experience, such as their social media work in their community project, showcase their ability and understanding of organic social media growth.

Education in related fields

When applying to your first entry level position, leading with your educational history and highlighting relevant coursework, such as data analysis, can communicate those abilities you’ve developed outside of your work history to your recruiter.

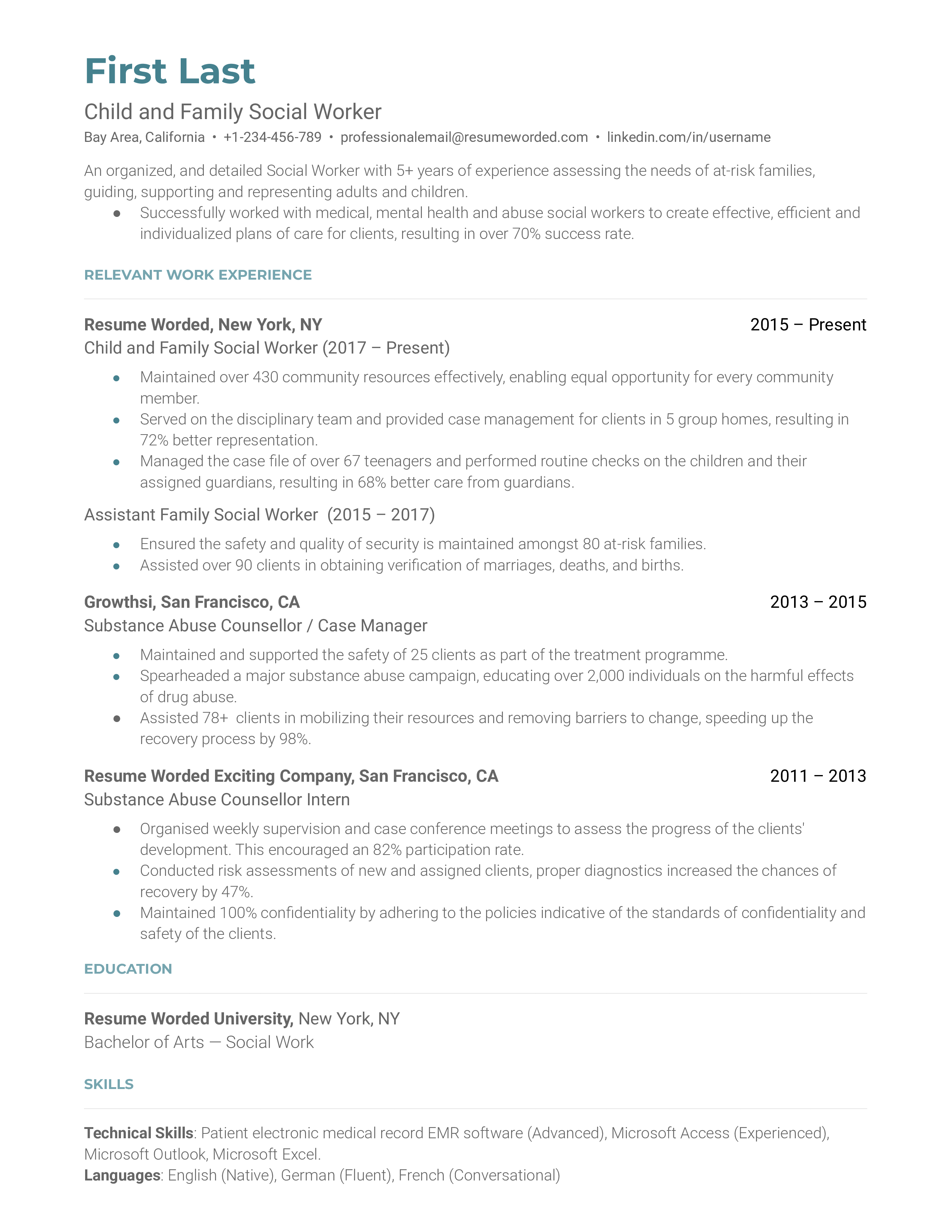

Resume Example Child and Family Social Worker

A child and family social worker addresses cases of abuse and neglect and places children in safe environments. The role involves arranging adoptions and foster homes, assisting families through these processes, and reuniting separated families and children. A skilled social worker keeps track of case files and notes improvements accordingly, while understanding their clients’ needs and efficiently resolving their issues. This resume illustrates a social worker with five years of experience. It lists experience case by case with a success rate, a B.A. in social work, and the responsibilities of each role: treatment programs, maintaining resources, and managing case files.

Include metrics like participant and case success rates.

Notice how this resume highlights the number of cases handled as well as participation and success rates. You should always include this information to show recruiters how efficiently you manage simultaneous case files and how your work ethic is reflected in each case’s success rate.

Highlight the specifics of each role.

Notice how this resume explains each responsibility in detail - “organized meetings / maintained community resources”. It is important that you list those to show that you understand the dynamics and nature of the work.

Resume Example Social Work Teacher

A social work teacher’s role can range from specializing in social work and holding academic positions to instructing teachers and officials on their important responsibilities. This is done by training them (or students) on important societal, cultural, or economic factors in education, or on how to identify instances of abuse. A social work teacher focuses mainly on training people to incorporate social work into their professions or their lives. This resume illustrates an experienced social work teacher. It lists their social work, their community development roles, and their teaching responsibilities, built on a strong foundation of a B.S. in Psychology with a minor in Counseling. The resume continues role by role, and the volunteer work it lists shows recruiters the candidate’s initiative and their progression from planning to action.

Define the goals of each social assistance role.

Notice how this resume highlights the reason behind each initiative - “to prevent and resolve issues related to human behavior and relationships”. You should list these for recruiters to see your know-how and immersion in the work.

List your tools and techniques.

Notice how this resume lists its tools and techniques. This shows recruiters your methodological range. You should include all the methods you use to achieve your goals.

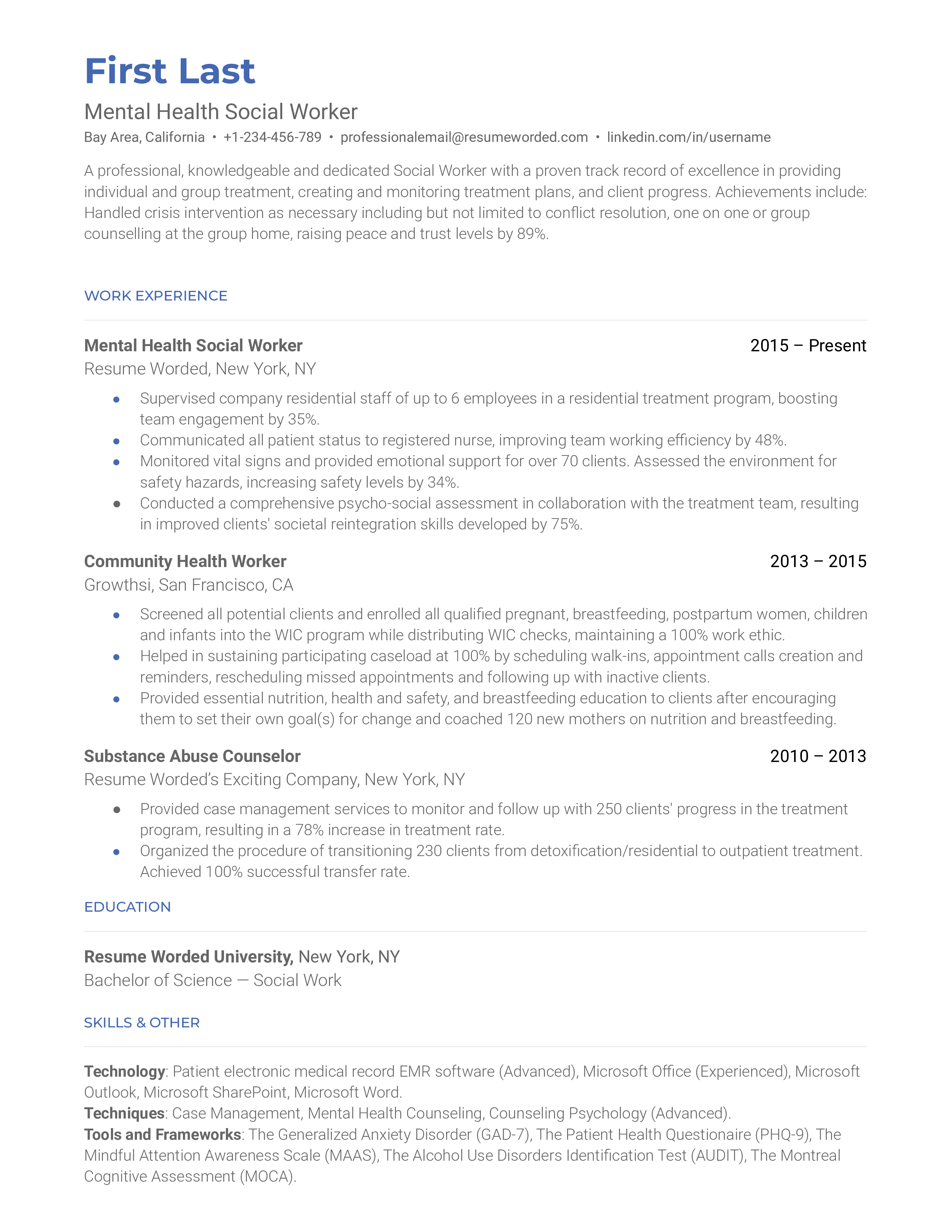

Resume Example Mental Health Social Worker

A mental health social worker is mainly responsible for identifying, treating, and preventing mental and behavioral issues. Such a social worker cultivates relationships with clients, provides coping tools, addresses urgent needs, and offers support. This resume belongs to a mental health social worker. It shows a strong foundation in the form of a B.S. in social work, with highlights in individual and group treatment, conflict resolution, and crisis intervention, along with sufficient experience in counseling and community work. Overall, the resume portrays diverse work experience and a record of success in client treatment and progress.

Underscore client-success results.

Notice how this resume underscores client relations and societal reintegration. You should list your involvement in clients’ success in overcoming their mental health issues to show recruiters your capacity to help, your empathy, and your ability to improve people’s lives.

Highlight the social categories of your clients.

Notice how this resume lists the diverse client groups the candidate has worked with: children, infants, and pregnant women. This shows the wide range of interpersonal skills and adaptability needed for this role.

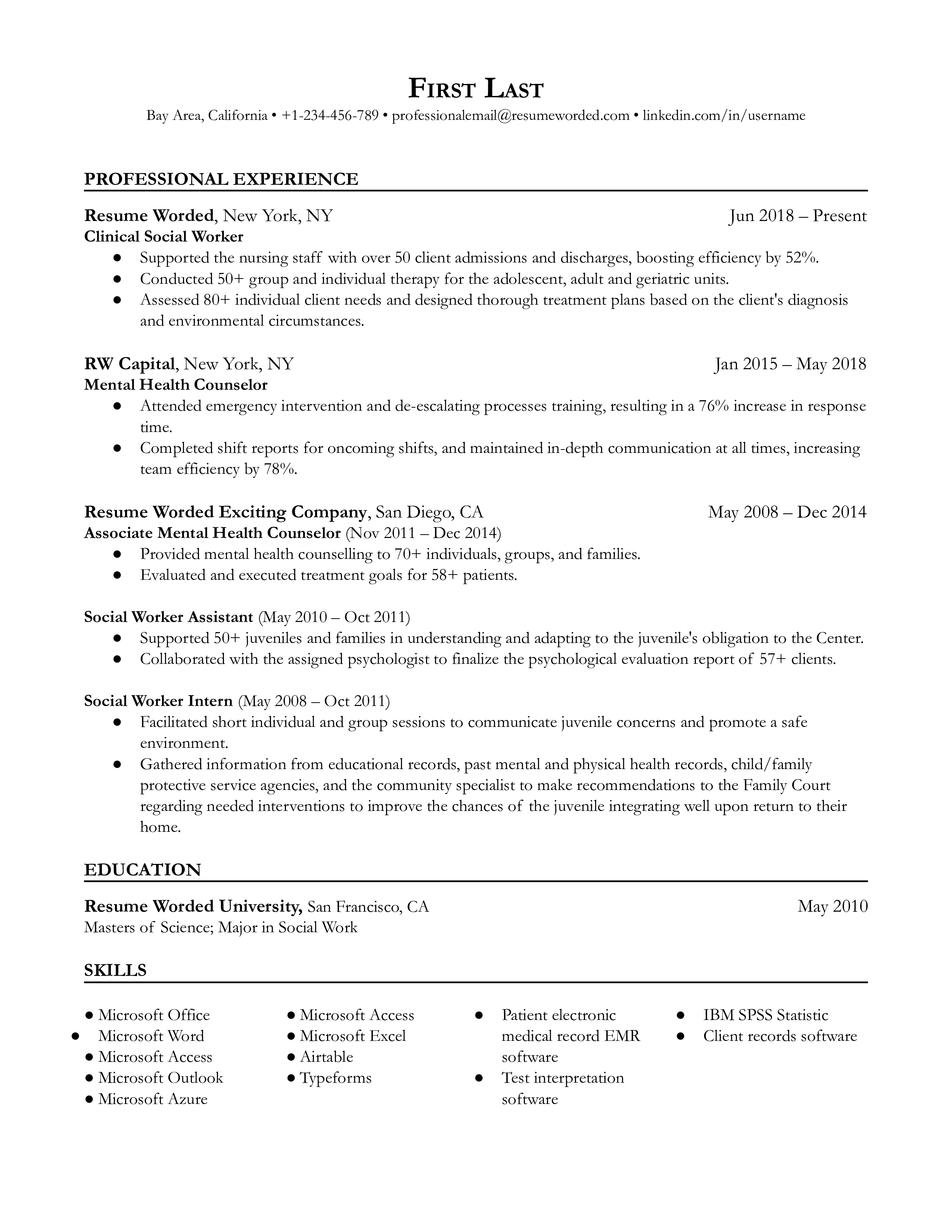

Resume Example Clinical Social Worker

A clinical social worker focuses on the assessment, diagnosis, and treatment of mental and behavioral issues. Their work ranges from individual to group therapy. The role may seem similar to psychology, but it is more complex in that it deals with socioeconomic, cultural, and familial impacts on people. This resume portrays a highly qualified social worker with several years of experience and an M.A. in Science. It begins with an internship and a volunteer role, which show drive and work ethic, and lists 10 years of experience in areas ranging from mental health counseling to clinical social work.

Mention any internship and volunteering experience, if applicable.

Notice how this resume highlights internships and volunteer work. You should include any additional work you’ve done, since it demonstrates your ability to take initiative.

Highlight relationships with clients.

Notice how this resume mentions work with families and individuals alike. It emphasizes maintaining communication and safety through sustained client relationships. This shows recruiters the interpersonal and social skills needed for the job.

What are the top skills you should add to your Social Work Supervisor resume?

Some popular Social Work Supervisor hard skills are Social Services, Case Management, Crisis Intervention, Mental Health, Psychotherapy, Clinical Supervision, Group Therapy and Mental Health Counseling. Depending on the job you apply to, skills like Psychosocial, Family Therapy, Program Development, Social Work and Nonprofit Organizations can also be good to include on your resume.

Target your Resume to a Job Description

While the keywords above are a good indication of what skills you need on your resume, you should also try to find additional keywords that are specific to the job. To do this, you can use the free Targeted Resume tool, which analyzes the job you are applying to and finds the most important keywords you need on your resume.



10 Essential Tips for Your Amazing Social Work Résumé

by Valerie Arendt, MSW, MPP

(Editor's Note: After you read this article, be sure to see Valerie Arendt's 7 MORE Tips for Your Amazing Social Work Résumé.)

Is your résumé ready to send out to employers? You have Googled example résumé templates, perfected your formatting, and added appropriate action words. Everything is in the correct tense, in reverse chronological order, and kept to two pages or less. What else should you think about for an amazing social work résumé? Whether you are a clinical or macro social worker, student, new professional, or have been in the field for 30 years, these essential tips will keep your résumé ready to send out to your future employer.

1. Objective or Professional Summary?

Let’s start at the beginning. I am not a fan of the objective, and neither are many hiring managers. If they are reading your résumé, they already know you are seeking a position with them. Generally, an objective is used by someone who has recently graduated or has very little experience. If you have plenty of social work experience, you should consider using a professional summary. This is one to three sentences at the beginning of your résumé that help describe the value you bring as a social worker through your skills and experience. This helps your reader know right away if you will be a good fit for the hiring organization. It is much easier for a hiring manager to find that value in a short paragraph than trying to piece it together from a lengthy history of professional experience and education.

DON’T: Objective: Seeking a social work position within a facility where I can utilize my experience to the benefit of my employer as well as gain knowledge and professional growth.

DO: Licensed Clinical Social Worker with 6+ years experience in medical and mental health settings, working with diverse populations in private practice, health care, outpatient, and inpatient treatment settings. Recently relocated to Georgia.

2. Don’t assume your reader already knows what you do.

This is one of the biggest mistakes I see when reviewing résumés. Write your résumé as if the person reading it has no idea what you do. Really? Yes! This will help you to be descriptive about your experience. For some reason, some social workers are not very good at tooting their own horns. Your résumé is exactly the place you need to brag about what an amazing professional you are. Don’t assume that because your title was “Outpatient Therapist,” the reader of your résumé will know exactly what you did. Be descriptive. Give a little information about the organization or program, the clients, and the type of therapy or work you performed. This can easily be done in three to five bullets if you craft thoughtful, complete sentences.

DON’T: Provide psychotherapy to clients.

DO: Provide group and individual outpatient therapy to adult clients at a substance abuse treatment center utilizing Cognitive Behavioral Therapy (CBT), Dialectical Behavioral Therapy (DBT), psychoeducation, and motivational interviewing.

3. List your accomplishments.

If you worked in a position for five years but don’t list one relevant accomplishment, that is a red flag for a hiring manager. Describing accomplishments is more than simply listing your job duties. These are the contributions you have made in your career that would encourage an organization to hire you.

Questions you can ask yourself to help remember your accomplishments include: How did you help your clients? Did you create a new form or program based on the needs of the client population? Did your therapy skills reduce the relapse rate in your agency? Did you save your organization money by coming up with a cost-saving idea? Were you selected for special projects, committees, or task forces? Even if the only social work experience you have on your résumé is your field placement, you should be able to list an accomplishment that will entice the reader to want to know more.

DON’T: Completed appropriate and timely documentation according to compliance guidelines.

DO: Recognized need for updated agency forms. Developed 10 clinical and administrative forms, including no-harm contract, behavior contract, and therapist’s behavior inventory, which increased staff efficiency and productivity by 15%.

4. Quantify your accomplishments.

Numbers aren’t just for business professionals. Numbers also help with the bragging I mentioned that needs to happen on your résumé. The most convincing accomplishments are measurable and help your résumé stand out from the crowd. How many clients did you serve? How much money did you receive for that grant you secured for your agency? How many people do you supervise?

DON’T: Wrote grants for counseling program in schools.

DO: Co-wrote School Group Experiences proposal, which received a $150,000 grant from State Foundation for Health, resulting in doubling the number of children served in group counseling from 120 children to 240 children, and increasing the percentage of minority children served from 20% to 50% of the total child population in group therapy.

5. Tailor your résumé to the specific job.

You have heard this over and over, and it should make sense. Still, not many social workers do this correctly or at all. Many big organizations, hospitals, and university systems use online applicant tracking systems to review résumés. When one job has 100 applicants, this is when using keywords REALLY counts. Look at the job description for keywords.

For example, what words do they use to describe the clients? Patients, clients, residents, victims, survivors, adults, children? If you have worked with the same client populations, used the same therapy techniques, or provided the supervision listed in the job description, make sure these SAME words are in your résumé. Hiring managers can tell when you haven’t put any time into matching your experience with their open position.

DON’T: Provide in-home therapy for families.

DO: (Similar language from job description) Perform individual and family, agency, and home-based therapy for medically fragile children and their families (parents and siblings) with goal of maintaining intact families and improving family functioning.

6. Spell out all acronyms.

Social workers LOVE to use acronyms. Many social workers spend hours writing case notes, and to be efficient, they rely on acronyms to describe their work. For the same reasons you should use keywords, it is essential that you spell things out for the computer or human resources person who may not know what certain acronyms mean. I am a social worker with limited clinical knowledge, and I often have to Google acronyms when I review NASW members’ résumés. The reader responsible for finding the right candidates to interview will consider this a waste of his or her time and might move on to the rest of the résumés in the pile if he or she has no idea what you are talking about.

DON’T: Scored and analyzed clinical assessments to include SIB-R, CBCL, CTRF, or SCQ in packets for families scheduled for autism evaluations.

DO: Scored and analyzed clinical assessments for autism evaluations including Scales of Independent Behavior-Revised (SIB-R), Child Behavior Checklist (CBCL), Caregiver/Teacher Report Form (CTRF), and Social Communication Questionnaire (SCQ).

7. Bullets, bullets, bullets.

Most résumés I review are succinct and formatted very nicely by bulleting experience. But there are still some folks who use paragraphs to describe their experience. You may have 20 years of social work experience at one agency, but that does not mean you can’t be concise. I guarantee you that hiring managers are not going to read a paragraph that is 15 lines long to look for the experience that will fit the position they are trying to fill. Write your résumé in such a way that it is easy to scan and find the keywords in 30 seconds or less. Use three to eight bullets to describe your experience and accomplishments.

8. Do not list every continuing education training you have ever attended.

Whether or not you are licensed in your state, you should seek out continuing education in social work. Don’t forget, it is in the NASW Code of Ethics: Section 4.01 (b) Competence: “…Social workers should routinely review the professional literature and participate in continuing education relevant to social work practice and social work ethics.”

It is great to show your reader that you are up to date on the latest clinical information on your client population, but the section on your résumé for Continuing Education or Professional Development should only list the courses that are relevant to the job you are applying for. It is a great idea to keep a list of all your continuing education, for your own reference and for your license renewal. You just don’t need to list them all on your résumé.

9. Less is more.

I hope you are seeing a theme here. Recently, I have come across a few résumés that have all of the following sections:

- Professional Summary

- Relevant Social Work Experience

- Work Experience

- Additional Experience

- Summary of Skills

- Professional Affiliations

- Volunteer Experience

- Publications

- Relevant Coursework

Every résumé is personal and different. You don’t need 10 categories on your résumé. Professional Experience and Education are musts, but after that, limit the places hiring managers need to search to find the information that will help them decide to interview you. Include only the information that is most relevant to the job to which you are applying.

10. Your references should always be available upon request and not on your résumé.

If the last line on your résumé is “References Available Upon Request,” this one is for you. It is not necessary to tell your reader that you have references. If you get far enough in the interview process, they will ask you for your references. Have them listed in a separate document.

Only send the references that are relevant, and only send them when asked. It is imperative that you inform your references that they may be contacted, and always send them a copy of the job description and your recent résumé, so they can be prepared when contacted. Nothing is a bigger turnoff to me than getting a call to be a reference for someone I supervised five years ago when I can’t remember exactly what their job duties were. It is great to get a heads-up and a reminder of what the person did under my supervision. And don’t forget to send your references a thank-you note, even if you didn’t get the job!

DON’T: References Available Upon Request

DO: (Separate document with your contact information at the top) References:

Jessica Rogers, MSW, LICSW
Director of Family Programs, Affordable Housing Authority
Chicago, IL
Relationship: Former Supervisor
Phone: [email protected]

Jessica was my direct supervisor and is familiar with my clinical social work skills, my ability to work with diverse communities, and my aptitude for managing relationships with partner organizations. Jessica recognized my success in client outcomes and promoted me within 6 months of my hire date.

Remember, your résumé is your tool to get an interview. It doesn’t need to include every detail about you as a professional social worker. Use your cover letter to expand on details that are specific to the job you are seeking. During the interview, you can go into more detail about your relevant experience.

RELATED ARTICLE: Read the sidebar to this article, Cover Letters for Social Workers: Get Yourself the Interview.

Valerie Arendt, MSW, MPP, is the Associate Executive Director for the National Association of Social Workers, North Carolina Chapter (NASW-NC). She received her dual degree in social work and public policy from the University of Minnesota and currently provides membership support, including résumé review, to the members of NASW-NC.

More on your amazing social work résumé:

- 7 More Tips for Your Amazing Social Work Résumé

All material published on this website Copyright 1994-2023 White Hat Communications. All rights reserved. Please contact the publisher for permission to reproduce or reprint any materials on this site. Opinions expressed on this site are the opinions of the writer and do not necessarily represent the views of the publisher. As an Amazon Associate, we earn from qualifying purchases.


Clinical Supervisor Resume Samples

The guide to resume tailoring.

Guide the recruiter to the conclusion that you are the best candidate for the clinical supervisor job. It’s actually very simple. Tailor your resume by picking relevant responsibilities from the examples below and then add your accomplishments. This way, you can position yourself in the best way to get hired.

Craft your perfect resume by picking job responsibilities written by professional recruiters

Pick from thousands of curated job responsibilities used by leading companies, and tailor your resume and cover letter with the wording that best fits each job you apply for.


- Work collaboratively with the Performance Improvement Nurse to ensure optimal patient care, inclusive of utilization management and outcomes

- Utilizes creativity in developing quality/performance improvement programs, instructing staff and implementing new and improved standards

- Assists clinicians in establishing immediate, short-term, and long-term therapeutic goals, in setting priorities, and in developing a plan of care

- Works closely with the sales team to develop the regional expansion plan for certified doctors, the doctor activity improvement plan, and the regional coverage improvement plan

- Visits regional key opinion leaders (KOLs) and cooperates with the sales and marketing team to create a speaker development plan and improve communication efficiency with critical customers

- Responsible for the development, implementation, revision and reporting of the nursing Performance Improvement activities

- Identifies and facilitates unit-based, system-wide, and ministry-wide performance improvement activities, including evaluation of individual co-workers

- Assists clinicians in establishing immediate, short-term, and long-term goals, in setting priorities, and in developing a plan of care

- Assists in the planning, implementation and evaluation of in-service and continuing education programs

- Visits patient at their homes for patient care, as well as to provide training, competency and supervision of clinical staff

- Responsible for the quality of care and documentation of patient care, manages patient, physician and staff phone calls and communications throughout the day

- Assists in the implementation of policies and procedures, as well as strategic goals and objectives

- Performs duties in accordance with, and under the direction/supervision defined by, the Agency’s organizational chart

- Promotes customer service orientation to all organization personnel

- Creates a learning atmosphere, reassures and encourages improved performance

- Provides leadership in developing and implementing solutions

- Performs work according to the code of conduct, regulations, policies and guidelines

- Provides timely coaching, identifies successes and areas of improvement

- Makes effective use of time, materials, and resources by planning, scheduling and organizing work

- Flexible; adjusts workload to accommodate changing priorities

- Does not engage in activities other than official business during working hours

- Strong leadership skills with the ability to work with multiple levels of staff

- Strong communication and leadership skills, with the ability to maintain a flexible schedule

- Good interpersonal skills; ability to deal fairly and consistently with all staff

- Ability to make logical, mature judgements. Makes decisions quickly and confidently. Functions calmly and effectively in stressful situations

- Ability to manage multiple cases concurrently; reasonable administrative and coordination skills

- Knowledgeable on medical coding and billing procedures in a physician practice setting

- Strong attention to detail

- Strong clinical skills and knowledge

- Strong operational knowledge of billing process and payors (Medicaid, Medicare, private insurers and Managed Care, etc.)

- Strong knowledge of Article 31 regulations (Part 599), and best practices in clinic operations


Licensed Clinical Supervisor Resume Examples & Samples

- Program oversight

- Supervising a team of Care Managers who provide intensive Case Management for children and families

- Monitoring ongoing quality assurance standards

- LSW, LAC, LPC, LCSW, LMFT or LCADC

- Strong communication, computer and time management skills

- At least one year of supervisory experience

- Experience with the Developmentally Disabled

- Familiarity with Medicaid

Clinical Supervisor Resume Examples & Samples

- 5+ years of working clinical experience in an area of expertise; 2+ years of management experience in an Ambulatory Services setting

- Bachelor's and/or Master's Degree in a related field

- Current CT licensure as one of the following: PhD; Licensed Clinical Social Worker (LCSW); Licensed Marriage & Family Therapist (LMFT); Licensed Alcohol Drug Abuse Counselor (LADC); or Licensed Professional Counselor (LPC)

- Experience and oversight of Partial Hospitalization (PHP) and/or Intensive Outpatient (IOP) services

- Previous experience supervising and developing employees

- Computer savvy and ability to manage electronic calendar and email system

- Substance Abuse background

- Experience working within a large agency / organization with heavy daily volumes

- Experience managing productivity (not necessarily RVUs, but some productivity model)

- Education: Bachelor's Degree in Nursing or related field required

- Experience: 3-5 years progressive experience in management role

- Skills & Abilities: Problem solving, excellent communication and interpersonal skills

- 5+ years of total nursing experience

- 3+ years of Emergency Nursing care experience

- 4-5 years of progressive leadership experience

- Establishes and assumes responsibility for technical standards in accordance with regulatory standards for all lab personnel; monitors compliance with same and initiates action to improve or maintain performance in accordance with same

- Responsible for the development of training programs for all new personnel, for continuing and in-service education to ensure a high level of technical expertise in all lab personnel and for completing annual competency evaluation of staff. Provides training and orientation for clinical pathology residents, GYN fourth year medical students and CRM fellows and nurses

- Actively involved administratively and technically in setting up new programs in conjunction with Center for Reproductive Medicine, Urology and The Antenatal Diagnostic Center

- Keeps abreast of the technical and theoretical developments in the field of Reproductive Endocrinology, Andrology and Semen Cryobanking. Evaluates and recommends to the Medical Director and Laboratory Administration new instrumentation and procedures. Develops cost-benefit analyses to support recommendations

- Evaluates analytical methods, laboratory procedures and organizational structure of the lab and initiates changes as necessary in consultation with the Medical Director and Technical Director of Clinical Chemistry

- Responsible for maintaining fiscal control of the lab; prepares and monitors operating and capital budgets. Regularly reviews laboratory operations to foster cost-containment efforts while maintaining high-quality laboratory service. Reviews and approves all revenue and statistical reports

- Has the ability to maintain and troubleshoot all lab equipment. Assures that all remedial actions are taken whenever test systems deviate from the laboratory’s established performance specifications

- Responsible for hiring, counseling, evaluating and terminating lab employees according to the established policies of the hospital. Is responsible for the day-to-day supervision of the laboratory operation and personnel. Plans and oversees work assignments and schedules. Is accessible to testing personnel at all times

- Maintains an ongoing quality assurance program, supplementary to the laboratory quality control program, that is consistent with JCAHO and CAP requirements. Reviews quality control and proficiency-program records, manuals on standard procedures, and maintenance records in accordance with hospital policies and the requirements of the regulatory agencies. Ensures action is taken to correct deficiencies noted

- Is responsible for being aware of JCAHO National Patient Safety Goals and complies with BWH and laboratory policies and procedures

- Assures patients’ confidentiality and manages patient and clinician customer service issues relating to the laboratory and sperm bank. Participates in patient education classes

- Performs receptionist and medical technologist duties as required

- In the absence of the medical director, and in consultation with the technical director of Chemistry, has the ability to act on behalf of the medical director for delegated functions, including but not limited to QC data, procedure manuals, proficiency testing performance, and other duties as assigned

- Bachelor of Science degree in Medical Technology, Biology or Chemistry. MT(ASCP) certification or equivalent preferred