Research Proposal Example/Sample

Detailed Walkthrough + Free Proposal Template

If you’re just starting to craft your research proposal and are looking for a few examples, you’ve come to the right place.

In this video, we walk you through two successful (approved) research proposals: one for a Master’s-level project and one for a PhD-level dissertation. We start by unpacking our free research proposal template and discussing the four core sections of a research proposal, so that you have a clear understanding of the basics before diving into the actual proposals.

- Research proposal example/sample – Master’s-level (PDF/Word)

- Research proposal example/sample – PhD-level (PDF/Word)

- Proposal template (Fully editable)

If you’re working on a research proposal for a dissertation or thesis, you may also find the following useful:

- Research Proposal Bootcamp: Learn how to write a research proposal as efficiently and effectively as possible

- 1:1 Proposal Coaching: Get hands-on help with your research proposal

FAQ: Research Proposal Example

Are the sample proposals real?

Yes. The proposals are real and were approved by the respective universities.

Can I copy one of these proposals for my own research?

As we discuss in the video, every research proposal will be slightly different, depending on the university’s unique requirements, as well as the nature of the research itself. Therefore, you’ll need to tailor your research proposal to suit your specific context.

You can learn more about the basics of writing a research proposal here.

How do I get the research proposal template?

You can access our free proposal template here.

Is the proposal template really free?

Yes. There is no cost for the proposal template and you are free to use it as a foundation for your research proposal.

Where can I learn more about proposal writing?

For self-directed learners, our Research Proposal Bootcamp is a great starting point.

For students who want hands-on guidance, we recommend our private coaching service.

Psst… there’s more!

This post is an extract from our bestselling short course, Research Proposal Bootcamp. If you want to work smart, you don't want to miss this.


Evaluation Research: Definition, Methods and Examples

Content Index

- What is evaluation research

- Why do evaluation research

- Methods of evaluation research

  - Quantitative methods

  - Qualitative methods

- Process evaluation research question examples

- Outcome evaluation research question examples

What is evaluation research?

Evaluation research, also known as program evaluation, refers to a research purpose rather than a specific method. It is the systematic assessment of the worth or merit of the time, money, effort, and resources spent to achieve a goal.

Evaluation research is closely related to, but slightly different from, more conventional social research. It uses many of the same methods as traditional social research. However, because it takes place within an organizational context, it also requires skills that conventional social research calls on less often: teamwork, interpersonal skills, management skills, and political savvy. Evaluation research also requires the researcher to keep the interests of stakeholders in mind.

Evaluation research is a type of applied research, so it is intended to have a real-world effect. Many methods, such as surveys and experiments, can be used to conduct it. Evaluation research, including its data analysis and reporting, is a rigorous, systematic process of collecting data about organizations, processes, projects, services, and/or resources. It enhances knowledge and decision-making and leads to practical applications.

Why do evaluation research?

Most evaluations share a common goal: to extract meaningful information from the audience and to provide valuable insights to sponsors, donors, client groups, administrators, staff, and other relevant constituencies. Feedback is usually seen as valuable when it helps with decision-making. However, evaluation research does not always have an impact that can be applied elsewhere, and it sometimes fails to influence short-term decisions. An evaluation that seems to have no influence at first can also have a delayed impact once circumstances are more favorable. Despite this, there is general agreement that the major goal of evaluation research should be to improve decision-making through the systematic use of measurable feedback.

Below are some of the benefits of evaluation research:

- Gain insights about a project or program and its operations

Evaluation research lets you understand what works and what doesn’t: where you were, where you are, and where you are headed. You can find areas for improvement and identify strengths. This helps you decide what to focus on and whether there are any threats to your business. You can also discover untapped sectors of the market.

- Improve practice

To deliver better services to your customers, you need to gauge your past performance and understand what went wrong. Without two-way communication, there is no way to improve what you offer. Evaluation research gives your employees and customers an opportunity to say how they feel and whether there is anything they would like to change. It also lets you modify or adapt a practice to increase its chances of success.

- Assess the effects

After evaluating your efforts, you can see how well you are meeting objectives and targets. Evaluations let you measure whether the intended benefits are actually reaching the targeted audience and, if so, how effectively.

- Build capacity

Evaluations help you analyze demand patterns and predict whether you will need more funds, upskilling, or more efficient operations. They also let you find gaps in the production-to-delivery chain and possible ways to fill them.

Methods of evaluation research

All market research methods involve collecting and analyzing data, judging the validity of the information, and deriving relevant inferences from it. Evaluation research comprises planning, conducting, and analyzing the results. This includes using data collection techniques and applying statistical methods.

Some of the more popular evaluation methods are input measurement, output or performance measurement, impact or outcomes assessment, quality assessment, process evaluation, benchmarking, standards, cost analysis, organizational effectiveness, program evaluation methods, and LIS-centered methods. A few types of evaluation do not always result in a meaningful assessment, such as descriptive studies, formative evaluations, and implementation analysis. Evaluation research is concerned mainly with the information-processing and feedback functions of evaluation.

These methods can be broadly classified as quantitative and qualitative methods.

Quantitative research methods are used to measure anything tangible. They answer questions such as:

- Who was involved?

- What were the outcomes?

- What was the price?

Common ways to collect quantitative data are surveys, questionnaires, and polls. You can also create pre-tests and post-tests, review existing documents and databases, or gather clinical data.

Surveys are used to gather the opinions, feedback, or ideas of your employees or customers, and they consist of various question types. They can be conducted face-to-face, by telephone, by mail, or online. Online surveys need no interviewer and are often more efficient and practical. With survey software, you can view results on a dashboard and dig deeper by filtering on factors such as age, gender, and location. You can also build survey logic such as branching, quotas, chained surveys, and looping into your questions. This reduces the time needed both to create a survey, such as a donor survey, and to respond to it. You can also generate reports that apply statistical formulas and present data that can be readily absorbed in meetings.
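Branching (also called skip logic) means that the next question a respondent sees depends on an earlier answer. The sketch below illustrates the idea with a hypothetical in-memory question map. It is not the API of QuestionPro or any other survey tool, and the question IDs and texts are invented.

```python
# Hypothetical survey: each question's "branch" maps an answer to the next question ID.
QUESTIONS = {
    "q1": {"text": "Did you attend the event?", "branch": {"yes": "q2", "no": "q3"}},
    "q2": {"text": "How satisfied were you with the event?", "branch": {}},
    "q3": {"text": "What prevented you from attending?", "branch": {}},
}


def next_question(current_id, answer):
    """Return the ID of the next question, or None if this path ends here."""
    return QUESTIONS[current_id]["branch"].get(answer)


def path_for(answers, start="q1"):
    """List the question IDs a respondent sees, given {question_id: answer}."""
    path = []
    qid = start
    while qid is not None:
        path.append(qid)
        qid = next_question(qid, answers.get(qid))
    return path


print(path_for({"q1": "yes"}))  # ['q1', 'q2']
print(path_for({"q1": "no"}))   # ['q1', 'q3']
```

Respondents who did not attend never see the satisfaction question. This is what shortens the survey for them.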


Quantitative data measure the depth and breadth of an initiative. Examples include the number of people who participated in a non-profit event and the number of people who enrolled in a new university course. Quantitative data collected before and after a program can show its results and impact.
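The sketch below shows the before-and-after idea. It assumes that each participant took the same hypothetical test before and after the program, and it computes the average change per participant. The scores are invented for illustration.

```python
from statistics import mean


def pre_post_change(pre_scores, post_scores):
    """Mean per-participant change from a pre-test to a post-test.

    pre_scores[i] and post_scores[i] must belong to the same participant.
    """
    if len(pre_scores) != len(post_scores) or not pre_scores:
        raise ValueError("need non-empty pre and post lists paired by participant")
    differences = [post - pre for pre, post in zip(pre_scores, post_scores)]
    return mean(differences)


# Hypothetical test scores (0-100) for five participants.
pre = [55, 60, 48, 70, 65]
post = [68, 72, 50, 78, 70]
print(pre_post_change(pre, post))  # 8
```

A positive mean change is consistent with program impact. On its own, however, it does not rule out other explanations, such as changes that would have happened without the program.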

The accuracy of quantitative data to be used for evaluation research depends on how well the sample represents the population, the ease of analysis, and their consistency. Quantitative methods can fail if the questions are not framed correctly and not distributed to the right audience. Also, quantitative data do not provide an understanding of the context and may not be apt for complex issues.


Qualitative research methods are used where quantitative methods cannot solve the research problem, i.e., to measure intangible values. They answer questions such as:

- What is the value added?

- How satisfied are you with our service?

- How likely are you to recommend us to your friends?

- What will improve your experience?


Qualitative data are collected through observation, interviews, case studies, and focus groups. Creating a qualitative study involves examining, comparing and contrasting, and understanding patterns. Analysts draw conclusions after identifying themes, clustering similar data, and finally reducing the data to points that make sense.
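The bookkeeping side of theme identification and clustering can be sketched in a few lines. This sketch assumes that an analyst has already read the material and assigned theme codes by hand. The interpretive work stays with the analyst. The code only tallies how often each theme appears and groups excerpts under a theme for comparison. All excerpts and theme names are hypothetical.

```python
from collections import Counter

# Hypothetical excerpts that an analyst has already coded with one or more themes.
coded_excerpts = [
    {"id": 1, "themes": ["cost", "access"]},
    {"id": 2, "themes": ["staff support"]},
    {"id": 3, "themes": ["cost"]},
    {"id": 4, "themes": ["access", "staff support"]},
    {"id": 5, "themes": ["cost"]},
]


def theme_frequencies(excerpts):
    """Count how many excerpts mention each theme (each theme counted once per excerpt)."""
    counts = Counter()
    for excerpt in excerpts:
        counts.update(set(excerpt["themes"]))
    return counts


def excerpts_by_theme(excerpts, theme):
    """Cluster excerpt IDs under one theme so they can be compared and contrasted."""
    return [e["id"] for e in excerpts if theme in e["themes"]]


counts = theme_frequencies(coded_excerpts)
print(counts["cost"], counts["access"])             # 3 2
print(excerpts_by_theme(coded_excerpts, "access"))  # [1, 4]
```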

Observations can help explain behaviors, as well as the social context that quantitative methods generally do not uncover. Behavior and body language can be observed by watching a participant or by recording audio or video. Structured interviews can be conducted with people individually or in groups under controlled conditions. Alternatively, participants can be asked open-ended qualitative research questions. Qualitative research methods are also used to understand a person’s perceptions and motivations.

A strength of group methods such as focus groups is that discussion can generate ideas and stimulate memories, with topics cascading as the discussion unfolds. The accuracy of qualitative data depends on how well the contextual data explain complex issues and complement quantitative data. Qualitative data help answer “why” and “how” once quantitative data have answered “what”. Their limitations for evaluation research are that they are subjective, time-consuming, costly, and difficult to analyze and interpret.

Survey software can be used with both quantitative and qualitative evaluation methods. You can adapt the sample questions below and send a survey in minutes. A research tool simplifies the whole process, from creating a survey and importing contacts to distributing the survey and generating reports.

Examples of evaluation research

Evaluation research questions lay the foundation of a successful evaluation. They define the topics that will be evaluated. Keeping evaluation questions ready not only saves time and money, but also makes it easier to decide what data to collect, how to analyze it, and how to report it.

Evaluation research questions must be developed and agreed on in the planning stage. However, ready-made research templates can also be used.

Process evaluation research question examples:

- How often do you use our product in a day?

- Were approvals taken from all stakeholders?

- Can you report the issue from the system?

- Can you submit the feedback from the system?

- Was each task done as per the standard operating procedure?

- What were the barriers to the implementation of each task?

- Were any improvement areas discovered?

Outcome evaluation research question examples:

- How satisfied are you with our product?

- Did the program produce intended outcomes?

- What were the unintended outcomes?

- Has the program increased the knowledge of participants?

- Were the participants of the program employable before the course started?

- Do participants of the program have the skills to find a job after the course ended?

- Is the knowledge of participants better compared to those who did not participate in the program?
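The last question above compares participants with people who did not take part in the program. The sketch below shows the simplest version of that comparison, the difference in mean scores between the two groups. The scores are hypothetical.

```python
from statistics import mean


def group_difference(participant_scores, comparison_scores):
    """Mean score of program participants minus the mean score of non-participants."""
    if not participant_scores or not comparison_scores:
        raise ValueError("both groups need at least one score")
    return mean(participant_scores) - mean(comparison_scores)


# Hypothetical post-program knowledge scores (0-100).
participants = [78, 82, 75, 88]
non_participants = [70, 74, 69, 71]
print(group_difference(participants, non_participants))  # 9.75
```

Participants may not have been assigned to the program at random. In that case, part of this gap may reflect differences between the groups that existed before the program started.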


Evaluation Research Design: Examples, Methods & Types

As you engage in tasks, you will need to take intermittent breaks to determine how much progress has been made and if any changes need to be effected along the way. This is very similar to what organizations do when they carry out evaluation research.

The evaluation research methodology has become one of the most important approaches for organizations as they strive to create products, services, and processes that speak to the needs of target users. In this article, we will show you how your organization can conduct successful evaluation research using Formplus.

What is Evaluation Research?

Also known as program evaluation, evaluation research is a common research design that entails carrying out a structured assessment of the value of resources committed to a project or specific goal. It often adopts social research methods to gather and analyze useful information about organizational processes and products.

As a type of applied research, evaluation research is typically associated with real-life scenarios within organizational contexts. This means that the researcher will need to use common workplace skills, including interpersonal skills and teamwork, to arrive at objective research findings that will be useful to stakeholders.

Characteristics of Evaluation Research

- Research Environment: Evaluation research is conducted in the real world; that is, within the context of an organization.

- Research Focus: Evaluation research is primarily concerned with measuring the outcomes of a process rather than the process itself.

- Research Outcome: Evaluation research is employed for strategic decision making in organizations.

- Research Goal: The goal of program evaluation is to determine whether a process has yielded the desired result(s).

- This type of research protects the interests of stakeholders in the organization.

- It often represents a middle ground between pure and applied research.

- Evaluation research is both detailed and continuous. It pays attention to performative processes rather than descriptions.

- Research Process: This research design utilizes qualitative and quantitative research methods to gather relevant data about a product or action-based strategy. These methods include observation, tests, and surveys.

Types of Evaluation Research

The Encyclopedia of Evaluation (Mathison, 2004) treats forty-two different evaluation approaches and models ranging from “appreciative inquiry” to “connoisseurship” to “transformative evaluation”. Common types of evaluation research include the following:

- Formative Evaluation

Formative evaluation or baseline survey is a type of evaluation research that involves assessing the needs of the users or target market before embarking on a project. Formative evaluation is the starting point of evaluation research because it sets the tone of the organization’s project and provides useful insights for other types of evaluation.

- Mid-term Evaluation

Mid-term evaluation entails assessing how far a project has come and determining whether it is in line with the set goals and objectives. Mid-term reviews allow the organization to decide whether the implementation strategy needs to change, and they also help track the project.
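A mid-term review often comes down to comparing what has been achieved so far with what the plan expected by this point. The sketch below assumes hypothetical numeric indicators and an arbitrary 80% cut-off for flagging an indicator as off track. Both are illustrative choices, not a standard.

```python
def midterm_progress(planned, achieved):
    """Ratio of achieved to planned value for each indicator at the mid-term point.

    1.0 means exactly on plan; indicators with a planned value of 0 are skipped.
    """
    return {
        name: achieved.get(name, 0) / target
        for name, target in planned.items()
        if target > 0
    }


def off_track(ratios, threshold=0.8):
    """Indicators below the threshold (0.8 is an arbitrary example cut-off)."""
    return sorted(name for name, ratio in ratios.items() if ratio < threshold)


# Hypothetical mid-term targets and results.
planned = {"people_trained": 200, "workshops_held": 10}
achieved = {"people_trained": 150, "workshops_held": 9}

ratios = midterm_progress(planned, achieved)
print(ratios)             # {'people_trained': 0.75, 'workshops_held': 0.9}
print(off_track(ratios))  # ['people_trained']
```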

- Summative Evaluation

This type of evaluation is also known as end-term evaluation or project-completion evaluation, and it is conducted immediately after a project is completed. Here, the researcher examines the value and outputs of the program against the projected results.

Summative evaluation allows the organization to measure the degree of success of a project. Such results can be shared with stakeholders, target markets, and prospective investors.

- Outcome Evaluation

Outcome evaluation is primarily target-audience oriented because it measures the effects of the project, program, or product on the users. This type of evaluation views the outcomes of the project through the lens of the target audience and it often measures changes such as knowledge-improvement, skill acquisition, and increased job efficiency.

- Appreciative Inquiry

Appreciative inquiry is a type of evaluation research that pays attention to result-producing approaches. It rests on the belief that an organization will grow in whatever direction its stakeholders focus their attention. If all the attention is focused on problems, problems are what will be most readily identified.

In carrying out appreciative inquiry, the researcher identifies the factors directly responsible for the positive results realized in the course of a project, analyzes the reasons for these results, and intensifies the use of these factors.

Evaluation Research Methodology

There are four major evaluation research methods: output measurement, input measurement, impact assessment, and service quality.

- Output/Performance Measurement

Output measurement is an evaluation research method that shows the results of an activity undertaken by an organization. In other words, performance measurement focuses on the results achieved by the resources invested in a specific activity or organizational process.

Beyond investing resources in a project, organizations must be able to track the extent to which these resources have yielded results, and this is where performance measurement comes in. Output measurement allows organizations to pay attention to the effectiveness and impact of a process rather than just the process itself.

Other key indicators of performance measurement include user-satisfaction, organizational capacity, market penetration, and facility utilization. In carrying out performance measurement, organizations must identify the parameters that are relevant to the process in question, their industry, and the target markets.

5 Performance Evaluation Research Question Examples

- What is the cost-effectiveness of this project?

- What is the overall reach of this project?

- How would you rate the market penetration of this project?

- How accessible is the project?

- Is this project time-efficient?
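Two of the questions above, cost-effectiveness and reach, can be expressed as simple ratios. The sketch below treats cost-effectiveness as cost per unit of outcome and reach as the share of the intended audience actually reached. A formal cost-effectiveness analysis usually compares several alternatives, so treat this as a single-project illustration with hypothetical figures.

```python
def cost_per_outcome(total_cost, outcomes):
    """Cost-effectiveness as cost per unit of outcome (e.g., per person reached)."""
    if outcomes <= 0:
        raise ValueError("no outcomes recorded, so cost per outcome is undefined")
    return total_cost / outcomes


def reach_rate(people_reached, target_population):
    """Share of the intended audience that the project actually reached."""
    if target_population <= 0:
        raise ValueError("target population must be positive")
    return people_reached / target_population


# Hypothetical figures for a community program.
print(cost_per_outcome(50_000, 400))  # 125.0
print(reach_rate(400, 1_000))         # 0.4
```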

- Input Measurement

In evaluation research, input measurement entails assessing the amount of resources an organization commits to a project or goal. It is one of the most common indicators in evaluation research because it allows organizations to track their investments.

The most common indicator in input measurement is the budget, which allows organizations to evaluate and limit expenditure on a project. It is also important to measure non-monetary investments such as human capital (that is, the number of people needed to execute the project successfully) and production capital.

5 Input Evaluation Research Question Examples

- What is the budget for this project?

- What is the timeline of this process?

- How many employees have been assigned to this project?

- Do we need to purchase new machinery for this project?

- How many third parties are collaborating on this project?
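Tracking the budget as an input can be sketched as a comparison of spending against allocated line items. The line items and amounts below are hypothetical. The sketch covers only monetary inputs, not human or production capital.

```python
def budget_utilization(line_budgets, expenses):
    """Summarize spending against a project budget split into line items."""
    budget = sum(line_budgets.values())
    spent = sum(expenses.values())
    return {"budget": budget, "spent": spent,
            "share_used": spent / budget, "remaining": budget - spent}


def over_budget_lines(line_budgets, expenses):
    """Line items where spending exceeds the amount allocated."""
    return sorted(item for item, limit in line_budgets.items()
                  if expenses.get(item, 0) > limit)


# Hypothetical budget lines and actual expenses.
line_budgets = {"staff": 30_000, "equipment": 15_000, "venue": 5_000}
expenses = {"staff": 28_000, "equipment": 16_500, "venue": 3_000}

print(budget_utilization(line_budgets, expenses))
# {'budget': 50000, 'spent': 47500, 'share_used': 0.95, 'remaining': 2500}
print(over_budget_lines(line_budgets, expenses))  # ['equipment']
```

The overall total can look healthy (95% used, 2,500 remaining) while a single line item is already overspent. Checking each line item separately catches overspending that the total alone would hide.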

- Impact/Outcomes Assessment

In impact assessment, the evaluation researcher focuses on how the product or project affects target markets, both directly and indirectly. Outcomes assessment is somewhat challenging because many times, it is difficult to measure the real-time value and benefits of a project for the users.

In assessing the impact of a process, the evaluation researcher must pay attention to the improvement recorded by the users as a result of the process or project in question. Hence, it makes sense to focus on cognitive and affective changes, expectation-satisfaction, and similar accomplishments of the users.

5 Impact Evaluation Research Question Examples

- How has this project affected you?

- Has this process affected you positively or negatively?

- What role did this project play in improving your earning power?

- On a scale of 1-10, how excited are you about this project?

- How has this project improved your mental health?

- Service Quality

Service quality is the evaluation research method that accounts for any differences between the expectations of the target markets and their impression of the undertaken project. Hence, it pays attention to the overall service quality assessment carried out by the users.

It is not uncommon for organizations to build the expectations of target markets as they embark on specific projects. Service quality evaluation allows these organizations to track the extent to which the actual product or service delivery fulfils the expectations.

5 Service Quality Evaluation Questions

- On a scale of 1-10, how satisfied are you with the product?

- How helpful was our customer service representative?

- How satisfied are you with the quality of service?

- How long did it take to resolve the issue at hand?

- How likely are you to recommend us to your network?
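Service quality, as described above, is the difference between what users expected and what they perceived. The sketch below assumes that both are averaged on the same hypothetical 1-10 scale for each item. It computes the gap as perception minus expectation, so a negative value marks an expectation that was not met.

```python
def service_quality_gaps(expectations, perceptions):
    """Gap per item = perception score - expectation score (same 1-10 scale).

    A negative gap means the delivered service fell short of what users expected.
    """
    missing = set(expectations) - set(perceptions)
    if missing:
        raise ValueError(f"no perception score for: {sorted(missing)}")
    return {item: perceptions[item] - expectations[item] for item in expectations}


def unmet_expectations(gaps):
    """Items where expectations were not met, worst gap first."""
    return sorted((item for item, gap in gaps.items() if gap < 0), key=lambda i: gaps[i])


# Hypothetical average scores from an evaluation survey.
expected = {"responsiveness": 9, "reliability": 8, "courtesy": 7}
perceived = {"responsiveness": 6, "reliability": 8, "courtesy": 8}

gaps = service_quality_gaps(expected, perceived)
print(gaps)                      # {'responsiveness': -3, 'reliability': 0, 'courtesy': 1}
print(unmet_expectations(gaps))  # ['responsiveness']
```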

Uses of Evaluation Research

- Evaluation research is used by organizations to measure the effectiveness of activities and identify areas needing improvement. Findings from evaluation research are key to project and product advancements and are very influential in helping organizations realize their goals efficiently.

- Findings from evaluation research serve as evidence of the impact of the projects an organization undertakes. This information can be presented to stakeholders and customers, and it can also help your organization secure investment for future projects.

- Evaluation research helps organizations to justify their use of limited resources and choose the best alternatives.

- It is also useful in pragmatic goal setting and realization.

- Evaluation research provides detailed insights into projects embarked on by an organization. Essentially, it allows all stakeholders to understand multiple dimensions of a process, and to determine strengths and weaknesses.

- Evaluation research also plays a major role in helping organizations to improve their overall practice and service delivery. This research design allows organizations to weigh existing processes through feedback provided by stakeholders, and this informs better decision making.

- Evaluation research is also instrumental to sustainable capacity building. It helps you to analyze demand patterns and determine whether your organization requires more funds, upskilling or improved operations.

Data Collection Techniques Used in Evaluation Research

In gathering useful data for evaluation research, the researcher often combines quantitative and qualitative research methods. Qualitative research methods allow the researcher to gather information about intangible values such as market satisfaction and perception.

Quantitative methods, on the other hand, are used to assess numerical patterns, that is, quantifiable data. These methods help you measure impact and results, although they may not help you understand the context of the process.

Quantitative Methods for Evaluation Research

- Surveys

A survey is a quantitative method that allows you to gather information about a project from a specific group of people. Surveys are largely context-based and limited to target groups, who are asked a set of structured questions in line with the predetermined context.

Surveys usually consist of closed-ended questions that allow the evaluation researcher to gain insight into several variables, including market coverage and customer preferences. Surveys can be carried out physically using paper forms or online through data-gathering platforms like Formplus.

- Questionnaires

A questionnaire is a common quantitative research instrument deployed in evaluation research. Typically, it is an aggregation of different types of questions or prompts which help the researcher to obtain valuable information from respondents.

- Polls

A poll is a common method of opinion sampling that allows you to gauge public perception of issues that affect people. Polls can be conducted online using platforms like Formplus.

Polls are often structured as Likert questions, and the options provided usually include a neutral or undecided choice. Conducting a poll allows the evaluation researcher to understand the extent to which the product or service satisfies the needs of its users.
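A Likert-style poll is usually summarized as the share of respondents choosing each option. The sketch below assumes a hypothetical five-point scale with a neutral midpoint and invented responses. It also reports the combined share of the two favorable options.

```python
from collections import Counter

# A hypothetical five-point scale with a neutral midpoint.
SCALE = ["Strongly disagree", "Disagree", "Neutral", "Agree", "Strongly agree"]


def likert_distribution(responses):
    """Share of respondents choosing each option, in scale order."""
    if not responses:
        raise ValueError("no responses to summarize")
    counts = Counter(responses)
    unknown = set(counts) - set(SCALE)
    if unknown:
        raise ValueError(f"unexpected options: {sorted(unknown)}")
    return {option: counts[option] / len(responses) for option in SCALE}


def share_agreeing(responses):
    """Share of respondents choosing 'Agree' or 'Strongly agree'."""
    return sum(1 for r in responses if r in SCALE[-2:]) / len(responses)


poll = ["Agree", "Agree", "Neutral", "Strongly agree",
        "Disagree", "Agree", "Neutral", "Strongly agree"]
print(likert_distribution(poll))
print(share_agreeing(poll))  # 0.625
```

Keeping the neutral option as its own category, rather than folding it into agreement or disagreement, preserves the information about indecision that the scale was designed to capture.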

Qualitative Methods for Evaluation Research

- One-on-One Interview

An interview is a structured conversation involving two participants; usually the researcher and the user or a member of the target market. One-on-One interviews can be conducted physically, via the telephone and through video conferencing apps like Zoom and Google Meet.

- Focus Groups

A focus group is a research method that involves interacting with a limited number of persons within your target market, who can provide insights on market perceptions and new products.

- Qualitative Observation

Qualitative observation is a research method that allows the evaluation researcher to gather useful information from the target audience through a variety of subjective approaches. It is more in-depth than quantitative observation because it deals with a smaller sample size, and it uses inductive analysis.

- Case Studies

A case study is a research method that helps the researcher to gain a better understanding of a subject or process. Case studies involve in-depth research into a given subject, to understand its functionalities and successes.

How to Use the Formplus Online Form Builder for an Evaluation Survey

- Sign into Formplus

In the Formplus builder, you can easily create your evaluation survey by dragging and dropping preferred fields into your form. To access the Formplus builder, you will need to create an account on Formplus.

Once you do this, sign in to your account and click on “Create Form” to begin.

- Edit Form Title

Click on the field provided to input your form title, for example, “Evaluation Research Survey”.

Click on the edit button to edit the form.

- Add Fields

Drag and drop your preferred form fields from the inputs column of the Formplus builder into your form. The builder offers several field input options for surveys.

- Edit Fields

Edit the fields as needed, click on “Save”, and preview the form.

- Form Customization

With the form customization options in the form builder, you can easily change the outlook of your form and make it more unique and personalized. Formplus allows you to change your form theme, add background images, and even change the font according to your needs.

- Multiple Sharing Options

Formplus offers multiple form sharing options that let you easily share your evaluation survey with respondents. You can use the direct social media sharing buttons to share your form link on your organization’s social media pages.

You can send out your survey form as email invitations to your research subjects too. If you wish, you can share your form’s QR code or embed it on your organization’s website for easy access.

Conclusion

Conducting evaluation research allows organizations to determine the effectiveness of their activities at different phases. This type of research can be carried out using qualitative and quantitative data collection methods including focus groups, observation, telephone and one-on-one interviews, and surveys.

Online surveys created and administered via data collection platforms like Formplus make it easier to gather and process information during evaluation research. With Formplus’s multiple form sharing options, it is even easier to gather useful data from target markets.


- busayo.longe



- Indian J Anaesth

- v.60(9); 2016 Sep

How to write a research proposal?

Department of Anaesthesiology, Bangalore Medical College and Research Institute, Bengaluru, Karnataka, India

Devika Rani Duggappa

Writing a research proposal today is a challenging task because of constantly evolving trends in qualitative research design and the need to incorporate medical advances into the methodology. The proposal is a detailed plan or ‘blueprint’ for the intended study; once it is complete, the research project should flow smoothly. Even today, many proposals submitted to post-graduate evaluation committees and many applications for funding are substandard. A search was conducted using keywords such as research proposal, writing proposal and qualitative in PubMed and Google Scholar, and an attempt has been made to provide broad guidelines for writing a scientifically appropriate research proposal.

INTRODUCTION

A clean, well-thought-out proposal forms the backbone of the research itself and is therefore the most important step in conducting research.[ 1 ] The objectives of preparing a research proposal are to obtain approvals from various committees, including the ethics committee (details under the ‘Research methodology II’ section [ Table 1 ] in this issue of IJA), and to request grants. However, there are very few universally accepted guidelines for preparing a good-quality research proposal. A search was performed using keywords such as research proposal, funding, qualitative and writing proposals in PubMed, Google Scholar and Scopus.

Table 1: Five ‘C’s while writing a literature review

BASIC REQUIREMENTS OF A RESEARCH PROPOSAL

A proposal needs to show how your work fits into what is already known about the topic and what it will add to the literature, while specifying the question that the research will answer, establishing its significance and stating the implications of the answer.[ 2 ] The proposal must be capable of convincing the evaluation committee of the credibility, achievability, practicality and reproducibility (repeatability) of the research design.[ 3 ] Evaluation committees may include four categories of audience with different expectations: academic colleagues, policy-makers, practitioners and lay audiences. Tips for preparing a good research proposal include: ‘be practical, be persuasive, make broader links, aim for crystal clarity and plan before you write’. A researcher must be balanced, with a realistic understanding of what can be achieved. Being persuasive means that the researcher must be able to convince other researchers, research funding agencies, educational institutions and supervisors that the research is worth approving. The aim of the research should be stated clearly in simple language that non-specialists can understand, without jargon. The proposal must demonstrate not only an intelligent understanding of the existing literature but also that the writer has thought about the time needed to conduct each stage of the research.[ 4 , 5 ]

CONTENTS OF A RESEARCH PROPOSAL

The contents and format of a research proposal vary with the requirements of the evaluation committee and are generally specified by the committee or the institution.

In general, a cover page should contain (i) the title of the proposal, (ii) the names and affiliations of the researcher (principal investigator) and co-investigators, (iii) institutional affiliation (degree of the investigator and the name of the institution where the study will be performed), (iv) contact details such as phone numbers and e-mail IDs and (v) lines for the investigators' signatures.

The main contents of the proposal may be presented under the following headings: (i) introduction, (ii) review of literature, (iii) aims and objectives, (iv) research design and methods, (v) ethical considerations, (vi) budget, (vii) appendices and (viii) citations.[ 4 ]

Introduction

It is sometimes also termed ‘need for study’ or ‘abstract’. The introduction is the initial pitch of an idea; it sets the scene and puts the research in context.[ 6 ] It should be designed to create interest in the topic and the proposal, and should convey to the reader what you want to do, why the study is needed and your passion for the topic.[ 7 ] Some questions that can be used to assess the significance of the study are: (i) Who has an interest in the domain of inquiry? (ii) What do we already know about the topic? (iii) What has not been answered adequately in previous research and practice? (iv) How will this research add to knowledge, practice and policy in this area? Some evaluation committees expect the last two questions to be elaborated under a separate heading of ‘background and significance’.[ 8 ] The introduction should also state the hypothesis behind the research design. If a hypothesis cannot be formulated, the line of inquiry to be used in the research must be indicated.

Review of literature

It refers to all sources of scientific evidence pertaining to the topic of interest. In the present era of digitalisation and easy accessibility, an enormous amount of relevant data is available, making it a challenge for the researcher to include all of it in the review.[ 9 ] It is crucial to structure this section intelligently so that the reader can grasp how your argument relates to those of other researchers, while still demonstrating that your work is original and innovative. It is preferable to summarise each article in a paragraph, highlighting the details pertinent to the topic of interest. The review can progress from more general to more focused studies, or a historical progression can be used to develop the story, without making it exhaustive.[ 1 ] The literature review should include supporting data, disagreements and controversies. Five ‘C’s may be kept in mind while writing a literature review[ 10 ] [ Table 1 ].

Aims and objectives

The research purpose (or goal or aim) gives a broad indication of what the researcher wishes to achieve in the research. The hypothesis to be tested can be the aim of the study. The objectives related to parameters or tools used to achieve the aim are generally categorised as primary and secondary objectives.

Research design and method

The objective here is to convince the reader that the overall research design and methods of analysis will correctly address the research problem and to impress upon the reader that the methodology/sources chosen are appropriate for the specific topic. It should be unmistakably tied to the specific aims of your study.

In this section, the methods and sources used to conduct the research must be discussed, including specific references to sites, databases, key texts or authors that will be indispensable to the project. The methodological approaches to be used to gather information, the techniques to be used to analyse it and the tests of external validity to which the researcher is committed should be specifically mentioned.[ 10 , 11 ]

The components of this section include the following:[ 4 ]

Population and sample

Population refers to all the elements (individuals, objects or substances) that meet certain criteria for inclusion in a given universe,[ 12 ] and the sample refers to the subset of the population that meets the inclusion criteria for enrolment into the study. The inclusion and exclusion criteria should be clearly defined. Details of sample size are discussed in the article “Sample size calculation: Basic principles” published in this issue of IJA.

Data collection

The researcher is expected to give a detailed account of the methodology adopted for data collection, including the time frame required for the research. The methodology should be tested for validity, and the researcher must ensure that participants' lives are not jeopardised in pursuit of the results. The author should anticipate and acknowledge potential barriers and pitfalls in carrying out the research design and explain plans to address them, thereby avoiding lacunae due to incomplete data collection. If the researcher plans to acquire data through interviews or questionnaires, a copy of the questions should be attached to the proposal as an annexure.

Rigor (soundness of the research)

This addresses the strength of the research with respect to its neutrality, consistency and applicability. Rigor must be reflected throughout the proposal.

Neutrality

Neutrality refers to the robustness of a research method against bias. The author should describe in detail the measures taken to avoid bias, such as blinding and randomisation, to show that the results obtained with the adopted method are not distorted by bias or confounding variables.

Consistency

Consistency considers whether the findings would be consistent if the inquiry were replicated with the same participants and in a similar context. This can be achieved by adopting standard, universally accepted methods and scales.

Applicability

Applicability refers to the degree to which the findings can be applied to different contexts and groups.[ 13 ]

Data analysis

This section deals with the reduction and reconstruction of data and its analysis, including sample size calculation. The researcher is expected to explain the steps adopted for coding and sorting the data obtained. The tests to be used to analyse the data for robustness and significance should be clearly stated. The author should also name the statistician and the software to be used for data analysis, and describe their contribution to data analysis and sample size calculation.[ 9 ]

Ethical considerations

Medical research introduces special moral and ethical problems that are not usually encountered by other researchers during data collection, and hence, the researcher should take special care in ensuring that ethical standards are met. Ethical considerations refer to the protection of the participants' rights (right to self-determination, right to privacy, right to autonomy and confidentiality, right to fair treatment and right to protection from discomfort and harm), obtaining informed consent and the institutional review process (ethical approval). The researcher needs to provide adequate information on each of these aspects.

Informed consent needs to be obtained from the participants (details discussed in further chapters), as well as the research site and the relevant authorities.

Budget

When the researcher prepares a research budget, he/she should predict and cost all aspects of the research and then add an additional allowance for unpredictable disasters, delays and rising costs. All items in the budget should be justified.
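The advice to cost every item and then add an allowance for the unforeseen can be made concrete with a small calculation. The following is a minimal Python sketch under stated assumptions: the line items, amounts and the 10% contingency rate are hypothetical illustrations, not figures from this article, and the appropriate allowance will depend on the funder and the study.

```python
from decimal import Decimal, ROUND_HALF_UP


def budget_with_contingency(items: dict[str, Decimal], contingency_rate: Decimal) -> dict[str, Decimal]:
    """Sum the itemised costs, then add a contingency allowance on top."""
    subtotal = sum(items.values(), Decimal("0"))
    # Allowance for unpredictable delays and rising costs, rounded to whole cents.
    contingency = (subtotal * contingency_rate).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
    return {"subtotal": subtotal, "contingency": contingency, "total": subtotal + contingency}


# Hypothetical line items and a hypothetical 10% contingency rate (not from the source).
items = {
    "research assistant time": Decimal("2400.00"),
    "printing of questionnaires and consent forms": Decimal("150.00"),
    "travel to study sites": Decimal("450.00"),
}
summary = budget_with_contingency(items, Decimal("0.10"))
for label, amount in summary.items():
    print(f"{label}: {amount}")
```

Using `Decimal` rather than floating point keeps currency amounts exact; each line item would still need its own justification in the proposal text.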

Appendices

Appendices are documents that support the proposal and application. The appendices will be specific to each proposal, but documents that are usually required include the informed consent form, supporting documents, questionnaires, measurement tools and patient information about the study in lay language.

Citations

As with any scholarly research paper, you must cite the sources you used in composing your proposal. Although the terms ‘references’ and ‘bibliography’ have different meanings, they are often used interchangeably; here, the term refers to all the references cited in the research proposal.

Successful qualitative research proposals should communicate the researcher's knowledge of the field and method and convey the emergent nature of the qualitative design. The proposal should follow a discernible logic from the introduction to the presentation of the appendices.

Financial support and sponsorship

Conflicts of interest

There are no conflicts of interest.

17 Research Proposal Examples

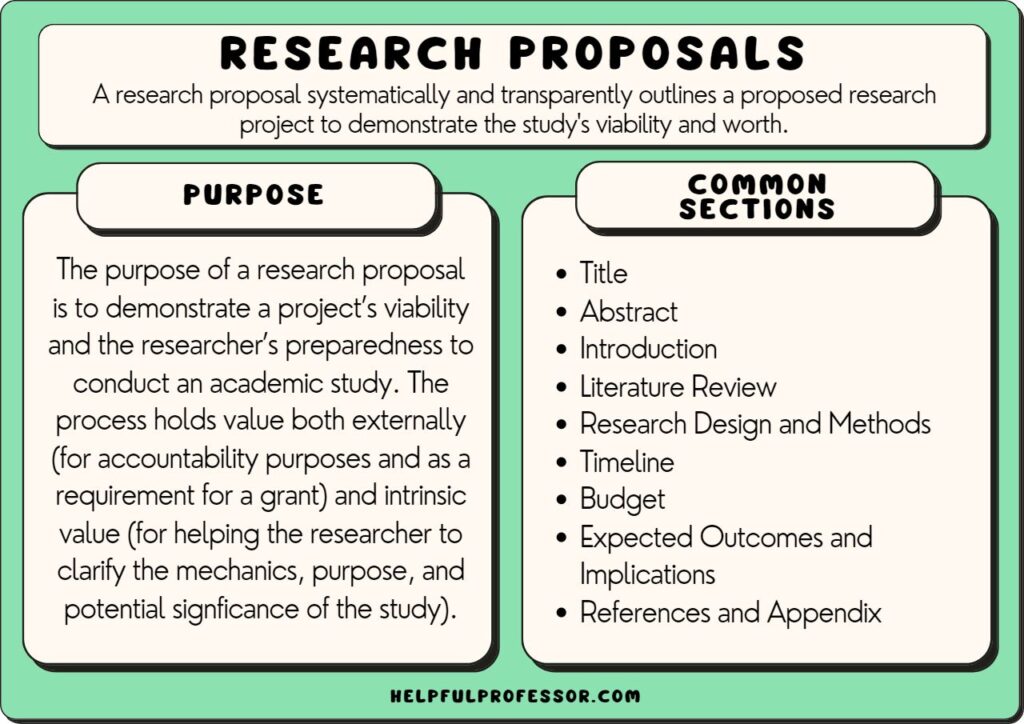

A research proposal systematically and transparently outlines a proposed research project.

The purpose of a research proposal is to demonstrate a project’s viability and the researcher’s preparedness to conduct an academic study. It serves as a roadmap for the researcher.

The process has both external value (for accountability purposes, and often as a requirement for a grant application) and intrinsic value (helping the researcher clarify the mechanics, purpose, and potential significance of the study).

Key sections of a research proposal include: the title, abstract, introduction, literature review, research design and methods, timeline, budget, outcomes and implications, references, and appendix. Each is briefly explained below.


Research Proposal Sample Structure

Title: The title should be a concise, descriptive statement that clearly conveys the core idea of the research project. Make it as specific as possible, so that the reader can immediately grasp the core idea of the intended study. Titles are often too vague to convey what exactly the study looks at.

Abstract: Abstracts are usually around 250-300 words and provide an overview of what is to follow, including the research problem, objectives, methods, expected outcomes, and significance of the study. Use it as a roadmap and ensure that, if the abstract is the only thing someone reads, they’ll get a good fly-by of what will be discussed in the piece.

Introduction: Introductions are all about contextualization. They often set out the background information with a statement of the problem. By the end of the introduction, the reader should understand the rationale for the study. I like to see the research questions or hypotheses included in the introduction, and I like to get a good understanding of what the significance of the research will be. It’s often easiest to write the introduction last.

Literature Review: The literature review dives deep into the existing literature on the topic, demonstrating your thorough understanding of it, including its themes, strengths, weaknesses, and gaps. It serves both to demonstrate your knowledge of the field and to show how the proposed study will fit alongside the existing literature on the topic. A good literature review concludes by clearly demonstrating how your research will contribute something new and innovative to the conversation in the literature.

Research Design and Methods: This section needs to clearly demonstrate how the data will be gathered and analyzed in a systematic and academically sound manner, and show that the conclusions of your research will be both valid and reliable. Points commonly discussed in this section include the research paradigm, methodologies, the intended population or sample, data collection techniques, and data analysis procedures. Toward the end of this section, you should also address ethical considerations and the limitations of the research process, explain why you chose your research design, and describe how you will mitigate the identified risks and limitations.

Timeline: Provide an outline of the anticipated timeline for the study. Break it down into its various stages (including data collection, data analysis, and report writing). The goal of this section is firstly to establish a reasonable breakdown of steps for you to follow and secondly to demonstrate to the assessors that your project is practicable and feasible.

Budget: Estimate the costs associated with the research project and include evidence for your estimates. Typical costs include staffing, equipment, travel, and data collection tools. When applying for a scholarship, the budget should demonstrate that you are being responsible with your expenses and that your funding application is reasonable.

Expected Outcomes and Implications: A discussion of the anticipated findings or results of the research, as well as the potential contributions to the existing knowledge, theory, or practice in the field. This section should also address the potential impact of the research on relevant stakeholders and any broader implications for policy or practice.

References: A complete list of all the sources cited in the research proposal, formatted according to the required citation style. This demonstrates the researcher’s familiarity with the relevant literature and ensures proper attribution of ideas and information.

Appendices (if applicable): Any additional materials, such as questionnaires, interview guides, or consent forms, that provide further information or support for the research proposal. These materials should be included as appendices at the end of the document.
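The Timeline advice (break the study into stages such as data collection, data analysis, and report writing) can be checked for feasibility by laying the stages end to end on a calendar. Here is a minimal Python sketch; the start date and the stage lengths in weeks are hypothetical values chosen only for illustration, and a real timeline would follow your institution's calendar and deadlines.

```python
from datetime import date, timedelta


def schedule(start, stages):
    """Lay stages end to end; each (name, weeks) stage starts the day after the previous one ends."""
    plan = []
    current = start
    for name, weeks in stages:
        end = current + timedelta(weeks=weeks) - timedelta(days=1)  # inclusive final day
        plan.append((name, current, end))
        current = end + timedelta(days=1)
    return plan


# Hypothetical start date and stage lengths, chosen only for illustration.
stages = [("data collection", 8), ("data analysis", 6), ("report writing", 4)]
plan = schedule(date(2025, 1, 6), stages)
for name, first_day, last_day in plan:
    print(f"{name}: {first_day.isoformat()} to {last_day.isoformat()}")
```

Seeing concrete end dates makes it easier to show assessors that the project is practicable, and to spot a stage that overruns a submission deadline.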

Research Proposal Examples

Research proposals typically range from 2,000 to 15,000 words in length. The following snippets are samples designed to briefly demonstrate what might be discussed in each section.

1. Education Studies Research Proposals

See some real sample pieces:

- Assessment of the perceptions of teachers towards a new grading system

- Does ICT use in secondary classrooms help or hinder student learning?

- Digital technologies in focus project

- Urban Middle School Teachers’ Experiences of the Implementation of Restorative Justice Practices

- Experiences of students of color in service learning

Consider this hypothetical education research proposal:

The Impact of Game-Based Learning on Student Engagement and Academic Performance in Middle School Mathematics

Abstract: The proposed study will explore multiplayer game-based learning techniques in middle school mathematics curricula and their effects on student engagement. The study aims to contribute to the current literature on game-based learning by examining the effects of multiplayer gaming in learning.

Introduction: Digital game-based learning has long been shunned in mathematics education for fear that it may distract students or lower the academic integrity of the classroom. However, emerging evidence suggests that digital games in math may benefit not only engagement but also academic skill development. Contributing to this discourse, this study seeks to explore the potential benefits of multiplayer digital game-based learning by examining its impact on middle school students’ engagement and academic performance in a mathematics class.

Literature Review: The literature review has identified a gap in current knowledge: while game-based learning has been extensively explored, the role of multiplayer games in supporting learning has not been studied.

Research Design and Methods: This study will employ a mixed-methods research design based upon action research in the classroom. A quasi-experimental pre-test/post-test control group design will first be used to compare the academic performance and engagement of middle school students exposed to game-based learning techniques with those in a control group receiving instruction without the aid of technology. Students will also be observed and interviewed in regard to the effect of communication and collaboration during gameplay on their learning.

Timeline: The study will take place across the second term of the school year with a pre-test taking place on the first day of the term and the post-test taking place on Wednesday in Week 10.

Budget: The key budgetary requirements will be the technologies required, including the subscription cost for the identified games and computers.

Expected Outcomes and Implications: It is expected that the findings will contribute to the current literature on game-based learning and inform educational practices, providing educators and policymakers with insights into how to better support student achievement in mathematics.
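In the pre-test/post-test control group design above, a first descriptive look at the data compares how much each group improved. The Python sketch below assumes hypothetical scores for four students per group and computes only the difference in mean gain scores; it is not a significance test, and a real proposal would still need to name the inferential test it will use.

```python
from statistics import mean


def mean_gain(pre, post):
    """Average post-test minus pre-test score for one group."""
    if len(pre) != len(post):
        raise ValueError("each student needs both a pre-test and a post-test score")
    return mean(after - before for before, after in zip(pre, post))


# Hypothetical scores for four students per group (illustrative only).
game_pre, game_post = [52, 60, 48, 70], [61, 66, 55, 75]
control_pre, control_post = [55, 58, 50, 68], [57, 61, 51, 70]

game_gain = mean_gain(game_pre, game_post)           # gains 9, 6, 7, 5
control_gain = mean_gain(control_pre, control_post)  # gains 2, 3, 1, 2
print(f"game-based: {game_gain}, control: {control_gain}, difference: {game_gain - control_gain}")
```

Comparing gains rather than raw post-test scores accounts for groups that start at different levels, which matters in a quasi-experimental design where students are not randomly assigned.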

2. Psychology Research Proposals

See some real examples:

- A situational analysis of shared leadership in a self-managing team

- The effect of musical preference on running performance

- Relationship between self-esteem and disordered eating amongst adolescent females

Consider this hypothetical psychology research proposal:

The Effects of Mindfulness-Based Interventions on Stress Reduction in College Students

Abstract: This research proposal examines the impact of mindfulness-based interventions on stress reduction among college students, using a pre-test/post-test experimental design with both quantitative and qualitative data collection methods.

Introduction: College students face heightened stress levels during exam weeks, which can affect both their mental health and their test performance. This study explores the potential benefits of mindfulness-based interventions, such as meditation, as a way to reduce stress levels in the weeks leading up to exams.

Literature Review: Existing research has shown that mindfulness-based meditation can increase metacognition and decrease anxiety and stress. Existing literature has examined workplace, high school and general college-level applications. This study will contribute to the literature by exploring the effects of mindfulness specifically in the context of exam weeks.

Research Design and Methods: Participants (n = 234) will be randomly assigned either to an experimental group, receiving 10-minute mindfulness-based interventions 5 days per week, or to a control group, receiving no intervention. Data will be collected through self-report questionnaires measuring stress levels, semi-structured interviews exploring participants’ experiences, and students’ test scores.

Timeline: The study will begin three weeks before the students’ exam week and conclude after each student’s final exam. Data collection will occur at the beginning (pre-test of self-reported stress levels) and end (post-test) of the three weeks.

Expected Outcomes and Implications: The study aims to provide evidence supporting the effectiveness of mindfulness-based interventions in reducing stress among college students in the lead up to exams, with potential implications for mental health support and stress management programs on college campuses.
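The psychology example randomly assigns 234 participants to an experimental or a control group. The following is a minimal Python sketch of one way to do this: simple randomisation into two equal-sized arms by shuffling the participant list. The participant numbering and the seed value are hypothetical. Many trials instead use blocked or stratified randomisation, which this sketch does not implement.

```python
import random


def randomise(participant_ids, seed):
    """Shuffle participants, then split them into two arms of (near-)equal size."""
    rng = random.Random(seed)  # a recorded seed lets the allocation be reproduced and audited
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {
        "mindfulness": sorted(shuffled[:half]),
        "control": sorted(shuffled[half:]),
    }


# Participants numbered 1..234; the seed value is an arbitrary, hypothetical choice.
allocation = randomise(range(1, 235), seed=2024)
print(len(allocation["mindfulness"]), len(allocation["control"]))  # 117 117
```

Recording the seed in the proposal's methods section makes the allocation reproducible, which supports the neutrality the design is meant to demonstrate.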

3. Sociology Research Proposals

- Understanding emerging social movements: A case study of ‘Jersey in Transition’

- The interaction of health, education and employment in Western China

- Can we preserve lower-income affordable neighbourhoods in the face of rising costs?

Consider this hypothetical sociology research proposal:

The Impact of Social Media Usage on Interpersonal Relationships among Young Adults

Abstract: This research proposal investigates the effects of social media usage on interpersonal relationships among young adults, using a longitudinal mixed-methods approach with ongoing semi-structured interviews to collect qualitative data.

Introduction: Social media platforms have become a key medium for the development of interpersonal relationships, particularly for young adults. This study examines the potential positive and negative effects of social media usage on young adults’ relationships and development over time.

Literature Review: A preliminary review of relevant literature has demonstrated that social media usage is central to the development of a personal identity and of relationships with others who share subcultural interests. However, data also link it to mental health decline and deteriorating off-screen relationships. To date, the literature lacks important longitudinal data on these topics.

Research Design and Methods: Participants (n = 454) will be young adults aged 18-24. Ongoing self-report surveys will assess participants’ social media usage, relationship satisfaction, and communication patterns. A subset of participants will be selected for longitudinal in-depth interviews starting at age 18 and continuing for 5 years.

Timeline: The study will be conducted over a period of five years, including recruitment, data collection, analysis, and report writing.

Expected Outcomes and Implications: This study aims to provide insights into the complex relationship between social media usage and interpersonal relationships among young adults, potentially informing social policies and mental health support related to social media use.

4. Nursing Research Proposals

- Does Orthopaedic Pre-assessment clinic prepare the patient for admission to hospital?

- Nurses’ perceptions and experiences of providing psychological care to burns patients

- Registered psychiatric nurse’s practice with mentally ill parents and their children

Consider this hypothetical nursing research proposal:

The Influence of Nurse-Patient Communication on Patient Satisfaction and Health Outcomes following Emergency Cesareans

Abstract: This research will examine the impact of effective nurse-patient communication on patient satisfaction and health outcomes for women following c-sections, using a mixed-methods approach with patient surveys and semi-structured interviews.

Introduction: It has long been known that effective communication between nurses and patients is crucial for quality care. However, additional complications arise following emergency c-sections due to the interaction between new mothers’ changing roles and their recovery from surgery.

Literature Review: A review of the literature demonstrates the importance of nurse-patient communication, its impact on patient satisfaction, and potential links to health outcomes. However, communication between nurses and new mothers is less examined, and the specific experiences of those who have given birth via emergency c-section are to date unexamined.

Research Design and Methods: Participants will be patients in a hospital setting who have recently had an emergency c-section. A self-report survey will assess their satisfaction with nurse-patient communication and perceived health outcomes. A subset of participants will be selected for in-depth interviews to explore their experiences and perceptions of the communication with their nurses.

Timeline: The study will be conducted over a period of six months, including rolling recruitment, data collection, analysis, and report writing within the hospital.

Expected Outcomes and Implications: This study aims to provide evidence for the significance of nurse-patient communication in supporting new mothers who have had an emergency c-section. Recommendations will be presented for supporting nurses and midwives in improving outcomes for new mothers who had complications during birth.

5. Social Work Research Proposals

- Experiences of negotiating employment and caring responsibilities of fathers post-divorce

- Exploring kinship care in the north region of British Columbia

Consider this hypothetical social work research proposal:

The Role of a Family-Centered Intervention in Preventing Homelessness Among At-Risk Youth in a Working-Class Town in Northern England

Abstract: This research proposal investigates the effectiveness of a family-centered intervention provided by a local council area in preventing homelessness among at-risk youth. This case study will use a mixed-methods approach with program evaluation data and semi-structured interviews to collect quantitative and qualitative data.

Introduction: Homelessness among youth remains a significant social issue. This study aims to assess the effectiveness of family-centered interventions in addressing this problem and identify factors that contribute to successful prevention strategies.

Literature Review: A review of the literature has demonstrated several key factors contributing to youth homelessness including lack of parental support, lack of social support, and low levels of family involvement. It also demonstrates the important role of family-centered interventions in addressing this issue. Drawing on current evidence, this study explores the effectiveness of one such intervention in preventing homelessness among at-risk youth in a working-class town in Northern England.

Research Design and Methods: The study will evaluate a new family-centered intervention program targeting at-risk youth and their families. Quantitative data on program outcomes, including housing stability and family functioning, will be collected through program records and evaluation reports. Semi-structured interviews with program staff, participants, and relevant stakeholders will provide qualitative insights into the factors contributing to program success or failure.

Timeline: The study will be conducted over a period of six months, including recruitment, data collection, analysis, and report writing.

Budget: Expenses include access to program evaluation data, interview materials, data analysis software, and any related travel costs for in-person interviews.

Expected Outcomes and Implications: This study aims to provide evidence for the effectiveness of family-centered interventions in preventing youth homelessness, potentially informing the expansion of or necessary changes to social work practices in Northern England.

Research Proposal Template


This is a template for a 2500-word research proposal. You may find it difficult to squeeze everything into this word count, but it’s a common word count for Honors and MA-level dissertations.

Your research proposal is where you really get going with your study. I’d strongly recommend working closely with your teacher in developing a research proposal that’s consistent with the requirements and culture of your institution, as in my experience it varies considerably. The above template is from my own courses that walk students through research proposals in a British School of Education.

Chris Drew (PhD)

Dr. Chris Drew is the founder of the Helpful Professor. He holds a PhD in education and has published over 20 articles in scholarly journals. He is the former editor of the Journal of Learning Development in Higher Education.




How To Write A Research Proposal – Step-by-Step [Template]


How To Write a Research Proposal

Writing a research proposal involves several steps that together produce a well-structured and comprehensive document. Here is an explanation of each step:

1. Title and Abstract:

- Choose a concise and descriptive title that reflects the essence of your research.

- Write an abstract summarizing your research question, objectives, methodology, and expected outcomes. It should provide a brief overview of your proposal.

2. Introduction:

- Provide an introduction to your research topic, highlighting its significance and relevance.

- Clearly state the research problem or question you aim to address.

- Discuss the background and context of the study, including previous research in the field.

3. Research Objectives:

- Outline the specific objectives or aims of your research. These objectives should be clear, achievable, and aligned with the research problem.

4. Literature Review:

- Conduct a comprehensive review of relevant literature and studies related to your research topic.

- Summarize key findings, identify gaps, and highlight how your research will contribute to existing knowledge.

5. Methodology:

- Describe the research design and methodology you plan to employ to address your research objectives.

- Explain the data collection methods, instruments, and analysis techniques you will use.

- Justify why the chosen methods are appropriate for your research.

6. Timeline:

- Create a timeline or schedule that outlines the major milestones and activities of your research project.

- Break down the research process into smaller tasks and estimate the time required for each task.

7. Resources:

- Identify the resources needed for your research, such as access to specific databases, equipment, or funding.

- Explain how you will acquire or utilize these resources to carry out your research effectively.

8. Ethical Considerations:

- Discuss any ethical issues that may arise during your research and explain how you plan to address them.

- If your research involves human subjects, explain how you will ensure their informed consent and privacy.

9. Expected Outcomes and Significance:

- Clearly state the expected outcomes or results of your research.

- Highlight the potential impact and significance of your research in advancing knowledge or addressing practical issues.

10. References:

- Provide a list of all the references cited in your proposal, following a consistent citation style (e.g., APA, MLA).

11. Appendices:

- Include any additional supporting materials, such as survey questionnaires, interview guides, or data analysis plans.

Research Proposal Format

The format of a research proposal may vary depending on the specific requirements of the institution or funding agency. However, the following is a commonly used format for a research proposal:

1. Title Page:

- Include the title of your research proposal, your name, your affiliation or institution, and the date.

2. Abstract:

- Provide a brief summary of your research proposal, highlighting the research problem, objectives, methodology, and expected outcomes.

3. Introduction:

- Introduce the research topic and provide background information.

- State the research problem or question you aim to address.

- Explain the significance and relevance of the research.

4. Literature Review:

- Review relevant literature and studies related to your research topic.

- Summarize key findings and identify gaps in the existing knowledge.

- Explain how your research will contribute to filling those gaps.

5. Research Objectives:

- Clearly state the specific objectives or aims of your research.

- Ensure that the objectives are clear, focused, and aligned with the research problem.

6. Methodology:

- Describe the research design and methodology you plan to use.

- Explain the data collection methods, instruments, and analysis techniques.

- Justify why the chosen methods are appropriate for your research.

7. Timeline:

8. Resources:

- Identify the resources your research requires and explain how you will acquire or use them effectively.

9. Ethical Considerations:

- If applicable, explain how you will ensure informed consent and protect the privacy of research participants.

10. Expected Outcomes and Significance:

11. References:

12. Appendices:

Research Proposal Template

Here’s a template for a research proposal:

1. Introduction:

2. Literature Review:

3. Research Objectives:

4. Methodology:

5. Timeline:

6. Resources:

7. Ethical Considerations:

8. Expected Outcomes and Significance:

9. References:

10. Appendices:

Research Proposal Sample

Title: The Impact of Online Education on Student Learning Outcomes: A Comparative Study

1. Introduction

Online education has gained significant prominence in recent years, particularly as a result of the COVID-19 pandemic. This research proposal aims to investigate the impact of online education on student learning outcomes by comparing them with the outcomes of traditional face-to-face instruction. The study will explore various aspects of online education, such as instructional methods, student engagement, and academic performance, to provide insights into the effectiveness of online learning.

2. Objectives

The main objectives of this research are as follows:

- To compare student learning outcomes between online and traditional face-to-face education.

- To examine the factors influencing student engagement in online learning environments.

- To assess the effectiveness of different instructional methods employed in online education.

- To identify challenges and opportunities associated with online education and suggest recommendations for improvement.

3. Methodology

3.1 Study Design

This research will use a mixed-methods approach to gather both quantitative and qualitative data. The study will include the following components:

3.2 Participants

The research will involve undergraduate students from two universities, one offering online education and the other providing face-to-face instruction. A total of 500 students (250 from each university) will be selected randomly to participate in the study.

3.3 Data Collection

The research will employ the following data collection methods:

- Quantitative: Pre- and post-assessments will be conducted to measure students’ learning outcomes. Data on student demographics and academic performance will also be collected from university records.

- Qualitative: Focus group discussions and individual interviews will be conducted with students to gather their perceptions and experiences regarding online education.

3.4 Data Analysis

Quantitative data will be analyzed using statistical software, employing descriptive statistics, t-tests, and regression analysis. Qualitative data will be transcribed, coded, and analyzed thematically to identify recurring patterns and themes.

4. Ethical Considerations

The study will adhere to ethical guidelines, ensuring the privacy and confidentiality of participants. Informed consent will be obtained, and participants will have the right to withdraw from the study at any time.

5. Significance and Expected Outcomes

This research will contribute to the existing literature by providing empirical evidence on the impact of online education on student learning outcomes. The findings will help educational institutions and policymakers make informed decisions about incorporating online learning methods and improving the quality of online education. Moreover, the study will identify potential challenges and opportunities related to online education and offer recommendations for enhancing student engagement and overall learning outcomes.

6. Timeline

The proposed research will be conducted over a period of 12 months, including data collection, analysis, and report writing.

7. Budget

The estimated budget for this research includes expenses related to data collection, software licenses, participant compensation, and research assistance. A detailed budget breakdown will be provided in the final research plan.

8. Conclusion

This research proposal aims to investigate the impact of online education on student learning outcomes through a comparative study with traditional face-to-face instruction. By exploring various dimensions of online education, this research will provide valuable insights into the effectiveness and challenges associated with online learning. The findings will contribute to the ongoing discourse on educational practices and help shape future strategies for maximizing student learning outcomes in online education settings.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer




Evaluation of research proposals by peer review panels: broader panels for broader assessments?


Rebecca Abma-Schouten, Joey Gijbels, Wendy Reijmerink, Ingeborg Meijer, Evaluation of research proposals by peer review panels: broader panels for broader assessments?, Science and Public Policy , Volume 50, Issue 4, August 2023, Pages 619–632, https://doi.org/10.1093/scipol/scad009


Panel peer review is widely used to decide which research proposals receive funding. Through this exploratory observational study at two large biomedical and health research funders in the Netherlands, we gain insight into how scientific quality and societal relevance are discussed in panel meetings. We explore, in ten review panel meetings of biomedical and health funding programmes, how panel composition and formal assessment criteria affect the arguments used. We observe that more scientific arguments are used than arguments related to societal relevance and expected impact. Also, more diverse panels result in a wider range of arguments, largely for the benefit of arguments related to societal relevance and impact. We discuss how funders can contribute to the quality of peer review by creating a shared conceptual framework that better defines research quality and societal relevance. We also contribute to a further understanding of the role of diverse peer review panels.

Scientific biomedical and health research is often supported by project or programme grants from public funding agencies such as governmental research funders and charities. Research funders primarily rely on peer review, often a combination of independent written review and discussion in a peer review panel, to inform their funding decisions. Peer review panels have the difficult task of integrating and balancing the various assessment criteria to select and rank the eligible proposals. With the increasing emphasis on societal benefit and being responsive to societal needs, the assessment of research proposals ought to include broader assessment criteria, including both scientific quality and societal relevance, and a broader perspective on relevant peers. This results in new practices of including non-scientific peers in review panels ( Del Carmen Calatrava Moreno et al. 2019 ; Den Oudendammer et al. 2019 ; Van den Brink et al. 2016 ). Relevant peers, in the context of biomedical and health research, include, for example, health-care professionals, (healthcare) policymakers, and patients as the (end-)users of research.