NCBI Bookshelf. A service of the National Library of Medicine, National Institutes of Health.

StatPearls [Internet]. Treasure Island (FL): StatPearls Publishing; 2024 Jan-.

Type I and Type II Errors and Statistical Power

Jacob Shreffler; Martin R. Huecker.

Last Update: March 13, 2023.

- Definition/Introduction

Healthcare professionals, when determining the impact of patient interventions in clinical studies or research endeavors that provide evidence for clinical practice, must distinguish well-designed studies with valid results from studies with research design or statistical flaws. This article will help providers determine the likelihood of type I or type II errors and judge adequacy of statistical power. Then one can decide whether or not the evidence provided should be implemented in practice or used to guide future studies.

- Issues of Concern

Having an understanding of the concepts discussed in this article will allow healthcare providers to accurately and thoroughly assess the results and validity of medical research. Without an understanding of type I and II errors and power analysis, clinicians could make poor clinical decisions without evidence to support them.

Type I and Type II Errors

Type I and Type II errors can lead to confusion as providers assess medical literature. A vignette that illustrates the errors is the Boy Who Cried Wolf. First, the citizens commit a type I error by believing there is a wolf when there is not. Second, the citizens commit a type II error by believing there is no wolf when there is one.

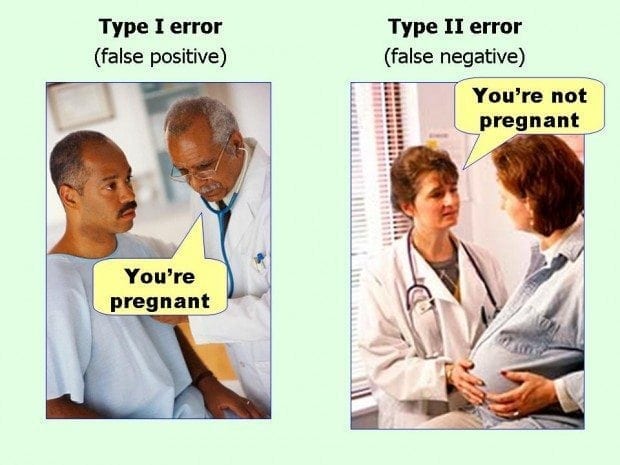

A type I error occurs in research when we reject the null hypothesis and erroneously state that the study found significant differences when there was, in fact, no difference. In other words, it is equivalent to saying that the groups or variables differ when they do not, that is, a false positive. [1] An example of a research hypothesis is below:

Drug 23 will significantly reduce symptoms associated with Disease A compared to Drug 22.

For our example, if we were to state that Drug 23 significantly reduced symptoms of Disease A compared to Drug 22 when it did not, this would be a type I error. Committing a type I error can be very grave in specific scenarios. For example, if we did move ahead with Drug 23 based on our research findings even though there was actually no difference between groups, and the drug costs significantly more money for patients or has more side effects, then we would raise healthcare costs, cause iatrogenic harm, and not improve clinical outcomes. If a p-value is used to examine type I error, the lower the p-value threshold required for significance, the lower the likelihood that a type I error occurs.

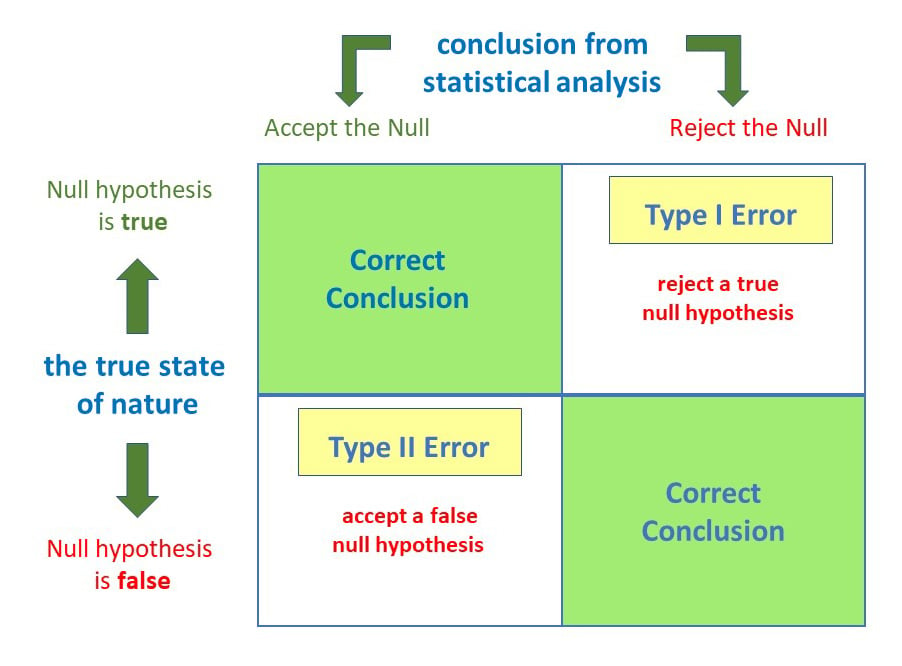

A type II error occurs when we declare no differences or associations between study groups when, in fact, there were. [2] As with type I errors, type II errors can cause problems in certain circumstances. Picture an example with a new, less invasive surgical technique that was developed and tested in comparison to the more invasive standard care. Researchers would seek to show no differences between patients receiving the two treatment methods in health outcomes (noninferiority study). If, however, the less invasive procedure resulted in less favorable health outcomes and the study failed to detect this, it would be a severe error. Table 1 provides a depiction of type I and type II errors.

(See Type I and Type II Errors and Statistical Power Table 1)

A concept closely aligned to type II error is statistical power. Statistical power is a crucial part of the research process that is most valuable in the design and planning phases of studies, though it requires assessment when interpreting results. Power is the ability to correctly reject a null hypothesis that is indeed false. [3] Unfortunately, many studies lack sufficient power and should be presented as having inconclusive findings. [4] Power is the probability of a study to make correct decisions or detect an effect when one exists. [3] [5]

The power of a statistical test is dependent on: the level of significance set by the researcher, the sample size, and the effect size or the extent to which the groups differ based on treatment. [3] Statistical power is critical for healthcare providers to decide how many patients to enroll in clinical studies. [4] Power is strongly associated with sample size; when the sample size is large, power will generally not be an issue. [6] Thus, when conducting a study with a low sample size, and ultimately low power, researchers should be aware of the likelihood of a type II error. The greater the N within a study, the more likely it is that a researcher will reject the null hypothesis. The concern with this approach is that a very large sample could show a statistically significant finding due to the ability to detect small differences in the dataset; thus, utilization of p values alone based on a large sample can be troublesome.
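
The dependence of power on significance level, sample size, and effect size can be made concrete with a short calculation. The following is a minimal sketch rather than a substitute for a dedicated power-analysis package. It assumes a two-sided, two-sample z-test with equal group sizes and a standardized effect size (difference in means divided by a common, known standard deviation). The function name `z_test_power` and the example values are illustrative assumptions, not taken from the cited sources.

```python
from math import sqrt
from statistics import NormalDist


def z_test_power(effect_size, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-sample z-test (equal groups).

    effect_size is the standardized mean difference (known SD is assumed).
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)      # rejection threshold
    shift = effect_size * sqrt(n_per_group / 2)     # expected z when H1 is true
    # Probability that the test statistic lands in either rejection tail.
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)


if __name__ == "__main__":
    for d in (0.2, 0.5, 0.8):
        for n in (20, 64, 200):
            print(f"effect size {d}, n per group {n}: power = {z_test_power(d, n):.2f}")
```

Under these assumptions, a standardized effect of 0.5 with 64 participants per group gives power of roughly 0.8, while the same effect with 20 per group falls well short of that level.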

It is essential to recognize that power can be deemed adequate with a smaller sample if the effect size is large. [6] What is an acceptable level of power? Many researchers agree upon a power of 80% or higher as credible enough for determining the actual effects of research studies. [3] Ultimately, studies with lower power will find fewer true effects than studies with higher power; thus, clinicians should be aware of the likelihood of a power issue resulting in a type II error. [7] Unfortunately, many researchers, and providers who assess medical literature, do not scrutinize power analyses. Studies with low power may inhibit future work as they lack the ability to detect actual effects with variables; this could lead to potential impacts remaining undiscovered or noted as not effective when they may be. [7]

Medical researchers should invest time in conducting power analyses to sufficiently distinguish a difference or association. [3] Luckily, there are many tables of power values as well as statistical software packages that can help to determine study power and guide researchers in study design and analysis. If choosing to utilize statistical software to calculate power, the following are necessary for entry: the predetermined alpha level, the proposed sample size, and the effect size the investigator(s) is aiming to detect. [2] By utilizing power calculations on the front end, researchers can determine an adequate sample size to detect an effect and, based on the statistical findings, determine whether sufficient power was actually observed. [2]
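
As a rough illustration of a front-end power calculation, the sketch below solves for the group size needed to reach a target power. It is an approximation under stated assumptions: a two-sided, two-sample z-test, equal group sizes, and a standardized effect size. The function name `required_n_per_group` and its defaults are hypothetical choices for this example, and dedicated software that uses the t distribution will typically return slightly larger numbers.

```python
from math import ceil
from statistics import NormalDist


def required_n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate equal group size needed for the target power (z approximation)."""
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_power = std_normal.inv_cdf(power)          # quantile for the target power
    n = 2 * ((z_alpha + z_power) / effect_size) ** 2
    return ceil(n)


if __name__ == "__main__":
    for d in (0.2, 0.5, 0.8):
        print(f"effect size {d}: about {required_n_per_group(d)} per group")
```

The smaller the effect the investigators hope to detect, the more participants are needed, which is why the target effect size has to be fixed before enrollment begins.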

- Clinical Significance

By limiting type I and type II errors, healthcare providers can ensure that decisions based on research outputs are safe for patients. [8] Additionally, while power analysis can be time-consuming, making inferences from low-powered studies can be inaccurate and irresponsible. By relying on adequately designed studies that balance the likelihood of type I and type II errors, and by understanding power, providers and researchers can determine which studies are clinically significant and should, therefore, be implemented into practice.

- Nursing, Allied Health, and Interprofessional Team Interventions

All physicians, nurses, pharmacists, and other healthcare professionals should strive to understand the concepts of Type I and II errors and power. These individuals should maintain the ability to review and incorporate new literature for evidence-based and safe care. They will also more effectively work in teams with other professionals.

Type I and Type II Errors and Statistical Power Table 1 Contributed by Martin Huecker, MD and Jacob Shreffler, PhD

Disclosure: Jacob Shreffler declares no relevant financial relationships with ineligible companies.

Disclosure: Martin Huecker declares no relevant financial relationships with ineligible companies.

This book is distributed under the terms of the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0) ( http://creativecommons.org/licenses/by-nc-nd/4.0/ ), which permits others to distribute the work, provided that the article is not altered or used commercially. You are not required to obtain permission to distribute this article, provided that you credit the author and journal.

- Cite this Page Shreffler J, Huecker MR. Type I and Type II Errors and Statistical Power. [Updated 2023 Mar 13]. In: StatPearls [Internet]. Treasure Island (FL): StatPearls Publishing; 2024 Jan-.

Type 1 and Type 2 Errors in Statistics

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

A statistically significant result cannot prove that a research hypothesis is correct (which implies 100% certainty). Because a p-value is based on probabilities, there is always a chance of making an incorrect conclusion regarding accepting or rejecting the null hypothesis (H0).

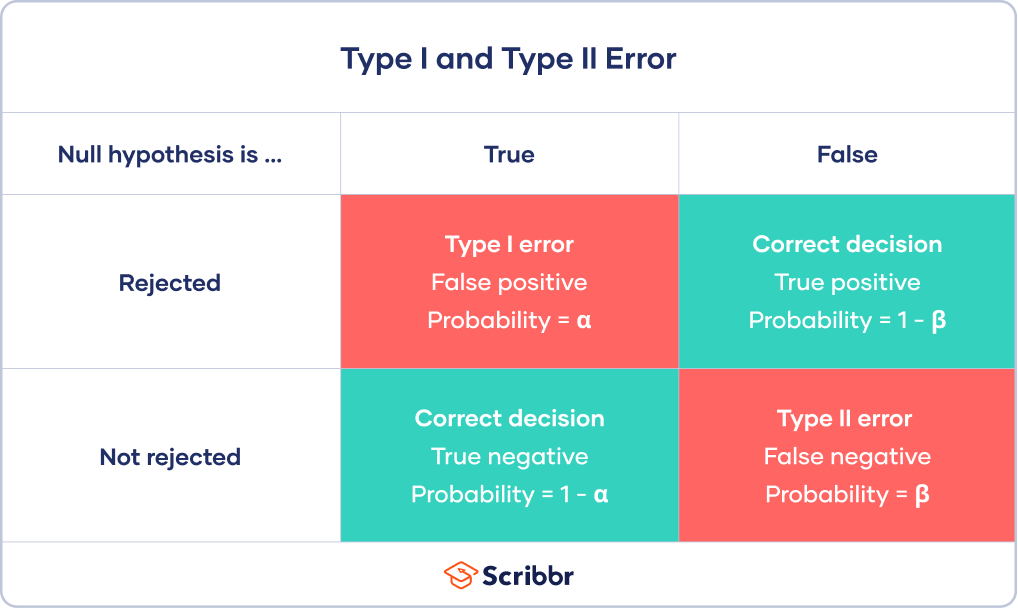

Anytime we make a decision using statistics, there are four possible outcomes, with two representing correct decisions and two representing errors.

The chances of committing these two types of errors are inversely related: for a given sample size and effect size, decreasing the type I error rate increases the type II error rate, and vice versa.

As the significance level (α) increases, it becomes easier to reject the null hypothesis, decreasing the chance of missing a real effect (Type II error, β). If the significance level (α) goes down, it becomes harder to reject the null hypothesis, increasing the chance of missing an effect while reducing the risk of falsely finding one (Type I error).
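
The trade-off can be sketched numerically. The example below is an illustration under assumptions that are not part of the article: a one-sided, two-sample z-test, a fixed standardized effect of 0.5, and 30 participants per group. The function name `type2_error_rate` is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def type2_error_rate(alpha, effect_size=0.5, n_per_group=30):
    """Beta for a one-sided, two-sample z-test with a fixed design."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha)        # one-sided rejection threshold
    shift = effect_size * sqrt(n_per_group / 2)   # expected z when H1 is true
    return std_normal.cdf(z_crit - shift)         # chance of missing the effect


if __name__ == "__main__":
    for alpha in (0.01, 0.05, 0.10):
        print(f"alpha = {alpha:.2f} -> beta = {type2_error_rate(alpha):.2f}")
```

With the design held fixed, each increase in α lowers β, and each decrease in α raises it.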

Type I error

A type 1 error is also known as a false positive and occurs when a researcher incorrectly rejects a true null hypothesis. Simply put, it’s a false alarm.

This means that you report that your findings are significant when they have occurred by chance.

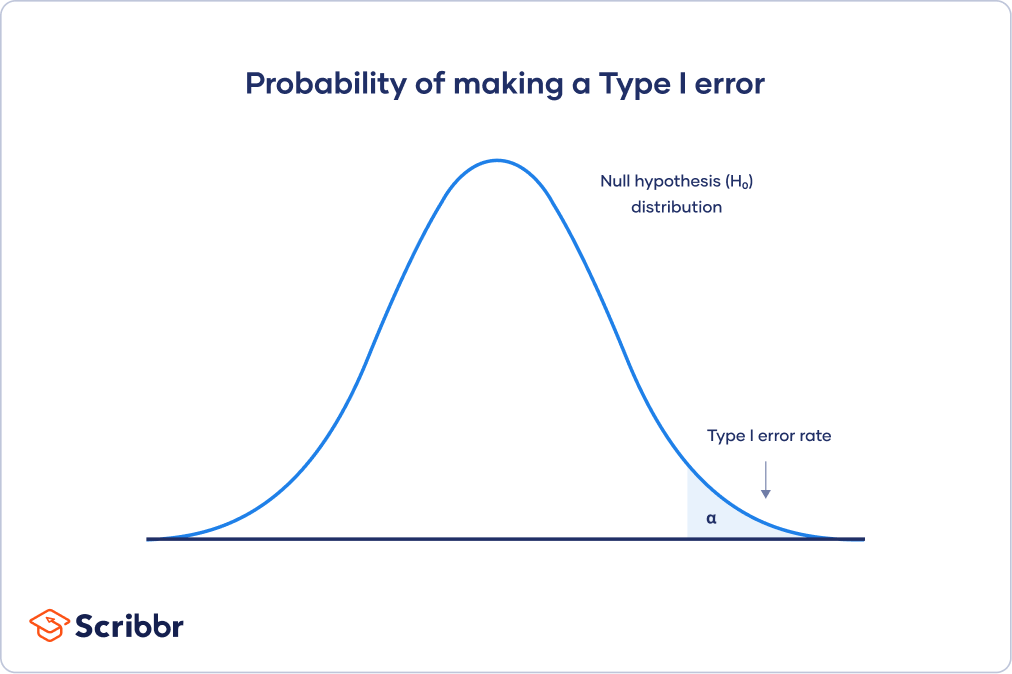

The probability of making a type 1 error is represented by your alpha level (α), the p-value threshold below which you reject the null hypothesis.

Setting α = 0.05 means you are willing to accept a 5% chance of rejecting the null hypothesis when it is actually true.

You can reduce your risk of committing a type 1 error by setting a lower alpha level (like α = 0.01). For example, an alpha level of 0.01 would mean there is a 1% chance of committing a Type I error when the null hypothesis is true.

However, using a lower value for alpha means that you will be less likely to detect a true difference if one really exists (thus risking a type II error).
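
One way to see that α is the long-run false-positive rate is to simulate many studies in which the null hypothesis is true. The sketch below is a simulation under assumptions chosen for this example: both groups are drawn from the same normal distribution with a known standard deviation of 1, and a two-sided z-test is used. The function name `simulate_type1_rate`, the seed, and the number of trials are hypothetical.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean


def simulate_type1_rate(alpha=0.05, n_per_group=30, trials=5000, seed=1):
    """Fraction of simulated null studies that (wrongly) reject H0."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    se = sqrt(2 / n_per_group)  # SE of the mean difference when sigma = 1
    false_positives = 0
    for _ in range(trials):
        # Both groups come from the same population, so H0 is true.
        group_a = [rng.gauss(0, 1) for _ in range(n_per_group)]
        group_b = [rng.gauss(0, 1) for _ in range(n_per_group)]
        z = (fmean(group_a) - fmean(group_b)) / se
        if abs(z) > z_crit:
            false_positives += 1
    return false_positives / trials


if __name__ == "__main__":
    for alpha in (0.05, 0.01):
        print(f"alpha = {alpha}: simulated Type I rate = {simulate_type1_rate(alpha):.3f}")
```

The simulated rate should land close to the chosen α, and lowering α lowers the share of false alarms.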

Scenario: Drug Efficacy Study

Imagine a pharmaceutical company is testing a new drug, named “MediCure”, to determine if it’s more effective than a placebo at reducing fever. They run an experiment with two groups: one receives MediCure, and the other receives a placebo.

- Null Hypothesis (H0) : MediCure is no more effective at reducing fever than the placebo.

- Alternative Hypothesis (H1) : MediCure is more effective at reducing fever than the placebo.

After conducting the study and analyzing the results, the researchers found a p-value of 0.04.

If they use an alpha (α) level of 0.05, this p-value is considered statistically significant, leading them to reject the null hypothesis and conclude that MediCure is more effective than the placebo.

However, MediCure has no actual effect, and the observed difference was due to random variation or some other confounding factor. In this case, the researchers have incorrectly rejected a true null hypothesis.

Error : The researchers have made a Type 1 error by concluding that MediCure is more effective when it isn’t.
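
The decision rule used in this scenario can be written as a one-line comparison. The helper below is a hypothetical illustration (the name `decide` is not from the article). It shows that the same p-value of 0.04 leads to rejection at α = 0.05 but not at α = 0.01.

```python
def decide(p_value, alpha=0.05):
    """Return the test decision for a given p-value and significance level."""
    return "reject H0" if p_value < alpha else "fail to reject H0"


if __name__ == "__main__":
    print(decide(0.04, alpha=0.05))  # reject H0
    print(decide(0.04, alpha=0.01))  # fail to reject H0
```

The rule only compares numbers; it cannot tell whether the null hypothesis is actually true, which is why a rejection can still be a Type 1 error.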

Implications

Resource Allocation : Making a Type I error can lead to wastage of resources. If a business believes a new strategy is effective when it’s not (based on a Type I error), they might allocate significant financial and human resources toward that ineffective strategy.

Unnecessary Interventions : In medical trials, a Type I error might lead to the belief that a new treatment is effective when it isn’t. As a result, patients might undergo unnecessary treatments, risking potential side effects without any benefit.

Reputation and Credibility : For researchers, making repeated Type I errors can harm their professional reputation. If they frequently claim groundbreaking results that are later refuted, their credibility in the scientific community might diminish.

Type II error

A type 2 error (or false negative) happens when you fail to reject the null hypothesis when it should actually be rejected.

Here, a researcher concludes there is not a significant effect when there really is one.

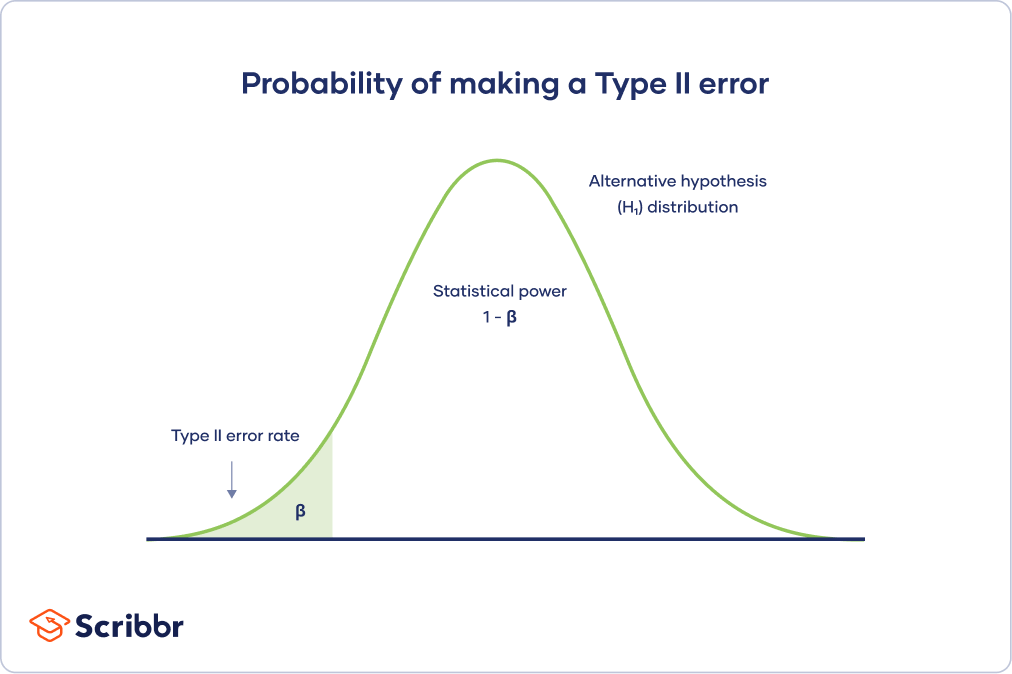

The probability of making a type II error is called Beta (β), which is related to the power of the statistical test (power = 1 – β). You can decrease your risk of committing a type II error by ensuring your test has enough power.

You can do this by ensuring your sample size is large enough to detect a practical difference when one truly exists.

Scenario: Efficacy of a New Teaching Method

Educational psychologists are investigating the potential benefits of a new interactive teaching method, named “EduInteract”, which utilizes virtual reality (VR) technology to teach history to middle school students.

They hypothesize that this method will lead to better retention and understanding compared to the traditional textbook-based approach.

- Null Hypothesis (H0) : The EduInteract VR teaching method does not result in significantly better retention and understanding of history content than the traditional textbook method.

- Alternative Hypothesis (H1) : The EduInteract VR teaching method results in significantly better retention and understanding of history content than the traditional textbook method.

The researchers designed an experiment where one group of students learns a history module using the EduInteract VR method, while a control group learns the same module using a traditional textbook.

After a week, the students’ retention and understanding are tested using a standardized assessment.

Upon analyzing the results, the psychologists found a p-value of 0.06. Using an alpha (α) level of 0.05, this p-value isn’t statistically significant.

Therefore, they fail to reject the null hypothesis and conclude that the EduInteract VR method isn’t more effective than the traditional textbook approach.

However, let’s assume that in the real world, the EduInteract VR truly enhances retention and understanding, but the study failed to detect this benefit due to reasons like small sample size, variability in students’ prior knowledge, or perhaps the assessment wasn’t sensitive enough to detect the nuances of VR-based learning.

Error : By concluding that the EduInteract VR method isn’t more effective than the traditional method when it is, the researchers have made a Type 2 error.

This could prevent schools from adopting a potentially superior teaching method that might benefit students’ learning experiences.

Missed Opportunities : A Type II error can lead to missed opportunities for improvement or innovation. For example, in education, if a more effective teaching method is overlooked because of a Type II error, students might miss out on a better learning experience.

Potential Risks : In healthcare, a Type II error might mean overlooking a harmful side effect of a medication because the research didn’t detect its harmful impacts. As a result, patients might continue using a harmful treatment.

Stagnation : In the business world, making a Type II error can result in continued investment in outdated or less efficient methods. This can lead to stagnation and the inability to compete effectively in the marketplace.

How do Type I and Type II errors relate to psychological research and experiments?

Type I errors are like false alarms, while Type II errors are like missed opportunities. Both errors can impact the validity and reliability of psychological findings, so researchers strive to minimize them to draw accurate conclusions from their studies.

How does sample size influence the likelihood of Type I and Type II errors in psychological research?

Sample size in psychological research mainly influences the likelihood of Type II errors. The Type I error rate is fixed by the chosen significance level (α), so a larger sample does not by itself make researchers less likely to mistakenly find a significant effect when there isn’t one.

A larger sample size increases the chances of detecting true effects, reducing the likelihood of Type II errors.
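
A rough calculation can show how sample size enters each error rate. The sketch below assumes a two-sided, two-sample z-test with a known standard deviation, α = 0.05, and a hypothetical true effect of 0.3 standard deviations. The function name `rejection_probability` and all numbers are illustrative assumptions.

```python
from math import sqrt
from statistics import NormalDist


def rejection_probability(true_effect, n_per_group, alpha=0.05):
    """Chance a two-sided, two-sample z-test rejects H0 for a given true effect."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    shift = true_effect * sqrt(n_per_group / 2)
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)


if __name__ == "__main__":
    for n in (10, 50, 200):
        type1 = rejection_probability(0.0, n)      # H0 true: false-positive rate
        type2 = 1 - rejection_probability(0.3, n)  # H0 false: miss rate
        print(f"n = {n}: Type I rate = {type1:.3f}, Type II rate = {type2:.3f}")
```

Under these assumptions, the Type I rate is held at the chosen α for every sample size, while the Type II rate shrinks as the sample grows.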

Are there any ethical implications associated with Type I and Type II errors in psychological research?

Yes, there are ethical implications associated with Type I and Type II errors in psychological research.

Type I errors may lead to false positive findings, resulting in misleading conclusions and potentially wasting resources on ineffective interventions. This can harm individuals who are falsely diagnosed or receive unnecessary treatments.

Type II errors, on the other hand, may result in missed opportunities to identify important effects or relationships, leading to a lack of appropriate interventions or support. This can also have negative consequences for individuals who genuinely require assistance.

Therefore, minimizing these errors is crucial for ethical research and ensuring the well-being of participants.

Further Information

- Publication manual of the American Psychological Association

- Statistics for Psychology Book Download

Type I & Type II Errors | Differences, Examples, Visualizations

Published on 18 January 2021 by Pritha Bhandari. Revised on 2 February 2023.

In statistics, a Type I error is a false positive conclusion, while a Type II error is a false negative conclusion.

Making a statistical decision always involves uncertainties, so the risks of making these errors are unavoidable in hypothesis testing.

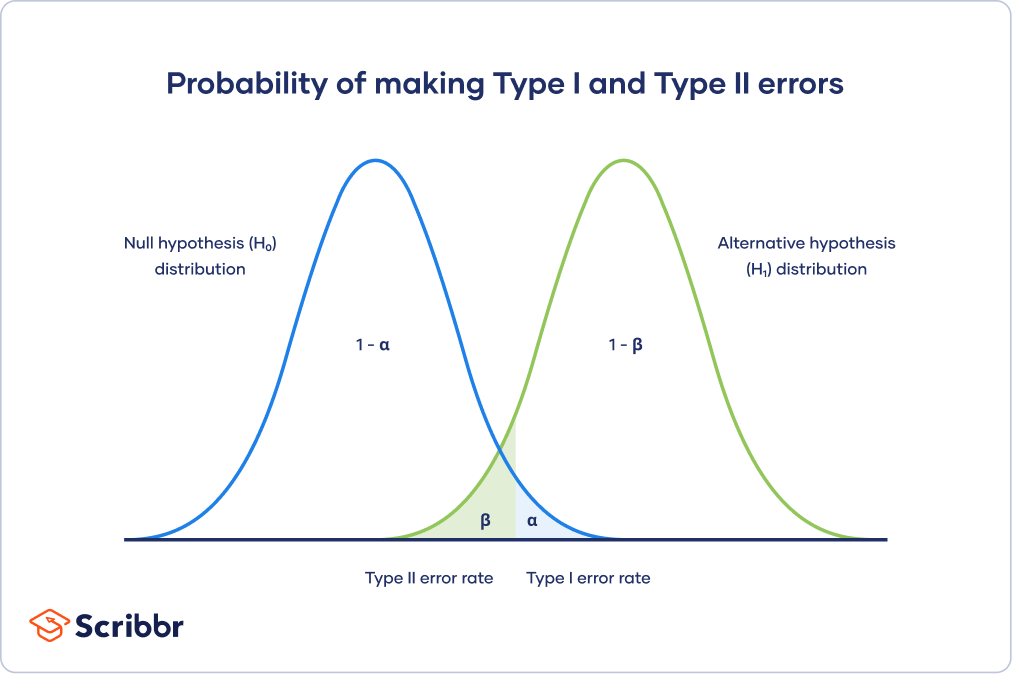

The probability of making a Type I error is the significance level, or alpha (α), while the probability of making a Type II error is beta (β). These risks can be minimized through careful planning in your study design.

- Type I error (false positive) : the test result says you have coronavirus, but you actually don’t.

- Type II error (false negative) : the test result says you don’t have coronavirus, but you actually do.

Using hypothesis testing, you can make decisions about whether your data support or refute your research predictions with null and alternative hypotheses.

Hypothesis testing starts with the assumption of no difference between groups or no relationship between variables in the population; this is the null hypothesis. It’s always paired with an alternative hypothesis, which is your research prediction of an actual difference between groups or a true relationship between variables.

For example, in a study testing whether a new drug alleviates symptoms of a disease:

- The null hypothesis (H0) is that the new drug has no effect on symptoms of the disease.

- The alternative hypothesis (H1) is that the drug is effective for alleviating symptoms of the disease.

Then, you decide whether the null hypothesis can be rejected based on your data and the results of a statistical test. Since these decisions are based on probabilities, there is always a risk of making the wrong conclusion.

- If your results show statistical significance, that means they are very unlikely to occur if the null hypothesis is true. In this case, you would reject your null hypothesis. But sometimes, this may actually be a Type I error.

- If your findings do not show statistical significance, they have a high chance of occurring if the null hypothesis is true. Therefore, you fail to reject your null hypothesis. But sometimes, this may be a Type II error.

A Type I error means rejecting the null hypothesis when it’s actually true. It means concluding that results are statistically significant when, in reality, they came about purely by chance or because of unrelated factors.

The risk of committing this error is the significance level (alpha or α) you choose. That’s a value that you set at the beginning of your study to assess the statistical probability of obtaining your results (p value).

The significance level is usually set at 0.05 or 5%. This means that your results only have a 5% chance of occurring, or less, if the null hypothesis is actually true.

If the p value of your test is lower than the significance level, it means your results are statistically significant and consistent with the alternative hypothesis. If your p value is higher than the significance level, then your results are considered statistically non-significant.

To reduce the Type I error probability, you can simply set a lower significance level.

Type I error rate

The null hypothesis distribution curve below shows the probabilities of obtaining all possible results if the study were repeated with new samples and the null hypothesis were true in the population.

At the tail end, the shaded area represents alpha. It’s also called a critical region in statistics.

If your results fall in the critical region of this curve, they are considered statistically significant and the null hypothesis is rejected. However, this is a false positive conclusion, because the null hypothesis is actually true in this case!

A Type II error means not rejecting the null hypothesis when it’s actually false. This is not quite the same as “accepting” the null hypothesis, because hypothesis testing can only tell you whether to reject the null hypothesis.

Instead, a Type II error means failing to conclude there was an effect when there actually was. In reality, your study may not have had enough statistical power to detect an effect of a certain size.

Power is the extent to which a test can correctly detect a real effect when there is one. A power level of 80% or higher is usually considered acceptable.

The risk of a Type II error is inversely related to the statistical power of a study. The higher the statistical power, the lower the probability of making a Type II error.

Statistical power is determined by:

- Size of the effect : Larger effects are more easily detected.

- Measurement error : Systematic and random errors in recorded data reduce power.

- Sample size : Larger samples reduce sampling error and increase power.

- Significance level : Increasing the significance level increases power.

To (indirectly) reduce the risk of a Type II error, you can increase the sample size or the significance level.

Type II error rate

The alternative hypothesis distribution curve below shows the probabilities of obtaining all possible results if the study were repeated with new samples and the alternative hypothesis were true in the population.

The Type II error rate is beta (β), represented by the shaded area on the left side. The remaining area under the curve represents statistical power, which is 1 – β.

Increasing the statistical power of your test directly decreases the risk of making a Type II error.
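
The areas described above can be computed directly. The sketch below is an illustration under assumptions of its own: a one-sided z-test whose standardized test statistic is normal with mean 0 under the null hypothesis and mean 2.5 under the alternative. The function name `beta_and_power` and the value 2.5 are hypothetical.

```python
from statistics import NormalDist


def beta_and_power(mean_under_h1, alpha=0.05):
    """Beta and power for a one-sided z-test on a standardized statistic."""
    null_dist = NormalDist(0, 1)               # sampling distribution if H0 is true
    alt_dist = NormalDist(mean_under_h1, 1)    # sampling distribution if H1 is true
    critical_value = null_dist.inv_cdf(1 - alpha)
    beta = alt_dist.cdf(critical_value)        # area of the H1 curve left of the cutoff
    return beta, 1 - beta


if __name__ == "__main__":
    beta, power = beta_and_power(2.5)
    print(f"beta = {beta:.3f}, power = {power:.3f}")
```

Moving the alternative distribution further from the null (a larger effect or a larger sample) leaves less of its area to the left of the cutoff, so β falls and power rises.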

The Type I and Type II error rates influence each other. That’s because the significance level (the Type I error rate) affects statistical power, which is inversely related to the Type II error rate.

This means there’s an important tradeoff between Type I and Type II errors:

- Setting a lower significance level decreases a Type I error risk, but increases a Type II error risk.

- Increasing the power of a test by raising the significance level decreases a Type II error risk, but increases a Type I error risk.

This trade-off is visualized in the graph below. It shows two curves:

- The null hypothesis distribution shows all possible results you’d obtain if the null hypothesis is true. The correct conclusion for any point on this distribution means not rejecting the null hypothesis.

- The alternative hypothesis distribution shows all possible results you’d obtain if the alternative hypothesis is true. The correct conclusion for any point on this distribution means rejecting the null hypothesis.

Type I and Type II errors occur where these two distributions overlap. The blue shaded area represents alpha, the Type I error rate, and the green shaded area represents beta, the Type II error rate.

By setting the Type I error rate, you indirectly influence the size of the Type II error rate as well.

It’s important to strike a balance between the risks of making Type I and Type II errors. For a fixed sample size and effect size, reducing alpha comes at the cost of increasing beta, and vice versa.

For statisticians, a Type I error is usually worse. In practical terms, however, either type of error could be worse depending on your research context.

A Type I error means mistakenly going against the main statistical assumption of a null hypothesis. This may lead to new policies, practices or treatments that are inadequate or a waste of resources.

In contrast, a Type II error means failing to reject a null hypothesis. It may only result in missed opportunities to innovate, but these can also have important practical consequences.

In statistics, a Type I error means rejecting the null hypothesis when it’s actually true, while a Type II error means failing to reject the null hypothesis when it’s actually false.

The risk of making a Type I error is the significance level (or alpha) that you choose. That’s a value that you set at the beginning of your study to assess the statistical probability of obtaining your results (p value).

To reduce the Type I error probability, you can set a lower significance level.

The risk of making a Type II error is inversely related to the statistical power of a test. Power is the extent to which a test can correctly detect a real effect when there is one.

To (indirectly) reduce the risk of a Type II error, you can increase the sample size or the significance level to increase statistical power.

Statistical significance is a term used by researchers to state that it is unlikely their observations could have occurred under the null hypothesis of a statistical test. Significance is usually denoted by a p-value, or probability value.

Statistical significance is arbitrary – it depends on the threshold, or alpha value, chosen by the researcher. The most common threshold is p < 0.05, which means that the data is likely to occur less than 5% of the time under the null hypothesis.

When the p-value falls below the chosen alpha value, then we say the result of the test is statistically significant.

In statistics, power refers to the likelihood of a hypothesis test detecting a true effect if there is one. A statistically powerful test is less likely to produce a false negative (a Type II error).

If you don’t ensure enough power in your study, you may not be able to detect a statistically significant result even when it has practical significance. Your study might not have the ability to answer your research question.

Bhandari, P. (2023, February 02). Type I & Type II Errors | Differences, Examples, Visualizations. Scribbr. Retrieved 29 April 2024, from https://www.scribbr.co.uk/stats/type-i-and-type-ii-error/

9.2: Outcomes, Type I and Type II Errors

When you perform a hypothesis test, there are four possible outcomes depending on the actual truth (or falseness) of the null hypothesis H0 and the decision to reject or not.

The outcomes are summarized in the following table:

The four possible outcomes in the table are:

- The decision is not to reject H0 when H0 is true (correct decision).

- The decision is to reject H0 when H0 is true (incorrect decision known as a Type I error).

- The decision is not to reject H0 when H0 is false (incorrect decision known as a Type II error).

- The decision is to reject H0 when H0 is false (correct decision whose probability is called the Power of the Test).
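
The four outcomes listed above can be expressed as a small lookup. The function below is a hypothetical illustration (the name `classify_outcome` and its arguments are not from the text). It takes the actual state of the null hypothesis and the decision made, and returns the name of the outcome.

```python
def classify_outcome(h0_is_true, reject_h0):
    """Name the outcome of a hypothesis test given the truth and the decision."""
    if h0_is_true and reject_h0:
        return "Type I error"
    if not h0_is_true and not reject_h0:
        return "Type II error"
    return "correct decision"


if __name__ == "__main__":
    for h0_is_true in (True, False):
        for reject_h0 in (False, True):
            print(h0_is_true, reject_h0, "->", classify_outcome(h0_is_true, reject_h0))
```

In practice the truth of the null hypothesis is unknown, so this classification is something a study can only reason about in terms of probabilities.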

Each of the errors occurs with a particular probability. The Greek letters α and β represent the probabilities.

α = probability of a Type I error = P(Type I error) = probability of rejecting the null hypothesis when the null hypothesis is true.

β = probability of a Type II error = P(Type II error) = probability of not rejecting the null hypothesis when the null hypothesis is false.

α and β should be as small as possible because they are probabilities of errors. They are rarely zero.

The Power of the Test is 1 – β. Since β is the probability of making a Type II error, we want this probability to be small. In other words, we want the value 1 – β to be as close to one as possible. Increasing the sample size can increase the Power of the Test.

Suppose the null hypothesis, H 0 , is: Frank’s rock climbing equipment is safe.

- Type I error: Frank thinks that his rock climbing equipment may not be safe when, in fact, it really is safe.

- Type II error: Frank thinks that his rock climbing equipment may be safe when, in fact, it is not safe.

α = Probability that Frank thinks his rock climbing equipment may not be safe when it really is safe. β = Probability that Frank thinks his rock climbing equipment may be safe when it is not safe.

Notice that, in this case, the error with the greater consequence is the Type II error. (If Frank thinks his rock climbing equipment is safe, he will go ahead and use it.)

Suppose the null hypothesis, H 0 , is: the blood cultures contain no traces of pathogen X . State the Type I and Type II errors.

Solution:

Type I error: The researcher thinks the blood cultures do contain traces of pathogen X when, in fact, they do not.

Type II error: The researcher thinks the blood cultures do not contain traces of pathogen X when, in fact, they do.

Suppose the null hypothesis, H 0 : The victim of an automobile accident is alive when he arrives at the emergency room of a hospital. State the 4 possible outcomes of performing a hypothesis test.

α = probability that the emergency crew thinks the victim is dead when, in fact, he is really alive = P (Type I error). β = probability that the emergency crew thinks the victim is alive when, in fact, the victim is dead = P (Type II error).

The error with the greater consequence is the Type I error. (If the emergency crew thinks the victim is dead, they will not treat him.)


Suppose the null hypothesis, H 0 , is: A patient is not sick. Which type of error has the greater consequence, Type I or Type II?

Solution:

Type I error: The patient will be thought sick when, in fact, he is not sick.

Type II error: The patient will be thought well when, in fact, he is sick.

The error with the greater consequence is the Type II error: the patient will be thought well when, in fact, he is sick, so he will not get treatment.

Boy Genetic Labs claim to be able to increase the likelihood that a pregnancy will result in a boy being born. Statisticians want to test the claim. Suppose that the null hypothesis, H 0 , is: Boy Genetic Labs has no effect on gender outcome. Which type of error has the greater consequence, Type I or Type II?

H 0 : Boy Genetic Labs has no effect on gender outcome.

H a : Boy Genetic Labs has an effect on gender outcome.

- Type I error: This results when a true null hypothesis is rejected. In the context of this scenario, we would state that we believe that Boy Genetic Labs influences the gender outcome, when in fact it has no effect. The probability of this error occurring is denoted by the Greek letter alpha, α .

- Type II error: This results when we fail to reject a false null hypothesis. In context, we would state that Boy Genetic Labs does not influence the gender outcome of a pregnancy when, in fact, it does. The probability of this error occurring is denoted by the Greek letter beta, β .

The error of greater consequence would be the Type I error since couples would use the Boy Genetic Labs product in hopes of increasing the chances of having a boy.

“Red tide” is a bloom of poison-producing algae–a few different species of a class of plankton called dinoflagellates. When the weather and water conditions cause these blooms, shellfish such as clams living in the area develop dangerous levels of a paralysis-inducing toxin. In Massachusetts, the Division of Marine Fisheries (DMF) monitors levels of the toxin in shellfish by regular sampling of shellfish along the coastline. If the mean level of toxin in clams exceeds 800 μg (micrograms) of toxin per kg of clam meat in any area, clam harvesting is banned there until the bloom is over and levels of toxin in clams subside. Describe both a Type I and a Type II error in this context, and state which error has the greater consequence.

Solution:

In this scenario, an appropriate null hypothesis would be H 0 : the mean level of toxins is at most 800 μg ( H 0 : μ 0 ≤ 800 μg ). H a : the mean level of toxins exceeds 800 μg ( H a : μ 0 > 800 μg ).

Type I error: The DMF believes that toxin levels are still too high when, in fact, toxin levels are at most 800 μg. The DMF continues the harvesting ban.

Type II error: The DMF believes that toxin levels are within acceptable levels (at most 800 μg) when, in fact, toxin levels are still too high (more than 800 μg). The DMF lifts the harvesting ban.

The Type II error is the more dangerous of the two: if the ban is lifted while clams are still toxic, consumers could eat tainted food.

A certain experimental drug claims a cure rate of higher than 75% for males with prostate cancer.

Describe both the Type I and Type II errors in context. Which error is the more serious?

H 0 : The cure rate is less than 75%.

H a : The cure rate is higher than 75%.

- Type I: A cancer patient believes the cure rate for the drug is more than 75% when the cure rate actually is less than 75%.

- Type II: A cancer patient believes the cure rate is less than 75% when the cure rate is actually higher than 75%.

In this scenario, the Type I error has the more severe consequence. If a patient believes the drug works more than 75% of the time when it does not, this most likely will influence the patient’s (and doctor’s) choice about whether to use the drug as a treatment option.

Determine both Type I and Type II errors for the following scenario:

Assume a null hypothesis, H 0 , that states the percentage of adults with jobs is at least 88%.

Identify the Type I and Type II errors from these four statements.

a)Not to reject the null hypothesis that the percentage of adults who have jobs is at least 88% when that percentage is actually less than 88%

b)Not to reject the null hypothesis that the percentage of adults who have jobs is at least 88% when the percentage is actually at least 88%.

c)Reject the null hypothesis that the percentage of adults who have jobs is at least 88% when the percentage is actually at least 88%.

d)Reject the null hypothesis that the percentage of adults who have jobs is at least 88% when that percentage is actually less than 88%.

If H 0 : The percentage of adults with jobs is at least 88%, then H a : The percentage of adults with jobs is less than 88%.

Type I error: c (a true null hypothesis is rejected).

Type II error: a (a false null hypothesis is not rejected).

Concept Review

In every hypothesis test, the outcomes are dependent on a correct interpretation of the data. Incorrect calculations or misunderstood summary statistics can yield errors that affect the results. A Type I error occurs when a true null hypothesis is rejected. A Type II error occurs when a false null hypothesis is not rejected.

The probabilities of these errors are denoted by the Greek letters α and β , for a Type I and a Type II error respectively. The power of the test, 1 – β , quantifies the likelihood that a test will yield the correct result of a true alternative hypothesis being accepted. A high power is desirable.


- OpenStax, Statistics, Outcomes and the Type I and Type II Errors. Provided by : OpenStax. Located at : http://cnx.org/contents/[email protected] . License : CC BY: Attribution

- Introductory Statistics . Authored by : Barbara Illowski, Susan Dean. Provided by : Open Stax. Located at : http://cnx.org/contents/[email protected] . License : CC BY: Attribution . License Terms : Download for free at http://cnx.org/contents/[email protected]

- Type 1 errors | Inferential statistics | Probability and Statistics | Khan Academy. Authored by : Khan Academy. Located at : https://youtu.be/EowIec7Y8HM . License : All Rights Reserved . License Terms : Standard YouTube License

9.2 Outcomes and the Type I and Type II Errors

When you perform a hypothesis test, there are four possible outcomes depending on the actual truth, or falseness, of the null hypothesis H 0 and the decision to reject or not. The outcomes are summarized in the following table:

The four possible outcomes in the table are as follows:

- The decision is not to reject H 0 when H 0 is true (correct decision).

- The decision is to reject H 0 when, in fact, H 0 is true (incorrect decision known as a Type I error ).

- The decision is not to reject H 0 when, in fact, H 0 is false (incorrect decision known as a Type II error ).

- The decision is to reject H 0 when H 0 is false (correct decision whose probability is called the Power of the Test ).

Each of the errors occurs with a particular probability. The Greek letters α and β represent the probabilities.

α = probability of a Type I error = P (Type I error) = probability of rejecting the null hypothesis when the null hypothesis is true.

β = probability of a Type II error = P (Type II error) = probability of not rejecting the null hypothesis when the null hypothesis is false.

α and β should be as small as possible because they are probabilities of errors. They are rarely zero.

The Power of the Test is 1 – β . Ideally, we want a high power that is as close to one as possible. Increasing the sample size can increase the Power of the Test.

The following are examples of Type I and Type II errors.

Example 9.5

Suppose the null hypothesis, H 0 , is: Frank's rock climbing equipment is safe.

Type I error: Frank does not go rock climbing because he considers that the equipment is not safe, when in fact, the equipment is really safe. Frank is making the mistake of rejecting the null hypothesis, when the equipment is actually safe!

Type II error: Frank goes climbing, thinking that his equipment is safe, but this is a mistake, and he painfully realizes that his equipment is not as safe as it should have been. Frank assumed that the null hypothesis was true, when it was not.

α = probability that Frank thinks his rock climbing equipment may not be safe when, in fact, it really is safe. β = probability that Frank thinks his rock climbing equipment may be safe when, in fact, it is not safe.

Notice that, in this case, the error with the greater consequence is the Type II error. (If Frank thinks his rock climbing equipment is safe, he will go ahead and use it.)

Suppose the null hypothesis, H 0 , is: the blood cultures contain no traces of pathogen X . State the Type I and Type II errors.

Example 9.6

Suppose the null hypothesis, H 0 , is: a tomato plant is alive when a class visits the school garden.

Type I error: The null hypothesis claims that the tomato plant is alive, and it is true, but the students make the mistake of thinking that the plant is already dead.

Type II error: The tomato plant is already dead (the null hypothesis is false), but the students do not notice it, and believe that the tomato plant is alive.

α = probability that the class thinks the tomato plant is dead when, in fact, it is alive = P (Type I error). β = probability that the class thinks the tomato plant is alive when, in fact, it is dead = P (Type II error).

The error with the greater consequence is the Type I error. (If the class thinks the plant is dead, they will not water it.)

Suppose the null hypothesis, H 0 , is: a patient is not sick. Which type of error has the greater consequence, Type I or Type II?

Example 9.7

It’s a Boy Genetic Labs, a genetics company, claims to be able to increase the likelihood that a pregnancy will result in a boy being born. Statisticians want to test the claim. Suppose that the null hypothesis, H 0 , is: It’s a Boy Genetic Labs has no effect on gender outcome.

Type I error : This error results when a true null hypothesis is rejected. In the context of this scenario, we would state that we believe that It’s a Boy Genetic Labs influences the gender outcome, when in fact it has no effect. The probability of this error occurring is denoted by the Greek letter alpha, α .

Type II error : This error results when we fail to reject a false null hypothesis. In context, we would state that It’s a Boy Genetic Labs does not influence the gender outcome of a pregnancy when, in fact, it does. The probability of this error occurring is denoted by the Greek letter beta, β .

The error with the greater consequence would be the Type I error since couples would use the It’s a Boy Genetic Labs product in hopes of increasing the chances of having a boy.

Red tide is a bloom of poison-producing algae, a few different species of a class of plankton called dinoflagellates. When the weather and water conditions cause these blooms, shellfish such as clams living in the area develop dangerous levels of a paralysis-inducing toxin. In Massachusetts, the Division of Marine Fisheries monitors levels of the toxin in shellfish by regular sampling of shellfish along the coastline. If the mean level of toxin in clams exceeds 800 μg (micrograms) of toxin per kilogram of clam meat in any area, clam harvesting is banned there until the bloom is over and levels of toxin in clams subside. Describe both a Type I and a Type II error in this context, and state which error has the greater consequence.

Example 9.8

A certain experimental drug claims a cure rate of at least 75 percent for males with a disease. Describe both the Type I and Type II errors in context. Which error is the more serious?

Type I : A patient believes the cure rate for the drug is less than 75 percent when it actually is at least 75 percent.

Type II : A patient believes the experimental drug has at least a 75 percent cure rate when it has a cure rate that is less than 75 percent.

In this scenario, the Type II error contains the more severe consequence. If a patient believes the drug works at least 75 percent of the time, this most likely will influence the patient’s (and doctor’s) choice about whether to use the drug as a treatment option.

Determine both Type I and Type II errors for the following scenario:

Assume a null hypothesis, H 0 , that states the percentage of adults with jobs is at least 88 percent.

Identify the Type I and Type II errors from these four possible choices.

- Not to reject the null hypothesis that the percentage of adults who have jobs is at least 88 percent when that percentage is actually less than 88 percent

- Not to reject the null hypothesis that the percentage of adults who have jobs is at least 88 percent when the percentage is actually at least 88 percent

- Reject the null hypothesis that the percentage of adults who have jobs is at least 88 percent when the percentage is actually at least 88 percent

- Reject the null hypothesis that the percentage of adults who have jobs is at least 88 percent when that percentage is actually less than 88 percent


Want to cite, share, or modify this book? This book uses the Creative Commons Attribution License and you must attribute Texas Education Agency (TEA). The original material is available at: https://www.texasgateway.org/book/tea-statistics . Changes were made to the original material, including updates to art, structure, and other content updates.

Access for free at https://openstax.org/books/statistics/pages/1-introduction

- Authors: Barbara Illowsky, Susan Dean

- Publisher/website: OpenStax

- Book title: Statistics

- Publication date: Mar 27, 2020

- Location: Houston, Texas

- Book URL: https://openstax.org/books/statistics/pages/1-introduction

- Section URL: https://openstax.org/books/statistics/pages/9-2-outcomes-and-the-type-i-and-type-ii-errors

© Jan 23, 2024 Texas Education Agency (TEA). The OpenStax name, OpenStax logo, OpenStax book covers, OpenStax CNX name, and OpenStax CNX logo are not subject to the Creative Commons license and may not be reproduced without the prior and express written consent of Rice University.


6.1 - Type I and Type II Errors

When conducting a hypothesis test there are two possible decisions: reject the null hypothesis or fail to reject the null hypothesis. You should remember though, hypothesis testing uses data from a sample to make an inference about a population. When conducting a hypothesis test we do not know the population parameters. In most cases, we don't know if our inference is correct or incorrect.

When we reject the null hypothesis there are two possibilities. There could really be a difference in the population, in which case we made a correct decision. Or, it is possible that there is not a difference in the population (i.e., \(H_0\) is true) but our sample was different from the hypothesized value due to random sampling variation. In that case we made an error. This is known as a Type I error.

When we fail to reject the null hypothesis there are also two possibilities. If the null hypothesis is really true, and there is not a difference in the population, then we made the correct decision. If there is a difference in the population, and we failed to reject it, then we made a Type II error.

Type I error: Rejecting \(H_0\) when \(H_0\) is really true. Its probability is denoted by \(\alpha\) ("alpha") and is commonly set at .05.

\(\alpha=P(\text{Type I error})\)

Type II error: Failing to reject \(H_0\) when \(H_0\) is really false. Its probability is denoted by \(\beta\) ("beta").

\(\beta=P(\text{Type II error})\)
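Because both error rates are defined over repeated sampling, they can be approximated by simulation. The sketch below is illustrative only: it assumes a two-sided z-test of \(H_0: \mu = 0\) on normal data with a known standard deviation of 1, and the function name `rejection_rate`, the sample size, and the alternative mean of 0.5 are all hypothetical choices.

```python
import random
from statistics import NormalDist, fmean


def rejection_rate(true_mean: float, n: int = 30, alpha: float = 0.05,
                   trials: int = 4000, seed: int = 1) -> float:
    """Share of simulated samples in which a two-sided z-test rejects H0: mu = 0.

    Assumes normally distributed data with known standard deviation 1.
    """
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, 1.0) for _ in range(n)]
        z = fmean(sample) * n ** 0.5          # (sample mean - 0) / (1 / sqrt(n))
        if abs(z) > z_crit:
            rejections += 1
    return rejections / trials


# H0 is true here, so every rejection is a Type I error; the rate should sit near alpha
type_i_rate = rejection_rate(true_mean=0.0)

# H0 is false here, so every failure to reject is a Type II error
type_ii_rate = 1 - rejection_rate(true_mean=0.5)

print(f"estimated Type I error rate:  {type_i_rate:.3f}")
print(f"estimated Type II error rate: {type_ii_rate:.3f}")
```

The simulation can only label errors because the true mean is set by hand; with real data the population value is unknown, as the section notes.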

Example: Trial

A man goes to trial where he is being tried for the murder of his wife.

We can put it in a hypothesis testing framework. The hypotheses being tested are:

- \(H_0\) : Not Guilty

- \(H_a\) : Guilty

Type I error is committed if we reject \(H_0\) when it is true. In other words, the man did not kill his wife but was found guilty and is punished for a crime he did not commit.

Type II error is committed if we fail to reject \(H_0\) when it is false. In other words, the man did kill his wife but was found not guilty and was not punished.

Example: Culinary Arts Study

A group of culinary arts students is comparing two methods for preparing asparagus: traditional steaming and a new frying method. They want to know if patrons of their school restaurant prefer their new frying method over the traditional steaming method. A sample of patrons are given asparagus prepared using each method and asked to select their preference. A statistical analysis is performed to determine if more than 50% of participants prefer the new frying method:

- \(H_{0}: p = .50\)

- \(H_{a}: p>.50\)

Type I error occurs if they reject the null hypothesis and conclude that their new frying method is preferred when in reality it is not. This may occur if, by random sampling error, they happen to get a sample that prefers the new frying method more than the overall population does. If this does occur, the consequence is that the students will have an incorrect belief that their new method of frying asparagus is superior to the traditional method of steaming.

Type II error occurs if they fail to reject the null hypothesis and conclude that their new method is not superior when in reality it is. If this does occur, the consequence is that the students will have an incorrect belief that their new method is not superior to the traditional method when in reality it is.
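For the hypotheses \(H_{0}: p = .50\) and \(H_{a}: p>.50\), both error rates can be computed exactly from the binomial distribution once a sample size and a true preference rate are assumed. The sketch below is not the students' analysis: the sample of 50 patrons, the true rate of 65%, and the helper names `upper_tail` and `critical_count` are hypothetical.

```python
from math import comb


def upper_tail(n: int, k: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k, n + 1))


def critical_count(n: int, alpha: float = 0.05) -> int:
    """Smallest k such that P(X >= k | p = 0.5) <= alpha.

    H0 is rejected when at least k of the n patrons prefer the frying method.
    """
    for k in range(n + 2):
        if upper_tail(n, k, 0.5) <= alpha:
            return k
    raise AssertionError("unreachable: the tail probability is 0 at k = n + 1")


n = 50                                   # hypothetical number of patrons sampled
k = critical_count(n)
alpha_actual = upper_tail(n, k, 0.5)     # Type I error rate actually achieved
beta = 1 - upper_tail(n, k, 0.65)        # Type II error rate if the true preference is 65%

print(f"reject H0 when at least {k} of {n} patrons prefer frying")
print(f"P(Type I error)  = {alpha_actual:.4f}")
print(f"P(Type II error) = {beta:.4f} when the true p is 0.65")
```

Because the count of patrons is discrete, the achieved Type I error rate is generally below the nominal .05 rather than exactly equal to it.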

Error in Research

Chris Nickson

- Nov 3, 2020

- error in research can be systematic or random

- systematic error is also referred to as bias

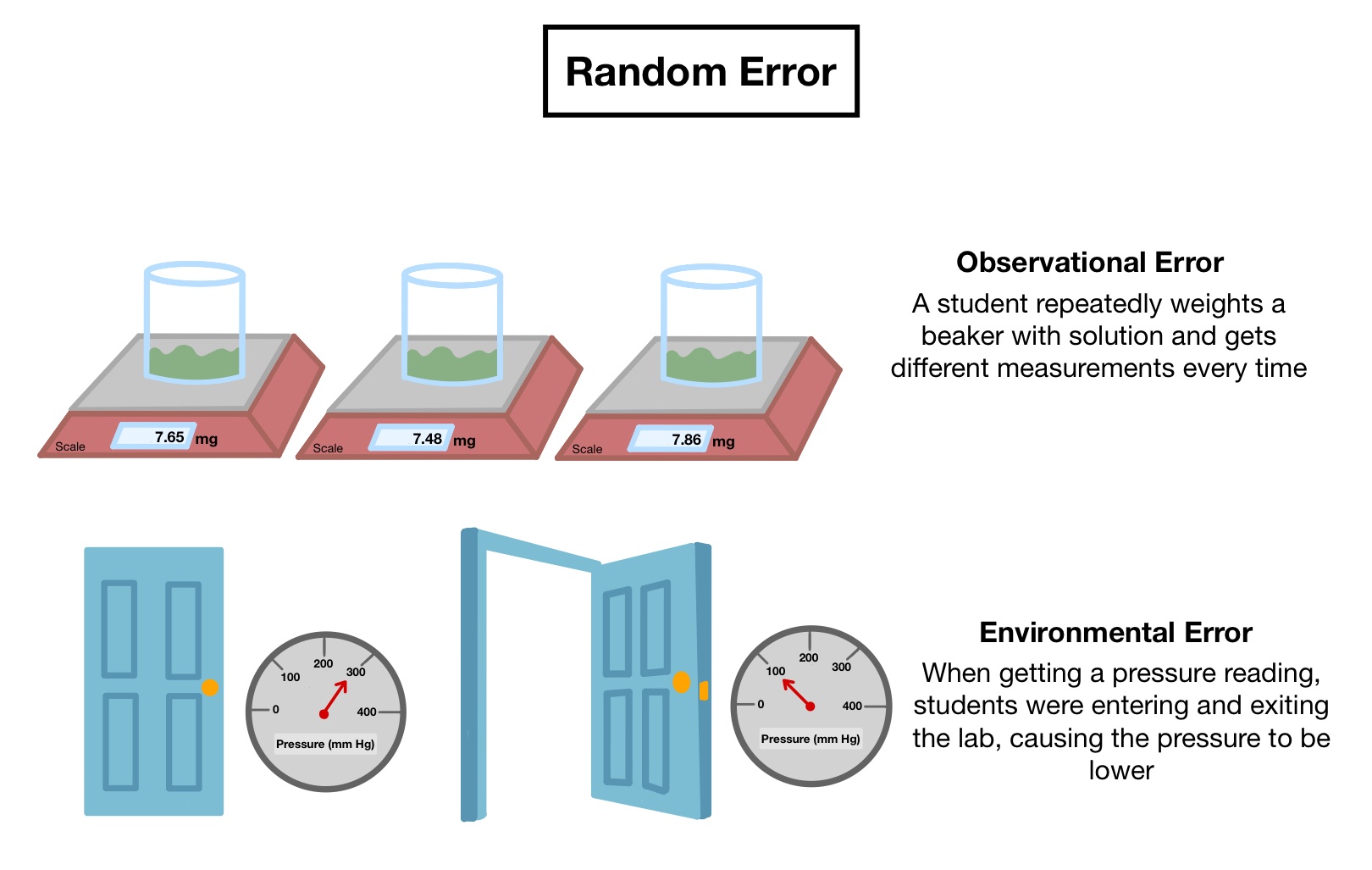

Random error

- error introduced by a lack of precision in conducting the study

- defined in terms of the null hypothesis, which is no difference between the intervention group and the control group

- reduced by meticulous technique and by large sample size

Type 1 error

- ‘false positive’ study

- the chance of incorrectly rejecting the null hypothesis (finding a difference which does not exist)

- the alpha value determines this risk

- alpha (α) is conventionally 0.05 (the threshold against which the p-value is compared, corresponding to a 95% confidence interval), so there is a 5% chance of making a type 1 error when the null hypothesis is true

- the error may result in the implementation of a therapy that is ineffective

Type 2 error

- ‘false negative’ study

- the chance of incorrectly failing to reject the null hypothesis (not finding the difference, despite one existing)

- this risk is beta (β); the power of a study is (1 – beta)

- beta (β) is normally 0.2, giving a power of 0.8, so the chance of making a type 2 error is 20%

- may result in an effective treatment strategy/drug not being used

- Type I errors , also known as false positives , occur when you see things that are not there.

- Type II errors , or false negatives , occur when you don’t see things that are there

TECHNIQUES TO MINIMIZE ERROR

Prior to Study

- study type: a well constructed Randomised control trial (RCT) is the ‘gold standard’

- appropriate power and sample size calculations

- choose an appropriate effect size (the clinically significant difference one wishes to detect between groups); this is arbitrary but needs to be reasonable, informed by previous studies and current clinical practice, and acceptable to peers
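The power and sample size calculation mentioned above can be sketched with the usual normal-approximation formula for comparing two group means, n per group = 2((z for 1 – alpha/2 + z for power) × SD / difference)². This is one common textbook approximation, not necessarily the method used in any particular trial; the function name `sample_size_per_group` and the example numbers are hypothetical.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(delta: float, sigma: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate participants needed in each of two equal groups.

    delta: smallest difference in means worth detecting (the effect size)
    sigma: assumed common standard deviation of the outcome
    Uses a two-sided test and the normal approximation.
    """
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)   # controls the type 1 error risk
    z_beta = std_normal.inv_cdf(power)            # power = 1 - beta
    return ceil(2 * ((z_alpha + z_beta) * sigma / delta) ** 2)


# Hypothetical planning values: detect a difference of half a standard deviation
print(sample_size_per_group(delta=0.5, sigma=1.0))

# Halving the difference to detect roughly quadruples the required sample
print(sample_size_per_group(delta=0.25, sigma=1.0))
```

The formula makes the trade-off explicit: a smaller effect size, a smaller alpha, or a higher power each raise the number of participants required.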

During Study

- minimise bias

- sequential trial design — allows a clinical trial to be carried out so that, as soon as a significant result is obtained, the study can be stopped — minimises the sample size, cost & morbidity

- interim analysis — pre-planned comparison of groups at specified times during a trial — allows a trial to be stopped early if a significant difference is found

At Analysis Stage, avoid:

- use of inappropriate tests to analyze data — e.g. parametric vs non-parametric, t-tests, ANOVA, Chi, Fishers exact, Yates correction, paired or unpaired, one-tailed or two-tailed

At Presentation, avoid:

- failure to report data points or standard error

- reporting mean with standard error (smaller) rather than standard deviation

- assumption that statistical significance is equivalent to clinical significance

- failure to give explicit details of study and statistical analysis

- publication bias




12 Errors in Research

This chapter is derived from the Australian Bureau of Statistics [1] ; licensed under a Creative Commons Attribution 4.0 International license

Learning Objectives

By the end of this chapter, students must be able to:

- explain sampling errors and non-sampling errors in research

- understand the sources leading to such errors

- demonstrate an understanding of strategies to reduce such errors


Introduction

The accuracy of a survey estimate refers to the closeness of the estimate to the true population value. Where there is a discrepancy between the value of the survey estimate and the true population value, the difference between the two is referred to as the error of the survey estimate. The total error of the survey estimate results from two types of error:

- sampling error, which arises when only a part of the population is used to represent the whole population. Sampling error can be measured mathematically

- non-sampling error, which can occur at any stage of a sample survey

It is important for a researcher to be aware of these errors, in particular non-sampling errors, so that they can be either minimised or eliminated from the survey. An introduction to sampling error and non-sampling error is provided in the following sections.

Sampling Error

Sampling error reflects the difference between an estimate (e.g., average) derived from a sample survey and the “true value” (i.e., actual population average) that would be obtained if the whole survey population was enumerated. It can be measured from the population values, but as these are unknown (or very difficult to calculate), it can also be estimated from the sample data. It is important to consider sampling error when publishing survey results as it gives an indication of the accuracy of the estimate and therefore reflects the importance that can be placed on interpretations. If sampling principles are applied carefully within the constraints of available resources, sampling error can be accurately measured and kept to a minimum.

Factors Affecting Sampling Error

Sampling error is affected by a number of factors including sample size, sample design, the sampling fraction, and the variability within the population. In general, larger sample sizes decrease the sampling error; however, this decrease is not directly proportional. As a rough rule of thumb, you need to increase the sample size fourfold to halve the sampling error. Of much lesser influence is the sampling fraction (the fraction of the population included in the sample), but as the sample size increases as a fraction of the population, the sampling error should decrease.
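The fourfold rule follows from the standard error of a sample mean, which under simple random sampling is the population standard deviation divided by the square root of the sample size. The sketch below assumes simple random sampling and uses the standard finite population correction to show the smaller influence of the sampling fraction; the function name `standard_error` and the numbers are hypothetical.

```python
from math import sqrt
from typing import Optional


def standard_error(sigma: float, n: int, population: Optional[int] = None) -> float:
    """Standard error of a sample mean under simple random sampling.

    sigma: population standard deviation
    n: sample size
    population: population size; if given, the finite population correction is applied
    """
    se = sigma / sqrt(n)
    if population is not None:
        se *= sqrt((population - n) / (population - 1))
    return se


# Quadrupling the sample size halves the standard error
print(standard_error(sigma=10.0, n=100))
print(standard_error(sigma=10.0, n=400))

# The sampling fraction matters far less: same n, very different population sizes
print(standard_error(sigma=10.0, n=400, population=10_000))
print(standard_error(sigma=10.0, n=400, population=1_000_000))
```

The correction only becomes noticeable when the sample is a sizeable share of the population, which matches the statement that the sampling fraction is of much lesser influence.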

The population variability also affects the sampling error. More variable populations give rise to larger errors as the samples or the estimates calculated from different samples are more likely to have greater variation. The effect of the variability within the population can be reduced by increasing the sample size to make it more representative of the survey population. Various sample design options also affect the size of the sampling error. For example, stratification reduces sampling error whereas cluster sampling tends to increase it. A sampling error can be estimated statistically and is used while interpreting statistical results.

https://www.youtube.com/watch?v=XE7QDfdaQ68

Source: Frances Chumney [2]

Non-Sampling Error

Non-sampling error comprises all other errors in the estimate (e.g., a sample average). These include all errors that occur for reasons other than the sample plan or sample size. Some examples of causes of non-sampling error are a low response rate to the questionnaire, a badly designed questionnaire, respondent bias, and processing errors. Non-sampling errors can occur at any stage of the process. These errors can be found in both censuses and sample surveys.

Sources of non-sampling errors are discussed below.

Non-Response Bias

Non-response refers to the situation when respondents either do not respond to any of the survey questions (i.e., total non-response) or do not respond to some survey questions owing to sensitive questions, recall problems, inability to answer, etc. (partial non-response). To improve response rates, care should be taken in designing the questionnaires, training interviewers, assuring the respondent of confidentiality, and calling back at different times if having difficulties contacting the respondent. “Call-backs” are successful in reducing non-response but can be expensive for personal interviews. A gentle email reminder for online surveys is also used as a tool to improve survey response rates.

Questionnaire problems

The content and wording of the questionnaire may be misleading and the layout of the questionnaire may make it difficult to accurately record responses. Questions should not be loaded, double-barrelled, misleading, or ambiguous, and should be directly relevant to the objectives of the survey.

It is essential that questionnaires are tested on a sample of respondents before they are finalised to identify questionnaire flow and question-wording problems and allow sufficient time for improvements to be made to the questionnaire. The questionnaire should then be re-tested to ensure changes made do not introduce other problems.

Respondent Bias

At times, respondents may provide inaccurate information as they believe they are protecting their personal interests and integrity. Careful questionnaire design and effective questionnaire testing can overcome these problems to some extent. Given below are two types of situations that can be avoided through better design and implementation of surveys.

- Sensitivity If respondents are faced with a question that they find embarrassing, they may refuse to answer, or choose a response that prevents them from having to continue with the questions. For example, if asked the question: “Are you taking any oral contraceptive pills for any reason?”, and knowing that if they say “Yes” they will be asked for more details, respondents who are embarrassed by the question are likely to answer “No”, even if this is incorrect.

- Fatigue Fatigue can be a problem in surveys that require a high level of commitment from respondents. For example, diary surveys where respondents have to record all expenses made in a two-week period. In these types of surveys, the level of accuracy and detail supplied may decrease as respondents become tired of recording all expenditures.

Processing Errors

There are four stages in the processing of the data where errors may occur: data grooming, data capture, editing, and estimation. Data grooming involves preliminary checking before entering the data onto the processing system in the capture stage. Inadequate checking and quality management at this stage can introduce data loss (where data is not entered into the system) and data duplication (where the same data is entered into the system more than once). Inappropriate edit checks and inaccurate weights in the estimation procedure can also introduce errors to the data. To minimise these errors, processing staff should be given adequate training and realistic workloads.

Misinterpretation of Results

This can occur if the researcher is not aware of certain factors that influence the characteristics under investigation. A researcher or any other user not involved in the collection stage of the data gathering may be unaware of trends built into the data due to the nature of the collection, such as its scope. For example, a survey that collected income as a data item with a scope of all adult persons (i.e., 18 years or older) would be expected to produce a different estimate from the ABS Survey of Average Weekly Earnings (AWE), simply because AWE includes persons aged 16 and 17 years in its scope. Researchers should carefully investigate the methodology used in any given survey.

Time Period Bias

This occurs when a survey is conducted during an unrepresentative time period. For example, if a survey aims to collect details on ice-cream sales, but only collects a week’s worth of data during the hottest part of summer, it is unlikely to represent the average weekly sales of ice cream for the year.

https://www.youtube.com/watch?v=zF37RvnNHnk

Source: Frances Chumney [3]

Minimising Non-Sampling Error

Non-sampling error can be difficult to measure accurately, but it can be minimised by:

- careful selection of the time the survey is conducted,

- using an up-to-date and accurate sampling frame,

- planning for follow up of non-respondents,

- careful questionnaire design,

- providing thorough training for interviewers and processing staff and

- being aware of all the factors affecting the topic under consideration.

Since many surveys suffer from poor response rates, ways of reducing non-response from potential respondents are discussed in particular detail below.

Minimising Non-Response

Response rates can be improved through good survey design: short, simple questions, good form design techniques, and a clear explanation of the survey's purposes and uses. Assurances of confidentiality are very important, as many respondents are unwilling to respond because they fear a lack of privacy. Targeted follow-ups on non-contacts or those initially unable to reply can increase response rates significantly. The following are some hints on how to minimise refusals in a personal or phone contact:

- Find out the reasons for refusal and try to talk through them

- Use positive language

- State how and what you plan to do to help with the questionnaire

- Stress the importance of the survey

- Explain the importance of their response as a representative of other units

- Emphasise the benefits of the survey results and explain how respondents can obtain them

- Give assurance of the confidentiality of the responses

Other measures that can improve respondent cooperation and maximise response include:

- Public awareness activities, including discussions with key organisations and interest groups, news releases, media interviews, and articles. These are aimed at informing the community about the survey, identifying issues of concern, and addressing them.

- Advice to selected units by letter, giving them advance notice and explaining the purposes of the survey and how the survey is going to be conducted.

In the case of a mail survey, most of the points above can be stated in an introductory letter or through a publicity campaign.

Allowing for Non-Response

Where response rates are still low after all reasonable attempts at follow-up have been made, you can reduce bias by using population benchmarks to post-stratify the sample, by intensively following up a subsample of the non-respondents, or by imputing values for item non-response (non-response to a particular question).

The main aim of imputation is to produce consistent data without going back to the respondent for the correct values, thus reducing both respondent burden and the costs associated with the survey. Broadly speaking, imputation methods fall into three groups:

- the imputed value is derived from other information supplied by the unit;

- values supplied by other units are used to derive a value for the non-respondent (e.g., an average);

- an exact value from another unit (called the donor) is used as the value for the non-respondent (called the recipient).

When deciding on the method of imputation, it is desirable to know what effect imputation will have on the final estimates. If a large amount of imputation is performed, the results can be misleading, particularly if the imputation used distorts the distribution of the data.
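The three groups of imputation methods can be sketched in Python as follows. This is a minimal illustration only: the records, the field names (`region`, `area_ha`, `trees`), the trees-per-hectare ratio, and the rule for choosing a donor are all assumptions made for the example, not part of any particular survey system.

```python
from statistics import mean

# Hypothetical survey records; None marks item non-response.
records = [
    {"id": 1, "region": "north", "area_ha": 10.0, "trees": 300},
    {"id": 2, "region": "north", "area_ha": 12.0, "trees": None},
    {"id": 3, "region": "south", "area_ha": 20.0, "trees": 640},
    {"id": 4, "region": "south", "area_ha": 5.0, "trees": 150},
]

def impute_from_own_data(unit, trees_per_ha):
    """Group 1: derive the value from other information the unit supplied."""
    return unit["area_ha"] * trees_per_ha

def impute_from_mean(respondents):
    """Group 2: derive the value from other units' values (here, their mean)."""
    return mean(r["trees"] for r in respondents)

def impute_from_donor(unit, respondents):
    """Group 3: copy the exact value of one donor.

    Assumed donor rule: same region if possible, then closest farm area.
    """
    same_region = [r for r in respondents if r["region"] == unit["region"]]
    pool = same_region or respondents
    donor = min(pool, key=lambda r: abs(r["area_ha"] - unit["area_ha"]))
    return donor["trees"]

respondents = [r for r in records if r["trees"] is not None]
recipient = next(r for r in records if r["trees"] is None)

own_value = impute_from_own_data(recipient, trees_per_ha=30.0)
mean_value = impute_from_mean(respondents)
donor_value = impute_from_donor(recipient, respondents)
```

The three approaches give different values for the same recipient, which is one reason the effect of the chosen method on the final estimates should be checked.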

If at the planning stage it is believed that there is likely to be a high non-response rate, then the sample size could be increased to allow for this. However, the non-response bias will not be overcome by just increasing the sample size, particularly if the non-responding units have different characteristics from the responding units. Post-stratification and imputation also fail to totally eliminate non-response bias from the results.
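Post-stratification can be sketched as a weighted average of stratum means, where the weights come from known population benchmarks. The strata (`small`, `large`), the population shares, and the response values below are hypothetical and chosen only to show the mechanics.

```python
from statistics import mean

# Hypothetical respondent data: (stratum, trees on farm).
responses = [
    ("small", 100), ("small", 140),
    ("large", 500), ("large", 520), ("large", 480), ("large", 500),
]

# Hypothetical population benchmarks: share of all farms in each stratum.
population_share = {"small": 0.7, "large": 0.3}

def post_stratified_mean(responses, population_share):
    """Weight each stratum's respondent mean by its known population share."""
    estimate = 0.0
    for stratum, share in population_share.items():
        values = [y for s, y in responses if s == stratum]
        estimate += share * mean(values)
    return estimate

unweighted = mean(y for _, y in responses)
weighted = post_stratified_mean(responses, population_share)
```

In this sketch large farms are over-represented among respondents, so the weighted estimate is lower than the unweighted one. The adjustment only corrects the balance between strata; if non-respondents differ from respondents within a stratum, that bias remains.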

Example: Effect of Non-Response

Suppose a postal survey of 3421 fruit growers was run to estimate the average number of fruit trees on a farm. There was an initial period for response and following low response rates, two series of follow-up reminders were sent out. The response and results were as follows:

After two follow-up reminders, there was still only a 37% response rate. From other information, it was known that the overall average was 329. The result based on this survey would have been:

If results had been published without any follow-up then the average number of trees would have been too high as farms with a greater number of trees appeared to have responded more readily. With follow-up, smaller farms sent back survey forms and the estimate became closer to the true value.
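The pattern described above can be sketched by tracking the cumulative average after each wave of responses. The wave counts and wave averages below are illustrative assumptions, chosen to be consistent with the figures stated in the text (3421 growers surveyed, about 37% response after two reminders, a true average of 329); they should not be read as the survey's published results.

```python
# Illustrative (assumed) figures: (wave, number of responses, average trees per farm).
waves = [
    ("initial response", 300, 456),
    ("first reminder", 543, 382),
    ("second reminder", 434, 340),
]
SURVEYED = 3421      # stated in the text
TRUE_AVERAGE = 329   # stated in the text

def cumulative_estimates(waves):
    """Return (wave, cumulative response count, cumulative average) for each wave."""
    results, n_total, tree_total = [], 0, 0
    for name, n, avg in waves:
        n_total += n
        tree_total += n * avg
        results.append((name, n_total, tree_total / n_total))
    return results

for name, n_total, cum_avg in cumulative_estimates(waves):
    rate = n_total / SURVEYED
    print(f"{name}: response rate {rate:.0%}, cumulative average {cum_avg:.0f}")
```

Under these assumed figures the cumulative average falls with each follow-up wave but stays above the true value, since the non-respondents are never observed.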

- Australian Bureau of Statistics n.d., Errors in statistical data, viewed 31 March 2022, <https://www.abs.gov.au/websitedbs/d3310114.nsf/home/Basic+Survey+Design+-+Sample+Design>.

- Chumney, 2016, Introduction to error sources in survey research: sampling error, 14 August, online video, viewed 4 April 2022, <https://www.youtube.com/watch?v=XE7QDfdaQ68>.

- Chumney, 2016, Introduction to error sources in survey research: measurement errors, 14 August, online video, viewed 4 April 2022, <https://www.youtube.com/watch?v=zF37RvnNHnk>.

Customer Insights Copyright © 2022 by Aila Khan is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.

Sources of Error in Science Experiments

Science labs usually ask you to compare your results against theoretical or known values. This helps you evaluate your results and compare them against other people’s values. The difference between your results and the expected or theoretical results is called error. The amount of error that is acceptable depends on the experiment, but a margin of error of 10% is generally considered acceptable. If there is a large margin of error, you’ll be asked to go over your procedure and identify any mistakes you may have made or places where error might have been introduced. So, you need to know the different types and sources of error and how to calculate them.

How to Calculate Absolute Error

One method of measuring error is by calculating absolute error, which is also called absolute uncertainty. This measure of accuracy is reported using the units of measurement. Absolute error is simply the difference between the measured value and either the true value or the average value of the data.

absolute error = measured value – true value

For example, if you measure gravity to be 9.6 m/s² and the true value is 9.8 m/s², then the absolute error of the measurement is 0.2 m/s². You could report the error with a sign, so the absolute error in this example could be -0.2 m/s².

If you measure the length of a sample three times and get 1.1 cm, 1.5 cm, and 1.3 cm, then the absolute error is +/- 0.2 cm or you would say the length of the sample is 1.3 cm (the average) +/- 0.2 cm.

Some people consider absolute error to be a measure of how accurate your measuring instrument is. If you are using a ruler that reports length to the nearest millimeter, you might say the absolute error of any measurement taken with that ruler is to the nearest 1 mm or (if you feel confident you can see between one mark and the next) to the nearest 0.5 mm.
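Here's a short Python sketch of the two examples above. The function names are made up for illustration, and taking the largest deviation from the average as the +/- value is an assumption about how the 0.2 cm figure is obtained.

```python
def absolute_error(measured, true_value):
    """Signed absolute error, in the units of the measurement."""
    return measured - true_value

def mean_and_spread(measurements):
    """Average of repeated measurements and the largest deviation from it."""
    average = sum(measurements) / len(measurements)
    spread = max(abs(m - average) for m in measurements)
    return average, spread

# Gravity example: about -0.2 m/s^2 (negative because the reading is low).
gravity_error = absolute_error(9.6, 9.8)

# Length example: about 1.3 cm +/- 0.2 cm.
length, uncertainty = mean_and_spread([1.1, 1.5, 1.3])
```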

How to Calculate Relative Error

Relative error is based on the absolute error value. It compares how large the error is to the magnitude of the measurement. So, an error of 0.1 kg might be insignificant when weighing a person, but pretty terrible when weighing an apple. Relative error is a fraction, decimal value, or percent.

Relative Error = Absolute Error / Total Value

For example, if your speedometer says you are going 55 mph when you’re really going 58 mph, the absolute error is 3 mph and the relative error is 3 mph / 58 mph or 0.05, which you could multiply by 100% to give 5%. Relative error may be reported with a sign. In this case, the speedometer is off by -5% because the recorded value is lower than the true value.
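The speedometer example can be checked with a few lines of Python. This sketch assumes the "total value" in the formula is the true value, which matches the division by 58 mph in the example; the function name is illustrative.

```python
def relative_error(measured, true_value):
    """Signed relative error: absolute error divided by the true value."""
    return (measured - true_value) / true_value

# Negative result: the reading is below the true speed.
speedometer = relative_error(55, 58)
print(f"{speedometer:.1%}")
```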

Because the absolute error definition is ambiguous, most lab reports ask for percent error or percent difference.

How to Calculate Percent Error

The most common error calculation is percent error, which is used when comparing your results against a known, theoretical, or accepted value. As you can probably guess from the name, percent error is expressed as a percentage. It is the absolute (no negative sign) difference between your value and the accepted value, divided by the accepted value, multiplied by 100% to give the percent:

% error = |accepted – experimental| / accepted x 100%
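As a quick sketch, here is the percent error formula in Python, applied to the gravity measurement from the absolute error section. The function name is illustrative, and dividing by the magnitude of the accepted value (so the result is never negative) is an assumption for accepted values below zero.

```python
def percent_error(experimental, accepted):
    """Unsigned percent error relative to the accepted value."""
    return abs(accepted - experimental) / abs(accepted) * 100

# Measured 9.6 m/s^2 against an accepted 9.8 m/s^2: roughly 2%.
g_error = percent_error(9.6, 9.8)
```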

How to Calculate Percent Difference

Another common error calculation is called percent difference. It is used when you are comparing one experimental result to another. In this case, no result is necessarily better than another, so the percent difference is the absolute value (no negative sign) of the difference between the values, divided by the average of the two numbers, multiplied by 100% to give a percentage:

% difference = |experimental value – other value| / average x 100%
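Percent difference can be sketched the same way. The two values below are a hypothetical pair of lab results for the same quantity, and the function name is illustrative.

```python
def percent_difference(value_a, value_b):
    """Unsigned difference between two results, relative to their average."""
    average = (value_a + value_b) / 2
    return abs(value_a - value_b) / abs(average) * 100

# Hypothetical pair of results: roughly 3% apart.
diff = percent_difference(9.6, 9.9)
```

Because neither value is treated as the reference, swapping the two arguments gives the same answer.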

Sources and Types of Error

Every experimental measurement, no matter how carefully you take it, contains some amount of uncertainty or error. You are measuring against a standard, using an instrument that can never perfectly duplicate the standard, plus you’re human, so you might introduce errors based on your technique. The three main categories of errors are systematic errors, random errors, and personal errors. Here’s what these types of errors are and common examples.

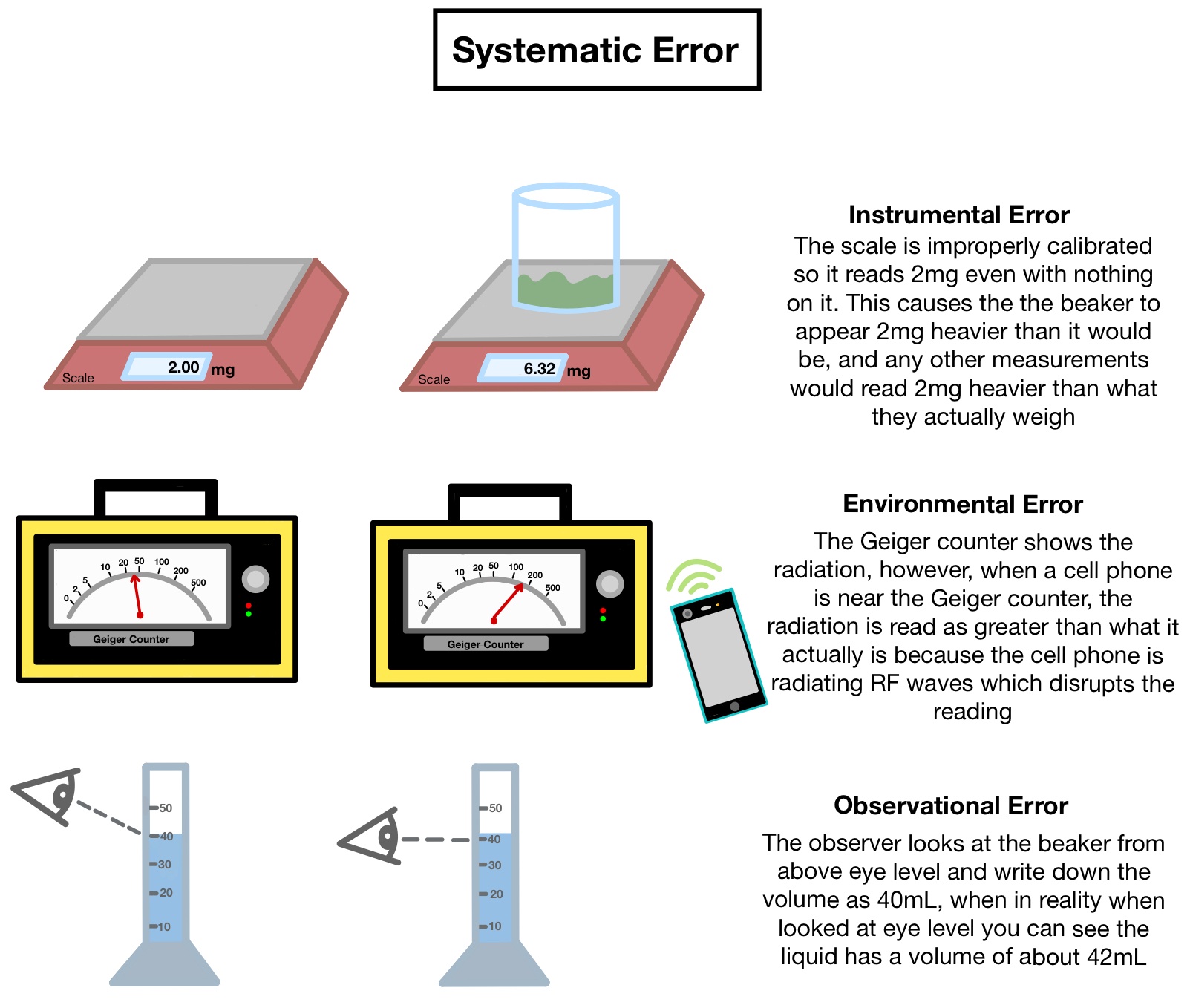

Systematic Errors

Systematic error affects all the measurements you take. All of these errors will be in the same direction (greater than or less than the true value) and you can’t compensate for them by taking additional data.

Examples of Systematic Errors

- If you forget to calibrate a balance or you’re off a bit in the calibration, all mass measurements will be high or low by the same amount. Some instruments require periodic calibration throughout the course of an experiment, so it’s good to make a note in your lab notebook to see whether the calibrations appear to have affected the data.

- Another example is measuring volume by reading a meniscus (parallax). You likely read a meniscus exactly the same way each time, but it’s never perfectly correct. Another person taking the reading may take the same reading, but view the meniscus from a different angle, thus getting a different result. Parallax can occur in other types of optical measurements, such as those taken with a microscope or telescope.