Random Assignment in Psychology: Definition & Examples

Julia Simkus

Editor at Simply Psychology

BA (Hons) Psychology, Princeton University

Julia Simkus is a graduate of Princeton University with a Bachelor of Arts in Psychology. She is currently studying for a Master's Degree in Counseling for Mental Health and Wellness in September 2023. Julia's research has been published in peer reviewed journals.


Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

In psychology, random assignment refers to the practice of allocating participants to different experimental groups in a study in a completely unbiased way, ensuring each participant has an equal chance of being assigned to any group.

In experimental research, random assignment, or random placement, organizes participants from your sample into different groups using randomization.

Random assignment uses chance procedures to ensure that each participant has an equal opportunity of being assigned to either a control or experimental group.

The control group does not receive the treatment in question, whereas the experimental group does receive the treatment.

When using random assignment, neither the researcher nor the participant can choose the group to which the participant is assigned. This ensures that any differences between and within the groups are not systematic at the onset of the study.

In a study to test the success of a weight-loss program, investigators randomly assigned a pool of participants to one of two groups.

Group A participants participated in the weight-loss program for 10 weeks and took a class where they learned about the benefits of healthy eating and exercise.

Group B participants read a 200-page book that explains the benefits of weight loss.

The researchers found that those who participated in the program and took the class were more likely to lose weight than those in the other group that received only the book.

Importance

Random assignment helps ensure that the groups in an experiment are comparable before the independent variable is applied.

In experiments, researchers will manipulate an independent variable to assess its effect on a dependent variable, while controlling for other variables. Random assignment increases the likelihood that the treatment groups are the same at the onset of a study.

Thus, any differences in the outcome between groups can more confidently be attributed to the treatment of interest. This is particularly important for reducing sources of bias and strengthening the internal validity of an experiment.

Random assignment is generally considered the most reliable method for inferring a causal relationship between a treatment and an outcome.

Random Selection vs. Random Assignment

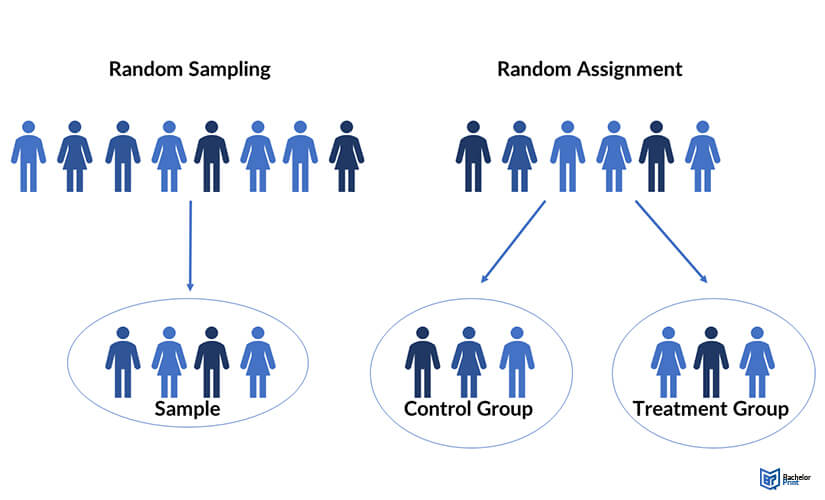

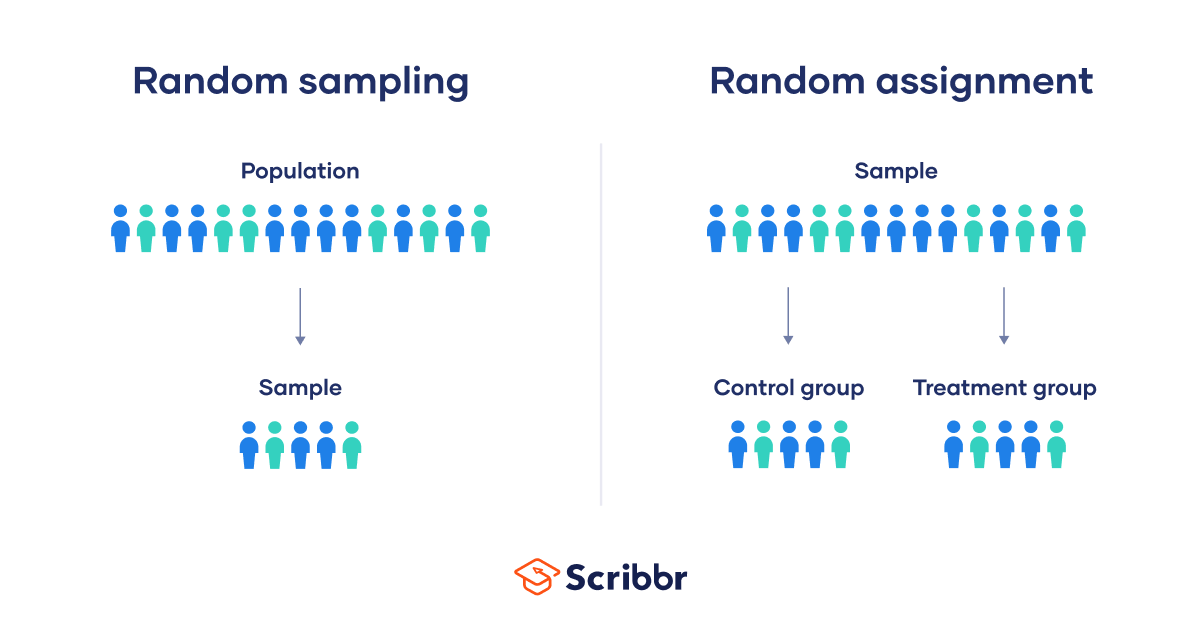

Random selection (also called probability sampling or random sampling) is a way of randomly selecting members of a population to be included in your study.

On the other hand, random assignment is a way of sorting the sample participants into control and treatment groups.

Random selection ensures that everyone in the population has an equal chance of being selected for the study. Once the pool of participants has been chosen, experimenters use random assignment to assign participants into groups.

Random assignment is only used in between-subjects experimental designs, while random selection can be used in a variety of study designs.

Random Assignment vs Random Sampling

Random sampling refers to selecting participants from a population so that each individual has an equal chance of being chosen. This method enhances the representativeness of the sample.

Random assignment, on the other hand, is used in experimental designs once participants are selected. It involves allocating these participants to different experimental groups or conditions randomly.

This helps ensure that any differences in results across groups are due to manipulating the independent variable, not preexisting differences among participants.
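The two steps can be sketched in a few lines of Python. This is only an illustration under assumed numbers: the population of 1,000, the sample of 40, and the seed are all made up, and a real study would draw its sample from an actual sampling frame.

```python
import random

rng = random.Random(42)  # fixed seed so the sketch is reproducible

# Hypothetical population of 1,000 people, identified by number.
population = list(range(1000))

# Random sampling: every member of the population has an equal chance
# of being selected into the study sample.
sample = rng.sample(population, k=40)

# Random assignment: shuffle the selected sample, then split it in half
# so that chance alone decides who lands in which group.
shuffled = sample[:]
rng.shuffle(shuffled)
control = shuffled[:20]
treatment = shuffled[20:]

print(len(control), len(treatment))
```

Sampling decides who is in the study at all, while assignment decides which condition each sampled person experiences. The two steps are independent of each other.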

When to Use Random Assignment

Random assignment is used in experiments with a between-groups or independent measures design.

In these research designs, researchers will manipulate an independent variable to assess its effect on a dependent variable, while controlling for other variables.

There is usually a control group and one or more experimental groups. Random assignment helps ensure that the groups are comparable at the onset of the study.

How to Use Random Assignment

There are a variety of ways to assign participants into study groups randomly. Here are a handful of popular methods:

- Random Number Generator: Give each member of the sample a unique number, then use a computer program to randomly draw numbers from the list to fill each group.

- Lottery: Give each member of the sample a unique number. Place all numbers in a hat or bucket and draw numbers at random for each group.

- Flipping a Coin: Flip a coin for each participant to decide whether they will be in the control group or the experimental group (this method can only be used when you have just two groups).

- Rolling a Die: For each participant on the list, roll a die to decide which group they will be in. For example, rolling a 1, 2, or 3 places them in the control group, and rolling a 4, 5, or 6 places them in the experimental group.
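As a rough sketch, two of the methods above could be written in Python as follows. The function names, participant IDs, and seed are hypothetical, chosen only for illustration. The lottery version shuffles the list and deals participants into groups in turn, while the coin-flip version decides each participant independently.

```python
import random

def lottery_assignment(participants, n_groups, rng):
    """Shuffle the participants (like drawing names from a hat) and deal
    them into n_groups in turn, so group sizes differ by at most one."""
    shuffled = list(participants)
    rng.shuffle(shuffled)
    return [shuffled[i::n_groups] for i in range(n_groups)]

def coin_flip_assignment(participants, rng):
    """Flip a fair coin for each participant. Group sizes are left to
    chance, so they will usually not be exactly equal."""
    groups = {"control": [], "experimental": []}
    for person in participants:
        label = "control" if rng.random() < 0.5 else "experimental"
        groups[label].append(person)
    return groups

rng = random.Random(2024)  # fixed seed so the sketch is reproducible
participants = [f"P{i:02d}" for i in range(1, 21)]  # hypothetical IDs

lottery_groups = lottery_assignment(participants, n_groups=2, rng=rng)
coin_groups = coin_flip_assignment(participants, rng)
print([len(g) for g in lottery_groups])
print({name: len(members) for name, members in coin_groups.items()})
```

One practical difference is worth noting: the lottery approach fixes the group sizes in advance, whereas coin flips can leave one group noticeably larger than the other in a small sample.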

When is Random Assignment not used?

- When it is not ethically permissible: Randomization is only ethical if the researcher has no evidence that one treatment is superior to the other or that one treatment might have harmful side effects.

- When answering non-causal questions: If the researcher is just interested in predicting the probability of an event, the causal relationship between the variables is not important and observational designs would be more suitable than random assignment.

- When studying the effect of variables that cannot be manipulated: Some risk factors cannot be manipulated and so it would not make any sense to study them in a randomized trial. For example, we cannot randomly assign participants into categories based on age, gender, or genetic factors.

Drawbacks of Random Assignment

While randomization assures an unbiased assignment of participants to groups, it does not guarantee that the groups are equal. Extraneous variables can still differ between groups, and some group differences arise purely by chance.

Thus, researchers cannot produce perfectly equal groups in every study. Differences between the treatment group and control group might still exist, and the results of a single randomized trial may sometimes be wrong. This is an accepted limitation rather than a flaw in the method.

Building scientific evidence is a long and continuous process, and chance differences between groups tend to even out in the long run when data from many studies are aggregated in a meta-analysis.
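A small simulation can make this point concrete. The sketch below assumes a made-up baseline variable (body weight, normally distributed around 80 kg) and 2,000 hypothetical studies of 20 participants each. It is an illustration of chance imbalance, not a model of any real trial.

```python
import random
import statistics

rng = random.Random(7)  # fixed seed so the sketch is reproducible

def baseline_gap(n_per_group, rng):
    """Randomly split 2 * n_per_group hypothetical participants and return
    the difference in mean baseline weight (kg) between the two groups."""
    weights = [rng.gauss(80, 12) for _ in range(2 * n_per_group)]
    rng.shuffle(weights)  # random assignment to groups
    group_a = weights[:n_per_group]
    group_b = weights[n_per_group:]
    return statistics.mean(group_a) - statistics.mean(group_b)

# Repeat the same small randomized study many times.
gaps = [baseline_gap(10, rng) for _ in range(2000)]

typical_gap = statistics.mean(abs(g) for g in gaps)
average_gap = statistics.mean(gaps)
print(f"typical gap in a single study: {typical_gap:.2f} kg")
print(f"average gap across all studies: {average_gap:.2f} kg")
```

Any single small study usually starts with some imbalance between groups, but because the imbalance is as likely to favor one group as the other, it averages out close to zero across many studies.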

Additionally, external validity (i.e., the extent to which the researcher can use the results of the study to generalize to the larger population) can be limited in studies that use random assignment.

Random assignment is challenging to implement outside of controlled laboratory conditions and might not represent what would happen in the real world at the population level.

Random assignment can also be more costly than simple observational studies, where an investigator is just observing events without intervening with the population.

Randomization also can be time-consuming and challenging, especially when participants refuse to receive the assigned treatment or do not adhere to recommendations.

What is the difference between random sampling and random assignment?

Random sampling refers to randomly selecting a sample of participants from a population. Random assignment refers to randomly assigning participants to treatment groups from the selected sample.

Does random assignment increase internal validity?

Yes, random assignment helps ensure that there are no systematic differences between the participants in each group, enhancing the study’s internal validity.

Does random assignment reduce sampling error?

No, not directly. Random assignment gives participants an equal chance of being assigned to either a control group or an experimental group, which makes the groups comparable to each other. It does not make the sample more representative of the population.

Sampling error arises because a sample only approximates the population from which it is drawn. Random sampling, not random assignment, is the way to minimize sampling error.

When is random assignment not possible?

Random assignment is not possible when the experimenters cannot control the treatment or independent variable.

For example, if you want to compare how men and women perform on a test, you cannot randomly assign subjects to these groups.

Participants are not randomly assigned to different groups in this study, but instead assigned based on their characteristics.

Does random assignment eliminate confounding variables?

Not entirely. Random assignment tends to distribute confounding variables evenly among the study groups, so they are unlikely to be systematically related to the treatment. Chance imbalances can still occur, especially in small samples, but randomization removes any systematic relationship between a confounding variable and the treatment.

Why is random assignment of participants to treatment conditions in an experiment used?

Random assignment is used to ensure that all groups are comparable at the start of a study. This allows researchers to conclude that the outcomes of the study can be attributed to the intervention at hand and to rule out alternative explanations for study results.

Further Reading

- Bogomolnaia, A., & Moulin, H. (2001). A new solution to the random assignment problem. Journal of Economic Theory, 100(2), 295-328.

- Krause, M. S., & Howard, K. I. (2003). What random assignment does and does not do. Journal of Clinical Psychology, 59(7), 751-766.


Statistics By Jim

Making statistics intuitive

Random Assignment in Experiments

By Jim Frost

Random assignment uses chance to assign subjects to the control and treatment groups in an experiment. This process helps ensure that the groups are equivalent at the beginning of the study, which makes it safer to assume the treatments caused any differences between groups that the experimenters observe at the end of the study.

Statistical analysis alone, however, cannot establish that a treatment caused an effect. That might be a big surprise! At this point, you might be wondering about all of those studies that use statistics to assess the effects of different treatments. There’s a critical separation between significance and causality:

- Statistical procedures determine whether an effect is significant.

- Experimental designs determine how confidently you can assume that a treatment causes the effect.

In this post, learn how using random assignment in experiments can help you identify causal relationships.

Correlation, Causation, and Confounding Variables

Random assignment helps you separate causation from correlation and rule out confounding variables. As a critical component of the scientific method, experiments typically set up contrasts between a control group and one or more treatment groups. The idea is to determine whether the effect, which is the difference between a treatment group and the control group, is statistically significant. If the effect is significant, group assignment correlates with different outcomes.

However, as you have no doubt heard, correlation does not necessarily imply causation. In other words, the experimental groups can have different mean outcomes, but the treatment might not be causing those differences even though the differences are statistically significant.

The difficulty in definitively stating that a treatment caused the difference is due to potential confounding variables or confounders. Confounders are alternative explanations for differences between the experimental groups. Confounding variables correlate with both the experimental groups and the outcome variable. In this situation, confounding variables can be the actual cause for the outcome differences rather than the treatments themselves. As you’ll see, if an experiment does not account for confounding variables, they can bias the results and make them untrustworthy.


Example of Confounding in an Experiment

Suppose we run an experiment on vitamin supplements with two groups:

- Control group: Does not consume vitamin supplements.

- Treatment group: Regularly consumes vitamin supplements.

Imagine we measure a specific health outcome. After the experiment is complete, we perform a 2-sample t-test to determine whether the mean outcomes for these two groups are different. Assume the test results indicate that the mean health outcome in the treatment group is significantly better than the control group.
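To make the test concrete, here is a minimal sketch that computes the t statistic by hand using only Python's standard library. It uses Welch's version of the 2-sample t-test, which does not assume equal variances; that choice, the function name, and the health scores are all assumptions made for illustration. In practice, you would compare the statistic to a t distribution (or use a statistics package) to obtain a p-value.

```python
import math
import statistics

def welch_t(sample_a, sample_b):
    """Return Welch's t statistic and approximate degrees of freedom
    for the difference in means between two independent samples."""
    mean_a, mean_b = statistics.mean(sample_a), statistics.mean(sample_b)
    var_a, var_b = statistics.variance(sample_a), statistics.variance(sample_b)
    n_a, n_b = len(sample_a), len(sample_b)
    # Squared standard error contributed by each group.
    se_a, se_b = var_a / n_a, var_b / n_b
    t = (mean_a - mean_b) / math.sqrt(se_a + se_b)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (se_a + se_b) ** 2 / (se_a ** 2 / (n_a - 1) + se_b ** 2 / (n_b - 1))
    return t, df

# Made-up health scores, for illustration only (higher is better).
treatment = [72, 75, 78, 74, 80, 77, 73, 79]
control = [70, 68, 74, 71, 69, 73, 72, 67]

t_stat, df = welch_t(treatment, control)
print(f"t = {t_stat:.2f}, df = {df:.1f}")
```

A large t statistic tells us the group means differ by more than chance would easily explain. It says nothing about why they differ.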

Why can’t we assume that the vitamins improved the health outcomes? After all, only the treatment group took the vitamins.


Alternative Explanations for Differences in Outcomes

The answer to that question depends on how we assigned the subjects to the experimental groups. If we let the subjects decide which group to join based on their existing vitamin habits, it opens the door to confounding variables. It’s reasonable to assume that people who take vitamins regularly also tend to have other healthy habits. These habits are confounders because they correlate with both vitamin consumption (experimental group) and the health outcome measure.

Random assignment prevents this self-sorting of participants and reduces the likelihood that the groups start with systematic differences.

In fact, studies have found that supplement users are more physically active, have healthier diets, have lower blood pressure, and so on compared to those who don’t take supplements. If subjects who already take vitamins regularly join the treatment group voluntarily, they bring these healthy habits disproportionately to the treatment group. Consequently, these habits will be much more prevalent in the treatment group than the control group.

The healthy habits are the confounding variables—the potential alternative explanations for the difference in our study’s health outcome. It’s entirely possible that these systematic differences between groups at the start of the study might cause the difference in the health outcome at the end of the study—and not the vitamin consumption itself!

If our experiment doesn’t account for these confounding variables, we can’t trust the results. While we obtained statistically significant results with the 2-sample t-test for health outcomes, we don’t know for sure whether the vitamins, the systematic difference in habits, or some combination of the two caused the improvements.

Learn why many randomized clinical experiments use a placebo to control for the Placebo Effect .

Experiments Must Account for Confounding Variables

Your experimental design must account for confounding variables to avoid their problems. Scientific studies commonly use the following methods to handle confounders:

- Use control variables to keep them constant throughout an experiment.

- Statistically control for them in an observational study.

- Use random assignment to reduce the likelihood that systematic differences exist between experimental groups when the study begins.

Let’s take a look at how random assignment works in an experimental design.

Random Assignment Can Reduce the Impact of Confounding Variables

Note that random assignment is different from random sampling. Random sampling is a process for obtaining a sample that accurately represents a population.

Random assignment uses a chance process to assign subjects to experimental groups. Using random assignment requires that the experimenters can control the group assignment for all study subjects. For our study, we must be able to assign our participants to either the control group or the supplement group. Clearly, if we don’t have the ability to assign subjects to the groups, we can’t use random assignment!

Additionally, the process must have an equal probability of assigning a subject to any of the groups. For example, in our vitamin supplement study, we can use a coin toss to assign each subject to either the control group or supplement group. For more complex experimental designs, we can use a random number generator or even draw names out of a hat.

Random Assignment Distributes Confounders Equally

The random assignment process tends to distribute confounding properties evenly amongst your experimental groups. In other words, randomness helps eliminate systematic differences between groups. For our study, flipping the coin tends to equalize the distribution of subjects with healthier habits between the control and treatment group. Consequently, these two groups should start roughly equal for all confounding variables, including healthy habits!
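A quick simulation illustrates the idea. In this sketch, the sample size of 2,000 and the 30% rate of healthy habits are invented numbers, and the habit is treated as something the researchers never measure. The coin toss knows nothing about it, yet both groups end up with a similar share.

```python
import random

rng = random.Random(11)  # fixed seed so the sketch is reproducible

# Hypothetical sample: 2,000 subjects, roughly 30% of whom have healthy
# habits that the researchers never measure.
has_healthy_habits = [rng.random() < 0.30 for _ in range(2000)]

control, treatment = [], []
for habit in has_healthy_habits:
    # Fair coin toss per subject; the toss ignores the habit entirely.
    (treatment if rng.random() < 0.5 else control).append(habit)

share_control = sum(control) / len(control)
share_treatment = sum(treatment) / len(treatment)
print(f"healthy habits in control:   {share_control:.1%}")
print(f"healthy habits in treatment: {share_treatment:.1%}")
```

The two shares will rarely match exactly, and with a much smaller sample the gap between them can be considerably wider.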

Random assignment is a simple, elegant solution to a complex problem. For any given study area, there can be a long list of confounding variables that you could worry about. However, using random assignment, you don’t need to know what they are, how to detect them, or even measure them. Instead, use random assignment to equalize them across your experimental groups so they’re not a problem.

Because random assignment helps ensure that the groups are comparable when the experiment begins, you can be more confident that the treatments caused the post-study differences. Random assignment helps increase the internal validity of your study.

Comparing the Vitamin Study With and Without Random Assignment

Let’s compare two scenarios involving our hypothetical vitamin study. We’ll assume that the study obtains statistically significant results in both cases.

Scenario 1: We don’t use random assignment and, unbeknownst to us, subjects with healthier habits disproportionately end up in the supplement treatment group. The experimental groups differ by both healthy habits and vitamin consumption. Consequently, we can’t determine whether it was the habits or vitamins that improved the outcomes.

Scenario 2: We use random assignment and, consequently, the treatment and control groups start with roughly equal levels of healthy habits. The intentional introduction of vitamin supplements in the treatment group is the primary difference between the groups. Consequently, we can more confidently assert that the supplements caused an improvement in health outcomes.

For both scenarios, the statistical results could be identical. However, the methodology behind the second scenario makes a stronger case for a causal relationship between vitamin supplement consumption and health outcomes.
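The contrast between the two scenarios can be sketched with a simulation. Everything here is assumed for illustration: vitamins are given no effect at all, healthy habits add 8 points to a made-up health score, and in the self-selected version healthy subjects choose vitamins 80% of the time versus 20% for everyone else.

```python
import random
import statistics

rng = random.Random(3)  # fixed seed so the sketch is reproducible

def run_study(randomized, n=4000):
    """Simulate the hypothetical vitamin study and return the difference in
    mean health score (treatment minus control). Vitamins have NO effect
    here; only healthy habits raise the score."""
    treatment, control = [], []
    for _ in range(n):
        healthy = rng.random() < 0.5
        score = rng.gauss(50, 10) + (8 if healthy else 0)
        if randomized:
            takes_vitamins = rng.random() < 0.5  # coin toss
        else:
            # Self-selection: healthy subjects opt in far more often.
            takes_vitamins = rng.random() < (0.8 if healthy else 0.2)
        (treatment if takes_vitamins else control).append(score)
    return statistics.mean(treatment) - statistics.mean(control)

gap_self_selected = run_study(randomized=False)
gap_randomized = run_study(randomized=True)
print(f"self-selected groups: {gap_self_selected:+.2f}")
print(f"randomly assigned:    {gap_randomized:+.2f}")
```

Under these assumptions, the self-selected study shows a sizable "vitamin effect" that is produced entirely by habits, while the randomized study shows a difference close to zero.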

How important is it to use the correct methodology? Well, if the relationship between vitamins and health outcomes is not causal, then consuming vitamins won’t cause your health outcomes to improve regardless of what the study indicates. Instead, it’s probably all the other healthy habits!

Learn more about Randomized Controlled Trials (RCTs), which are the gold standard for identifying causal relationships because they use random assignment.

Drawbacks of Random Assignment

Random assignment helps reduce the chances of systematic differences between the groups at the start of an experiment and, thereby, mitigates the threats of confounding variables and alternative explanations. However, the process does not always equalize all of the confounding variables. Its random nature tends to eliminate systematic differences, but it doesn’t always succeed.

Sometimes random assignment is impossible because the experimenters cannot control the treatment or independent variable. For example, if you want to determine how individuals with and without depression perform on a test, you cannot randomly assign subjects to these groups. The same difficulty occurs when you’re studying differences between genders.

In other cases, there might be ethical issues. For example, in a randomized experiment, the researchers would want to withhold treatment for the control group. However, if the treatments are vaccinations, it might be unethical to withhold the vaccinations.

Other times, random assignment might be possible, but it is very challenging. For example, with vitamin consumption, it’s generally thought that if vitamin supplements cause health improvements, it’s only after very long-term use. It’s hard to enforce random assignment with a strict regimen for usage in one group and non-usage in the other group over the long run. Or imagine a study about smoking. The researchers would find it difficult to assign subjects to the smoking and non-smoking groups randomly!

Fortunately, if you can’t use random assignment to help reduce the problem of confounding variables, there are different methods available. The other primary approach is to perform an observational study and incorporate the confounders into the statistical model itself. For more information, read my post Observational Studies Explained .

Read About Real Experiments that Used Random Assignment

I’ve written several blog posts about studies that have used random assignment to make causal inferences. Read studies about the following:

- Flu Vaccinations

- COVID-19 Vaccinations

Sullivan L. Random assignment versus random selection. SAGE Glossary of the Social and Behavioral Sciences, SAGE Publications, Inc.; 2009.


Reader Interactions

November 13, 2019 at 4:59 am

Hi Jim, I have a question of randomly assigning participants to one of two conditions when it is an ongoing study and you are not sure of how many participants there will be. I am using this random assignment tool for factorial experiments. http://methodologymedia.psu.edu/most/rannumgenerator It asks you for the total number of participants but at this point, I am not sure how many there will be. Thanks for any advice you can give me, Floyd

May 28, 2019 at 11:34 am

Jim, can you comment on the validity of using the following approach when we can’t use random assignments. I’m in education, we have an ACT prep course that we offer. We can’t force students to take it and we can’t keep them from taking it either. But we want to know if it’s working. Let’s say that by senior year all students who are going to take the ACT have taken it. Let’s also say that I’m only including students who have taken it twice (so I can show growth between first and second time taking it). What I’ve done to address confounders is to go back to say 8th or 9th grade (prior to anyone taking the ACT or the ACT prep course) and run an analysis showing the two groups are not significantly different to start with. Is this valid? If the ACT prep students were higher achievers in 8th or 9th grade, I could not assume my prep course is effecting greater growth, but if they were not significantly different in 8th or 9th grade, I can assume the significant difference in ACT growth (from first to second testing) is due to the prep course. Yes or no?

May 26, 2019 at 5:37 pm

Nice post! I think the key to understanding scientific research is to understand randomization. And most people don’t get it.

May 27, 2019 at 9:48 pm

Thank you, Anoop!

I think randomness in an experiment is a funny thing. The issue of confounding factors is a serious problem. You might not even know what they are! But, use random assignment and, voila, the problem usually goes away! If you can’t use random assignment, suddenly you have a whole host of issues to worry about, which I’ll be writing about in more detail in my upcoming post about observational experiments!


Random Assignment in Psychology (Definition + 40 Examples)

Have you ever wondered how researchers discover new ways to help people learn, make decisions, or overcome challenges? A hidden hero in this adventure of discovery is a method called random assignment, a cornerstone in psychological research that helps scientists uncover the truths about the human mind and behavior.

Random Assignment is a process used in research where each participant has an equal chance of being placed in any group within the study. This technique is essential in experiments as it helps to eliminate biases, ensuring that the different groups being compared are similar in all important aspects.

By doing so, researchers can be confident that any differences observed are likely due to the variable being tested, rather than other factors.

In this article, we’ll explore the intriguing world of random assignment, diving into its history, principles, real-world examples, and the impact it has had on the field of psychology.

History of Random Assignment

Stepping back in time, we delve into the origins of random assignment, which finds its roots in the early 20th century.

The pioneering mind behind this innovative technique was Sir Ronald A. Fisher, a British statistician and biologist. Fisher introduced the concept of random assignment in the 1920s, aiming to improve the quality and reliability of experimental research.

His contributions laid the groundwork for the method's evolution and its widespread adoption in various fields, particularly in psychology.

Fisher’s groundbreaking work on random assignment was motivated by his desire to control for confounding variables – those pesky factors that could muddy the waters of research findings.

By assigning participants to different groups purely by chance, he realized that the influence of these confounding variables could be minimized, paving the way for more accurate and trustworthy results.

Early Studies Utilizing Random Assignment

Following Fisher's initial development, random assignment started to gain traction in the research community. Early studies adopting this methodology focused on a variety of topics, from agriculture (which was Fisher’s primary field of interest) to medicine and psychology.

The approach allowed researchers to draw stronger conclusions from their experiments, bolstering the development of new theories and practices.

One notable early study utilizing random assignment was conducted in the field of educational psychology. Researchers were keen to understand the impact of different teaching methods on student outcomes.

By randomly assigning students to various instructional approaches, they were able to isolate the effects of the teaching methods, leading to valuable insights and recommendations for educators.

Evolution of the Methodology

As the decades rolled on, random assignment continued to evolve and adapt to the changing landscape of research.

Advances in technology introduced new tools and techniques for implementing randomization, such as computerized random number generators, which offered greater precision and ease of use.

The application of random assignment expanded beyond the confines of the laboratory, finding its way into field studies and large-scale surveys.

Researchers across diverse disciplines embraced the methodology, recognizing its potential to enhance the validity of their findings and contribute to the advancement of knowledge.

From its humble beginnings in the early 20th century to its widespread use today, random assignment has proven to be a cornerstone of scientific inquiry.

Its development and evolution have played a pivotal role in shaping the landscape of psychological research, driving discoveries that have improved lives and deepened our understanding of the human experience.

Principles of Random Assignment

Delving into the heart of random assignment, we uncover the theories and principles that form its foundation.

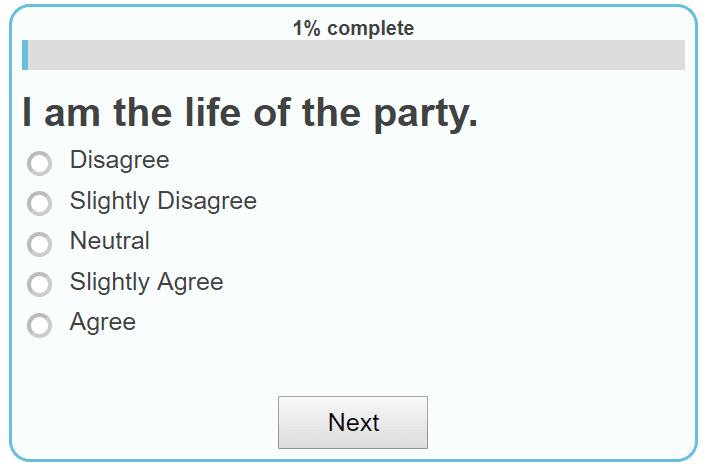

The method is steeped in the basics of probability theory and statistical inference, ensuring that each participant has an equal chance of being placed in any group, thus fostering fair and unbiased results.

Basic Principles of Random Assignment

Understanding the core principles of random assignment is key to grasping its significance in research. There are three principles: equal probability of selection, reduction of bias, and ensuring representativeness.

The first principle, equal probability of selection, ensures that every participant has an identical chance of being assigned to any group in the study. This randomness is crucial as it mitigates the risk of bias and establishes a level playing field.

The second principle focuses on the reduction of bias. Random assignment acts as a safeguard, making it likely that the groups being compared are alike in all essential aspects before the experiment begins.

This similarity between groups allows researchers to attribute any differences observed in the outcomes directly to the independent variable being studied.

Lastly, ensuring representativeness is a vital principle. When participants are assigned randomly, each group is more likely to be representative of the full sample of participants.

Whether the study’s findings generalize to the larger population, however, depends mainly on how the sample itself was selected, which is the job of random selection rather than random assignment.

Theoretical Foundation

The theoretical foundation of random assignment lies in probability theory and statistical inference.

Probability theory deals with the likelihood of different outcomes, providing a mathematical framework for analyzing random phenomena. In the context of random assignment, it helps in ensuring that each participant has an equal chance of being placed in any group.

Statistical inference, on the other hand, allows researchers to draw conclusions about a population based on a sample of data drawn from that population. It is the mechanism through which the results of a study can be generalized to a broader context.

Random assignment enhances the reliability of statistical inferences about a treatment’s effect by reducing bias in how the comparison groups are formed.

Differentiating Random Assignment from Random Selection

It’s essential to distinguish between random assignment and random selection, as the two terms, while related, have distinct meanings in the realm of research.

Random assignment refers to how participants are placed into different groups in an experiment, aiming to control for confounding variables and help determine causes.

In contrast, random selection pertains to how individuals are chosen to participate in a study. This method is used to ensure that the sample of participants is representative of the larger population, which is vital for the external validity of the research.

While both methods are rooted in randomness and probability, they serve different purposes in the research process.

Understanding the theories, principles, and distinctions of random assignment illuminates its pivotal role in psychological research.

This method, anchored in probability theory and statistical inference, serves as a beacon of reliability, guiding researchers in their quest for knowledge and ensuring that their findings stand the test of validity and applicability.

Methodology of Random Assignment

Implementing random assignment in a study is a meticulous process that involves several crucial steps.

The initial step is participant selection, where individuals are chosen to partake in the study. This stage is critical to ensure that the pool of participants is diverse and representative of the population the study aims to generalize to.

Once the pool of participants has been established, the actual assignment process begins. In this step, each participant is allocated randomly to one of the groups in the study.

Researchers use various tools, such as random number generators or computerized methods, to ensure that this assignment is genuinely random and free from biases.
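As an illustration of the assignment step described above, here is a minimal Python sketch that shuffles a participant list with a pseudo-random number generator and deals participants into groups in turn. The function name `assign_randomly`, the participant IDs, and the seed are hypothetical choices made for this example; real studies may use dedicated software instead.

```python
import random


def assign_randomly(participants, groups=("control", "experimental"), seed=None):
    """Shuffle participants, then deal them into groups in turn.

    Dealing in turn keeps group sizes as equal as possible.
    """
    rng = random.Random(seed)      # a seed makes the allocation reproducible
    shuffled = list(participants)  # copy so the caller's list is not changed
    rng.shuffle(shuffled)
    assignment = {group: [] for group in groups}
    for index, person in enumerate(shuffled):
        assignment[groups[index % len(groups)]].append(person)
    return assignment


# Hypothetical participant IDs P01..P20
participants = [f"P{n:02d}" for n in range(1, 21)]
result = assign_randomly(participants, seed=42)
for group, members in result.items():
    print(group, len(members), members)
```

Recording the seed is one way to let someone else audit or reproduce the allocation later.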

Monitoring and adjusting form the final step in the implementation of random assignment. Researchers need to continuously observe the groups to ensure that they remain comparable in all essential aspects throughout the study.

If any significant discrepancies arise, adjustments might be necessary to maintain the study’s integrity and validity.
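One way to check whether groups are comparable on a measured characteristic is to compute a standardized mean difference between them. The sketch below assumes a made-up list of participant ages and a hypothetical helper `standardized_mean_difference`; it illustrates the idea and is not a prescribed monitoring procedure.

```python
import random
import statistics


def standardized_mean_difference(a, b):
    """Difference in group means divided by the pooled standard deviation."""
    pooled_sd = ((statistics.variance(a) + statistics.variance(b)) / 2) ** 0.5
    return (statistics.mean(a) - statistics.mean(b)) / pooled_sd


# Made-up ages for 200 participants (illustrative data only)
rng = random.Random(7)
ages = [rng.randint(18, 65) for _ in range(200)]

# Random assignment: shuffle, then split into two groups of 100
rng.shuffle(ages)
control_ages, treatment_ages = ages[:100], ages[100:]

smd = standardized_mean_difference(control_ages, treatment_ages)
print(f"Standardized mean difference in age: {smd:.3f}")
```

A value near zero suggests the two groups are similar on that characteristic; a large value would be a reason to look more closely at how the groups were formed.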

Tools and Techniques Used

The evolution of technology has introduced a variety of tools and techniques to facilitate random assignment.

Random number generators, both manual and computerized, are commonly used to assign participants to different groups. These generators ensure that each individual has an equal chance of being placed in any group, upholding the principle of equal probability of assignment.

In addition to random number generators, researchers often use specialized computer software designed for statistical analysis and experimental design.

These software programs offer advanced features that allow for precise and efficient random assignment, minimizing the risk of human error and enhancing the study’s reliability.

Ethical Considerations

The implementation of random assignment is not devoid of ethical considerations. Informed consent is a fundamental ethical principle that researchers must uphold.

Informed consent means that every participant should be fully informed about the nature of the study, the procedures involved, and any potential risks or benefits, ensuring that they voluntarily agree to participate.

Beyond informed consent, researchers must conduct a thorough risk and benefit analysis. The potential benefits of the study should outweigh any risks or harms to the participants.

Safeguarding the well-being of participants is paramount, and any study employing random assignment must adhere to established ethical guidelines and standards.

Conclusion of Methodology

The methodology of random assignment, while seemingly straightforward, is a multifaceted process that demands precision, fairness, and ethical integrity. From participant selection to assignment and monitoring, each step is crucial to ensure the validity of the study’s findings.

The tools and techniques employed, coupled with a steadfast commitment to ethical principles, underscore the significance of random assignment as a cornerstone of robust psychological research.

Benefits of Random Assignment in Psychological Research

The impact and importance of random assignment in psychological research cannot be overstated. It is fundamental for ensuring the study is accurate, allowing the researchers to determine whether the treatment actually caused the results they saw, and supporting the application of the findings to the real world.

Facilitating Causal Inferences

When participants are randomly assigned to different groups, researchers can be more confident that the observed effects are due to the independent variable being changed, and not other factors.

This ability to determine the cause is called causal inference.

This confidence allows for the drawing of causal relationships, which are foundational for theory development and application in psychology.

Ensuring Internal Validity

One of the foremost impacts of random assignment is its ability to enhance the internal validity of an experiment.

Internal validity refers to the extent to which a researcher can assert that changes in the dependent variable are solely due to manipulations of the independent variable, and not due to confounding variables.

By ensuring that each participant has an equal chance of being in any condition of the experiment, random assignment helps control for participant characteristics that could otherwise complicate the results.

Enhancing Generalizability

Beyond internal validity, random assignment can also support the generalizability of research findings.

It does so when it is paired with random selection: random selection makes the sample representative of the larger population, and random assignment makes the groups within that sample comparable, which allows researchers to apply their findings more broadly.

This representative nature is essential for the practical application of research, impacting policy, interventions, and psychological therapies.

Limitations of Random Assignment

Potential for Implementation Issues

While the principles of random assignment are robust, the method can face implementation issues.

One of the most common problems is logistical constraints. Some studies, due to their nature or the specific population being studied, find it challenging to implement random assignment effectively.

For instance, in educational settings, logistical issues such as class schedules and school policies might prevent the random allocation of students to different teaching methods.

Ethical Dilemmas

Random assignment, while methodologically sound, can also present ethical dilemmas.

In some cases, withholding a potentially beneficial treatment from one of the groups of participants can raise serious ethical questions, especially in medical or clinical research where participants' well-being might be directly affected.

Researchers must navigate these ethical waters carefully, balancing the pursuit of knowledge with the well-being of participants.

Generalizability Concerns

Even when implemented correctly, random assignment does not always guarantee generalizable results.

The types of people in the participant pool, the specific context of the study, and the nature of the variables being studied can all influence the extent to which the findings can be applied to the broader population.

Researchers must be cautious in making broad generalizations from studies, even those employing strict random assignment.

Practical and Real-World Limitations

In the real world, many variables cannot be manipulated for ethical or practical reasons, limiting the applicability of random assignment.

For instance, researchers cannot randomly assign individuals to different levels of intelligence, socioeconomic status, or cultural backgrounds.

This limitation necessitates the use of other research designs, such as correlational or observational studies, when exploring relationships involving such variables.

Response to Critiques

In response to these critiques, people in favor of random assignment argue that the method, despite its limitations, remains one of the most reliable ways to establish cause and effect in experimental research.

They acknowledge the challenges and ethical considerations but emphasize the rigorous frameworks in place to address them.

The ongoing discussion around the limitations and critiques of random assignment contributes to the evolution of the method, making sure it is continuously relevant and applicable in psychological research.

While random assignment is a powerful tool in experimental research, it is not without its critiques and limitations. Implementation issues, ethical dilemmas, generalizability concerns, and real-world limitations can pose significant challenges.

However, the continued discourse and refinement around these issues underline the method's enduring significance in the pursuit of knowledge in psychology.

When researchers apply it carefully and ethically, random assignment stays a really important part of studying how people act and think.

Real-World Applications and Examples

Random assignment has been employed in many studies across various fields of psychology, leading to significant discoveries and advancements.

Here are some real-world applications and examples illustrating the diversity and impact of this method:

- Medicine and Health Psychology: Randomized Controlled Trials (RCTs) are the gold standard in medical research. In these studies, participants are randomly assigned to either the treatment or control group to test the efficacy of new medications or interventions.

- Educational Psychology: Studies in this field have used random assignment to explore the effects of different teaching methods, classroom environments, and educational technologies on student learning and outcomes.

- Cognitive Psychology: Researchers have employed random assignment to investigate various aspects of human cognition, including memory, attention, and problem-solving, leading to a deeper understanding of how the mind works.

- Social Psychology: Random assignment has been instrumental in studying social phenomena, such as conformity, aggression, and prosocial behavior, shedding light on the intricate dynamics of human interaction.

Let's get into some specific examples. You'll need to know one term though, and that is "control group." A control group is a set of participants in a study who do not receive the treatment or intervention being tested, serving as a baseline to compare with the group that does, in order to assess the effectiveness of the treatment.

- Smoking Cessation Study: Researchers used random assignment to put participants into two groups. One group received a new anti-smoking program, while the other did not. This helped determine if the program was effective in helping people quit smoking.

- Math Tutoring Program: A study on students used random assignment to place them into two groups. One group received additional math tutoring, while the other continued with regular classes, to see if the extra help improved their grades.

- Exercise and Mental Health: Adults were randomly assigned to either an exercise group or a control group to study the impact of physical activity on mental health and mood.

- Diet and Weight Loss: A study randomly assigned participants to different diet plans to compare their effectiveness in promoting weight loss and improving health markers.

- Sleep and Learning: Researchers randomly assigned students to either a sleep extension group or a regular sleep group to study the impact of sleep on learning and memory.

- Classroom Seating Arrangement: Teachers used random assignment to place students in different seating arrangements to examine the effect on focus and academic performance.

- Music and Productivity: Employees were randomly assigned to listen to music or work in silence to investigate the effect of music on workplace productivity.

- Medication for ADHD: Children with ADHD were randomly assigned to receive either medication, behavioral therapy, or a placebo to compare treatment effectiveness.

- Mindfulness Meditation for Stress: Adults were randomly assigned to a mindfulness meditation group or a waitlist control group to study the impact on stress levels.

- Video Games and Aggression: A study randomly assigned participants to play either violent or non-violent video games and then measured their aggression levels.

- Online Learning Platforms: Students were randomly assigned to use different online learning platforms to evaluate their effectiveness in enhancing learning outcomes.

- Hand Sanitizers in Schools: Schools were randomly assigned to use hand sanitizers or not to study the impact on student illness and absenteeism.

- Caffeine and Alertness: Participants were randomly assigned to consume caffeinated or decaffeinated beverages to measure the effects on alertness and cognitive performance.

- Green Spaces and Well-being: Neighborhoods were randomly assigned to receive green space interventions to study the impact on residents’ well-being and community connections.

- Pet Therapy for Hospital Patients: Patients were randomly assigned to receive pet therapy or standard care to assess the impact on recovery and mood.

- Yoga for Chronic Pain: Individuals with chronic pain were randomly assigned to a yoga intervention group or a control group to study the effect on pain levels and quality of life.

- Flu Vaccines Effectiveness: Different groups of people were randomly assigned to receive either the flu vaccine or a placebo to determine the vaccine’s effectiveness.

- Reading Strategies for Dyslexia: Children with dyslexia were randomly assigned to different reading intervention strategies to compare their effectiveness.

- Physical Environment and Creativity: Participants were randomly assigned to different room setups to study the impact of physical environment on creative thinking.

- Laughter Therapy for Depression: Individuals with depression were randomly assigned to laughter therapy sessions or control groups to assess the impact on mood.

- Financial Incentives for Exercise: Participants were randomly assigned to receive financial incentives for exercising to study the impact on physical activity levels.

- Art Therapy for Anxiety: Individuals with anxiety were randomly assigned to art therapy sessions or a waitlist control group to measure the effect on anxiety levels.

- Natural Light in Offices: Employees were randomly assigned to workspaces with natural or artificial light to study the impact on productivity and job satisfaction.

- School Start Times and Academic Performance: Schools were randomly assigned different start times to study the effect on student academic performance and well-being.

- Horticulture Therapy for Seniors: Older adults were randomly assigned to participate in horticulture therapy or traditional activities to study the impact on cognitive function and life satisfaction.

- Hydration and Cognitive Function: Participants were randomly assigned to different hydration levels to measure the impact on cognitive function and alertness.

- Intergenerational Programs: Seniors and young people were randomly assigned to intergenerational programs to study the effects on well-being and cross-generational understanding.

- Therapeutic Horseback Riding for Autism: Children with autism were randomly assigned to therapeutic horseback riding or traditional therapy to study the impact on social communication skills.

- Active Commuting and Health: Employees were randomly assigned to active commuting (cycling, walking) or passive commuting to study the effect on physical health.

- Mindful Eating for Weight Management: Individuals were randomly assigned to mindful eating workshops or control groups to study the impact on weight management and eating habits.

- Noise Levels and Learning: Students were randomly assigned to classrooms with different noise levels to study the effect on learning and concentration.

- Bilingual Education Methods: Schools were randomly assigned different bilingual education methods to compare their effectiveness in language acquisition.

- Outdoor Play and Child Development: Children were randomly assigned to different amounts of outdoor playtime to study the impact on physical and cognitive development.

- Social Media Detox: Participants were randomly assigned to a social media detox or regular usage to study the impact on mental health and well-being.

- Therapeutic Writing for Trauma Survivors: Individuals who experienced trauma were randomly assigned to therapeutic writing sessions or control groups to study the impact on psychological well-being.

- Mentoring Programs for At-risk Youth: At-risk youth were randomly assigned to mentoring programs or control groups to assess the impact on academic achievement and behavior.

- Dance Therapy for Parkinson’s Disease: Individuals with Parkinson’s disease were randomly assigned to dance therapy or traditional exercise to study the effect on motor function and quality of life.

- Aquaponics in Schools: Schools were randomly assigned to implement aquaponics programs to study the impact on student engagement and environmental awareness.

- Virtual Reality for Phobia Treatment: Individuals with phobias were randomly assigned to virtual reality exposure therapy or traditional therapy to compare effectiveness.

- Gardening and Mental Health: Participants were randomly assigned to engage in gardening or other leisure activities to study the impact on mental health and stress reduction.

Each of these studies exemplifies how random assignment is utilized in various fields and settings, shedding light on the multitude of ways it can be applied to glean valuable insights and knowledge.

Real-world Impact of Random Assignment

Random assignment is like a key tool in the world of learning about people's minds and behaviors. It’s super important and helps in many different areas of our everyday lives. It helps make better rules, creates new ways to help people, and is used in lots of different fields.

Health and Medicine

In health and medicine, random assignment has helped doctors and scientists make lots of discoveries. It’s a big part of tests that help create new medicines and treatments.

By putting people into different groups by chance, scientists can really see if a medicine works.

This has led to new ways to help people with all sorts of health problems, like diabetes, heart disease, and mental health issues like depression and anxiety.

Schools and education have also learned a lot from random assignment. Researchers have used it to look at different ways of teaching, what kind of classrooms are best, and how technology can help learning.

This knowledge has helped make better school rules, develop what we learn in school, and find the best ways to teach students of all ages and backgrounds.

Workplace and Organizational Behavior

Random assignment helps us understand how people act at work and what makes a workplace good or bad.

Studies have looked at different kinds of workplaces, how bosses should act, and how teams should be put together. This has helped companies make better rules and create places to work that are helpful and make people happy.

Environmental and Social Changes

Random assignment is also used to see how changes in the community and environment affect people. Studies have looked at community projects, changes to the environment, and social programs to see how they help or hurt people’s well-being.

This has led to better community projects, efforts to protect the environment, and programs to help people in society.

Technology and Human Interaction

In our world where technology is always changing, studies with random assignment help us see how tech like social media, virtual reality, and online stuff affect how we act and feel.

This has helped make better and safer technology and rules about using it so that everyone can benefit.

The effects of random assignment go far and wide, way beyond just a science lab. It helps us understand lots of different things, leads to new and improved ways to do things, and really makes a difference in the world around us.

From making healthcare and schools better to creating positive changes in communities and the environment, the real-world impact of random assignment shows just how important it is in helping us learn and make the world a better place.

So, what have we learned? Random assignment is like a super tool in learning about how people think and act. It's like a detective helping us find clues and solve mysteries in many parts of our lives.

From creating new medicines to helping kids learn better in school, and from making workplaces happier to protecting the environment, it’s got a big job!

This method isn’t just something scientists use in labs; it reaches out and touches our everyday lives. It helps make positive changes and teaches us valuable lessons.

Whether we are talking about technology, health, education, or the environment, random assignment is there, working behind the scenes, making things better and safer for all of us.

In the end, the simple act of putting people into groups by chance helps us make big discoveries and improvements. It’s like throwing a small stone into a pond and watching the ripples spread out far and wide.

Thanks to random assignment, we are always learning, growing, and finding new ways to make our world a happier and healthier place for everyone!



Random Assignment – A Simple Introduction with Examples


Completing a research or thesis paper is more work than most students imagine. For instance, you must conduct experiments before coming up with conclusions. Random assignment, a key methodology in academic research, ensures every participant has an equal chance of being placed in any group within an experiment. In experimental studies, the random assignment of participants is a vital element, which this article will discuss.

Table of Contents

- 1 Random Assignment – In a Nutshell

- 2 Definition: Random assignment

- 3 Importance of random assignment

- 4 Random assignment vs. random sampling

- 5 How to use random assignment

- 6 When random assignment is not used

Random Assignment – In a Nutshell

- Random assignment is where you randomly place research participants into specific groups.

- This method reduces bias in the results by ensuring that all participants have an equal chance of getting into either group.

- Random assignment is usually used in independent measures or between-group experiment designs.

Definition: Random assignment

Random assignment is the random placement of participants into different groups in experimental research. It is used in studies that involve a sample, a control group, an experimental design, and a randomized design.

Importance of random assignment

Random assignment is essential for strengthening the internal validity of experimental research. Internal validity makes conclusions about a causal relationship reliable and trustworthy.

In experimental research, researchers isolate an independent variable and manipulate it to assess its impact while controlling other variables. To achieve this, different groups of participants receive different levels of the independent variable. This experimental design is called an independent measures or between-groups design.

Example: Different levels of independent variables

- In a medical study, you can research the impact of nutrient supplements on the immune system (nutrient supplements = independent variable, immune system = dependent variable)

Three levels of the independent variable are applicable here:

- Control group (given no supplements)

- The first experimental group (low dosage)

- The second experimental group (high dosage)

This assignment technique ensures that there is no systematic bias between the treatment groups at the beginning of the experiment. If you do not use this technique, you won’t be able to exclude alternative explanations for your findings.

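For example, suppose you recruit participants for the research experiment above by handing out flyers at public spaces like gyms, cafés, and community centers, and then assign them to groups by recruitment location instead of by chance:

- Participants from cafés go into the control group

- Participants from community centers go into the low-dosage group

- Participants from gyms go into the high-dosage group

Because people recruited at a gym may differ systematically from people recruited at a café, any difference in outcomes could reflect those pre-existing differences rather than the supplements.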

Even with random assignment, extraneous variables may still create some differences between the groups. However, these chance differences are usually small and should not hinder your research. Therefore, you should use random assignment whenever it is ethically possible and makes sense for your research subject.

Random assignment vs. random sampling

Simple random sampling is a method of choosing the participants for a study. Random assignment, on the other hand, involves sorting the selected participants into groups. Another difference is that random sampling is used in several types of studies, while random assignment is only applied in between-subjects experimental designs.

Your study researches the impact of technology on productivity in a specific company.

In such a case, you have contact with the entire staff. So, you can assign each employee a number and use a random number generator to pick a sample.

For instance, from 500 employees, you can pick 200. So, the full sample is 200.

Random sampling enhances external validity, as it helps ensure that the study sample is unbiased and that the entire population is represented. This way, you can generalize the results of your study to that population.

After determining the full sample, you can break it down into two groups using random assignment. In this case, the groups are:

- The control group (does not get access to technology)

- The experimental group (gets access to technology)

Using random assignment assures you that any differences in the productivity results for each group are not biased and will help the company make a decision.
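The two steps in this example can be sketched in a few lines of Python: first random sampling (picking 200 of the 500 employees), then random assignment (splitting those 200 into two groups). The employee numbers and the seed are hypothetical, and the equal 100/100 split is an assumption made for illustration.

```python
import random

rng = random.Random(2024)          # hypothetical seed, for reproducibility

# Every employee gets a number from 1 to 500
employees = list(range(1, 501))

# Step 1: random sampling, which decides WHO takes part in the study
sample = rng.sample(employees, 200)

# Step 2: random assignment, which decides WHICH GROUP each sampled person joins
shuffled = sample[:]
rng.shuffle(shuffled)
control = shuffled[:100]           # does not get access to the technology
experimental = shuffled[100:]      # gets access to the technology

print(len(sample), len(control), len(experimental))
```

Keeping the two steps separate in code mirrors the distinction in the text: sampling affects who the results can be generalized to, while assignment affects whether group differences can be attributed to the treatment.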

How to use random assignment

First, give each participant a unique number as an identifier. Then, use a tool to assign the participants to the groups. Some tools you can use are:

- A computer program that generates random numbers from the list of participants

- Drawing lots: place the numbers in a container and draw them randomly for each group

- A coin toss: if you have only two groups, toss a coin for each participant to determine whether they join the control or experimental group

- A die roll: if you have three groups, roll a die for each participant to determine which group they join

Random assignment is an effective technique for placing participants in specific groups because each person has a fair chance of being put in any group.
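The die-roll approach for three groups can be simulated as a rough sketch. The mapping of die faces to groups (1 and 2 for control, 3 and 4 for low dosage, 5 and 6 for high dosage) and the function name `roll_die_assignment` are assumptions made for this example.

```python
import random

# Assumed mapping: two faces of a six-sided die per group
GROUP_BY_FACE = {
    1: "control", 2: "control",
    3: "low dosage", 4: "low dosage",
    5: "high dosage", 6: "high dosage",
}


def roll_die_assignment(participant_ids, seed=None):
    """Roll a simulated die once per participant and look up their group."""
    rng = random.Random(seed)
    return {pid: GROUP_BY_FACE[rng.randint(1, 6)] for pid in participant_ids}


# Hypothetical participant numbers 1..30
assignment = roll_die_assignment(range(1, 31), seed=3)
for pid, group in assignment.items():
    print(pid, group)
```

Because each participant gets an independent roll, the groups can end up with unequal sizes. If equal sizes matter, shuffling the whole list and splitting it is a common alternative.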

Random assignment in block experimental designs

In more complex experimental designs, you must group your participants into blocks before using the random assignment technique.

You can create participant blocks depending on demographic variables, working hours, or scores. However, blocking means that you will require a bigger sample to attain high statistical power.

After grouping the participants into blocks, you can use random assignment inside each block to allocate the members to a specific treatment condition. Doing this will help you examine whether the blocking characteristic affects the result of the treatment.

You can also use blocking in matched designs: participants within each block are first matched on their characteristics, and each participant is then randomly allotted to one of the treatments in the research before the results are examined.
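Random assignment within blocks can be sketched as follows. The participants, the blocking variable (`shift`), the condition names, and the function `block_randomize` are all hypothetical and only illustrate the idea of shuffling inside each block.

```python
import random
from collections import defaultdict


def block_randomize(participants, block_key, conditions, seed=None):
    """Group participants into blocks, then randomize within each block."""
    rng = random.Random(seed)
    blocks = defaultdict(list)
    for person in participants:
        blocks[block_key(person)].append(person)

    assignment = {}
    for block_name in sorted(blocks):      # fixed order keeps runs reproducible
        members = blocks[block_name]
        rng.shuffle(members)               # chance decides the order in a block
        for index, person in enumerate(members):
            # Deal conditions in turn so each block is split evenly
            assignment[person["id"]] = conditions[index % len(conditions)]
    return assignment


# Hypothetical participants blocked by working hours (day vs. night shift)
participants = [
    {"id": n, "shift": "day" if n % 2 else "night"} for n in range(1, 13)
]
assignment = block_randomize(
    participants, lambda p: p["shift"], ["control", "treatment"], seed=1
)
print(assignment)
```

With this approach every block contributes equally to each condition, so the blocking variable cannot be unevenly distributed across treatments by chance.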

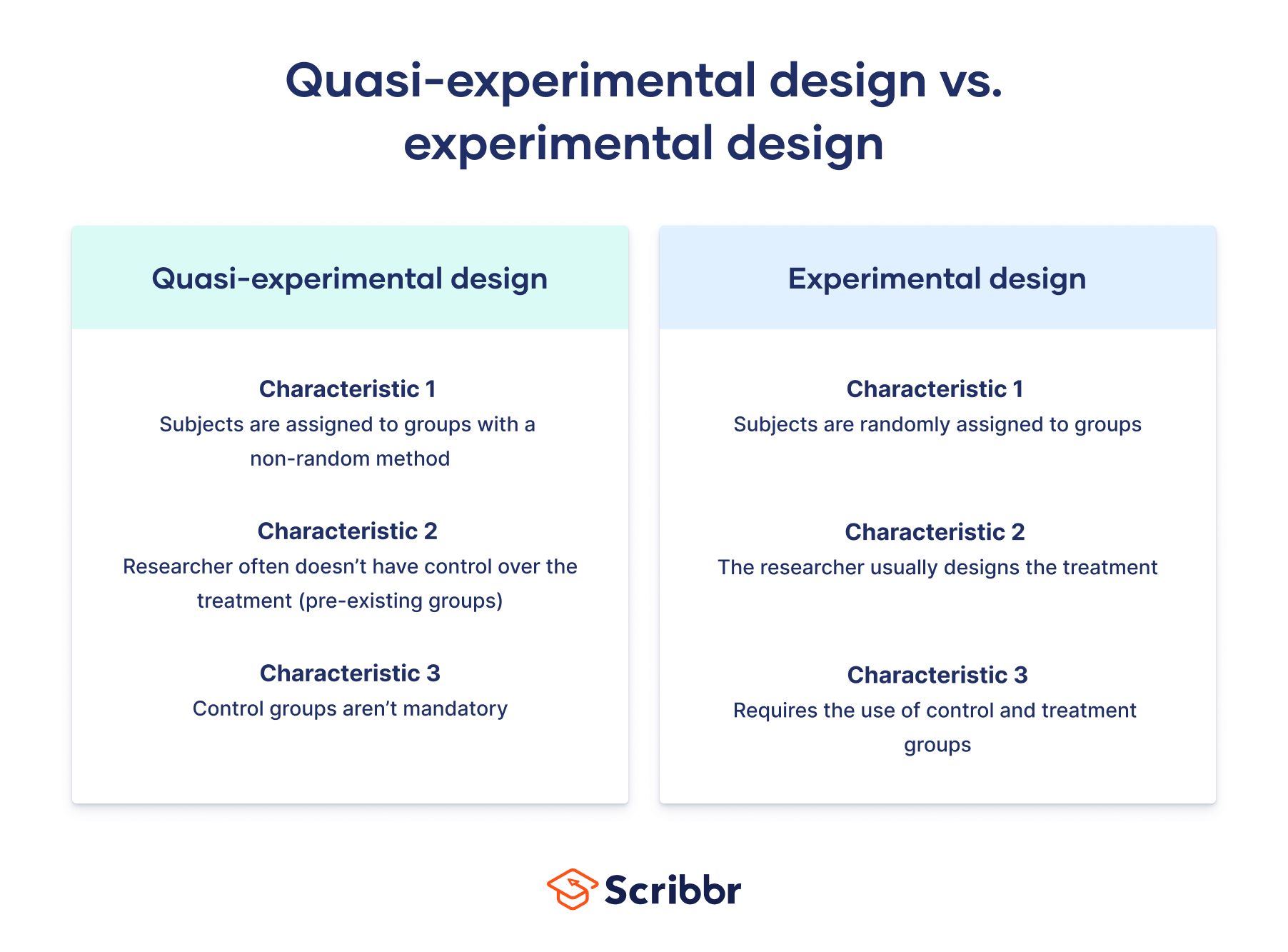

When random assignment is not used

As powerful a tool as it is, random assignment does not apply in all situations, such as the following:

Comparing different groups

When the purpose of your study is to assess the differences between existing groups of participants, random assignment may not work.

If you want to compare teens and the elderly with and without specific health conditions, you must ensure that the participants have specific characteristics. Therefore, you cannot assign them to groups randomly.

In such a study, the characteristic of interest (the health condition) serves as the independent variable, and the participants are also grouped based on their ages. All participants are assessed in the same way to confirm whether they have the condition, and their outcomes are compared across the groups.

No ethical justifiability

Another situation where you cannot use random assignment is when it is not ethically permitted.

For example, your study may involve unhealthy or dangerous behaviors, such as drug use. Instead of randomly assigning participants to groups, you can conduct quasi-experimental research.

When using a quasi-experimental design, you examine the outcomes of pre-existing groups you have no control over, such as existing drug users. While you cannot randomly assign them to groups, you can use variables like their age, years of drug use, or socioeconomic status to group the participants.

What is the definition of random assignment?

It is an experimental research technique that involves randomly placing participants from your sample into different groups. It ensures that every sample member has the same chance of being placed in any group (control or experimental).

When is random assignment applicable?

You can use this placement technique in experiments featuring an independent measures design. It helps ensure that all your sample groups are comparable.

What is the importance of random assignment?

It can help you enhance your study's validity. This technique also helps ensure that every participant has an equal opportunity of being assigned to a control or experimental group.

When should you NOT use random assignment?

You should not use this technique if your study focuses on comparing pre-existing groups or if random assignment would be unethical.

10 Things You Need to Know About Randomization

This guide will help you design and execute different types of randomization in your experiments. We focus on the big ideas and provide examples and tools that you can use in R. For why to do randomization, see this methods guide.

1 Some ways are better than others

There are many ways to randomize. The simplest is to flip a coin each time you want to determine whether a given subject gets treatment or not. This ensures that each subject has a .5 probability of receiving the treatment and a .5 probability of not receiving it. Done this way, whether one subject receives the treatment in no way affects whether the next subject receives the treatment, every subject has an equal chance of getting the treatment, and the treatment will be independent from all confounding factors — at least in expectation.
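As a minimal sketch of the coin-flip approach in R, each unit can be assigned independently with probability .5. The number of units `N`, the seed value, and the name `coin_flip` are assumptions chosen for illustration.

```r
# Simple (coin-flip) randomization: each unit is assigned independently.
set.seed(20)                                   # illustrative seed so the draw can be replicated
N <- 100                                       # hypothetical number of units
coin_flip <- rbinom(N, size = 1, prob = 0.5)   # 1 = treatment, 0 = control
table(coin_flip)                               # group sizes are themselves random
```

Because every draw is independent, the number of treated units changes from one seed to the next.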

This is not a bad approach but it has shortcomings. First, using this method, you cannot know in advance how many units will be in treatment and how many in control. If you want to know this, you need some way to do selections so that the different draws are not statistically independent from each other (like drawing names from a hat). Second, you may want to assert control over the exact share of units assigned to treatment and control. That’s hard to do with a coin. Third, you might want to be able to replicate your randomization to show that there was no funny business. That’s hard to do with coins and hats. Finally, as we show below, there are all sorts of ways to do randomization to improve power and ensure balance in various ways that are very hard to achieve using coins and hats.

Fortunately though, flexible replicable randomization is very easy to do with freely available software. The following simple R code can, for example, be used to generate a random assignment, specifying the number of units to be treated. Here, N (100) is the number of units you have and m (34) is the number you want to treat. The “seed” makes it possible to replicate the same draw each time you run the code (or you can change the seed for a different draw). 1
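A minimal sketch of such code, using base R's `sample()`. The seed value and the names `treated_ids` and `assignment` are illustrative assumptions; `N` and `m` follow the description above.

```r
# Complete randomization: treat exactly m of N units.
set.seed(343)                                    # illustrative seed; change it for a different draw
N <- 100                                         # number of units
m <- 34                                          # number of units to treat
treated_ids <- sample(1:N, m)                    # draw m unit indices without replacement
assignment <- as.integer(1:N %in% treated_ids)   # 1 = treatment, 0 = control
sum(assignment)                                  # always equals m
```

Unlike independent coin flips, this fixes the size of the treatment group in advance, and rerunning with the same seed reproduces the same assignment.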

2 Block randomization: You can ensure that treatment and control groups are balanced

It is possible, when randomizing, to specify the balance of particular factors you care about between treatment and control groups, even though it is not possible to specify which particular units are selected for either group.

For example, you can specify that treatment and control groups contain equal ratios of men to women. In doing so, you avoid any randomization that might produce a distinctly male treatment group and a distinctly female control group, or vice-versa.

Why is this desirable? Not because our estimate of the average treatment effect would otherwise be biased, but because it could be really noisy. Suppose that a random assignment happened to generate a very male treatment group and a very female control group. We would observe a correlation between gender and treatment status. If we were to estimate a treatment effect, that treatment effect would still be unbiased because gender did not cause treatment status. However, it would be more difficult to reject the null hypothesis that it was not our treatment but gender that was producing the effect. In short, the imbalance produces a noisy estimate, which makes it more difficult for us to be confident in our estimates.

Block (sometimes called stratified) randomization helps us to rig our experiment so that our treatment and control groups are balanced along important dimensions but are still randomly assigned. Essentially, this type of randomization design constructs multiple mini-experiments: for example, it might take women and randomly assign half to treatment and half to control, and then it would assign half of men to treatment and half to control. This guarantees a gender balance when treatment and control groups are pooled.

Another advantage of block randomization is that it ensures that we will be able to estimate treatment effects for subgroups of interest. For example, imagine that we are interested in estimating the effect of the treatment among women. If we do not block on gender, we may, by chance, end up with a random assignment that puts only a few women into the treatment group. Our estimate of the treatment effect among women would then be very noisy. However, if we assign treatment separately among women and among men, we can ensure that we will have enough women in both the treatment and control groups to obtain a precise estimate for this subgroup.

The blockTools package is a useful package for conducting block randomization. Let’s start by generating a fake data set for 60 subjects, 36 of whom are male and 24 of whom are female.

Suppose we would like to block on gender. Based on our data, blockTools will generate the smallest possible blocks, each a grouping of two units with the same gender, one of which will be assigned to treatment, and one to control.

You can check the mean of the variable on which you blocked for treatment and control to see that treatment and control groups are in fact perfectly balanced on gender.
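The steps above can be sketched as follows. This sketch deliberately uses base R rather than blockTools, and it treats each gender as one block (assigning half of each to treatment) instead of forming pairs, so it illustrates the idea rather than that package's procedure. The data frame `dat`, its column names, and the seed are assumptions.

```r
# Block randomization on gender, sketched in base R (not blockTools).
set.seed(1234)                                   # illustrative seed
# Fake data: 60 subjects, 36 male (male = 1) and 24 female (male = 0).
dat <- data.frame(id = 1:60, male = rep(c(1, 0), times = c(36, 24)))
dat$treat <- NA_integer_

# Within each gender block, assign exactly half of the units to treatment.
for (g in unique(dat$male)) {
  idx <- which(dat$male == g)                    # row indices in this block
  treated <- sample(idx, length(idx) / 2)        # half of the block, at random
  dat$treat[idx] <- as.integer(idx %in% treated)
}

# Share of men in control (0) and treatment (1): identical by construction.
tapply(dat$male, dat$treat, mean)
```

With 18 of 36 men and 12 of 24 women treated, both groups contain 30 subjects of whom 18 are men, so the check returns the same share of men in each group.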

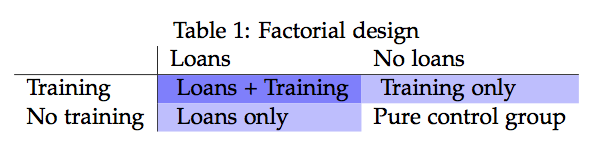

3 Factorial designs: You can randomize multiple treatments at the same time without using up power

Suppose there are multiple components of a treatment that you want to test. For example, you may want to evaluate the impact of a microfinance program. Two specific treatments might be lending money to women and providing them with training. A factorial design looks at all possible combinations of these treatments: (1) Loans, (2) Training, (3) Loans + Training, and (4) Control. Subjects are then randomly assigned to one of these four conditions.

Factorial designs are especially useful when evaluating interventions that include a package of treatments. As in the example above, many development interventions come with several arms, and it is sometimes difficult to tell which arms are producing the observed effect. A factorial design separates out these different treatments and also allows us to see the interaction between them.

The following code shows you how to randomize for a factorial design.
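A minimal sketch in base R, assuming 100 units split evenly across the four conditions. The sample size, seed, and variable names are illustrative assumptions.

```r
# Factorial (2 x 2) randomization: loans and training crossed.
set.seed(2024)                                   # illustrative seed
N <- 100                                         # hypothetical number of units
conditions <- c("Control", "Loans", "Training", "Loans + Training")

# Build a vector with equal numbers of each condition, then shuffle it.
arm <- sample(rep(conditions, length.out = N))

# Indicator for each component, so main effects and the interaction can be estimated.
loans    <- as.integer(arm %in% c("Loans", "Loans + Training"))
training <- as.integer(arm %in% c("Training", "Loans + Training"))
table(loans, training)                           # 25 units in each of the four cells
```

Half of all units receive loans and half receive training, which is why each component can be evaluated on the full sample rather than on a quarter of it.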

4 You can randomize whole clusters together (but the bigger your clusters, the weaker your power tends to be)

Sometimes it is impossible to randomize at the level of the individual. For example, a radio appeal to get individuals to a polling station must inherently be broadcast to a whole media market; it is impossible to broadcast just to some individuals but not others. Whether it is by necessity or by choice, sometimes you will randomize clusters instead of individuals.
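As a rough sketch of cluster assignment in base R, the random draw is made over clusters and every individual inherits the status of their cluster. The numbers of clusters and individuals, the seed, and all names are assumptions for illustration.

```r
# Cluster randomization: assign whole clusters, not individuals.
set.seed(99)                                     # illustrative seed
n_clusters   <- 10                               # hypothetical number of clusters
cluster_size <- 20                               # hypothetical individuals per cluster
dat <- data.frame(
  id      = 1:(n_clusters * cluster_size),
  cluster = rep(1:n_clusters, each = cluster_size)
)

# Draw half of the clusters for treatment.
treated_clusters <- sample(1:n_clusters, n_clusters / 2)

# Every individual gets the treatment status of their cluster.
dat$treat <- as.integer(dat$cluster %in% treated_clusters)
table(dat$cluster, dat$treat)                    # each cluster falls entirely in one column
```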

The disadvantage of cluster randomization is that it reduces your power, since the number of randomly assigned units now reflects the number of clusters and not simply your total number of subjects. If your sample consisted of two clusters of 1,000 individuals each, the functional number of units might be closer to 2, not 2,000. For this reason, it is preferable to make clusters as small as possible.

The degree to which clustering reduces your power depends on the extent to which units in the same cluster resemble each other. It is desirable to have heterogeneity within your clusters so that they are as representative as possible of your broader population. If the individuals within clusters are very similar to each other, they may have similar potential outcomes, which means that groups of individuals with similar potential outcomes will all be assigned to treatment or control together. If a cluster has particularly high or low potential outcomes, this assignment procedure will increase the overall correlation between potential outcomes and treatment assignment. As a result, your estimates become more variable. In brief, if your clusters are more representative of the broader population, your estimates of the average treatment effect will be more precise. See our guide on cluster random assignment for more details.
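One common way to put a number on this loss is the standard design-effect approximation, which is not part of this guide and is used here as an assumption: with equal-sized clusters, the effective sample size is roughly the total sample size divided by 1 + (cluster size - 1) x ICC, where the ICC (intraclass correlation) measures how similar units in the same cluster are. The function name `effective_n` is hypothetical.

```r
# Approximate effective sample size under clustering (equal cluster sizes assumed).
effective_n <- function(n_clusters, cluster_size, icc) {
  n_total <- n_clusters * cluster_size
  design_effect <- 1 + (cluster_size - 1) * icc  # variance inflation from clustering
  n_total / design_effect
}

effective_n(2, 1000, icc = 1)      # 2: units within a cluster are identical
effective_n(2, 1000, icc = 0)      # 2000: clustering costs nothing
effective_n(2, 1000, icc = 0.05)   # about 39
```

The first call reproduces the example of two clusters of 1,000 individuals behaving like 2 units, and the last shows that even modest within-cluster similarity can sharply reduce the effective sample size.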