Target Output

What is target output?

A target output is the true output, or set of labels, for a given dataset. The function that maps each input to its correct label is called the target function. The underlying goal of many machine learning methods is therefore to produce a function that matches the target function as closely as possible without giving up generalizability. Comparing a model's predictions against the target output determines the model's accuracy.

Example of Target Output

Consider a neural network that classifies images. The classes could be dog vs cat vs bird, +1 vs -1, and so on. Let's say our network classifies dogs, cats, and birds and that we have (in order) the following input:

We need to classify each of these images ourselves to know whether our neural network is classifying them correctly. In order, we classify each of these as follows:

[cat, cat, dog, bird, dog]

This array is called the “target array” and contains the target outputs. If our network were to predict [cat, dog, cat, bird, dog], this would be called the “predicted array”, which contains the predicted outputs.

When the predicted and target arrays are compared, we see that the model correctly classified 3 of the 5 inputs. This model thus has an accuracy of 60% on this data set.
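The comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not part of any particular library: the function name `accuracy` is an assumption, and the two lists are the target and predicted arrays from the example.

```python
# Target and predicted labels from the example above.
target = ["cat", "cat", "dog", "bird", "dog"]
predicted = ["cat", "dog", "cat", "bird", "dog"]


def accuracy(target, predicted):
    """Return the fraction of positions where the prediction equals the target."""
    if len(target) != len(predicted):
        raise ValueError("target and predicted must have the same length")
    if not target:
        raise ValueError("cannot compute accuracy of empty arrays")
    # True counts as 1, so the sum is the number of correct predictions.
    correct = sum(t == p for t, p in zip(target, predicted))
    return correct / len(target)


print(f"accuracy = {accuracy(target, predicted):.0%}")  # accuracy = 60%
```

The first, fourth and fifth predictions match their targets, so three of the five comparisons succeed.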

Outputs from Research

A research output is the product of research. It can take many different forms or types. See here for a full glossary of output types.

The tables below set out the generic criteria for assessing outputs and the definitions of the starred levels, as used during the REF2021 exercise.

Definitions

'World-leading', 'internationally' and 'nationally' in this context refer to quality standards. They do not refer to the nature or geographical scope of particular subjects, nor to the locus of research, nor its place of dissemination.

Definitions of Originality, Rigour and Significance

Supplementary output criteria – understanding the thresholds:

The 'Panel criteria' explains in more detail how the sub-panels apply the assessment criteria and interpret the thresholds:

- Main Panel A: Medicine, health and life sciences

- Main Panel B: Physical sciences, engineering and mathematics

- Main Panel C: Social sciences

- Main Panel D: Arts and humanities

Definition of Research for the REF

1. For the purposes of the REF, research is defined as a process of investigation leading to new insights, effectively shared.

2. It includes work of direct relevance to the needs of commerce, industry, culture, society, and to the public and voluntary sectors; scholarship; the invention and generation of ideas, images, performances, artefacts including design, where these lead to new or substantially improved insights; and the use of existing knowledge in experimental development to produce new or substantially improved materials, devices, products and processes, including design and construction. It excludes routine testing and routine analysis of materials, components and processes such as for the maintenance of national standards, as distinct from the development of new analytical techniques. It also excludes the development of teaching materials that do not embody original research.

3. It includes research that is published, disseminated or made publicly available in the form of assessable research outputs, and confidential reports.

Output FAQs

Q. What is a research output?

A research output is the product of research. An underpinning principle of the REF is that all forms of research output will be assessed on a fair and equal basis. Sub-panels will not regard any particular form of output as of greater or lesser quality than another per se. You can access the full list of eligible output types here.

Q. When is the next Research Excellence Framework?

The next exercise will be REF 2029, with results published in 2029. It is therefore likely that we will make our submission towards the end of 2028, but the actual timetable hasn't been confirmed yet.

A sector-wide consultation is currently under way to help refine the detail of the next exercise. You can learn more about the emerging REF 2029 here.

Q. Why am I being contacted now, if we don't know the final details for a future assessment?

Although we don't know all of the detail, we know that some of the core components of the previous exercise will be retained. This will include the assessment of research outputs.

To make the internal process more manageable and avoid a rush at the end of the REF cycle, we will be conducting an output review process on an annual basis, in some shape or form, to spread the workload.

Furthermore, regardless of any external assessment frameworks, it is also important for us to understand the quality of research being produced at Edinburgh Napier University and to introduce support mechanisms that will enhance the quality of the research conducted. This is of benefit to the University and to you and your career development.

Q. I haven't produced any REF-eligible outputs as yet, what should I do?

We recognise that not everyone contacted this year will have produced a REF-eligible output so early on in a new REF cycle. If this is the case, you can respond with a nil return and you may be contacted again in a future annual review.

If you need additional support to help you deliver on your research objectives, please contact your line manager and/or Head of Research to discuss.

Q. I was contacted last year to identify an output, but I have not received a notification for the 2024 annual cycle, why not?

Due to administrative capacity in RIE and the lack of detail on the REF 2029 rules relating to staff and outputs, we are restricting this year's scoring activity to a manageable volume based on a set of pre-defined, targeted criteria.

An output review process will be repeated annually. If an output is not reviewed in the current year, we anticipate that it will be included in a future review process if it remains in your top selection.

Once we know more about the shape of a future REF, we will adapt the annual process to meet the new eligibility criteria and aim to increase the volume of outputs being reviewed.

Q. I am unfamiliar with the REF criteria, and I do not feel well-enough equipped to provide a score or qualitative statement for my output/s, what should I do?

The output self-scoring field is optional. We appreciate that some staff may not be familiar with the criteria and are therefore unable to provide a reliable score.

The REF team has been working with Schools to develop a programme of REF awareness and output quality enhancement which aims to promote understanding of REF criteria and enable staff to score their work in future. We aim to deliver quality enhancement training in all Schools by the end of the 2023-24 academic cycle.

Please look out for further communications on this.

For those staff who do wish to provide a score and commentary, please refer specifically to the REF main panel output criteria:

- Main Panel A: Medicine, health and life sciences

- Main Panel B: Physical sciences, engineering and mathematics

- Main Panel C: Social sciences

- Main Panel D: Arts and humanities

Q. Can I refer to Journal impact factors or other metrics as a basis of Output quality?

An underpinning principle of REF is that journal impact factors, any hierarchy of journals, and journal-based metrics (including ABS ratings, journal rankings and total citations) should not be used in the assessment of outputs. No output is privileged or disadvantaged on the basis of its publisher, where it is published or the medium of its publication.

An output should be assessed on its content and contribution to advancing knowledge in its own right and in the context of the REF quality threshold criteria, irrespective of the ranking of the journal or publication outlet in which it appears.

You should refer only to the REF output quality criteria (please see definitions above) if you are adding the optional self-score and commentary field and you should not refer to any journal ranking sources.

Q. What is Open Access Policy and how does it affect my outputs?

Under current rules, to be eligible for future research assessment exercises, higher education institutions (HEIs) are required to implement processes and procedures to comply with the REF Open Access policy.

It is a requirement for all journal articles and conference proceedings with an International Standard Serial Number (ISSN), accepted for publication after 1 April 2016, to be made open access. This can be achieved by either publishing the output in an open access journal outlet or by depositing an author accepted manuscript version in the University's repository within three months of the acceptance date.
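As a rough illustration only, the rule as paraphrased above could be checked as follows. Everything named here is hypothetical (`add_months`, `meets_open_access_rule` and their parameters), and the sketch assumes that "after 1 April 2016" is strict and that "within three months" means three calendar months from the acceptance date, inclusive. The University's policy remains the authoritative source.

```python
import calendar
from datetime import date

# Acceptance cut-off quoted in the text above.
POLICY_START = date(2016, 4, 1)


def add_months(d, months):
    """Shift a date by whole calendar months, clamping to the month's last day."""
    index = d.month - 1 + months
    year, month = d.year + index // 12, index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


def meets_open_access_rule(has_issn, accepted, deposited=None, open_access_outlet=False):
    """Apply the rule as paraphrased above (hypothetical helper).

    Returns None when the rule does not apply, otherwise True or False.
    """
    # Assumption: only outputs with an ISSN accepted strictly after the cut-off are in scope.
    if not has_issn or accepted <= POLICY_START:
        return None
    # Route 1: published in an open access outlet.
    if open_access_outlet:
        return True
    # Route 2: accepted manuscript deposited within three months of acceptance.
    return deposited is not None and deposited <= add_months(accepted, 3)


print(meets_open_access_rule(True, date(2024, 1, 15), deposited=date(2024, 4, 15)))
```

With these assumptions, an article accepted on 15 January 2024 would need to be deposited by 15 April 2024 unless it appears in an open access outlet.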

Although the current Open Access policy applies only to journal and conference proceedings with an ISSN, Edinburgh Napier University expects staff to deposit all forms of research output in the University research management system, subject to any publishers' restrictions.

You can read the University's Open Access Policy here.

Q. My Output is likely to form part of a portfolio of work (multi-component output), how do I collate and present this type of output for assessment?

The REF team will be working with relevant School research leadership teams to develop platforms to present multicomponent / portfolio submissions. In the meantime, please use the commentary section to describe how your output could form part of a multicomponent submission and provide any useful contextual information about the research question your work is addressing.

Q. How will the information I provide about my outputs be used and for what purpose?

In the 2024 output cycle, a minimum of one output identified by each identified author will be reviewed by a panel of internal and external subject experts.

The information provided will be used to enable us to report on research quality measures as identified in the University R&I strategy.

Output quality data will be recorded centrally on the University's REF module in Worktribe. Access to this data is restricted to a core team of REF staff based with the Research, Innovation and Enterprise Office and key senior leaders in the School.

The data will not be used for any purpose other than monitoring REF-related preparations.

Q. Who else will be involved in reviewing my output/s?

Outputs will be reviewed by an expert panel of internal and external independent reviewers.

Q. Will I receive feedback on my Output/s?

The REF team encourages open and transparent communication relating to output review and feedback. We will be working with senior research leaders within the School to promote this.

Q. I have identified more than one Output, will all of my identified outputs be reviewed this year?

In the 2024 cycle, we are committed to reviewing at least one output from each contacted author via an internal, external and moderation review process.

Once we know more about the shape of a future REF, we will adapt the annual process to meet the new criteria / eligibility.


Edinburgh Napier University is a registered Scottish charity. Registration number SC018373


Original Research Article:

An article published in an academic journal can go by several names: original research, an article, a scholarly article, or a peer reviewed article. This format is an important output for many fields and disciplines. Original research articles are written by one or a number of authors who typically advance a new argument or idea to their field.

Short Reports or Letters:

Short reports or letters, sometimes also referred to as brief communications, are summaries of original research that are significantly less lengthy than academic articles. This format is often intended to quickly keep researchers and scholars abreast of current practices in a field. Short reports may also be a preview for more extensive research that is published later.

Review Articles:

A review article summarizes the state of research within a field or about a certain topic. This type of article often cites large numbers of scholars to establish a broad overview, and can inform readers about issues such as active debates in a field, noteworthy contributors or scholars, and gaps in understanding, or it may predict the direction a field will take in the future.

Case Studies:

Case studies are in-depth investigations of a particular person, place, group, or situation during a specified time period. The purpose of case studies is to explore and explain the underlying concepts, causal links, and impacts a case subject has in its real-life context. Case studies are common in social sciences and sciences.

Conference Presentations or Proceedings:

Conferences are organized events, usually centered on one field or topic, where researchers gather to present and discuss their work. Typically, presenters submit abstracts, or short summaries of their work, before a conference, and a group of organizers select a number of researchers who will present. Conference presentations are frequently transcribed and published in written form after they are given.

Chapter:

Books are often composed of a collection of chapters, each written by a different author. Usually, these kinds of books are organized by theme, with each author's chapter presenting a unique argument or perspective. Books with uniquely authored chapters are often curated and organized by one or more editors, who may contribute a chapter or foreword themselves.

Datasets:

Often, when researchers perform their work, they will produce or work with large amounts of data, which they compile into datasets. Datasets can contain information about a wide variety of topics, from genetic code to demographic information. These datasets can then be published either independently, or as an accompaniment to another scholarly output, such as an article. Many scientific grants and journals now require researchers to publish datasets.

Artwork:

For some scholars, artwork is a primary research output. Scholars’ artwork can come in diverse forms and media, such as paintings, sculptures, musical performances, choreography, or literary works like poems.

Reports:

Reports can come in many forms and may serve many functions. They can be authored by one or a number of people, and are frequently commissioned by government or private agencies. Some examples of reports are market reports, which analyze and predict a sector of an economy, technical reports, which can explain to researchers or clients how to complete a complex task, or white papers, which can inform or persuade an audience about a wide range of complex issues.

Digital Scholarship:

Digital scholarship is a research output that significantly incorporates or relies on digital methodologies, authoring, and presentation. Digital scholarship often complements and adds to more traditional research outputs, and may be presented in a multimedia format. Some examples include mapping projects; multimodal projects that may be composed of text, visual, and audio elements; or digital, interactive archives.

Books:

Researchers from every field and discipline produce books as a research output. Because of this, books can vary widely in content, length, form, and style, but often provide a broad overview of a topic compared to research outputs that are more limited in length, such as articles or conference proceedings. Books may be written by one or many authors, and researchers may contribute to a book in a number of ways: they could author an entire book, write a foreword, or collect and organize existing works in an anthology, among others.

Interview:

Scholars may be called upon by media outlets to share their knowledge about the topic they study. Interviews can provide an opportunity for researchers to teach a more general audience about the work that they perform.

Article in a Newspaper or Magazine:

While a significant amount of researchers’ work is intended for a scholarly audience, occasionally researchers will publish in popular newspapers or magazines. Articles in these popular genres can be intended to inform a general audience of an issue in which the researcher is an expert, or they may be intended to persuade an audience about an issue.

Blog:

In addition to other scholarly outputs, many researchers also compose blogs about the work they do. Unlike books or articles, blogs are often shorter, more general, and more conversational, which makes them accessible to a wider audience. Blogs, again unlike other formats, can be published almost in real time, which can allow scholars to share current developments of their work.

- University of Colorado Boulder Libraries

- Last Updated: Oct 29, 2020 1:53 PM

- URL: https://libguides.colorado.edu/products

Research Output

An output is an outcome of research and can take many forms. Research Outputs must meet the definition of Research.

The Excellence in Research for Australia assessment defines the following eligible research output types:

- books—authored research

- chapters in research books—authored research

- journal articles—refereed, scholarly journal

- conference publications—full paper refereed

- original creative works

- live performance of creative works

- recorded/rendered creative works

- curated or produced substantial public exhibitions and events

- research reports for an external body

Source: Australian Research Council Excellence in Research for Australia 2018 Submission Guidelines.

Research Impact: Outputs and Activities

What are Scholarly Outputs and Activities?

Scholarly/research outputs and activities represent the various outputs and activities created or executed by scholars and investigators in the course of their academic and/or research efforts.

One common output is in the form of scholarly publications which are defined by Washington University as:

". . . articles, abstracts, presentations at professional meetings and grant applications, [that] provide the main vehicle to disseminate findings, thoughts, and analysis to the scientific, academic, and lay communities. For academic activities to contribute to the advancement of knowledge, they must be published in sufficient detail and accuracy to enable others to understand and elaborate the results. For the authors of such work, successful publication improves opportunities for academic funding and promotion while enhancing scientific and scholarly achievement and repute."

Examples of activities include editorial board memberships, leadership in professional societies, meeting organization, consultative efforts, contributions to successful grant applications, invited talks and presentations, administrative roles, and contribution of service to a clinical laboratory program, to name a few. For more examples of activities, see the Washington University School of Medicine Appointments & Promotions Guidelines and Requirements or the "Examples of Outputs and Activities" box below. Also of interest is Table 1 in the article "Research impact: We need negative metrics too".

Tracking your research outputs and activities is key to being able to document the impact of your research. One starting point for telling a story about your research impact is your publications. Advances in digital technology afford numerous avenues for scholars not only to disseminate research findings but also to document the diffusion of their research. The capacity to measure and report tangible outcomes can be used for a variety of purposes and tailored for various audiences, ranging from laypersons and physicians to investigators, organizations, and funding agencies. Publication data can be used to craft a compelling narrative about your impact. See Quantifying the Impact of My Publications for examples of how to tell a story using publication data.

Another tip is to utilize various means of disseminating your research. See Strategies for Enhancing Research Impact for more information.

Examples of Outputs and Activities

- Last Updated: Mar 12, 2024 1:26 PM

- URL: https://beckerguides.wustl.edu/impact

Library for Staff: Types of Research outputs

- Last Updated: Mar 12, 2024 7:29 AM

- URL: https://libguides.wintec.ac.nz/staff

- J R Soc Med

- v.107(1 Suppl); 2014 May

Research output of health research institutions and its use in 42 sub-Saharan African countries: results of a questionnaire-based survey

Objectives

To describe and analyse research output from surveyed national health research institutions in Africa.

Design

The survey used a structured questionnaire to solicit information from 847 health research institutions in 42 countries of the World Health Organization African Region.

Setting

Eight hundred and forty-seven health research institutions in 42 sub-Saharan African countries.

Participants

Key informants from the health research institutions.

Main outcome measures

Volume, type and medium of publications, and distribution of research outputs.

Results

Books or chapters for books accounted for the highest number of information products published (on average 16.7 per respondent institution), followed by patents registered in country (8.2), discussion or working papers (6.5) and conference proceedings (6.4). Publication in a peer-reviewed journal constituted only a minor part of research output (on average about 1 paper per institution). Radio and TV broadcasts on health research accounted for the highest number of products issued by institution staff (on average 5.5 per institution), followed by peer-reviewed journals indexed internationally (3.8) or nationally (3.1). There were, on average, 1.5 press releases, 1.5 newspaper or magazine articles, and 1.4 policy briefs per institution. Over half of respondent institutions (52%) developed briefs and summaries of articles to share with their target audiences, 43% developed briefs for possible actions and 37% provided articles and reports upon request. Only a small proportion of information products produced were available in institutional databases.

Conclusions

The research output of health research institutions in the Region is significant, but more effort is needed to strengthen research capacity, including human and financial resources.

Introduction

The Health Research System Analysis (HRSA) Initiative institutional survey 1 constitutes the first attempt to collect a large amount of comparable information across a large number of African countries on what health research institutions are doing, how they are organised and what their objectives are. The survey is an important part of a wider effort to describe and analyse national health research systems and intends to ‘provide estimates for benchmarks of national health research systems, as a means to describe, monitor and analyse national health research activities and to improve national research capacities’.

There are few studies addressing research outputs and use in African countries. Generally, these studies measure health research output for African countries using a narrow, though practical, definition of research output, i.e. the number of publications indexed in bibliographic databases.

One of these studies 2 found that health research output, as measured by publications indexed in Thomson Reuters (formerly ISI) Web of Science®, is strongly clustered in a few high-income countries. Of the top 20 producers that accounted for more than 90% of research output in 1992–2001, only three (none of which was African) were not from high-income countries.

More recently, it has been shown using the same data that the World Health Organization (WHO) African Region was the only WHO region to have decreased its relative share of global health-related publications during 1992–2001. 3 The decrease in the (initially very low) share was over 15%. However, it is important to note that the absolute number of publications from the Region increased during this period.

A shortcoming of both of the above studies is that the only variable they use to measure health-related research output is the number of publications in high-impact international journals. Other important dimensions of the process of dissemination of research products, such as publishing in regional or national journals, editing working papers, strengthening or increasing human resources, or developing new products are not considered, as no such data were available.

A third study 4 considers a different period of time (1996–2005) and uses a different bibliographic database (PubMed®) to measure African research output. This database is not as complete as Thomson Reuters Web of Science® because it only records the affiliation of the first author, but it gives roughly the same picture as Web of Science®, i.e. African research production is strongly concentrated in a few countries, with Egypt, Nigeria and South Africa accounting for 60% of the continent’s production.

Finally, another study 5 of 158 African medical journals from 33 countries documents that most:

- are owned by academic institutions

- have considerable problems in increasing their relatively small circulation

- lack funding

- have serious problems in maintaining publication frequency, which keeps them out of international databases such as the Thomson Reuters Web of Knowledge

Considering the information gap about how African health research institutions are organised, what they produce, what type of interaction they have with each other and who funds them, the institutional survey is an important tool to start documenting and analysing some of these questions. In this paper, the main results of Module 4000 (health research outputs, synthesis, dissemination and knowledge management) are presented.

Methods

The methods followed to assess national health information systems are described elsewhere 6 but are summarised briefly here.

The survey used Tool 6 from the HRSA Initiative: Methods for Collecting Benchmarks and Systems Analysis Toolkit. 1 Within the institutional survey, seven questionnaires, representing separate ‘modules’, were completed by the respondent institutions. This report draws on data from two of those questionnaires:

- Module 1000 – Identification, introduction and background information

- Module 4000 – Research output and use

The questionnaires were designed to focus on issues pertaining to the output and use of research at the institutional level.

For responses to questions where institutions were asked to rank items in the questionnaire, we used weighting schemes to arrive at composite ranks. For example, where the response required ranking an item on a 1–5 scale, a weight of five was given to the first rank, four to the second rank and so on, with the fifth rank getting the least weight of one. The average of these was used to derive a composite rank of items.
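The weighting scheme described above can be sketched in a few lines of Python. This is only an illustration: the function names, the example items and the responses are hypothetical, and it assumes that the average is taken over the institutions that ranked a given item, which the text does not state explicitly.

```python
from collections import defaultdict


def composite_scores(responses, scale=5):
    """Return the mean weight per item, where rank 1 gets weight `scale`,
    rank 2 gets `scale - 1`, and so on down to weight 1 for the last rank.

    `responses` is a list of dicts mapping item -> rank (1 = most important).
    Assumption: the mean is taken over the respondents who ranked the item.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for response in responses:
        for item, rank in response.items():
            if not 1 <= rank <= scale:
                raise ValueError(f"rank {rank} is outside the 1-{scale} scale")
            totals[item] += scale + 1 - rank  # first rank -> highest weight
            counts[item] += 1
    return {item: totals[item] / counts[item] for item in totals}


def composite_ranking(responses, scale=5):
    """Order items from highest to lowest mean weight."""
    scores = composite_scores(responses, scale)
    return sorted(scores, key=lambda item: scores[item], reverse=True)


# Hypothetical responses from three institutions (not survey data).
responses = [
    {"influencing policy": 1, "new knowledge": 2, "journal articles": 3},
    {"influencing policy": 2, "new knowledge": 1, "journal articles": 3},
    {"influencing policy": 1, "new knowledge": 3, "journal articles": 2},
]
scores = composite_scores(responses)
ranking = composite_ranking(responses)
```

Averaging weights rather than raw ranks means that a higher score indicates a more highly ranked item.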

We used IBM® SPSS® Statistics Version 19 to analyse the data.

The institution survey dataset included responses from up to 847 institutions in 42 countries in the WHO African Region (all except Algeria, Angola, Sierra Leone and South Africa). About two-thirds of the respondent institutions were aged 30 years, 70.3% belonged to the public sector, 12.5% were independent research institutions and 64.3% functioned at the national level ( Table 1 ).

Table 1. Characteristics of health research institutions in 42 sub-Saharan African countries, 2009.

The diversity of institutions taking part in this exercise was seen not only in the different types of institutions and their research level but also in their contributions and priorities. Respondents were asked to list the top three and bottom three contributions since 2000 from a list of alternatives. The option most frequently ranked as most important was ‘influencing health policies or programmes’, with 27% of respondent institutions putting this at the top of their list ( Figure 1 ). The second most frequently mentioned category was ‘producing new knowledge’, cited by 25%. The third most frequently mentioned category was ‘publishing articles in peer-reviewed scientific journals’, cited by 23%.

Figure 1. Health research institutions’ perceived most important contribution to research outputs, exchange and dissemination activities in 42 sub-Saharan African countries, 2009.

The option most frequently ranked as least important was ‘increasing profits’, cited by 38% of respondent institutions ( Figure 2 ), followed by ‘developing products’ (cited by 11%) and ‘being technological leader’ (cited by 10%). These results clearly demonstrated that neither revenue generation nor being innovative in terms of products was among the main objectives of the institutions targeted by this survey.

Figure 2. Health research institutions’ perceived least important contribution to research outputs, exchange and dissemination activities in 42 sub-Saharan African countries, 2009.

The production of information products was measured by a number of questions. Two basic indicators of research activity were measured by the set of questions:

- In the previous 12 months, how many of the following items that contain or communicate research findings addressing health topics did the institution publish or edit?

- In the previous 12 months, how many of the following research outputs addressing health topics were produced by the institute staff?

Books or chapters for books were the most numerous information products published (on average 16.7 per respondent institution), followed by patents registered in country (8.2), discussion or working papers (6.5) and conference proceedings (6.4). Non-technical information products intended for wider dissemination were also published, although not as many as more academic, technical papers. There were, on average, 5.9 TV or radio broadcast materials per institution, 2.6 press releases or media briefs and 1.5 policy briefs or report series. Publication in a journal, peer-reviewed or otherwise, constituted only a minor part of the research output, with an average of about one paper per institution ( Table 2 ).

Table 2. Average number of information products issued per respondent health research institution in the 12 months preceding the survey in 42 sub-Saharan African countries, 2009.

Radio and TV broadcasts on health research were the most numerous products issued by institution staff (on average 5.5 per respondent institution), followed by articles in peer-reviewed journals indexed internationally (3.8) or nationally (3.1). There were, on average, 1.5 press releases, 1.5 newspaper or magazine articles and 1.4 policy briefs per institution.

The frequency with which wider publication and dissemination events were organised by the different institutions was also surveyed. These questions aimed to measure the effort made by institutions to communicate research findings during the 12 months preceding completion of the questionnaire. Over half of the respondent institutions (52%) developed briefs and summaries of articles to share with their target audiences, 43% developed briefs for possible action and 37% provided articles and reports upon request ( Figure 3 ). Regarding the audience targeted for sharing research findings, the national ministry of health was targeted by 60% of institutions, followed by offices of international agencies (45%) and academic and research institutions (43%) ( Figure 4 ).

Figure 3. Percentage of health research institutions that have taken various types of action to share research findings with intended target audience in the five years preceding the survey in 42 sub-Saharan African countries, 2009.

Figure 4. Percentage of health research institutions that have frequently involved target audience or users of research in activities aimed at sharing research findings in the five years preceding the survey in 42 sub-Saharan African countries, 2009.

Another form of communicating and disseminating research findings is through the maintenance and publication of databases containing information about ongoing research projects and research findings. Questions q4102 and q4103 collected such information. These questions asked about the existence of databases, a precondition for further inquiry (e.g. completeness of databases; frequency of update; whether user-friendly or not, as databases can be accessible but impossible to use by the public).

Table 2 shows the number of databases containing either ongoing project information or research findings. These databases may or may not be accessible to the public. The table shows that only a small proportion of information products produced were available in institutional databases. The top three items available in databases were discussion and working papers (on average 0.56 per respondent institution), followed by conference proceedings (0.48), and policy briefs and report series (0.3).

Health research institutions shared their research outputs with public sector organisations, academic institutions, international agencies, civil society groups, healthcare facilities and private for-profit companies ( Table 3 ). National ministries of health were the largest recipients of research output (466 products, or an average of 0.82 products per institution), followed by academic institutions (466/0.55). Products were mostly disseminated through scientific seminars at the institutions (1395 products, an average of 3.22 per institution) and at national conferences and seminars targeting specific groups such as practitioners and community members.

Table 3. Users of research outputs from respondent health research institutions, and mean number of information products per respondent institution, in 42 sub-Saharan African countries, 2009.

These results show that while most of the respondent institutions have research as a high priority, publishing that research may not be so important for them. It may be that the data presented in other studies, which consider publications as an indication of research activities, give an incomplete picture, and this may be the case in all countries, not only in African countries. It also appears that generating profits, or pursuing activities that could provide revenues such as developing products or acquiring patents, is not among the main priorities of the institutions surveyed.

Although the survey has provided new, previously unavailable information, the results should be considered carefully as there are some limitations. The aim was to obtain a complete census of all health research institutions across the Region. However, as a complete census was not obtained, it is difficult to determine the representativeness of the results using statistical tools on the data gathered. The data described in this report are not representative, in a statistical sense, of what could be happening in some countries. The data only record research output and use at the institutions surveyed by this questionnaire.

The findings on research outputs do not provide information about the productivity of these institutions. It may be that the more active institutions also employ more or better-trained staff. This information provides a baseline, and subsequent surveys may show how the information evolves over time. Based on online searches of publications in high-impact international journals by country, it is quite possible that the decline in the share of African publications on health reported in the literature 2 has not been reversed.

Patents registered, nationally or internationally, were not frequently reported by institutions. The acquisition of patents and other intellectual property depends on linkage between research institutions and productive firms, and it is to be expected that such linkages are more frequent and stronger in more developed and larger economies.

The findings of the study regarding the sharing of research outputs are useful in obtaining an overview of wider dissemination activities. However, individual institutions should ideally keep track of the number of people who are invited to these events or the range of stakeholders in the audience. Without this information, event numbers should be interpreted with caution in terms of the impact on individuals.

The first key piece of evidence gained from this survey is that research activities are broader in scope and richer in form than is shown by bibliographic or reference databases such as PubMed® or Thomson Reuters Web of Science®. Health research institutions not only produce articles but also have a significant level of production of non-academic research output such as short policy reports, media releases and articles. According to the survey, publishing articles is not the top priority of research institutions; instead, priorities are influencing health policies or programmes or producing new knowledge.

The survey shows that a large number of health research institutions make an effort to disseminate and share their research production with their intended audiences. First, there is a sizeable effort to publish or edit academic and non-academic publications, with government institutions being the most active in this field. Second, a large number of academic and non-academic events such as scientific conferences, seminars, workshops and various types of public forums are organised and conducted, again mainly by government institutions. Finally, some institutions maintain a number of databases of ongoing research projects, most of which are open to the public. Government institutions most frequently host such databases.

The survey shows that even though health research institutions in sub-Saharan Africa are not the main worldwide producers of research, and they are not the main producers even among developing countries, their research activity is significant. It should be a policy goal to measure such activity in depth, as research in these countries may be the key to solving, or at least improving, the urgent health problems faced by individual countries in the Region. The institutional survey serves as an initial step in this direction, but further steps should be implemented, building on this extensive mapping of research institutions in the continent.

Declarations

Competing interests

None declared

WHO Regional Office for Africa

Ethical approval

Not applicable

Contributorship

DK wrote the paper and carried out the statistical analyses; CK, PEM, MP, IS and WK reviewed the paper; PSLD reviewed the initial design of the study and provided support and overall leadership.

Acknowledgements

WHO Country Office focal persons for information, research and knowledge management are acknowledged for their contribution in coordinating the surveys in countries. Their counterparts in ministries of health are also acknowledged. These surveys would not have been possible without the active participation of the heads of health research institutions and their department heads, who gave their time and effort to fill out and send back the completed modules and questionnaires. We also acknowledge the contribution of the consultant who prepared the background material for this paper.

Not commissioned; peer-reviewed by Richard Sullivan

Resource allocation and target setting: a CSW–DEA based approach

- Original Research

- Published: 30 April 2022

- Volume 318, pages 557–589 (2022)

- Mehdi Soltanifar (ORCID: orcid.org/0000-0001-7386-5337) 1,

- Farhad Hosseinzadeh Lotfi (ORCID: orcid.org/0000-0001-5022-553X) 2,

- Hamid Sharafi (ORCID: orcid.org/0000-0003-1121-6803) 2 &

- Sebastián Lozano (ORCID: orcid.org/0000-0002-0401-3584) 3

486 Accesses

11 Citations

Explore all metrics

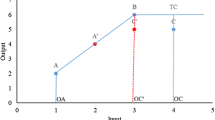

Resource allocation and target setting is part of the strategic management process of an organization. In this paper, we address the important issue of “optimally” allocating additional resources to the different operating units. Three different managerial interpretations of this question are presented, differing in their assumptions about the expected output increases. In each case, using multiplier data envelopment analysis (DEA) models and a common set of weights (CSW), a new procedure for resource allocation and target setting is proposed. The proposed approach is innovative in its use of CSW and multi-objective optimization, both of which are consistent with the centralized decision-making character of the problem. The validity and usefulness of the proposed CSW–DEA models are shown using different datasets.
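For orientation, the standard textbook multiplier (CCR) DEA model for evaluating a unit \(o\) among \(n\) units is sketched below. It is not one of the models proposed in the paper, which are not reproduced in this preview. The notation is an assumption chosen to match the appendix: \(\mathbf{x}_j\) and \(\mathbf{y}_j\) are the input and output vectors of unit \(j\), and \(\mathbf{v}\) and \(\mathbf{u}\) are the input and output weight vectors.

\[
\begin{aligned}
\max_{\mathbf{u},\,\mathbf{v}} \quad & \mathbf{u}\mathbf{y}_o \\
\text{s.t.} \quad & \mathbf{v}\mathbf{x}_o = 1, \\
& \mathbf{u}\mathbf{y}_j - \mathbf{v}\mathbf{x}_j \le 0, \quad j = 1, 2, \ldots, n, \\
& \mathbf{u} \ge \mathbf{0}, \quad \mathbf{v} \ge \mathbf{0}.
\end{aligned}
\]

In this classical form the model is solved once per unit, so each unit obtains its own most favourable weights. A common set of weights approach instead seeks a single pair \((\mathbf{u}, \mathbf{v})\) applied to all units at once, which is what makes it a natural fit for a centralized decision maker.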

Author information

Authors and affiliations

Department of Mathematics, Semnan Branch, Islamic Azad University, Semnan, Iran

Mehdi Soltanifar

Department of Mathematics, Science and Research Branch, Islamic Azad University, Tehran, Iran

Farhad Hosseinzadeh Lotfi & Hamid Sharafi

Department of Industrial Management, University of Seville, Seville, Spain

Sebastián Lozano

Corresponding author

Correspondence to Mehdi Soltanifar .

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

1.1 Proof of theorem 1

Suppose \((\mathbf{u}, \mathbf{v}, \boldsymbol{\alpha}_1, \boldsymbol{\alpha}_2, \ldots, \boldsymbol{\alpha}_n)\) is a weakly efficient solution of model ( 4 ). It is clear that this solution is also a feasible solution of model ( 5 ). Assume, by contradiction, that it is not a weakly efficient solution of model ( 5 ). Then there is a feasible solution \((\overline{\mathbf{u}}, \overline{\mathbf{v}}, \overline{\boldsymbol{\alpha}}_1, \overline{\boldsymbol{\alpha}}_2, \ldots, \overline{\boldsymbol{\alpha}}_n)\) of model ( 5 ) such that \(\mathbf{u}\mathbf{y}_j < \overline{\mathbf{u}}\mathbf{y}_j\) and \(\mathbf{v}\mathbf{x}_j + \|\boldsymbol{\alpha}_j\|_1 > \overline{\mathbf{v}}\mathbf{x}_j + \|\overline{\boldsymbol{\alpha}}_j\|_1\) for \(j = 1, 2, \ldots, n\).
According to the constraints and variables of models ( 4 ) and ( 5 ), the quantities \(\mathbf{u}\mathbf{y}_j\), \(\overline{\mathbf{u}}\mathbf{y}_j\), \(\mathbf{v}\mathbf{x}_j + \|\boldsymbol{\alpha}_j\|_1\) and \(\overline{\mathbf{v}}\mathbf{x}_j + \|\overline{\boldsymbol{\alpha}}_j\|_1\) are all positive for \(j = 1, 2, \ldots, n\), so we have \(\mathbf{u}\mathbf{y}_j < \overline{\mathbf{u}}\mathbf{y}_j\) and \(\dfrac{1}{\mathbf{v}\mathbf{x}_j + \|\boldsymbol{\alpha}_j\|_1} < \dfrac{1}{\overline{\mathbf{v}}\mathbf{x}_j + \|\overline{\boldsymbol{\alpha}}_j\|_1}\) for \(j = 1, 2, \ldots, n\). This implies that \(\dfrac{\mathbf{u}\mathbf{y}_j}{\mathbf{v}\mathbf{x}_j + \|\boldsymbol{\alpha}_j\|_1} < \dfrac{\overline{\mathbf{u}}\mathbf{y}_j}{\overline{\mathbf{v}}\mathbf{x}_j + \|\overline{\boldsymbol{\alpha}}_j\|_1}\) for \(j = 1, 2, \ldots, n\), which contradicts the weak efficiency of \((\mathbf{u}, \mathbf{v}, \boldsymbol{\alpha}_1, \boldsymbol{\alpha}_2, \ldots, \boldsymbol{\alpha}_n)\) for model ( 4 ).

2.1 Proof of theorem 2

First of all, note that the constraints of models ( 6 ) and ( 10 ) are equivalent, i.e. both models define the same feasible region. Moreover, their objective functions are also equivalent. The objective function in model ( 6 ) is the sum of the differences between the weighted sum of the outputs and the weighted sum of the inputs, and this sum is maximized. In model ( 10 ), each slack variable \(d_{j}\) is equal to the opposite difference, i.e. the weighted sum of the inputs minus the weighted sum of the outputs, and the sum of these slack variables is minimized. This shows that models ( 6 ) and ( 10 ) are equivalent.
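The sign argument in this proof can be written out in one line. As shorthand introduced here only for illustration, let \(O_j\) and \(I_j\) denote the weighted sums of outputs and inputs of unit \(j\) exactly as they appear in models ( 6 ) and ( 10 ), which are not reproduced in this excerpt, and let \(d_j = I_j - O_j\). Over the common feasible region,

\[
\max \sum_{j=1}^{n} \left( O_j - I_j \right)
= -\min \sum_{j=1}^{n} \left( I_j - O_j \right)
= -\min \sum_{j=1}^{n} d_j .
\]

Maximizing the first sum and minimizing the last are therefore attained at the same solutions, and the optimal values differ only in sign.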

About this article

Soltanifar, M., Hosseinzadeh Lotfi, F., Sharafi, H. et al. Resource allocation and target setting: a CSW–DEA based approach. Ann Oper Res 318, 557–589 (2022). https://doi.org/10.1007/s10479-022-04721-4

Accepted: 08 April 2022

Published: 30 April 2022

Issue Date: November 2022

DOI: https://doi.org/10.1007/s10479-022-04721-4

- Data envelopment analysis (DEA)

- Common set of weights (CSW)

- Resource allocation

- Target setting

- Multi-objective linear programming

- Goal programming (GP)

Originality will be understood as the extent to which the output makes an important and innovative contribution to understanding and knowledge in the field. Research outputs that demonstrate originality may do one or more of the following: produce and interpret new empirical findings or new material; engage with new and/or complex problems; develop innovative research methods, methodologies and ...

Mills-Scofield argues that outcomes, in contrast to outputs, are the "difference made by the outputs" (2012, n.p.). She cites examples such as better traffic flow, shorter travel times and fewer accidents as outcomes of a new highway, whereby the outputs are "project design and the number of highway miles built and repaired" (Mills-Scofield ...

Digital scholarship is a research output that significantly incorporates or relies on digital methodologies, authoring, and presentation. Digital scholarship often complements and adds to more traditional research outputs, and may be presented in a multimedia format. Some examples include mapping projects; multimodal projects that ...

This chapter provides an overview of considerations for the development of outcome measures for observational comparative effectiveness research (CER) studies, describes implications of the proposed outcomes for study design, and enumerates issues of bias that may arise in incorporating the ascertainment of outcomes into observational research, and means of evaluating, preventing and/or ...

This will target how we can demonstrate effective 'day-to-day' science and getting your PhD. This is the process of discussing our experiments, writing up our work and completing the PhD thesis. ... These milestones are often aligned with the output of key research outputs, such as papers, talks or reports, along the way and are likely ...

Additionally, evaluating a research group, a research center, or a department may be distinct from evaluating an individual and require somewhat different metrics (e.g., Hughes et al., 2010), but once suitable measures of output are available, productivity can be evaluated in terms of either years of effort, number of people involved, research ...

This chapter is aimed at enlightening researchers on the importance of taking their research outcomes to target beneficiaries. It explained the concept of research uptake, highlighted the benefits of conducting research uptake to both the target audience and researchers and further prescribed a guide to researchers on the process of conducting ...

The Excellence in Research for Australia assessment defines the following eligible research output types: books (authored research); chapters in research books (authored research); journal articles (refereed, scholarly journal); conference publications (full paper, refereed); original creative works; live performance of creative works.

Scholarly/research outputs and activities represent the various outputs and activities created or executed by scholars and investigators in the course of their academic and/or research efforts. One common output is in the form of scholarly publications, which are defined by Washington University as: "... articles, abstracts, presentations at ...

Furthermore, the proposed target output distribution accounts for a biased simulation model with stochastic outputs—specifically, simulation output distribution—using limited numbers of input and output test data. ... There have been numerous research efforts to account for reducible uncertainty due to limited test data for UQ tasks. First, ...

For a specific case, assessment results can be output by inputting 1 + ... which is made up of remarks of the research object; Step 3 The evaluation matrix: ... the total score of the PE for target tracking can be given by: D = 0.1494 × 90 + 0.4120 × 80 + 0.4386 × 70 = 77.108, and the result showed that the performance for target tracking ...
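The total score quoted above is a weighted sum of three sub-scores. A minimal Python sketch of that arithmetic follows; the weights and scores are taken from the snippet, while the function name `weighted_score` is a hypothetical helper introduced only for illustration.

```python
def weighted_score(weights, scores):
    """Return the weighted sum of scores (hypothetical helper)."""
    if len(weights) != len(scores):
        raise ValueError("weights and scores must have the same length")
    return sum(w * s for w, s in zip(weights, scores))


# Weights and sub-scores as quoted in the snippet above.
weights = [0.1494, 0.4120, 0.4386]
scores = [90, 80, 70]

total = weighted_score(weights, scores)
print(round(total, 3))
```

Rounded to three decimals, the result agrees with the quoted value D = 77.108.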

THE TARGET VALUE. Performance indicator target values should be expressed in the same data type as the performance indicator baseline and actual data. For instance, if the performance indicator is the number of the staff trained, then the target should be a count of staff trained, not a percentage change in the number of staff trained.

to apply the research recommendations and have the necessary skills to use or apply the results. The results of each research project need a strategy targeted at the intended users. Research organizations may have recognized that a publication is not enough to disseminate their information and influence implementation or change.

Abstract. Purpose: A study was conducted at two merged South African higher education institutions to determine which management factors, as identified in a literature study as well as through a ...

A creative research/problem-solving output in the form of design drawings, books, models, exhibitions, websites, installations or built works. This can include (but is not limited to): fashion/textile design, graphic design, interior design, industrial design, architectural design, multimedia design, and other designs.

The comparisons of the actual target output values and output from the neural network are given in Table 2. In the first case, the first output value m is perfectly matched with the target output ...
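A comparison of this kind can be sketched in a few lines of Python. The numbers below are hypothetical placeholders, not the values from Table 2 (which is not reproduced here), and `compare_outputs` is an illustrative helper name rather than anything from the cited work.

```python
def compare_outputs(target, predicted):
    """Return the absolute error for each target/predicted pair."""
    if len(target) != len(predicted):
        raise ValueError("target and predicted must have the same length")
    return [abs(t - p) for t, p in zip(target, predicted)]


# Hypothetical values chosen only to illustrate the comparison.
target = [1.0, 0.5, 0.25]
predicted = [1.0, 0.75, 0.0]

errors = compare_outputs(target, predicted)
mean_abs_error = sum(errors) / len(errors)

print(errors)          # an error of 0.0 means the output matches its target
print(mean_abs_error)
```

In this made-up case the first output matches its target exactly, mirroring the "perfectly matched" situation described above, while the remaining outputs deviate.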

Research on efficiency improvement or target setting in the DEA literature is scarce [2, 30]. However, there have been some contributions, such as combined goal programming and inverse DEA for bank mergers ... To maintain feasibility of the predefined input/output target in the models, the law of diminishing marginal returns suggests that, ...

Generally, these studies measure health research output for African countries using a narrow, though practical, definition of research output, i.e. the number of publications indexed in bibliographic databases. ... Percentage of health research institutions that have taken various types of action to share research findings with intended target ...

Furthermore, in order to achieve a multiplication factor F greater than one, with F defined as the ratio of output fusion energy to the energy of injected protons, it is found that there exists a minimum possible density of the boron target, which is 1.8 × 10^5 ρ_s when the kinetic energy of injected protons is 880 keV.

Resource allocation and target setting are part of the strategic management process of an organization. In this paper, we address the important issue of "optimally" allocating additional resources to the different operating units. Three different managerial interpretations of this question are presented, differing in their assumptions about the expected output increases. In each case, using ...