Types of Bias in Research | Definition & Examples

Research bias results from any deviation from the truth, causing distorted results and wrong conclusions. Bias can occur at any phase of your research, including during data collection, data analysis, interpretation, or publication. Research bias can occur in both qualitative and quantitative research.

Understanding research bias is important for several reasons.

- Bias exists in all research, across research designs, and is difficult to eliminate.

- Bias can occur at any stage of the research process.

- Bias impacts the validity and reliability of your findings, leading to misinterpretation of data.

It is almost impossible to conduct a study without some degree of research bias. It’s crucial for you to be aware of the potential types of bias, so you can minimize them.

For example, in a study of a weight-loss program, the measured success rate will likely be affected if participants start to drop out (attrition). Participants who become disillusioned due to not losing weight may drop out, while those who succeed in losing weight are more likely to continue. This in turn may bias the findings towards more favorable results.

Table of contents

- Information bias

- Interviewer bias

- Publication bias

- Researcher bias

- Response bias

- Selection bias

- Cognitive bias

- How to avoid bias in research

- Other types of research bias

- Frequently asked questions about research bias

Information bias, also called measurement bias, arises when key study variables are inaccurately measured or classified. Information bias occurs during the data collection step and is common in research studies that involve self-reporting and retrospective data collection. It can also result from poor interviewing techniques or differing levels of recall from participants.

The main types of information bias are:

- Recall bias

- Observer bias

- Performance bias

- Regression to the mean (RTM)

Over a period of four weeks, you ask students to keep a journal, noting how much time they spent on their smartphones along with any symptoms like muscle twitches, aches, or fatigue.

Recall bias is a type of information bias. It occurs when respondents are asked to recall events in the past and is common in studies that involve self-reporting.

As a rule of thumb, infrequent events (e.g., buying a house or a car) will be memorable for longer periods of time than routine events (e.g., daily use of public transportation). You can reduce recall bias by running a pilot survey and carefully testing recall periods. If possible, test both shorter and longer periods, checking for differences in recall.

- A group of children who have been diagnosed, called the case group

- A group of children who have not been diagnosed, called the control group

Since the parents are being asked to recall what their children generally ate over a period of several years, there is high potential for recall bias in the case group.

The best way to reduce recall bias is by ensuring your control group will have similar levels of recall bias to your case group. Parents of children who have childhood cancer, which is a serious health problem, are likely to be quite concerned about what may have contributed to the cancer.

Thus, if asked by researchers, these parents are likely to think very hard about what their child ate or did not eat in their first years of life. Parents of children with other serious health problems (aside from cancer) are also likely to be quite concerned about any diet-related question that researchers ask about.

Observer bias is the tendency of researchers (observers) to see what they expect or want to see, rather than what is actually occurring. Observer bias can affect the results in observational and experimental studies, where subjective judgment (such as assessing a medical image) or measurement (such as rounding blood pressure readings up or down) is part of the data collection process.

Observer bias leads to over- or underestimation of true values, which in turn compromises the validity of your findings. You can reduce observer bias by using double-blinded and single-blinded research methods.

Based on discussions you had with other researchers before starting your observations, you are inclined to think that medical staff tend to simply call each other when they need specific patient details or have questions about treatments.

At the end of the observation period, you compare notes with your colleague. Your conclusion was that medical staff tend to favor phone calls when seeking information, while your colleague noted down that medical staff mostly rely on face-to-face discussions. Seeing that your expectations may have influenced your observations, you and your colleague decide to conduct semi-structured interviews with medical staff to clarify the observed events. Note: Observer bias and actor–observer bias are not the same thing.

Performance bias is unequal care between study groups. Performance bias occurs mainly in medical research experiments, if participants have knowledge of the planned intervention, therapy, or drug trial before it begins.

Studies about nutrition, exercise outcomes, or surgical interventions are very susceptible to this type of bias. It can be minimized by using blinding, which prevents participants and/or researchers from knowing who is in the control or treatment groups. If blinding is not possible, then using objective outcomes (such as hospital admission data) is the best approach.

When the subjects of an experimental study change or improve their behavior because they are aware they are being studied, this is called the Hawthorne effect (or observer effect). Similarly, the John Henry effect occurs when members of a control group are aware they are being compared to the experimental group. This causes them to alter their behavior in an effort to compensate for their perceived disadvantage.

Regression to the mean (RTM) is a statistical phenomenon that refers to the fact that a variable that shows an extreme value on its first measurement will tend to be closer to the center of its distribution on a second measurement.

Medical research is particularly sensitive to RTM. Here, interventions aimed at a group or a characteristic that is very different from the average (e.g., people with high blood pressure) will appear to be successful because of the regression to the mean. This can lead researchers to misinterpret results, describing a specific intervention as causal when the change in the extreme groups would have happened anyway.

In general, among people with depression, certain physical and mental characteristics have been observed to deviate from the population mean.

This could lead you to think that the intervention was effective when those treated showed improvement on measured post-treatment indicators, such as reduced severity of depressive episodes.

However, given that such characteristics deviate more from the population mean in people with depression than in people without depression, this improvement could be attributed to RTM.
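Regression to the mean is easier to see in a small simulation. The sketch below is illustrative only: the blood pressure distribution, the noise level, the cutoff of 140, and the function name `simulate_rtm` are all assumptions made for this example. Nobody in the simulation receives any treatment, yet the group selected for its extreme first reading looks "improved" on the second reading.

```python
import random
import statistics

def simulate_rtm(n_people=10_000, cutoff=140.0, seed=0):
    """Measure everyone twice, with noise and with no intervention at all."""
    rng = random.Random(seed)
    # Assumed population: stable "true" value ~ N(120, 10); each reading
    # adds independent measurement noise ~ N(0, 10).
    true_values = [rng.gauss(120, 10) for _ in range(n_people)]
    first = [t + rng.gauss(0, 10) for t in true_values]
    second = [t + rng.gauss(0, 10) for t in true_values]

    # Select the "extreme" group using the first reading only.
    selected = [i for i, x in enumerate(first) if x >= cutoff]
    mean_first = statistics.mean(first[i] for i in selected)
    mean_second = statistics.mean(second[i] for i in selected)
    return len(selected), mean_first, mean_second

n_selected, mean_first, mean_second = simulate_rtm()
print(f"people selected for a high first reading: {n_selected}")
print(f"their mean first reading:  {mean_first:.1f}")
print(f"their mean second reading: {mean_second:.1f}")
```

The second mean sits between the first mean and the population average of 120, even though nothing was done between the two measurements. Comparing against a control group selected in the same way is one common way to separate a real treatment effect from this drift.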

Interviewer bias stems from the person conducting the research study. It can result from the way they ask questions or react to responses, but also from any aspect of their identity, such as their sex, ethnicity, social class, or perceived attractiveness.

Interviewer bias distorts responses, especially when the characteristics relate in some way to the research topic. Interviewer bias can also affect the interviewer’s ability to establish rapport with the interviewees, causing them to feel less comfortable giving their honest opinions about sensitive or personal topics.

Participant: “I like to solve puzzles, or sometimes do some gardening.”

You: “I love gardening, too!”

In this case, seeing your enthusiastic reaction could lead the participant to talk more about gardening.

Establishing trust between you and your interviewees is crucial in order to ensure that they feel comfortable opening up and revealing their true thoughts and feelings. At the same time, being overly empathetic can influence the responses of your interviewees, as seen above.

Publication bias occurs when the decision to publish research findings is based on their nature or the direction of their results. Studies reporting results that are perceived as positive, statistically significant, or favoring the study hypotheses are more likely to be published due to publication bias.

Publication bias is related to data dredging (also called p-hacking), where statistical tests are run on a set of data until a statistically significant result appears. As academic journals tend to prefer publishing statistically significant results, this can pressure researchers to submit only statistically significant results. P-hacking can also involve excluding participants or stopping data collection once a p value of 0.05 is reached. However, this leads to false positive results and an overrepresentation of positive results in published academic literature.
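The link between repeated testing and false positives can be sketched with simulated data in which there is no real effect at all. Everything here is an assumption chosen for illustration: the group size of 30, the 20 outcomes per study, the helper names, and the simplified z-test (which treats the standard deviation as known to be 1, which is true for this simulated data but rarely for real data).

```python
import random
from statistics import NormalDist, mean

def p_value_two_groups(a, b):
    """Two-sided z-test p-value, assuming equal group sizes and known SD 1."""
    n = len(a)
    z = (mean(a) - mean(b)) / (2 / n) ** 0.5
    return 2 * (1 - NormalDist().cdf(abs(z)))

def share_of_studies_with_a_hit(n_outcomes, n_studies=1000, n=30, seed=1):
    """Fraction of no-effect studies where at least one outcome has p < 0.05."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        for _ in range(n_outcomes):
            a = [rng.gauss(0, 1) for _ in range(n)]
            b = [rng.gauss(0, 1) for _ in range(n)]
            if p_value_two_groups(a, b) < 0.05:
                hits += 1
                break  # stop testing at the first "significant" result
    return hits / n_studies

one_test = share_of_studies_with_a_hit(n_outcomes=1)
twenty_tests = share_of_studies_with_a_hit(n_outcomes=20)
print(f"studies with a false positive, 1 outcome tested:   {one_test:.0%}")
print(f"studies with a false positive, 20 outcomes tested: {twenty_tests:.0%}")
```

With one planned test, roughly 5% of these no-effect studies come out "significant", as expected at the 0.05 threshold. When the analyst keeps testing outcomes until one crosses the threshold, most studies produce a publishable-looking result, which is why preregistering the analysis or correcting for multiple comparisons matters.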

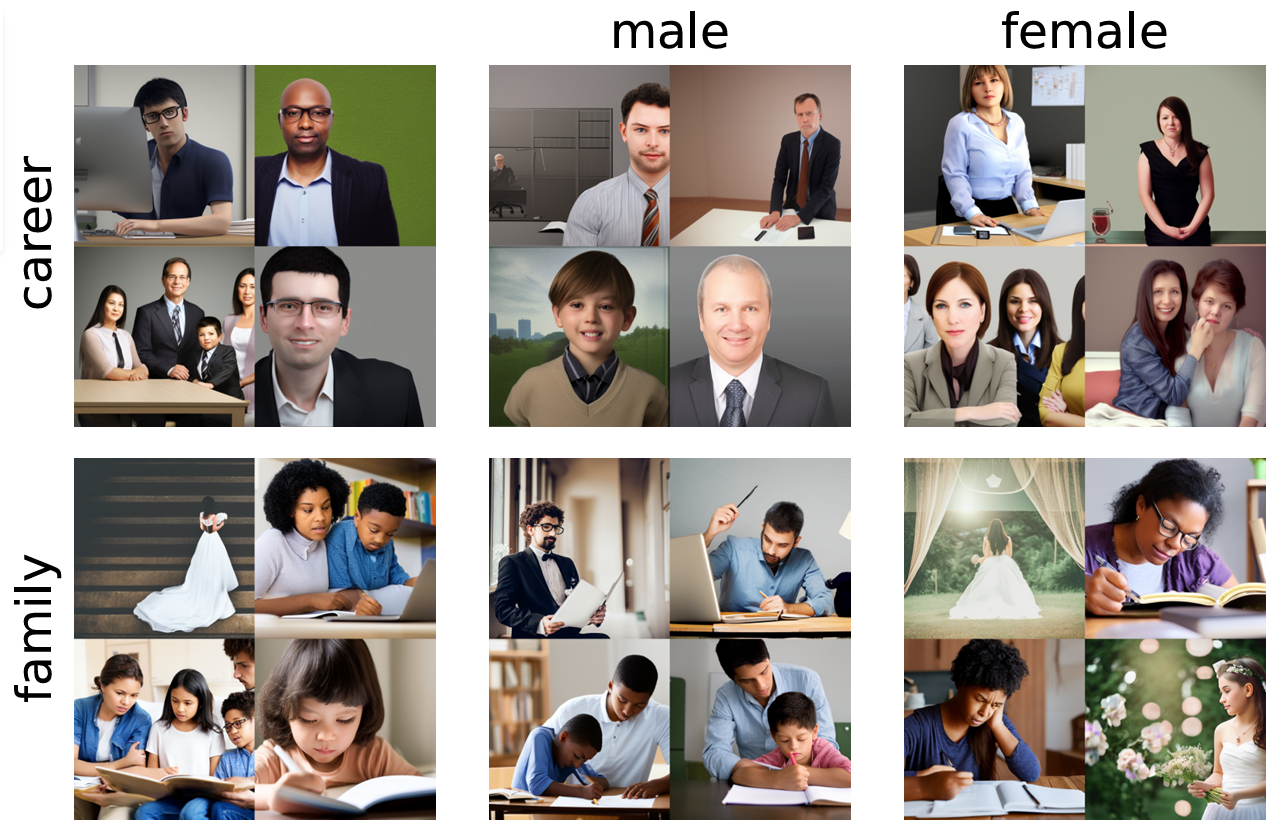

Researcher bias occurs when the researcher’s beliefs or expectations influence the research design or data collection process. Researcher bias can be deliberate (such as claiming that an intervention worked even if it didn’t) or unconscious (such as letting personal feelings, stereotypes, or assumptions influence research questions).

The unconscious form of researcher bias is associated with the Pygmalion effect (or Rosenthal effect), where the researcher’s high expectations (e.g., that patients assigned to a treatment group will succeed) lead to better performance and better outcomes.

Researcher bias is also sometimes called experimenter bias, but it applies to all types of investigative projects, rather than only to experimental designs.

- Good question: What are your views on alcohol consumption among your peers?

- Bad question: Do you think it’s okay for young people to drink so much?

Response bias is a general term used to describe a number of different situations where respondents tend to provide inaccurate or false answers to self-report questions, such as those asked on surveys or in structured interviews.

This happens because when people are asked a question (e.g., during an interview), they integrate multiple sources of information to generate their responses. Because of that, any aspect of a research study may potentially bias a respondent. Examples include the phrasing of questions in surveys, how participants perceive the researcher, or the desire of the participant to please the researcher and to provide socially desirable responses.

Response bias also occurs in experimental medical research. When outcomes are based on patients’ reports, a placebo effect can occur. Here, patients report an improvement despite having received a placebo, not an active medical treatment.

While interviewing a student, you ask them:

“Do you think it’s okay to cheat on an exam?”

Common types of response bias are:

- Acquiescence bias

- Demand characteristics

- Social desirability bias

- Courtesy bias

- Question-order bias

- Extreme responding

Acquiescence bias is the tendency of respondents to agree with a statement when faced with binary response options like “agree/disagree,” “yes/no,” or “true/false.” Acquiescence is sometimes referred to as “yea-saying.”

This type of bias occurs either due to the participant’s personality (i.e., some people are more likely to agree with statements than disagree, regardless of their content) or because participants perceive the researcher as an expert and are more inclined to agree with the statements presented to them.

Q: Are you a social person?

People who are inclined to agree with statements presented to them are at risk of selecting the first option, even if it isn’t fully supported by their lived experiences.

In order to control for acquiescence, consider tweaking your phrasing to encourage respondents to make a choice truly based on their preferences. Here’s an example:

Q: What would you prefer?

- A quiet night in

- A night out with friends

Demand characteristics are cues that could reveal the research agenda to participants, risking a change in their behaviors or views. Ensuring that participants are not aware of the research objectives is the best way to avoid this type of bias.

On each occasion, patients reported their pain as being less than prior to the operation. While at face value this seems to suggest that the operation does indeed lead to less pain, there is a demand characteristic at play. During the interviews, the researcher would unconsciously frown whenever patients reported more post-op pain. This increased the risk of patients figuring out that the researcher was hoping that the operation would have an advantageous effect.

Social desirability bias is the tendency of participants to give responses that they believe will be viewed favorably by the researcher or other participants. It often affects studies that focus on sensitive topics, such as alcohol consumption or sexual behavior.

You are conducting face-to-face semi-structured interviews with a number of employees from different departments. When asked whether they would be interested in a smoking cessation program, there was widespread enthusiasm for the idea.

Note that while social desirability and demand characteristics may sound similar, there is a key difference between them. Social desirability is about conforming to social norms, while demand characteristics revolve around the purpose of the research.

Courtesy bias stems from a reluctance to give negative feedback, so as to be polite to the person asking the question. Small-group interviewing where participants relate in some way to each other (e.g., a student, a teacher, and a dean) is especially prone to this type of bias.

Question order bias

Question order bias occurs when the order in which interview questions are asked influences the way the respondent interprets and evaluates them. This occurs especially when previous questions provide context for subsequent questions.

When answering subsequent questions, respondents may orient their answers to previous questions (called a halo effect), which can lead to systematic distortion of the responses.

Extreme responding is the tendency of a respondent to answer in the extreme, choosing the lowest or highest response available, even if that is not their true opinion. Extreme responding is common in surveys using Likert scales, and it distorts people’s true attitudes and opinions.

Disposition towards the survey can be a source of extreme responding, as well as cultural components. For example, people coming from collectivist cultures tend to exhibit extreme responses in terms of agreement, while respondents indifferent to the questions asked may exhibit extreme responses in terms of disagreement.

Selection bias is a general term describing situations where bias is introduced into the research from factors affecting the study population.

Common types of selection bias are:

- Sampling or ascertainment bias

- Attrition bias

- Self-selection (or volunteer) bias

- Survivorship bias

- Nonresponse bias

- Undercoverage bias

Sampling bias occurs when your sample (the individuals, groups, or data you obtain for your research) is selected in a way that is not representative of the population you are analyzing. Sampling bias threatens the external validity of your findings and influences the generalizability of your results.

The easiest way to prevent sampling bias is to use a probability sampling method. This way, each member of the population you are studying has a known, nonzero chance of being included in your sample (an equal chance, under simple random sampling).

Sampling bias is often referred to as ascertainment bias in the medical field.
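As a rough illustration of the difference between a convenience sample and a simple random sample, consider the sketch below. The population, its two subgroups, and the "income" values are entirely made up for this example; only `random.Random.sample` is a real standard-library call.

```python
import random
from statistics import mean

rng = random.Random(7)

# Assumed population of 10,000 people with an "income" attribute.
# The first 2,000 live near the recruiting site and earn more on average.
population = (
    [rng.gauss(80, 10) for _ in range(2000)]
    + [rng.gauss(50, 10) for _ in range(8000)]
)

convenience_sample = population[:500]        # only people near the site
random_sample = rng.sample(population, 500)  # simple random sample

population_mean = mean(population)
print(f"population mean:         {population_mean:.1f}")
print(f"convenience sample mean: {mean(convenience_sample):.1f}")
print(f"random sample mean:      {mean(random_sample):.1f}")
```

The convenience sample overstates the population mean because it draws from only one subgroup, while the random sample lands close to it. In practice you rarely have a complete list of the population to sample from, so this shows the principle rather than a full sampling procedure.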

Attrition bias occurs when participants who drop out of a study systematically differ from those who remain in the study. Attrition bias is especially problematic in randomized controlled trials for medical research because participants who do not like the experience or have unwanted side effects can drop out and affect your results.

You can minimize attrition bias by offering incentives for participants to complete the study (e.g., a gift card if they successfully attend every session). It’s also a good practice to recruit more participants than you need, or minimize the number of follow-up sessions or questions.

You provide a treatment group with weekly one-hour sessions over a two-month period, while a control group attends sessions on an unrelated topic. You complete five waves of data collection to compare outcomes: a pretest survey, three surveys during the program, and a posttest survey.
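To see how dropout that depends on the outcome can distort results, here is a minimal simulation sketch based on a weight-loss program. The weight changes, the dropout probabilities, and the function name `stays_in_study` are all assumptions invented for illustration, not data from a real study. The simulated program has no effect at all.

```python
import random
from statistics import mean

rng = random.Random(42)

# Assumed program with NO real effect: weight change ~ N(0, 3) kg.
changes = [rng.gauss(0, 3) for _ in range(5000)]

def stays_in_study(change_kg):
    """Assumed dropout rule: people who gained weight usually leave."""
    drop_probability = 0.7 if change_kg > 0 else 0.1
    return rng.random() > drop_probability

# Only participants who stay are observed at the end of the study.
completers = [c for c in changes if stays_in_study(c)]

all_mean = mean(changes)
completer_mean = mean(completers)
print(f"participants enrolled:  {len(changes)}")
print(f"participants remaining: {len(completers)}")
print(f"mean change, everyone:        {all_mean:+.2f} kg")
print(f"mean change, completers only: {completer_mean:+.2f} kg")
```

Analyzing only the completers makes an ineffective program look like it produces weight loss. This is one reason to report how many participants dropped out of each group and, where possible, to follow up with them.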

Self-selection or volunteer bias

Self-selection bias (also called volunteer bias) occurs when individuals who volunteer for a study have particular characteristics that matter for the purposes of the study.

Volunteer bias leads to biased data, as the respondents who choose to participate will not represent your entire target population. You can avoid this type of bias by using random assignment (i.e., placing participants in a control group or a treatment group after they have volunteered to participate in the study).

Closely related to volunteer bias is nonresponse bias, which occurs when a research subject declines to participate in a particular study or drops out before the study’s completion.

Considering that the hospital is located in an affluent part of the city, volunteers are more likely to have a higher socioeconomic standing, higher education, and better nutrition than the general population.

Survivorship bias occurs when you do not evaluate your data set in its entirety: for example, by only analyzing the patients who survived a clinical trial.

This strongly increases the likelihood that you draw (incorrect) conclusions based upon those who have passed some sort of selection process—focusing on “survivors” and forgetting those who went through a similar process and did not survive.

Note that “survival” here does not always refer to participants literally staying alive. Rather, participants who did not “survive” are those who did not successfully complete the intervention.

However, most college dropouts do not become billionaires. In fact, there are many more aspiring entrepreneurs who dropped out of college to start companies and failed than succeeded.

Nonresponse bias occurs when those who do not respond to a survey or research project are different from those who do in ways that are critical to the goals of the research. This is very common in survey research, when participants are unable or unwilling to participate due to factors like lack of the necessary skills, lack of time, or guilt or shame related to the topic.

You can mitigate nonresponse bias by offering the survey in different formats (e.g., an online survey, but also a paper version sent via post), ensuring confidentiality, and sending participants reminders to complete the survey.

You notice that your surveys were conducted during business hours, when the working-age residents were less likely to be home.

Undercoverage bias occurs when you only sample from a subset of the population you are interested in. Online surveys can be particularly susceptible to undercoverage bias. Despite being more cost-effective than other methods, they can introduce undercoverage bias as a result of excluding people who do not use the internet.

Cognitive bias refers to a set of predictable (i.e., nonrandom) errors in thinking that arise from our limited ability to process information objectively. Rather, our judgment is influenced by our values, memories, and other personal traits. These create “mental shortcuts” that help us process information intuitively and decide faster. However, cognitive bias can also cause us to misunderstand or misinterpret situations, information, or other people.

Because of cognitive bias, people often perceive events to be more predictable after they happen.

Although there is no general agreement on how many types of cognitive bias exist, some common types are:

- Anchoring bias

- Framing effect

- Actor-observer bias

- Availability heuristic (or availability bias)

- Confirmation bias

- Halo effect

- The Baader-Meinhof phenomenon

Anchoring bias

Anchoring bias is people’s tendency to fixate on the first piece of information they receive, especially when it concerns numbers. This piece of information becomes a reference point or anchor. Because of that, people base all subsequent decisions on this anchor. For example, initial offers have a stronger influence on the outcome of negotiations than subsequent ones.

Framing effect

Framing effect refers to our tendency to decide based on how the information about the decision is presented to us. In other words, our response depends on whether the option is presented in a negative or positive light, e.g., gain or loss, reward or punishment, etc. This means that the same information can be more or less attractive depending on the wording or what features are highlighted.

Actor–observer bias

Actor–observer bias occurs when you attribute the behavior of others to internal factors, like skill or personality, but attribute your own behavior to external or situational factors.

In other words, when you are the actor in a situation, you are more likely to link events to external factors, such as your surroundings or environment. However, when you are observing the behavior of others, you are more likely to associate behavior with their personality, nature, or temperament.

One interviewee recalls a morning when it was raining heavily. They were rushing to drop off their kids at school in order to get to work on time. As they were driving down the highway, another car cut them off as they were trying to merge. They tell you how frustrated they felt and exclaim that the other driver must have been a very rude person.

At another point, the same interviewee recalls that they did something similar: accidentally cutting off another driver while trying to take the correct exit. However, this time, the interviewee claimed that they always drive very carefully, blaming their mistake on poor visibility due to the rain.

Availability heuristic

Availability heuristic (or availability bias) describes the tendency to evaluate a topic using the information we can quickly recall, i.e., the information that is most available to us. However, this is not necessarily the best information. Rather, it is the most vivid or recent. Even so, due to this mental shortcut, we tend to think that what we can recall must be right and ignore any other information.

Confirmation bias

Confirmation bias is the tendency to seek out information in a way that supports our existing beliefs while also rejecting any information that contradicts those beliefs. Confirmation bias is often unintentional but still results in skewed results and poor decision-making.

Let’s say you grew up with a parent in the military. Chances are that you have a lot of complex emotions around overseas deployments. This can lead you to over-emphasize findings that “prove” that your lived experience is the case for most families, neglecting other explanations and experiences.

Halo effect

The halo effect refers to situations whereby our general impression about a person, a brand, or a product is shaped by a single trait. It happens, for instance, when we automatically make positive assumptions about people based on something positive we notice, while in reality, we know little about them.

The Baader-Meinhof phenomenon

The Baader-Meinhof phenomenon (or frequency illusion) occurs when something that you recently learned seems to appear “everywhere” soon after it was first brought to your attention. However, this is not the case. What has increased is your awareness of something, such as a new word or an old song you never knew existed, not their frequency.

While very difficult to eliminate entirely, research bias can be mitigated through proper study design and implementation. Here are some tips to keep in mind as you get started.

- Clearly explain in your methodology section how your research design will help you meet the research objectives and why this is the most appropriate research design.

- In quantitative studies, make sure that you use probability sampling to select the participants. If you’re running an experiment, make sure you use random assignment to assign your control and treatment groups.

- Account for participants who withdraw or are lost to follow-up during the study. If they are withdrawing for a particular reason, it could bias your results. This applies especially to longer-term or longitudinal studies.

- Use triangulation to enhance the validity and credibility of your findings.

- Phrase your survey or interview questions in a neutral, non-judgmental tone. Be very careful that your questions do not steer your participants in any particular direction.

- Consider using a reflexive journal. Here, you can log the details of each interview, paying special attention to any influence you may have had on participants. You can include these in your final analysis.
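The random assignment step mentioned in the tips above can be sketched in a few lines. The function name `randomly_assign`, the participant IDs, and the even two-group split are assumptions for this example. Real trials often use more elaborate schemes (such as block or stratified randomization).

```python
import random

def randomly_assign(participant_ids, seed=None):
    """Shuffle participants and split them into two groups of equal size."""
    rng = random.Random(seed)  # a fixed seed makes the allocation reproducible
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"treatment": shuffled[:half], "control": shuffled[half:]}

# Hypothetical study with 20 volunteers, numbered 1 to 20.
groups = randomly_assign(range(1, 21), seed=3)
print("treatment:", sorted(groups["treatment"]))
print("control:  ", sorted(groups["control"]))
```

Because chance alone decides who ends up in which group, neither the researcher's expectations nor the participants' preferences can systematically influence the allocation.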

Other types of research bias

- Baader–Meinhof phenomenon

- Sampling bias

- Ascertainment bias

- Self-selection bias

- Hawthorne effect

- Omitted variable bias

- Pygmalion effect

- Placebo effect

Research bias affects the validity and reliability of your research findings, leading to false conclusions and a misinterpretation of the truth. This can have serious implications in areas like medical research where, for example, a new form of treatment may be evaluated.

Observer bias occurs when the researcher’s assumptions, views, or preconceptions influence what they see and record in a study, while actor–observer bias refers to situations where respondents attribute others’ behavior to internal factors (e.g., bad character) and the same behavior in themselves to external factors (e.g., difficult circumstances).

Response bias is a general term used to describe a number of different conditions or factors that cue respondents to provide inaccurate or false answers during surveys or interviews. These factors range from the interviewer’s perceived social position or appearance to the phrasing of questions in surveys.

Nonresponse bias occurs when the people who complete a survey are different from those who did not, in ways that are relevant to the research topic. Nonresponse can happen because people are either not willing or not able to participate.


Research Bias 101: What You Need To Know

By: Derek Jansen (MBA) | Expert Reviewed By: Dr Eunice Rautenbach | September 2022

If you’re new to academic research, research bias (also sometimes called researcher bias) is one of the many things you need to understand to avoid compromising your study. If you’re not careful, research bias can ruin the credibility of your study.

In this post, we’ll unpack the thorny topic of research bias. We’ll explain what it is, look at some common types of research bias and share some tips to help you minimise the potential sources of bias in your research.

Overview: Research Bias 101

- What is research bias (or researcher bias)?

- Bias #1 – Selection bias

- Bias #2 – Analysis bias

- Bias #3 – Procedural (admin) bias

So, what is research bias?

Well, simply put, research bias is when the researcher – that’s you – intentionally or unintentionally skews the process of a systematic inquiry, which then of course skews the outcomes of the study. In other words, research bias is what happens when you affect the results of your research by influencing how you arrive at them.

For example, if you planned to research the effects of remote working arrangements across all levels of an organisation, but your sample consisted mostly of management-level respondents, you’d run into a form of research bias. In this case, excluding input from lower-level staff (in other words, not getting input from all levels of staff) means that the results of the study would be ‘biased’ in favour of a certain perspective – that of management.

Of course, if your research aims and research questions were only interested in the perspectives of managers, this sampling approach wouldn’t be a problem – but that’s not the case here, as there’s a misalignment between the research aims and the sample.

Now, it’s important to remember that research bias isn’t always deliberate or intended. Quite often, it’s just the result of a poorly designed study, or practical challenges in terms of getting a well-rounded, suitable sample. While perfect objectivity is the ideal, some level of bias is generally unavoidable when you’re undertaking a study. That said, as a savvy researcher, it’s your job to reduce potential sources of research bias as much as possible.

To minimise potential bias, you first need to know what to look for. So, next up, we’ll unpack three common types of research bias we see at Grad Coach when reviewing students’ projects. These include selection bias, analysis bias, and procedural bias. Keep in mind that there are many different forms of bias that can creep into your research, so don’t take this as a comprehensive list – it’s just a useful starting point.

Bias #1 – Selection Bias

First up, we have selection bias. The example we looked at earlier (about only surveying management as opposed to all levels of employees) is a prime example of this type of research bias. In other words, selection bias occurs when your study’s design automatically excludes a relevant group from the research process and, therefore, negatively impacts the quality of the results.

With selection bias, the results of your study will be biased towards the group that it includes or favours, meaning that you’re likely to arrive at prejudiced results. For example, research into government policies that only includes participants who voted for a specific party is going to produce skewed results, as the views of those who voted for other parties will be excluded.

Selection bias commonly occurs in quantitative research, as the sampling strategy adopted can have a major impact on the statistical results. That said, selection bias does of course also come up in qualitative research, as there’s still plenty of room for skewed samples. So, it’s important to pay close attention to the makeup of your sample and make sure that you adopt a sampling strategy that aligns with your research aims. Of course, you’ll seldom achieve a perfect sample, and that’s okay. But you need to be aware of how your sample may be skewed and factor this into your thinking when you analyse the resultant data.


Bias #2 – Analysis Bias

Next up, we have analysis bias. Analysis bias occurs when the analysis itself emphasises or discounts certain data points, so as to favour a particular result (often the researcher’s own expected result or hypothesis). In other words, analysis bias happens when you prioritise the presentation of data that supports a certain idea or hypothesis, rather than presenting all the data indiscriminately.

For example, if your study was looking into consumer perceptions of a specific product, you might present more analysis of data that reflects positive sentiment toward the product, and give less real estate to the analysis that reflects negative sentiment. In other words, you’d cherry-pick the data that suits your desired outcomes and as a result, you’d create a bias in terms of the information conveyed by the study.

Although this kind of bias is common in quantitative research, it can just as easily occur in qualitative studies, given the amount of interpretive power the researcher has. This may not be intentional or even noticed by the researcher, given the inherent subjectivity in qualitative research. As humans, we naturally search for and interpret information in a way that confirms or supports our prior beliefs or values (in psychology, this is called “confirmation bias”). So, don’t make the mistake of thinking that analysis bias is always intentional and you don’t need to worry about it because you’re an honest researcher – it can creep up on anyone.

To reduce the risk of analysis bias, a good starting point is to determine your data analysis strategy in as much detail as possible, before you collect your data. In other words, decide, in advance, how you’ll prepare the data and which analysis method you’ll use, and be aware of how different analysis methods can favour different types of data. Also, take the time to reflect on your own pre-conceived notions and expectations regarding the analysis outcomes (in other words, what do you expect to find in the data), so that you’re fully aware of the potential influence you may have on the analysis – and therefore, hopefully, can minimise it.

Bias #3 – Procedural Bias

Last but definitely not least, we have procedural bias, which is also sometimes referred to as administration bias. Procedural bias is easy to overlook, so it’s important to understand what it is and how to avoid it. This type of bias occurs when the administration of the study, especially the data collection aspect, has an impact on either who responds or how they respond.

A practical example of procedural bias would be when participants in a study are required to provide information under some form of constraint. For example, participants might be given insufficient time to complete a survey, resulting in incomplete or hastily filled-out forms that don’t necessarily reflect how they really feel. This can happen really easily if, for example, you innocently ask your participants to fill out a survey during their lunch break.

Another form of procedural bias can happen when you improperly incentivise participation in a study. For example, offering a reward for completing a survey or interview might incline participants to provide false or inaccurate information just to get through the process as fast as possible and collect their reward. It could also potentially attract a particular type of respondent (a freebie seeker), resulting in a skewed sample that doesn’t really reflect your demographic of interest.

The format of your data collection method can also potentially contribute to procedural bias. If, for example, you decide to host your survey or interviews online, this could unintentionally exclude people who are not particularly tech-savvy, don’t have a suitable device or just don’t have a reliable internet connection. On the flip side, some people might find in-person interviews a bit intimidating (compared to online ones, at least), or they might find the physical environment in which they’re interviewed to be uncomfortable or awkward (maybe the boss is peering into the meeting room, for example). Either way, these factors all result in less useful data.

Although procedural bias is more common in qualitative research, it can come up in any form of fieldwork where you’re actively collecting data from study participants. So, it’s important to consider how your data is being collected and how this might impact respondents. Simply put, you need to take the respondent’s viewpoint and think about the challenges they might face, no matter how small or trivial these might seem. So, it’s always a good idea to have an informal discussion with a handful of potential respondents before you start collecting data and ask for their input regarding your proposed plan upfront.

Let’s Recap

Ok, so let’s do a quick recap. Research bias refers to any instance where the researcher, or the research design, negatively influences the quality of a study’s results, whether intentionally or not.

The three common types of research bias we looked at are:

- Selection bias – where a skewed sample leads to skewed results

- Analysis bias – where the analysis method and/or approach leads to biased results

- Procedural bias – where the administration of the study, especially the data collection aspect, has an impact on who responds and how they respond.

As I mentioned, there are many other forms of research bias, but we can only cover a handful here. So, be sure to familiarise yourself with as many potential sources of bias as possible to minimise the risk of research bias in your study.


- Open access

- Published: 11 December 2020

Quantifying and addressing the prevalence and bias of study designs in the environmental and social sciences

- Alec P. Christie ORCID: orcid.org/0000-0002-8465-8410 1 ,

- David Abecasis ORCID: orcid.org/0000-0002-9802-8153 2 ,

- Mehdi Adjeroud 3 ,

- Juan C. Alonso ORCID: orcid.org/0000-0003-0450-7434 4 ,

- Tatsuya Amano ORCID: orcid.org/0000-0001-6576-3410 5 ,

- Alvaro Anton ORCID: orcid.org/0000-0003-4108-6122 6 ,

- Barry P. Baldigo ORCID: orcid.org/0000-0002-9862-9119 7 ,

- Rafael Barrientos ORCID: orcid.org/0000-0002-1677-3214 8 ,

- Jake E. Bicknell ORCID: orcid.org/0000-0001-6831-627X 9 ,

- Deborah A. Buhl 10 ,

- Just Cebrian ORCID: orcid.org/0000-0002-9916-8430 11 ,

- Ricardo S. Ceia ORCID: orcid.org/0000-0001-7078-0178 12 , 13 ,

- Luciana Cibils-Martina ORCID: orcid.org/0000-0002-2101-4095 14 , 15 ,

- Sarah Clarke 16 ,

- Joachim Claudet ORCID: orcid.org/0000-0001-6295-1061 17 ,

- Michael D. Craig 18 , 19 ,

- Dominique Davoult 20 ,

- Annelies De Backer ORCID: orcid.org/0000-0001-9129-9009 21 ,

- Mary K. Donovan ORCID: orcid.org/0000-0001-6855-0197 22 , 23 ,

- Tyler D. Eddy 24 , 25 , 26 ,

- Filipe M. França ORCID: orcid.org/0000-0003-3827-1917 27 ,

- Jonathan P. A. Gardner ORCID: orcid.org/0000-0002-6943-2413 26 ,

- Bradley P. Harris 28 ,

- Ari Huusko 29 ,

- Ian L. Jones 30 ,

- Brendan P. Kelaher 31 ,

- Janne S. Kotiaho ORCID: orcid.org/0000-0002-4732-784X 32 , 33 ,

- Adrià López-Baucells ORCID: orcid.org/0000-0001-8446-0108 34 , 35 , 36 ,

- Heather L. Major ORCID: orcid.org/0000-0002-7265-1289 37 ,

- Aki Mäki-Petäys 38 , 39 ,

- Beatriz Martín 40 , 41 ,

- Carlos A. Martín 8 ,

- Philip A. Martin 1 , 42 ,

- Daniel Mateos-Molina ORCID: orcid.org/0000-0002-9383-0593 43 ,

- Robert A. McConnaughey ORCID: orcid.org/0000-0002-8537-3695 44 ,

- Michele Meroni 45 ,

- Christoph F. J. Meyer ORCID: orcid.org/0000-0001-9958-8913 34 , 35 , 46 ,

- Kade Mills 47 ,

- Monica Montefalcone 48 ,

- Norbertas Noreika ORCID: orcid.org/0000-0002-3853-7677 49 , 50 ,

- Carlos Palacín 4 ,

- Anjali Pande 26 , 51 , 52 ,

- C. Roland Pitcher ORCID: orcid.org/0000-0003-2075-4347 53 ,

- Carlos Ponce 54 ,

- Matt Rinella 55 ,

- Ricardo Rocha ORCID: orcid.org/0000-0003-2757-7347 34 , 35 , 56 ,

- María C. Ruiz-Delgado 57 ,

- Juan J. Schmitter-Soto ORCID: orcid.org/0000-0003-4736-8382 58 ,

- Jill A. Shaffer ORCID: orcid.org/0000-0003-3172-0708 10 ,

- Shailesh Sharma ORCID: orcid.org/0000-0002-7918-4070 59 ,

- Anna A. Sher ORCID: orcid.org/0000-0002-6433-9746 60 ,

- Doriane Stagnol 20 ,

- Thomas R. Stanley 61 ,

- Kevin D. E. Stokesbury 62 ,

- Aurora Torres 63 , 64 ,

- Oliver Tully 16 ,

- Teppo Vehanen ORCID: orcid.org/0000-0003-3441-6787 65 ,

- Corinne Watts 66 ,

- Qingyuan Zhao 67 &

- William J. Sutherland 1 , 42

Nature Communications volume 11, Article number: 6377 (2020)


- Environmental impact

- Scientific community

- Social sciences

Building trust in science and evidence-based decision-making depends heavily on the credibility of studies and their findings. Researchers employ many different study designs that vary in their risk of bias to evaluate the true effect of interventions or impacts. Here, we empirically quantify, on a large scale, the prevalence of different study designs and the magnitude of bias in their estimates. Randomised designs and controlled observational designs with pre-intervention sampling were used by just 23% of intervention studies in biodiversity conservation, and 36% of intervention studies in social science. We demonstrate, through pairwise within-study comparisons across 49 environmental datasets, that these types of designs usually give less biased estimates than simpler observational designs. We propose a model-based approach to combine study estimates that may suffer from different levels of study design bias, discuss the implications for evidence synthesis, and how to facilitate the use of more credible study designs.


Introduction

The ability of science to reliably guide evidence-based decision-making hinges on the accuracy and credibility of studies and their results 1 , 2 . Well-designed, randomised experiments are widely accepted to yield more credible results than non-randomised, ‘observational studies’ that attempt to approximate and mimic randomised experiments 3 . Randomisation is a key element of study design that is widely used across many disciplines because of its ability to remove confounding biases (through random assignment of the treatment or impact of interest 4 , 5 ). However, ethical, logistical, and economic constraints often prevent the implementation of randomised experiments, whereas non-randomised observational studies have become popular as they take advantage of historical data for new research questions, larger sample sizes, less costly implementation, and more relevant and representative study systems or populations 6 , 7 , 8 , 9 . Observational studies nevertheless face the challenge of accounting for confounding biases without randomisation, which has led to innovations in study design.

We define ‘study design’ as an organised way of collecting data. Importantly, we distinguish between data collection and statistical analysis (as opposed to other authors 10 ) because of the belief that bias introduced by a flawed design is often much more important than bias introduced by statistical analyses. This was emphasised by Light, Singer & Willett 11 (p. 5): “You can’t fix by analysis what you bungled by design…”; and Rubin 3 : “Design trumps analysis.” Nevertheless, the importance of study design has often been overlooked in debates over the inability of researchers to reproduce the original results of published studies (so-called ‘reproducibility crises’ 12 , 13 ) in favour of other issues (e.g., p-hacking 14 and Hypothesizing After Results are Known or ‘HARKing’ 15 ).

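To demonstrate the importance of study designs, we can use the following decomposition of estimation error 16 :

$$\text{Estimation error} = \text{Estimator} - \text{True causal effect} = \text{Design bias} + \text{Modelling bias} + \text{Statistical noise} \tag{1}$$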

This demonstrates that even if we improve the quality of modelling and analysis (to reduce modelling bias through a better bias-variance trade-off 17 ) or increase sample size (to reduce statistical noise), we cannot remove the intrinsic bias introduced by the choice of study design (design bias) unless we collect the data in a different way. The importance of study design in determining the levels of bias in study results therefore cannot be overstated.
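The point that a larger sample reduces statistical noise but leaves design bias untouched can be illustrated with a small simulation. This is a minimal sketch, not part of the original analysis: the constants `TRUE_EFFECT`, `DESIGN_BIAS` and `NOISE_SD` are hypothetical values chosen for illustration, and modelling bias is assumed to be zero.

```python
import random
import statistics

TRUE_EFFECT = 2.0   # hypothetical true causal effect
DESIGN_BIAS = 1.5   # hypothetical bias built into how the data are collected
NOISE_SD = 4.0      # hypothetical spread of individual observations


def run_study(n, rng):
    """Simulate one study: the mean of n observations from a biased design."""
    return statistics.fmean(
        rng.gauss(TRUE_EFFECT + DESIGN_BIAS, NOISE_SD) for _ in range(n)
    )


def summarise_errors(n, replicates=200, seed=0):
    """Return (mean error, spread of errors) over repeated simulated studies."""
    rng = random.Random(seed)
    errors = [run_study(n, rng) - TRUE_EFFECT for _ in range(replicates)]
    return statistics.fmean(errors), statistics.stdev(errors)


for n in (10, 100, 1000):
    mean_error, spread = summarise_errors(n)
    print(f"n={n:5d}  mean error={mean_error:5.2f}  spread={spread:5.2f}")
```

In this sketch the spread of the errors shrinks as n grows, while the mean error stays close to the assumed design bias, which is the behaviour the decomposition predicts.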

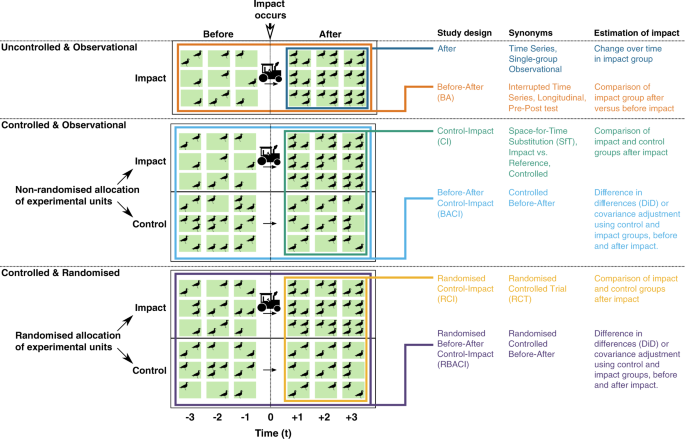

For the purposes of this study we consider six commonly used study designs; differences and connections can be visualised in Fig. 1 . There are three major components that allow us to define these designs: randomisation, sampling before and after the impact of interest occurs, and the use of a control group.

Fig. 1: A hypothetical study set-up is shown where the abundance of birds in three impact and control replicates (e.g., fields represented by blocks in a row) are monitored before and after an impact (e.g., ploughing) that occurs in year zero. Different colours represent each study design and illustrate how replicates are sampled. Approaches for calculating an estimate of the true effect of the impact for each design are also shown, along with synonyms from different disciplines.

Of the non-randomised observational designs, the Before-After Control-Impact (BACI) design uses a control group and samples before and after the impact occurs (i.e., in the ‘before-period’ and the ‘after-period’). Its rationale is to explicitly account for pre-existing differences between the impact group (exposed to the impact) and control group in the before-period, which might otherwise bias the estimate of the impact’s true effect 6 , 18 , 19 .

The BACI design improves upon several other commonly used observational study designs, of which there are two uncontrolled designs: After, and Before-After (BA). An After design monitors an impact group in the after-period, while a BA design compares the state of the impact group between the before- and after-periods. Both designs can be expected to yield poor estimates of the impact’s true effect (large design bias; Equation (1)) because changes in the response variable could have occurred without the impact (e.g., due to natural seasonal changes; Fig. 1 ).

The other observational design is Control-Impact (CI), which compares the impact group and control group in the after-period (Fig. 1 ). This design may suffer from design bias introduced by pre-existing differences between the impact group and control group in the before-period; bias that the BACI design was developed to account for 20 , 21 . These differences have many possible sources, including experimenter bias, logistical and environmental constraints, and various confounding factors (variables that change the propensity of receiving the impact), but can be adjusted for through certain data pre-processing techniques such as matching and stratification 22 .

Among the randomised designs, the most commonly used are counterparts to the observational CI and BACI designs: Randomised Control-Impact (R-CI) and Randomised Before-After Control-Impact (R-BACI) designs. The R-CI design, often termed ‘Randomised Controlled Trials’ (RCTs) in medicine and hailed as the ‘gold standard’ 23 , 24 , removes any pre-impact differences in a stochastic sense, resulting in zero design bias (Equation (1)). Similarly, the R-BACI design should also have zero design bias, and the impact group measurements in the before-period could be used to improve the efficiency of the statistical estimator. No randomised equivalents exist of After or BA designs as they are uncontrolled.

It is important to briefly note that there is debate over two major statistical methods that can be used to analyse data collected using BACI and R-BACI designs, and which is superior at reducing modelling bias 25 (Equation (1)). These statistical methods are: (i) Differences in Differences (DiD) estimator; and (ii) covariance adjustment using the before-period response, which is an extension of Analysis of Covariance (ANCOVA) for generalised linear models — herein termed ‘covariance adjustment’ (Fig. 1 ). These estimators rely on different assumptions to obtain unbiased estimates of the impact’s true effect. The DiD estimator assumes that the control group response accurately represents the impact group response had it not been exposed to the impact (‘parallel trends’ 18 , 26 ) whereas covariance adjustment assumes there are no unmeasured confounders and linear model assumptions hold 6 , 27 .
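To make the differences between the BA, CI and BACI designs concrete, the sketch below computes each design's estimate from the same set of bird counts. The counts are hypothetical and constructed so that the true effect of the impact is -5, both groups share a background trend of +3, and the impact fields start 4 birds higher than the controls. Only the DiD estimator is shown for BACI; covariance adjustment would need a regression and is not sketched here.

```python
from statistics import fmean

# Hypothetical bird counts for three control and three impact fields.
control_before = [10, 12, 11]
control_after = [13, 15, 14]   # +3 from a background trend shared by both groups
impact_before = [14, 16, 15]   # impact fields start 4 birds higher than controls
impact_after = [12, 14, 13]    # +3 trend, then -5 from the impact (the true effect)

# Before-After (BA): change in the impact group only.
ba = fmean(impact_after) - fmean(impact_before)

# Control-Impact (CI): impact versus control in the after-period only.
ci = fmean(impact_after) - fmean(control_after)

# BACI with the Differences in Differences (DiD) estimator:
# change in the impact group minus change in the control group.
did = ba - (fmean(control_after) - fmean(control_before))

print(f"BA={ba:+.1f}  CI={ci:+.1f}  BACI (DiD)={did:+.1f}")
```

With these numbers the script prints `BA=-2.0  CI=-1.0  BACI (DiD)=-5.0`. The BA estimate absorbs the background trend, the CI estimate absorbs the pre-existing difference, and DiD recovers the true effect only because the example was built to satisfy parallel trends exactly.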

From both theory and Equation (1), with similar sample sizes, randomised designs (R-BACI and R-CI) are expected to be less biased than controlled, observational designs with sampling in the before-period (BACI), which in turn should be superior to observational designs without sampling in the before-period (CI) or without a control group (BA and After designs 7 , 28 ). Between randomised designs, we might expect that an R-BACI design performs better than a R-CI design because utilising extra data before the impact may improve the efficiency of the statistical estimator by explicitly characterising pre-existing differences between the impact group and control group.

Given the likely differences in bias associated with different study designs, concerns have been raised over the use of poorly designed studies in several scientific disciplines 7 , 29 , 30 , 31 , 32 , 33 , 34 , 35 . Some disciplines, such as the social and medical sciences, commonly undertake direct comparisons of results obtained by randomised and non-randomised designs within a single study 36 , 37 , 38 or between multiple studies (between-study comparisons 39 , 40 , 41 ) to specifically understand the influence of study designs on research findings. However, within-study comparisons are limited in their scope (e.g., a single study 42 , 43 ) and between-study comparisons can be confounded by variability in context or study populations 44 . Overall, we lack quantitative estimates of the prevalence of different study designs and the levels of bias associated with their results.

In this work, we aim to first quantify the prevalence of different study designs in the social and environmental sciences. To fill this knowledge gap, we take advantage of summaries for several thousand biodiversity conservation intervention studies in the Conservation Evidence database 45 ( www.conservationevidence.com ) and social intervention studies in systematic reviews by the Campbell Collaboration ( www.campbellcollaboration.org ). We then quantify the levels of bias in estimates obtained by different study designs (R-BACI, R-CI, BACI, BA, and CI) by applying a hierarchical model to approximately 1000 within-study comparisons across 49 raw environmental datasets from a range of fields. We show that R-BACI, R-CI and BACI designs are poorly represented in studies testing biodiversity conservation and social interventions, and that these types of designs tend to give less biased estimates than simpler observational designs. We propose a model-based approach to combine study estimates that may suffer from different levels of study design bias, discuss the implications for evidence synthesis, and how to facilitate the use of more credible study designs.

Prevalence of study designs

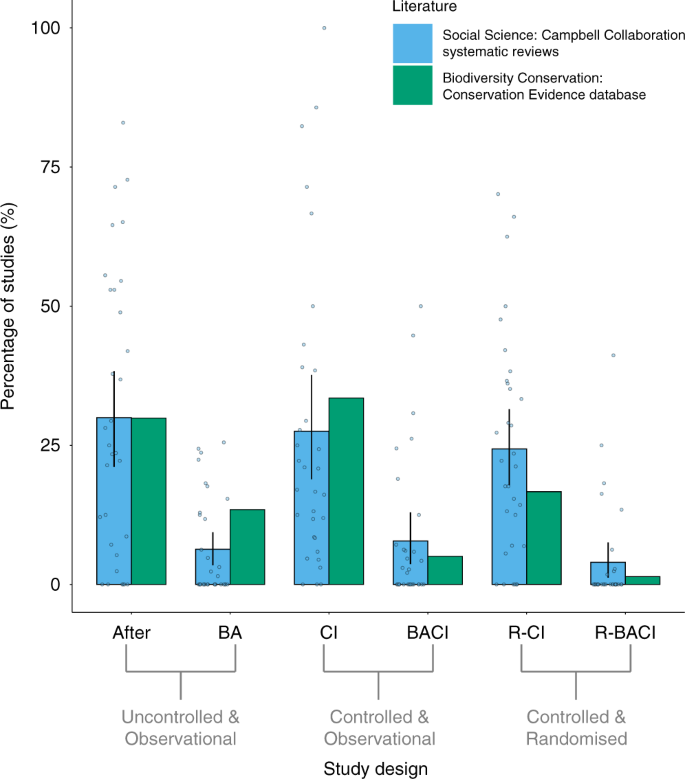

We found that the biodiversity-conservation (Conservation Evidence) and social-science (Campbell Collaboration) literature had similarly high proportions of intervention studies that used CI designs and After designs, but low proportions that used R-BACI, BACI, or BA designs (Fig. 2). There were slightly higher proportions of R-CI designs used by intervention studies in social-science systematic reviews than in the biodiversity-conservation literature (Fig. 2). The R-BACI, R-CI, and BACI designs made up 23% of intervention studies for biodiversity conservation, and 36% of intervention studies for social science.

Fig. 2: Intervention studies from the biodiversity-conservation literature were screened from the Conservation Evidence database (n = 4260 studies) and studies from the social-science literature were screened from 32 Campbell Collaboration systematic reviews (n = 1009 studies; note that studies excluded by these reviews based on their study design were still counted). Percentages for the social-science literature were calculated for each systematic review (blue data points) and then averaged across all 32 systematic reviews (blue bars and black vertical lines represent mean and 95% Confidence Intervals, respectively). Percentages for the biodiversity-conservation literature are absolute values (shown as green bars) calculated from the entire Conservation Evidence database (after excluding any reviews). Source data are provided as a Source Data file. BA before-after, CI control-impact, BACI before-after-control-impact, R-BACI randomised BACI, R-CI randomised CI.

Influence of different study designs on study results

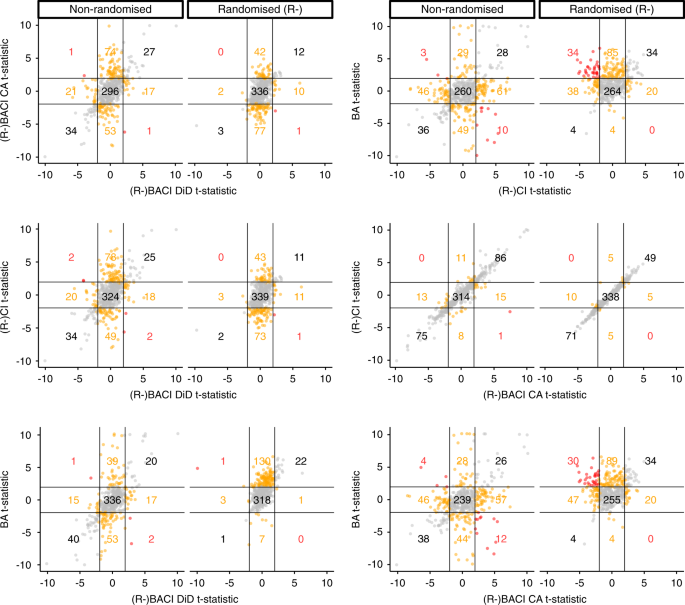

In non-randomised datasets, we found that estimates of BACI (with covariance adjustment) and CI designs were very similar, while the point estimates for most other designs often differed substantially in their magnitude and sign. We found similar results in randomised datasets for R-BACI (with covariance adjustment) and R-CI designs. For ~30% of responses, in both non-randomised and randomised datasets, study design estimates differed in their statistical significance (i.e., p < 0.05 versus p ≥ 0.05), except for estimates of (R-)BACI (with covariance adjustment) and (R-)CI designs (Table 1; Fig. 3). It was rare for the 95% confidence intervals of different designs’ estimates to not overlap – except when comparing estimates of BA designs to (R-)BACI (with covariance adjustment) and (R-)CI designs (Table 1). It was even rarer for estimates of different designs to have significantly different signs (i.e., one estimate with entirely negative confidence intervals versus one with entirely positive confidence intervals; Table 1, Fig. 3). Overall, point estimates often differed greatly in their magnitude and, to a lesser extent, in their sign between study designs, but did not differ as greatly when accounting for the uncertainty around point estimates – except in terms of their statistical significance.

Fig. 3: t-statistics were obtained from two-sided t-tests of estimates obtained by each design for different responses in each dataset using Generalised Linear Models (see Methods). For randomised datasets, BACI and CI axis labels refer to R-BACI and R-CI designs (denoted by ‘R-’). DiD Difference in Differences; CA covariance adjustment. Lines at t-statistic values of 1.96 denote boundaries between cells and colours of points indicate differences in direction and statistical significance (p < 0.05; grey = same sign and significance, orange = same sign but difference in significance, red = different sign and significance). Numbers refer to the number of responses in each cell. Source data are provided as a Source Data file. BA Before-After, CI Control-Impact, BACI Before-After-Control-Impact.
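The pairwise comparison described above, in which two designs' t-statistics for the same response are checked for agreement in sign and in significance at the 1.96 threshold, can be sketched as follows. The function name `compare_t_statistics` and the example t-values are hypothetical and introduced only for illustration; a t-statistic of exactly zero is treated as non-positive.

```python
def compare_t_statistics(t_a, t_b, critical=1.96):
    """Compare two designs' t-statistics for the same response.

    Returns (same_sign, same_significance), where significance means
    |t| > critical (roughly p < 0.05 for a two-sided test).
    """
    same_sign = (t_a > 0) == (t_b > 0)
    same_significance = (abs(t_a) > critical) == (abs(t_b) > critical)
    return same_sign, same_significance


# Hypothetical t-statistics for one response under three designs.
pairs = {
    "BACI vs CI": (2.4, 2.1),
    "BACI vs BA": (2.4, 0.7),
    "CI vs BA": (2.1, -2.6),
}
for label, (t_a, t_b) in pairs.items():
    print(label, compare_t_statistics(t_a, t_b))
```

Returning the two comparisons separately leaves it to the reader to map them onto whichever categories are of interest, such as the colour coding used in the figure.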

Levels of bias in estimates of different study designs

We modelled study design bias using a random effect across datasets in a hierarchical Bayesian model; σ is the standard deviation of the bias term, and assuming bias is randomly distributed across datasets and is on average zero, larger values of σ will indicate a greater magnitude of bias (see Methods). We found that, for randomised datasets, estimates of both R-BACI (using covariance adjustment; CA) and R-CI designs were affected by negligible amounts of bias (very small values of σ; Table 2 ). When the R-BACI design used the DiD estimator, it suffered from slightly more bias (slightly larger values of σ), whereas the BA design had very high bias when applied to randomised datasets (very large values of σ; Table 2 ). There was a highly positive correlation between the estimates of R-BACI (using covariance adjustment) and R-CI designs (Ω[R-BACI CA, R-CI] was close to 1; Table 2 ). Estimates of R-BACI using the DiD estimator were also positively correlated with estimates of R-BACI using covariance adjustment and R-CI designs (moderate positive mean values of Ω[R-BACI CA, R-BACI DiD] and Ω[R-BACI DiD, R-CI]; Table 2 ).
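One simplified way to write down the idea that σ measures the spread of design bias across datasets is given below. This is a sketch for intuition, not the model specified in the Methods: the symbols other than σ are assumptions introduced here, with the estimate from design j in dataset i, the true effect in dataset i, the design bias term, and the sampling error with standard error s. The sketch omits the standard-error scaling (λ) and the correlations between designs (Ω) that the full model includes.

```latex
\hat{\beta}_{ij} = \beta_i + \gamma_{ij} + \varepsilon_{ij}, \qquad
\gamma_{ij} \sim \mathcal{N}(0, \sigma_j^2), \qquad
\varepsilon_{ij} \sim \mathcal{N}(0, s_{ij}^2)
```

Under this reading, a design with σ close to zero produces estimates that deviate from the true effect only through sampling error, whereas a design with a large σ can produce estimates that are far off in either direction.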

For non-randomised datasets, controlled designs (BACI and CI) were substantially less biased (far smaller values of σ) than the uncontrolled BA design (Table 2 ). A BACI design using the DiD estimator was slightly less biased than the BACI design using covariance adjustment, which was, in turn, slightly less biased than the CI design (Table 2 ).

Standard errors estimated by the hierarchical Bayesian model were reasonably accurate for the randomised datasets (see λ in Methods and Table 2 ), whereas there was some underestimation of standard errors and lack-of-fit for non-randomised datasets.

Our approach provides a principled way to quantify the levels of bias associated with different study designs. We found that randomised study designs (R-BACI and R-CI) and observational BACI designs are poorly represented in the environmental and social sciences; collectively, descriptive case studies (the After design), the uncontrolled, observational BA design, and the controlled, observational CI design made up a substantially greater proportion of intervention studies (Fig. 2 ). And yet R-BACI, R-CI and BACI designs were found to be quantifiably less biased than other observational designs.

As expected the R-CI and R-BACI designs (using a covariance adjustment estimator) performed well; the R-BACI design using a DiD estimator performed slightly less well, probably because the differencing of pre-impact data by this estimator may introduce additional statistical noise compared to covariance adjustment, which controls for these data using a lagged regression variable. Of the observational designs, the BA design performed very poorly (both when analysing randomised and non-randomised data) as expected, being uncontrolled and therefore prone to severe design bias 7 , 28 . The CI design also tended to be more biased than the BACI design (using a DiD estimator) due to pre-existing differences between the impact and control groups. For BACI designs, we recommend that the underlying assumptions of DiD and CA estimators are carefully considered before choosing to apply them to data collected for a specific research question 6 , 27 . Their levels of bias were negligibly different and their known bracketing relationship suggests they will typically give estimates with the same sign, although their tendency to over- or underestimate the true effect will depend on how well the underlying assumptions of each are met (most notably, parallel trends for DiD and no unmeasured confounders for CA; see Introduction) 6 , 27 . Overall, these findings demonstrate the power of large within-study comparisons to directly quantify differences in the levels of bias associated with different designs.

We must acknowledge that the assumptions of our hierarchical model (that the bias for each design (j) is on average zero and normally distributed) cannot be verified without gold standard randomised experiments and that, for observational designs, the model was overdispersed (potentially due to underestimation of statistical error by GLM(M)s or positively correlated design biases). The exact values of our hierarchical model should therefore be treated with appropriate caution, and future research is needed to refine and improve our approach to quantify these biases more precisely. Responses within datasets may also not be independent as multiple species could interact; therefore, the estimates analysed by our hierarchical model are statistically dependent on each other, and although we tried to account for this using a correlation matrix (see Methods, Eq. ( 3 )), this is a limitation of our model. We must also recognise that we collated datasets using non-systematic searches 46 , 47 and therefore our analysis potentially exaggerates the intrinsic biases of observational designs (i.e., our data may disproportionately reflect situations where the BACI design was chosen to account for confounding factors). We nevertheless show that researchers were wise to use the BACI design because it was less biased than CI and BA designs across a wide range of datasets from various environmental systems and locations. Without undertaking costly and time-consuming pre-impact sampling and pilot studies, researchers are also unlikely to know the levels of bias that could affect their results. Finally, we did not consider sample size, but it is likely that researchers might use larger sample sizes for CI and BA designs than BACI designs. This is, however, unlikely to affect our main conclusions because larger sample sizes could increase type I errors (false positive rate) by yielding more precise, but biased estimates of the true effect 28 .

Our analyses provide several empirically supported recommendations for researchers designing future studies to assess an impact of interest. First, using a controlled and/or randomised design (if possible) was shown to strongly reduce the level of bias in study estimates. Second, when observational designs must be used (because randomisation is infeasible or too costly), we urge researchers to choose the BACI design over other observational designs and, when that is not possible, to choose the CI design over the uncontrolled BA design. We acknowledge that limited resources, short funding timescales, and ethical or logistical constraints 48 may force researchers to use the CI design (if randomisation and pre-impact sampling are impossible) or the BA design (if appropriate controls cannot be found 28 ). To facilitate the use of less biased designs, longer-term investments in research effort and funding are required 43 . Far greater emphasis on study designs in statistical education 49 , and better training and collaboration between researchers, practitioners and methodologists, are needed to improve the design of future studies; for example, potentially improving the CI design by pairing or matching the impact group and control group 22 , or improving the BA design using regression discontinuity methods 48 , 50 . Where the choice of study design is limited, researchers must transparently communicate the limitations and uncertainty associated with their results.

Our findings also have wider implications for evidence synthesis, specifically the exclusion of certain observational study designs from syntheses (the ‘rubbish in, rubbish out’ concept 51 , 52 ). We believe that observational designs should be included in systematic reviews and meta-analyses, but that careful adjustments are needed to account for their potential biases. Exclusion of observational studies often results from subjective, checklist-based ‘Risk of Bias’ or quality assessments of studies (e.g., AMSTAR 2 53 , ROBINS-I 54 , or GRADE 55 ) that are not data-driven and often neglect to identify the actual direction, or quantify the magnitude, of possible bias introduced by observational studies when rating the quality of a review’s recommendations. We also found that only a small proportion of studies used randomised designs (R-CI or R-BACI) or observational BACI designs (Fig. 2 ), suggesting that systematic reviews and meta-analyses risk excluding a substantial proportion of the literature and limiting the scope of their recommendations if such exclusion criteria are used 32 , 56 , 57 . This problem is compounded by the fact that, at least in conservation science, studies using randomised or BACI designs are strongly concentrated in Europe, Australasia, and North America 31 . Systematic reviews that rely on these few types of study designs are therefore likely to fail to provide decision-makers outside of these regions with the locally relevant recommendations that they prefer 58 . The Covid-19 pandemic has highlighted the difficulties in making locally relevant evidence-based decisions using studies conducted in different countries with different demographics and cultures, and on patients of different ages, ethnicities, genetics, and underlying health issues 59 . This problem is also acute for decision-makers working on biodiversity conservation in tropical regions, where the need for conservation is arguably the greatest (i.e., where most of Earth’s biodiversity exists 60 ) but where they must rely either on very few well-designed studies that are not locally relevant (i.e., have low generalisability) or on more studies that are locally relevant but less well-designed 31 , 32 . Either option could lead decision-makers to make ineffective or inefficient decisions. In the long term, improving the quality and coverage of scientific evidence and evidence syntheses across the world will help solve these issues, but shorter-term solutions to synthesising patchy evidence bases are required.

Our work furthers sorely needed research on how to combine evidence from studies that vary greatly in their design. Our approach is an alternative to conventional meta-analyses, which tend to weight studies only by their sample size or the inverse of their variance 61 ; when studies vary greatly in their study design, simply weighting by inverse variance or sample size is unlikely to account for different levels of bias introduced by different study designs (see Equation (1)). For example, a BA study could receive a larger weight if it had lower variance than a BACI study, despite our results suggesting a BA study usually suffers from greater design bias. Our model provides a principled way to weight studies by both their variance and the likely amount of bias introduced by their study design; it is therefore a form of ‘bias-adjusted meta-analysis’ 62 , 63 , 64 , 65 , 66 . However, instead of relying on elicitation of subjective expert opinions on the bias of each study, we provide a data-driven, empirical quantification of study biases – an important step that was called for to improve such meta-analytic approaches 65 , 66 .

Future research is needed to refine our methodology, but our empirically grounded form of bias-adjusted meta-analysis could be implemented as follows: (1) collate studies for the same true effect, their effect size estimates, standard errors, and the type of study design; (2) enter these data into our hierarchical model, where effect size estimates share the same intercept (the true causal effect), a random effect term due to design bias (whose variance is estimated by the method we used), and a random effect term for statistical noise (whose variance is estimated by the reported standard error of studies); (3) fit this model and estimate the shared intercept/true effect. Heuristically, this can be thought of as weighting studies by both their design bias and their sampling variance and could be implemented on a dynamic meta-analysis platform (such as metadataset.com 67 ). This approach has substantial potential to develop evidence synthesis in fields (such as biodiversity conservation 31 , 32 ) with patchy evidence bases, where reliably synthesising findings from studies that vary greatly in their design is a fundamental and unavoidable challenge.
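The weighting heuristic described above can be illustrated with a minimal sketch. It is not the hierarchical model itself: it assumes design biases are independent, have mean zero, and have known variances, and it simply weights each study by the inverse of its sampling variance plus its design-bias variance. The function name, the effect sizes, and the bias variances below are hypothetical placeholders, not estimates from this study.

```python
import numpy as np

def pooled_estimate(effects, std_errors, bias_variances):
    """Weighted mean with weights 1 / (sampling variance + design-bias variance)."""
    effects = np.asarray(effects, dtype=float)
    total_var = np.asarray(std_errors, dtype=float) ** 2 + np.asarray(bias_variances, dtype=float)
    weights = 1.0 / total_var
    estimate = np.sum(weights * effects) / np.sum(weights)
    std_error = np.sqrt(1.0 / np.sum(weights))
    return estimate, std_error

# Hypothetical studies of the same true effect, one per design.
designs = ["BA", "CI", "BACI"]
effects = np.array([0.9, 0.5, 0.3])
std_errors = np.array([0.1, 0.2, 0.2])

# Hypothetical design-bias variances (placeholders only).
bias_var_by_design = {"BA": 0.5, "CI": 0.2, "BACI": 0.05}
bias_variances = np.array([bias_var_by_design[d] for d in designs])

# Conventional inverse-variance weighting ignores design bias entirely.
naive, naive_se = pooled_estimate(effects, std_errors, np.zeros(len(effects)))
# Bias-adjusted weighting down-weights designs with larger bias variance.
adjusted, adjusted_se = pooled_estimate(effects, std_errors, bias_variances)
```

With these placeholder values the precise BA study dominates the conventional estimate, whereas the bias-adjusted estimate is pulled towards the BACI study, and its standard error is larger because design bias is treated as an extra source of uncertainty.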

Our study has highlighted an often overlooked aspect of debates over scientific reproducibility: that the credibility of studies is fundamentally determined by study design. Testing the effectiveness of conservation and social interventions is undoubtedly of great importance given the current challenges facing biodiversity and society in general and the serious need for more evidence-based decision-making 1 , 68 . And yet our findings suggest that quantifiably less biased study designs are poorly represented in the environmental and social sciences. Greater methodological training of researchers and funding for intervention studies, as well as stronger collaborations between methodologists and practitioners, are needed to facilitate the use of less biased study designs. Better communication and reporting of the uncertainty associated with different study designs is also needed, as well as more meta-research (the study of research itself) to improve standards of study design 69 . Our hierarchical model provides a principled way to combine studies using a variety of study designs that vary greatly in their risk of bias, enabling us to make more efficient use of patchy evidence bases. Ultimately, we hope that researchers and practitioners testing interventions will think carefully about the types of study designs they use, and we encourage the evidence synthesis community to embrace alternative methods for combining evidence from heterogeneous sets of studies to improve our ability to inform evidence-based decision-making in all disciplines.

Quantifying the use of different designs

We compared the use of different study designs in the literature that quantitatively tested interventions between the fields of biodiversity conservation (4,260 studies collated by Conservation Evidence 45 ) and social science (1,009 studies found by 32 systematic reviews produced by the Campbell Collaboration: www.campbellcollaboration.org ).

Conservation Evidence is a database of intervention studies, each of which has quantitatively tested a conservation intervention (e.g., sowing strips of wildflower seeds on farmland to benefit birds), that is continuously being updated through comprehensive, manual searches of conservation journals for a wide range of fields in biodiversity conservation (e.g., amphibian, bird, peatland, and farmland conservation 45 ). To obtain the proportion of studies that used each design from Conservation Evidence, we extracted the type of study design from each study in the database in 2019. The study design was determined using a standardised set of criteria (Table 3 ); reviews were not included. We checked whether the designs reported in the database accurately reflected the designs in the original publications and found that, for a random subset of 356 studies, 95.1% were accurately described.

Each systematic review produced by the Campbell Collaboration collates and analyses studies that test a specific social intervention; we collated systematic reviews that tested a variety of social interventions across several fields in the social sciences, including education, crime and justice, international development and social welfare (Supplementary Data 1 ). We retrieved systematic reviews produced by the Campbell Collaboration by searching their website ( www.campbellcollaboration.org ) for reviews published between 2013 and 2019 (as of 8th September 2019); we limited the date range because we could not go through every review. As we were interested in the use of study designs in the wider social-science literature, we only considered reviews (32 in total) that contained sufficient information on the number of included and excluded studies that used different study designs. Studies may be excluded from systematic reviews for several reasons, such as their relevance to the scope of the review (e.g., testing a relevant intervention) and their study design. We only considered studies if the sole reason for their exclusion from the systematic review was their study design – i.e., reviews clearly reported that the study was excluded because it used a particular study design, and not because of any other reason, such as its relevance to the review’s research questions. We calculated the proportion of studies that used each design in each systematic review (using the same criteria as for the biodiversity-conservation literature – see Table 3 ) and then averaged these proportions across all systematic reviews.
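The final averaging step can be sketched as follows. This is an illustrative example only: the function names and the two toy reviews are hypothetical, and each review is represented simply as a list of the design labels of its studies.

```python
from collections import Counter

def design_proportions(study_designs):
    """Proportion of studies using each design within a single review."""
    counts = Counter(study_designs)
    total = sum(counts.values())
    return {design: n / total for design, n in counts.items()}

def mean_proportions(reviews):
    """Average the per-review proportions so that every review counts equally."""
    per_review = [design_proportions(r) for r in reviews]
    all_designs = sorted({design for r in reviews for design in r})
    return {
        design: sum(p.get(design, 0.0) for p in per_review) / len(per_review)
        for design in all_designs
    }

# Hypothetical toy input: two reviews with four and two studies.
reviews = [
    ["R-CI", "R-CI", "CI", "BA"],
    ["CI", "BACI"],
]
averaged = mean_proportions(reviews)
```

Averaging per-review proportions gives each review equal weight regardless of how many studies it contains; pooling all studies first would give a different answer (here the CI design would be 2/6 rather than 0.375).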

Within-study comparisons of different study designs

We wanted to make direct within-study comparisons between the estimates obtained by different study designs (e.g., see 38 , 70 , 71 for single within-study comparisons) for many different studies. If a dataset contains data collected using a BACI design, subsets of these data can be used to mimic the use of other study designs (a BA design using only data for the impact group, and a CI design using only data collected after the impact occurred). Similarly, if data were collected using a R-BACI design, subsets of these data can be used to mimic the use of a BA design and a R-CI design. Collecting BACI and R-BACI datasets would therefore allow us to make direct within-study comparisons of the estimates obtained by these designs.
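The subsetting idea can be sketched in a few lines. This is a simplified illustration, not the models fitted in this study: it assumes the effect is summarised as a raw difference in group means, and the function name and toy values are hypothetical.

```python
import numpy as np

def mimic_designs(response, is_impact, is_after):
    """Estimate an effect from one BACI dataset as if it had been collected
    under a BA, a CI, or a BACI design (differences in group means)."""
    response = np.asarray(response, dtype=float)
    is_impact = np.asarray(is_impact, dtype=bool)
    is_after = np.asarray(is_after, dtype=bool)

    impact_before = response[is_impact & ~is_after].mean()
    impact_after = response[is_impact & is_after].mean()
    control_before = response[~is_impact & ~is_after].mean()
    control_after = response[~is_impact & is_after].mean()

    return {
        # BA: impact group only, after minus before.
        "BA": impact_after - impact_before,
        # CI: post-impact data only, impact minus control.
        "CI": impact_after - control_after,
        # BACI: change in the impact group minus change in the control group.
        "BACI": (impact_after - impact_before) - (control_after - control_before),
    }

# Hypothetical toy dataset: two observations per group and period.
response = [10, 12, 13, 15, 14, 16, 20, 22]
is_impact = [0, 0, 0, 0, 1, 1, 1, 1]
is_after = [0, 0, 1, 1, 0, 0, 1, 1]

estimates = mimic_designs(response, is_impact, is_after)
```

In this toy dataset the three designs give estimates of 6 (BA), 7 (CI), and 3 (BACI) from the same underlying data, which is the kind of direct within-study comparison described above.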