Teaching for Excellence and Equity, pp. 7–17

A Review of the Literature on Teacher Effectiveness and Student Outcomes

- Nathan Burroughs,

- Jacqueline Gardner,

- Youngjun Lee,

- Siwen Guo,

- Israel Touitou,

- Kimberly Jansen &

- William Schmidt

- Open Access

- First Online: 24 May 2019


Part of the book series: IEA Research for Education (IEAR, volume 6)

Researchers agree that teachers are one of the most important school-based resources in determining students’ future academic success and lifetime outcomes, yet they have had difficulty defining which teacher characteristics make for an effective teacher. This chapter reviews the large body of literature on measures of teacher effectiveness, underscoring the diversity of methods by which the general construct of “teacher quality” has been explored, including experience, professional knowledge, and opportunity to learn. Each of these concepts comprises a number of dimensions and can be operationalized in different ways. Single-country research (particularly research from the United States) is distinguished from genuinely comparative work. Despite a voluminous research literature on teacher quality, evidence for the impact of teacher characteristics (experience and professional knowledge) on student outcomes remains quite limited. There is a smaller but more robust set of findings for the effect of teachers’ provision of opportunity to learn. Five measures, grouped into three categories, may be associated with higher student achievement: teacher experience (measured by years of teaching), teacher professional knowledge (measured by education and self-reported preparation to teach mathematics), and teacher provision of opportunity to learn (measured by time on mathematics and content coverage). These factors provide the basis for a comparative cross-country model.

- Opportunity to learn

- Teacher education

- Teacher experience

- Teacher quality

- Trends in International Mathematics and Science Study (TIMSS)


2.1 Defining Teacher Effectiveness

Researchers agree that teachers are one of the most important school-based resources in determining students’ future academic success and lifetime outcomes (Chetty et al. 2014; Rivkin et al. 2005; Rockoff 2004). As a consequence, there has been a strong emphasis on improving teacher effectiveness as a means of enhancing student learning. Goe (2007), among others, defined teacher effectiveness in terms of growth in student learning, typically measured by student results on standardized assessments. Chetty et al. (2014) found that students taught by highly effective teachers, as defined by student growth percentiles (SGPs) and value-added measures (VAMs), were more likely to attend college, earn more, live in higher-income neighborhoods, and save more money for retirement, and were less likely to have children during their teenage years. Because a highly effective teacher can substantially improve students’ lives, it is essential that researchers and policymakers properly understand the factors that contribute to a teacher’s effectiveness. However, as we discuss in more detail below, studies have found mixed results regarding the relationships between specific teacher characteristics and student achievement (Wayne and Youngs 2003). In this chapter, we explore these findings, focusing on the three main categories of teacher effectiveness identified and examined in the research literature: teacher experience, teacher knowledge, and teacher behavior. We emphasize that much of the existing research is based on studies from the United States, so the applicability of these national findings to other contexts remains open to discussion.
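As a rough illustration of the value-added idea, the Python sketch below treats a teacher's contribution as the average amount by which their students' current scores exceed what prior-year scores predict. It rests on simplifying assumptions of our own: one pooled linear regression of current on prior score, no student or classroom controls, and no adjustment for noisy estimates from small classes. It is not the specification used by Chetty et al. (2014) or any other study cited here, and it does not compute student growth percentiles, which are derived differently. The function name `simple_value_added` and the toy scores are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def simple_value_added(records):
    """Crude value-added estimate per teacher (hypothetical helper).

    records: iterable of (teacher_id, prior_score, current_score) tuples.
    Returns {teacher_id: mean residual}, where a residual is a student's
    current score minus the score predicted from their prior score.
    """
    records = list(records)
    priors = [prior for _, prior, _ in records]
    currents = [current for _, _, current in records]

    # Pooled ordinary least squares fit: current = intercept + slope * prior.
    x_bar, y_bar = mean(priors), mean(currents)
    sxx = sum((x - x_bar) ** 2 for x in priors)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(priors, currents))
    slope = sxy / sxx  # assumes prior scores are not all identical
    intercept = y_bar - slope * x_bar

    # Record each student's residual under their teacher.
    residuals = defaultdict(list)
    for teacher, prior, current in records:
        residuals[teacher].append(current - (intercept + slope * prior))

    # A teacher's "value added" here is the average residual of their students.
    return {teacher: mean(res) for teacher, res in residuals.items()}


# Toy, made-up scores: (teacher, prior-year score, current-year score).
toy = [("A", 50, 58), ("A", 60, 67), ("B", 50, 52), ("B", 60, 61)]
print(simple_value_added(toy))
```

With these made-up scores, teacher A's students score about 3 points above prediction and teacher B's about 3 points below. Averaging residuals is the simplest possible choice; operational value-added models typically add covariates and shrink estimates for teachers with few students.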

2.2 Teacher Experience

Teacher experience refers to the number of years that a teacher has worked as a classroom teacher. Many studies show a positive relationship between teacher experience and student achievement (Wayne and Youngs 2003). For example, using data from 4000 teachers in North Carolina, researchers found that teacher experience was positively related to student achievement in both reading and mathematics (Clotfelter et al. 2006). Rice (2003) found that the relationship between teacher experience and student achievement was most pronounced for students at the secondary level. Additional work in US schools by Wiswall (2013), Papay and Kraft (2015), and Ladd and Sorenson (2017), as well as a Dutch twin study by Gerritsen et al. (2014), also indicated that teacher experience had a cumulative effect on student outcomes.

Meanwhile, other studies have failed to identify consistent and statistically significant associations between student achievement and teacher experience (Blomeke et al. 2016; Gustafsson and Nilson 2016; Hanushek and Luque 2003; Luschei and Chudgar 2011; Wilson and Floden 2003). Some research from the United States has indicated that experience matters a great deal early in a teacher’s career, but that later years bring little to no additional gain (Boyd et al. 2006; Rivkin et al. 2005; Staiger and Rockoff 2010). In the first few years of a teacher’s career, additional years of experience seem to be more strongly related to student achievement (Rice 2003). Rockoff (2004) found that, when comparing teacher effectiveness (understood as value-added) to student test scores in reading and mathematics, teacher experience was positively related to student mathematics achievement; however, this positive relationship leveled off once teachers had gained two years of teaching experience. Drawing on data collected from teachers of grades four to eight between 2000 and 2008 in a large urban school district in the United States, Papay and Kraft (2015) confirmed previous research on the benefits experience can bring early in a teacher’s career. They found that student outcomes increased most rapidly during their teachers’ first few years of employment, but they also found some further student gains associated with additional years of teaching experience beyond the first five years. The research of Pil and Leana (2009) adds further nuance: they found that acquiring experience at the same grade level over a number of years, not just teaching experience in general (i.e. across multiple grades), was positively related to student achievement.

2.3 Teacher Professional Knowledge

A teacher’s professional knowledge refers to their subject-matter knowledge, curricular knowledge, and pedagogical knowledge (Collinson 1999). This professional knowledge is influenced by the undergraduate degrees earned by a teacher, the college attended, graduate studies undertaken, and opportunities for on-the-job training, commonly referred to as professional development (Collinson 1999; Rice 2003; Wayne and Youngs 2003). After undertaking in-depth quantitative analyses of the United States’ 1993–1994 Schools and Staffing Survey (SASS) and National Assessment of Educational Progress (NAEP) data sets, Darling-Hammond (2000) argued that measures of teacher preparation and certification were by far the strongest correlates of student achievement in reading and mathematics, after controlling for student poverty levels and language status.

As with experience, research on the impact of teachers’ advanced degrees, subject specializations, and certification has been inconclusive, with several studies (Aaronson et al. 2007; Blomeke et al. 2016; Hanushek and Luque 2003; Harris and Sass 2011; Luschei and Chudgar 2011) suggesting weak, inconsistent, or non-significant relationships with student achievement. However, several international studies comparing country means found that teacher degrees were related to student outcomes (Akiba et al. 2007; Gustafsson and Nilson 2016; Montt 2011), as did Woessman’s (2003) student-level study of multiple countries.

2.3.1 Undergraduate Education

In their meta-analysis of teacher effectiveness, Wayne and Youngs (2003) found three studies that showed some relationship between the quality of the undergraduate institution a teacher attended and their future students’ success on standardized tests. In a thorough review of research on teacher attributes and effectiveness, Rice (2003) found that the selectivity of the undergraduate institution and of the teacher preparation program may be related to student achievement for students at the high school level and for high-poverty students.

In terms of teacher preparation programs, Boyd et al. (2009) found that these programs varied in their effectiveness. In their study of 31 teacher preparation programs that prepared teachers for the New York City School District, Boyd et al. (2009) drew on document analyses, interviews, surveys of teacher preparation instructors, surveys of participants and graduates, and student value-added scores. They found that a program that was effective in preparing teachers to teach one subject tended to be successful in preparing teachers to teach other subjects as well. They also found that teacher preparation programs that focused on the practice of teaching and the classroom, and that provided opportunities for teachers to study classroom practices, tended to prepare more effective teachers. Finally, they found that programs that included a final project element (such as a personal research paper or portfolio presentation) tended to prepare more effective teachers.

Beyond the institution a teacher attends, the coursework they choose to take within that program may also be related to their future students’ achievement, and these associations vary by subject matter. Rice (2003) indicated that, for teachers at the secondary level, subject-specific coursework had a greater impact on their future students’ achievement. Similarly, Goe (2007) found that, for mathematics, an increase in the amount of coursework undertaken by a trainee teacher was positively related to their future students’ achievement. By contrast, the meta-analysis completed by Wayne and Youngs (2003) found no evidence, for history and English teachers, of a relationship between a teacher’s undergraduate coursework and their future students’ achievement in those subjects.

2.3.2 Graduate Education

In a review of 14 studies, Wilson and Floden (2003) were unable to identify consistent relationships between a teacher’s level of education and their students’ achievement. Similarly, in their analysis of data from 4000 teachers in North Carolina, Clotfelter et al. (2006) found that teachers who held a master’s degree were associated with lower student achievement. However, specifically in mathematics instruction, having teachers with higher degrees who undertook more coursework during their education seems to be positively related to students’ mathematics achievement (Goe 2007). Likewise, Harris and Sass (2011) found a positive relationship between teachers obtaining an advanced degree during their teaching career and their students’ achievement in middle school mathematics; they did not find any significant relationships between advanced degrees and student achievement in any other subject area. Further, using data from the United States’ Early Childhood Longitudinal Study (ECLS-K), Phillips (2010) found that subject-specific graduate degrees in elementary or early-childhood education were positively related to students’ reading achievement gains.

2.3.3 Certification Status

Another possible indicator of teacher effectiveness is whether or not a teacher holds a teaching certificate. Much of this research has focused on the United States, which uses a variety of certification approaches: lower grades usually have multi-subject general certifications, while higher grades require certification in specific subjects. Wayne and Youngs (2003) found no clear relationship between US teachers’ certification status and their students’ achievement, except in mathematics, where students tended to have higher test scores when their teachers held a standard mathematics certification. Rice (2003) also found that US teacher certification was related to high school mathematics achievement, and found some evidence of a relationship between certification status and student achievement in lower grades. Meanwhile, in their study of grade one students, Palardy and Rumberger (2008) found evidence that students made greater gains in reading ability when taught by fully certified teachers.

In a longitudinal study using data on grade four and five teachers and their students in the Houston School District in Texas, Darling-Hammond et al. (2005) found that teachers who had completed training resulting in a recognized teaching certificate were more effective than those who had no dedicated teaching qualifications. The results suggested that teachers without recognized US certification, or with non-standard certifications, generally had negative effects on student achievement after controlling for student characteristics and prior achievement, as well as the teacher’s experience and degrees. The effects of teacher certification on student achievement were generally much stronger than the effects of teacher experience. Conversely, analyzing data from the ECLS-K, Phillips (2010) found that grade one students tended to have lower mathematics achievement gains when they had teachers with standard certification. In sum, the literature on the influence of teacher certification remains deeply ambiguous.

2.3.4 Professional Development

Although work by Desimone et al. (2002, 2013) suggested that professional development may influence the quality of instruction, most researchers have found that teachers’ professional development experiences show only limited associations with their effectiveness, with the possible exception of middle- and high-school mathematics teachers who undertook more content-focused training (Blomeke et al. 2016; Harris and Sass 2011). In their meta-analysis of the effects of professional development on student achievement, Blank and De Las Alas (2009) found that 16 studies reported significant and positive relationships between professional development and student achievement. For mathematics, the average effect size of studies using a pre-post assessment design was 0.21 standard deviations.
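To make the unit concrete: a pre-post effect size expresses the average gain as a fraction of a standard deviation of scores. One common form is shown below; which standard deviation each study in the meta-analysis used as the denominator is not stated here, so the formula is illustrative only. The 100-point standard deviation is a hypothetical figure chosen for round numbers, not a property of any test discussed in this chapter.

```latex
d = \frac{\bar{X}_{\text{post}} - \bar{X}_{\text{pre}}}{s},
\qquad
d = 0.21,\; s = 100 \ \text{(hypothetical)}
\;\Rightarrow\;
\bar{X}_{\text{post}} - \bar{X}_{\text{pre}} = 0.21 \times 100 = 21 \ \text{points}.
```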

Analyzing six data sets (two from the Beginning Teacher Preparation Survey conducted in Connecticut and Tennessee, and four from the United States National Center for Education Statistics’ National Assessment of Educational Progress, NAEP), Wallace (2009) used structural equation modeling and found that professional development had a very small, but occasionally statistically significant, effect on student achievement. She found, for example, that for NAEP mathematics data from the year 2000, 1.2 additional hours of professional development per year were related to an increase in average student scores of 0.62 points, and that for reading, an additional 1.1 hours of professional development were related to an average increase in student scores of 0.24 points. Overall, Wallace (2009) found that professional development had moderate effects on teacher practice and some small effects on student achievement when mediated by teacher practice.
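Rescaling the NAEP figures reported by Wallace (2009) to a per-hour rate shows how small these effects are. The per-hour figures are our own arithmetic on the numbers quoted above and assume, purely for illustration, that the relationship is linear in hours.

```latex
\text{Mathematics: } \frac{0.62 \ \text{points}}{1.2 \ \text{hours}} \approx 0.52 \ \text{points per hour},
\qquad
\text{Reading: } \frac{0.24 \ \text{points}}{1.1 \ \text{hours}} \approx 0.22 \ \text{points per hour}.
```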

2.3.5 Teacher Content Knowledge

Of course, characteristics like experience and education may be imperfect proxies for teacher content knowledge; unfortunately, content knowledge is difficult to assess directly. However, a growing body of work suggests that teacher content knowledge may be associated with student learning. There is an important distinction between general content knowledge about a subject (CK) and pedagogical content knowledge (PCK) specific to teaching that subject, each of which may be independently related to student outcomes (Baumert et al. 2010).

Studies from the United States (see, for example, Chingos and Peterson 2011; Clotfelter et al. 2006; Constantine et al. 2009; Hill et al. 2005; Shuls and Trivitt 2015) have found some evidence that higher teacher cognitive skills in mathematics are associated with higher student scores. Positive associations between teacher content knowledge and student outcomes were also found in studies based in Germany (Baumert et al. 2010) and Peru (Metzler and Woessman 2012), and in a comparative study by Hanushek et al. (2018) using data from the Programme for the International Assessment of Adult Competencies (PIAAC). These findings are not universal, however: other studies from the United States (Blazar 2015; Garet et al. 2016; Rockoff et al. 2011) failed to find a statistically significant association between teacher content knowledge and student learning.

The studies discussed so far all used some direct measure of teacher content knowledge. An alternative way of assessing mathematics teachers’ content knowledge is teachers’ self-reported preparation to teach mathematics topics. Both TIMSS and IEA’s Teacher Education and Development Study in Mathematics (TEDS-M, conducted in 2007–2008) included many questions asking teachers to report on their preparedness to teach particular topics. Although Luschei and Chudgar (2011) and Gustafsson and Nilson (2016) found that these items had a weak direct relationship to student achievement across countries, other studies have suggested that preparedness is related to instructional quality (Blomeke et al. 2016), as well as to content knowledge and content preparation (Schmidt et al. 2017), suggesting that preparedness may have an indirect effect on student learning through instructional quality.

2.4 Teacher Behaviors and Opportunity to Learn

Although the impact of teacher characteristics (experience, education, and preparedness to teach) on student outcomes remains an open question, there is a much more consistent relationship between student achievement and teacher behaviors (instructional time and instructional content), especially behaviors related to instructional content. Analyzing TIMSS data, Schmidt et al. (2001) found an association between student achievement and classroom opportunity to learn (OTL), interpreted narrowly as student exposure to instructional content. In a later study using student-level PISA data, Schmidt et al. (2015) identified a robust relationship between OTL and mathematics literacy across 62 different educational systems. National policymakers have recognized the importance of instructional content, which has helped motivate standards-based reforms intended to improve student achievement, such as the Common Core in the United States (Common Core Standards Initiative 2018). However, we found little research on whether aligning teachers’ instructional content with national standards has improved student learning; the only study we were able to identify found that such alignment had only very weak associations with student mathematics scores (Polikoff and Porter 2014). Student-reported data indicate that instructional time (understood as classroom time spent on a particular subject) does seem to be related to mathematics achievement (Cattaneo et al. 2016; Jerrim et al. 2017; Lavy 2015; Rivkin and Schiman 2015; Woessman 2003).

2.5 Conclusion

This review of the literature only scratches the surface of the extensive body of work on the relationship between student achievement and teacher characteristics and behaviors. Whether one analyzes US-based, international, or the (limited number of) comparative studies, the associations between easily measurable teacher characteristics, like experience and education, and student outcomes in mathematics remain debatable. In contrast, there is more evidence to support the impact of teacher behaviors, such as instructional content and time on task, on student achievement. Our goal was to incorporate all these factors into a comparative model across countries, with the aim of determining what a cross-national study like TIMSS could reveal about the influence of teachers on student outcomes in mathematics. The analysis that follows draws on the existing literature on teacher effectiveness, which identifies key teacher factors that may be associated with higher student achievement: teacher experience, teacher professional knowledge (measured by education and self-reported preparation to teach mathematics), and teacher provision of opportunity to learn (time on mathematics and content coverage).

Aaronson, D., Barrow, L., & Sander, W. (2007). Teachers and student achievement in the Chicago public high schools. Journal of Labor Economics, 25 (1), 95–135.


Akiba, M., LeTendre, G., & Scribner, J. (2007). Teacher quality, opportunity gap, and national achievement in 46 countries. Educational Researcher, 36 (7), 369–387.

Baumert, J., Kunter, M., Blum, W., Brunner, M., Voss, T., Jordan, A., et al. (2010). Teachers’ mathematical knowledge, cognitive activation in the classroom, and student progress. American Educational Research Journal, 47 (1), 133–180.

Blank, R. K., & De Las Alas, N. (2009). The effects of teacher professional development on gains in student achievement: How meta analysis provides scientific evidence useful to education leaders. Washington, DC: Council of Chief State School Officers. Retrieved from https://files.eric.ed.gov/fulltext/ED544700.pdf

Blazar, D. (2015). Effective teaching in elementary mathematics: Identifying classroom practices that support student achievement. Economics of Education Review, 48, 16–29.

Blomeke, S., Olsen, R., & Suhl, U. (2016). Relation of student achievement to the quality of their teachers and instructional quality. In T. Nilson & J. Gustafsson (Eds.), Teacher quality, instructional quality and student outcomes. IEA Research for Education (Vol. 2, pp. 21–50). Cham, Switzerland: Springer. Retrieved from https://link.springer.com/chapter/10.1007/978-3-319-41252-8_2

Boyd, D., Grossman, P., Lankford, H., & Loeb, S. (2006). How changes in entry requirements alter the teacher workforce and affect student achievement. Education Finance and Policy, 1 (2), 176–216.

Boyd, D. J., Grossman, P. L., Lankford, H., Loeb, S., & Wyckoff, J. (2009). Teacher preparation and student achievement. Educational Evaluation and Policy Analysis, 31 (4), 416–440.

Cattaneo, M., Oggenfuss, C., & Wolter, S. (2016). The more, the better? The impact of instructional time on student performance. Institute for the Study of Labor (IZA) Discussion Paper No. 9797. Bonn, Germany: Forschungsinstitut zur Zukunft der Arbeit/Institute for the Study of Labor. Retrieved from ftp.iza.org/dp9797.pdf

Chetty, R., Friedman, J. N., & Rockoff, J. E. (2014). Measuring the impacts of teachers II: Teacher value-added and student outcomes in adulthood. American Economic Review, 104 (9), 2633–2679.

Chingos, M., & Peterson, P. (2011). It’s easier to pick a good teacher than to train one: Familiar and new results on the correlates of teacher effectiveness. Economics of Education Review, 30 (3), 449–465.

Clotfelter, C. T., Ladd, H. F., & Vigdor, J. L. (2006). Teacher-student matching and the assessment of teacher effectiveness. Journal of Human Resources, 41 (4), 778–820.

Collinson, V. (1999). Redefining teacher excellence. Theory Into Practice, 38 (1), 4–11. Retrieved from https://doi.org/10.1080/00405849909543824

Common Core Standards Initiative. (2018). Preparing America’s students for success [www document]. Retrieved from http://www.corestandards.org/

Constantine, J., Player, D., Silva, T., Hallgren, K., Grider, M., & Deke, J. (2009). An evaluation of teachers trained through different routes to certification. National Center for Education Evaluation and Regional Assistance paper 2009-4043. Washington, DC: National Center for Education Evaluation and Regional Assistance, Institute of Education Sciences, US Department of Education. Retrieved from https://ies.ed.gov/ncee/pubs/20094043/

Darling-Hammond, L. (2000). Teacher quality and student achievement. Education Policy Analysis Archives, 8, 1. Retrieved from https://epaa.asu.edu/ojs/article/viewFile/392/515

Darling-Hammond, L., Holtzman, D. J., Gatlin, S. J., & Vasquez Heilig, J. (2005). Does teacher preparation matter? Evidence about teacher certification, Teach for America, and teacher effectiveness. Education Policy Analysis Archives, 13, 42. Retrieved from https://epaa.asu.edu/ojs/article/view/147

Desimone, L., Porter, A., Garet, M., Yoon, K., & Birman, B. (2002). Effects of professional development on teachers’ instruction: Results from a three-year longitudinal study. Educational Evaluation and Policy Analysis, 24 (2), 81–112.

Desimone, L., Smith, T., & Phillips, K. (2013). Teacher and administrator responses to standards-based reform. Teachers College Record, 115 (8), 1–53.


Garet, M. S., Heppen, J. B., Walters, K., Parkinson, J., Smith, T. M., Song, M., et al. (2016). Focusing on mathematical knowledge: The impact of content-intensive teacher professional development. National Center for Education Evaluation and Regional Assistance paper 2016-4010. Washington, DC: National Center for Education Evaluation and Regional Assistance, Institute of Education Sciences, US Department of Education. Retrieved from https://ies.ed.gov/ncee/pubs/20094010/

Gerritsen, S., Plug, E., & Webbink, D. (2014). Teacher quality and student achievement: Evidence from a Dutch sample of twins. CPB discussion paper 294. The Hague, The Netherlands: Central Plan Bureau/Netherlands Bureau for Economic Policy Analysis. Retrieved from https://ideas.repec.org/p/cpb/discus/294.html

Goe, L. (2007). The link between teacher quality and student outcomes: A research synthesis. NCCTQ Report. Washington, DC: National Comprehensive Center for Teacher Quality. Retrieved from http://www.gtlcenter.org/sites/default/files/docs/LinkBetweenTQandStudentOutcomes.pdf

Gustafsson, J., & Nilson, T. (2016). The impact of school climate and teacher quality on mathematics achievement: A difference-in-differences approach. In T. Nilson & J. Gustafsson (Eds.), Teacher quality, instructional quality and student outcomes. IEA Research for Education (Vol. 2, pp. 81–95). Cham, Switzerland: Springer. Retrieved from https://link.springer.com/chapter/10.1007/978-3-319-41252-8_4

Hanushek, E., & Luque, J. (2003). Efficiency and equity in schools around the world. Economics of Education Review, 22 (5), 481–502.

Hanushek, E., Piopiunik, M., & Wiederhold, S. (2018). The value of smarter teachers: International evidence on teacher cognitive skills and student performance. Journal of Human Resources (in press). https://doi.org/10.3368/jhr.55.1.0317.8619r1

Harris, D. N., & Sass, T. R. (2011). Teacher training, teacher quality and student achievement. Journal of Public Economics, 95 (7–8), 798–812.

Hill, H., Rowan, B., & Ball, D. (2005). Effects of teachers’ mathematical knowledge for teaching on student achievement. American Educational Research Journal, 42 (2), 371–406.

Jerrim, J., Lopez-Agudo, L., Marcenaro-Gutierrez, O., & Shure, N. (2017). What happens when econometrics and psychometrics collide? An example using the PISA data. Economics of Education Review, 61, 51–58.

Ladd, H. F., & Sorenson, L. C. (2017). Returns to teacher experience: Student achievement and motivation in middle school. Education Finance and Policy, 12 (2), 241–279. Retrieved from https://www.mitpressjournals.org/doi/10.1162/EDFP_a_00194

Lavy, V. (2015). Do differences in schools’ instruction time explain international achievement gaps? Evidence from developed and developing countries. The Economic Journal, 125 (11), 397–424.

Luschei, T., & Chudgar, A. (2011). Teachers, student achievement, and national income: A cross-national examination of relationships and interactions. Prospects, 41, 507–533.

Metzler, J., & Woessman, L. (2012). The impact of teacher subject knowledge on student achievement: Evidence from within-teacher within-student variation. Journal of Development Economics, 99 (2), 486–496.

Montt, G. (2011). Cross-national differences in educational achievement inequality. Sociology of Education, 84 (1), 49–68.

Palardy, G. J., & Rumberger, R. W. (2008). Teacher effectiveness in first grade: The importance of background qualifications, attitudes, and instructional practices for student learning. Educational Evaluation and Policy Analysis, 30 (2), 111–140.

Papay, J., & Kraft, M. (2015). Productivity returns to experience in the teacher labor market: Methodological challenges and new evidence on long-term career improvement. Journal of Public Economics, 130, 105–119.

Phillips, K. J. (2010). What does ‘highly qualified’ mean for student achievement? Evaluating the relationships between teacher quality indicators and at-risk students’ mathematics and reading achievement gains in first grade. The Elementary School Journal, 110 (4), 464–493.

Pil, F. K., & Leana, C. (2009). Applying organizational research to public school reform: The effects of teacher human and social capital on student performance. Academy of Management Journal, 52 (6), 1101–1124.

Polikoff, M., & Porter, A. (2014). Instructional alignment as a measure of teaching quality. Educational Evaluation and Policy Analysis, 36 (4), 399–416.

Rice, J. K. (2003). Teacher quality: Understanding the effectiveness of teacher attributes. Washington, DC: Economic Policy Institute.

Rivkin, S., Hanushek, E., & Kain, J. (2005). Teachers, schools, and academic achievement. Econometrica, 73 (2), 417–458.

Rivkin, S., & Schiman, J. (2015). Instruction time, classroom quality, and academic achievement. The Economic Journal, 125 (11), 425–448.

Rockoff, J. (2004). The impact of individual teachers on student achievement: Evidence from panel data. The American Economic Review, 94 (2), 247–252.

Rockoff, J. E., Jacob, B. A., Kane, T. J., & Staiger, D. O. (2011). Can you recognize an effective teacher when you recruit one? Education Finance and Policy, 6 (1), 43–74.

Schmidt, W., Burroughs, N., Cogan, L., & Houang, R. (2017). The role of subject-matter content in teacher preparation: An international perspective for mathematics. Journal of Curriculum Studies, 49 (2), 111–131.

Schmidt, W., Burroughs, N., Zoido, P., & Houang, R. (2015). The role of schooling in perpetuating educational inequality: An international perspective. Educational Researcher, 44 (4), 371–386.

Schmidt, W., McKnight, C., Houang, R., Wang, H., Wiley, D., Cogan, L., et al. (2001). Why schools matter: A cross-national comparison of curriculum and learning. San Francisco, CA: Jossey-Bass.

Shuls, J., & Trivitt, J. (2015). Teacher effectiveness: An analysis of licensure screens. Educational Policy, 29 (4), 645–675.

Staiger, D., & Rockoff, J. (2010). Searching for effective teachers with imperfect information. Journal of Economic Perspectives, 24 (3), 97–118.

Wallace, M. R. (2009). Making sense of the links: Professional development, teacher practices, and student achievement. Teachers College Record, 111 (2), 573–596.

Wayne, A. J., & Youngs, P. (2003). Teacher characteristics and student achievement gains: A review. Review of Educational Research, 73 (1), 89–122.

Wilson, S. M., & Floden, R. E. (2003). Creating effective teachers: Concise answers for hard questions. An addendum to the report “Teacher preparation research: Current knowledge, gaps, and recommendations”. Washington, DC: AACTE Publications.

Wiswall, M. (2013). The dynamics of teacher quality. Journal of Public Economics, 100, 61–78.

Woessman, L. (2003). Schooling resources, educational institutions, and student performance: The international evidence. Oxford Bulletin of Economics and Statistics, 65 (2), 117–170.


Author information

Authors and affiliations.

CREATE for STEM, Michigan State University, East Lansing, MI, USA

Nathan Burroughs

Office of K-12 Outreach, Michigan State University, East Lansing, MI, USA

Jacqueline Gardner

Michigan State University, East Lansing, MI, USA

Youngjun Lee

College of Education, Michigan State University, East Lansing, MI, USA

Israel Touitou

Kimberly Jansen

Center for the Study of Curriculum Policy, Michigan State University, East Lansing, MI, USA

William Schmidt


Corresponding author

Correspondence to Nathan Burroughs.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution-NonCommercial 4.0 International License (http://creativecommons.org/licenses/by-nc/4.0/), which permits any noncommercial use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.


Copyright information

© 2019 International Association for the Evaluation of Educational Achievement (IEA)

About this chapter

Cite this chapter.

Burroughs, N. et al. (2019). A Review of the Literature on Teacher Effectiveness and Student Outcomes. In: Teaching for Excellence and Equity. IEA Research for Education, vol 6. Springer, Cham. https://doi.org/10.1007/978-3-030-16151-4_2


DOI: https://doi.org/10.1007/978-3-030-16151-4_2

Published: 24 May 2019

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-16150-7

Online ISBN: 978-3-030-16151-4

eBook Packages: Education, Education (R0)




Original research article

Preparing teacher training students for evidence-based practice promoting students’ research competencies in research-learning projects

- 1 Department of Education and Psychology, Freie Universität Berlin, Berlin, Germany

- 2 Institute for Secondary Education and Subject Didactics, University College of Teacher Education Vorarlberg, Feldkirch, FK, Austria

Research-learning projects (RLP) enable teacher training students to acquire competencies for evidence-based practice (EBP) in the context of their university studies. The aim of this longitudinal study is to develop, implement, and evaluate an RLP format that promotes EBP competencies in teacher training students. These competencies fall into two categories: using research, which involves reflecting on and using evidence to solve problems in teaching practice, and establishing research, which involves investigating a research question independently by applying research methods. We evaluate the increase in competencies with a self-assessment of competencies (indirect measurement) focusing on establishing research and a competence test (direct measurement) focusing on using research. We also add a retrospective pre-assessment version (quasi-indirect measurement) to account for response shift and for over- or underestimation in self-assessments. Our findings show that teacher training students can be prepared for EBP through RLP. Further development potential for the RLP format is discussed.

Introduction

Teachers in practice encounter findings from national and international student performance studies and comparative assessments on a daily basis in their work (e.g., the Programme for International Student Assessment, PISA; the Trends in International Mathematics and Science Study, TIMSS; or the German comparison tests called “VERgleichsArbeiten,” VERA). Evaluations of teaching quality and evidence-based approaches to school development are also having a growing impact on professional teaching practice (Humpert et al., 2006; Mandinach and Gummer, 2016; Kippers et al., 2018). As a result, the ability to make use of scientific evidence is becoming increasingly crucial to teachers’ classroom practice. The need for evidence-based practice (EBP) among teachers has been the subject of international discussion for some time (e.g., Hargreaves, 1996, 1997; Hammersley, 1997, 2007).

The concept of EBP originated in medicine and was applied to the teaching context in the early 1990s (Hargreaves, 1996, 1997; Sackett et al., 1996; Hammersley, 1997, 2007; Moon et al., 2000). According to Davies (1999), “Evidence-based education means integrating individual teaching and learning expertise with the best available external evidence from systematic research” (p. 117). In Germany, the curricular prerequisites were created in the 2000s, when EBP was anchored in the National Standards for Teacher Education (Kultusministerkonferenz, 2004, 2014). These standards define the competencies that teacher training students should have acquired by the end of their study programs. For universities, this implies that teacher training programs should be designed to prepare teacher training students for their role as evidence-based experts (Mandinach and Gummer, 2016) in their own professional teaching practice.

The majority of teacher training programs in Germany are divided into two university phases: a bachelor’s and a master’s degree program. In both programs, teacher training students study the two subjects they will later teach as well as educational science. After completing their university studies, they become trainee teachers at a school, and after passing the final exams, they can work as teachers. In 2016, an internship semester (a 6-month period of practical training in schools) was introduced into the master’s degree programs at Berlin universities. With it, EBP was anchored in the teacher training curriculum. As a result, students in teacher training are expected to acquire EBP competencies during their studies, that is, before they become trainee teachers at schools. Teaching and learning concepts that support the development of these competencies have been tested and evaluated in Anglo-American contexts (Reeves and Honig, 2015; Green et al., 2016; Reeves and Chiang, 2017; van der Scheer et al., 2017; Kippers et al., 2018). However, only a few such studies have been conducted in Germany to date (Groß Ophoff et al., 2017; Thoren et al., 2020).

This article presents the concept for a course designed to teach teacher training students EBP competencies, as well as the findings from our evaluation study. We begin by discussing the competencies required for EBP. We then look in detail at several important aspects that should be considered in planning a course. The introduction concludes with the course concept.

According to Davies (1999), evidence-based practice competencies can be divided into the categories of using research and establishing research, whereas Borg (2010) divides EBP into the categories of engagement with research and engagement in research. Using research, or engagement with research, comprises reflection on and use of evidence to solve problems in teaching practice. It means knowing where and how to find systematic and comprehensible evidence (for instance, in educational databases) and how to use this evidence to answer questions. Establishing research, or engagement in research, builds on these competencies and additionally requires the ability to investigate a research question independently using basic research methods. This includes, for example, planning and preparing a study, collecting data, and analyzing them. Action research is another form of teacher research and involves going through several investigative cycles (Borg, 2010). In contrast, establishing research (engagement in research) follows the logic of the research process. In the following, we use the terms using research and establishing research proposed by Davies (1999; see Figure 1), but they stand equally for the terms used by Borg (2010).

Figure 1. Relation of using and establishing research.

Establishing research requires competencies in research, comprising (a) Skills in Reviewing the State of Research, (b) Methodological Skills, (c) Skills in Reflecting on Research Findings, (d) Communication Skills, and (e) Content Knowledge (Thiel and Böttcher, 2014; Böttcher and Thiel, 2018; Böttcher-Oschmann et al., 2019). Teachers should be able to

(a) frame questions, search for relevant literature, and evaluate its quality (Ghali et al., 2000; Valter and Akerlind, 2010; Green et al., 2016; Mandinach and Gummer, 2016);

(b) plan and prepare the research process as well as collect and analyze data (Holbrook and Devonshire, 2005; Valter and Akerlind, 2010; Reeves and Honig, 2015; Green et al., 2016; Mandinach and Gummer, 2016; van der Scheer et al., 2017; Kippers et al., 2018);

(c) interpret evidence and draw conclusions for teaching practice (Ghali et al., 2000; Holbrook and Devonshire, 2005; Valter and Akerlind, 2010; Reeves and Honig, 2015; Green et al., 2016; Mandinach and Gummer, 2016; Reeves and Chiang, 2017; Kippers et al., 2018); and

(d) communicate about this evidence (Valter and Akerlind, 2010; Mandinach and Gummer, 2016).

(e) To do this, teachers need knowledge about research, for example, about how to develop a research design (Green et al., 2016).

At Freie Universität Berlin, we developed a learning environment to foster teacher training students’ acquisition of these competencies: the research-learning projects (RLP). When designing the RLP learning environment to promote the development of competencies, we included features that foster students’ cognitive processing and motivation, as well as social interaction among students. At the same time, we wanted the environment to be as authentic and realistic as possible. In the following, we will explain these assumptions in more detail.

To foster the cognitive processing of competencies that are not directly observable, instructors can use methods from the cognitive apprenticeship approach (Collins et al., 1989): cognitive modeling, coaching, and scaffolding. In cognitive modeling, instructors externalize their internally available strategies, for instance, through prompting. This allows students to form a mental representation of a target strategy, such as a strategy for literature review. When students adopt the instructors’ strategy and begin using it on their own, instructors can provide coaching to support them in this process. For example, when students begin their own literature review, instructors’ coaching can help them apply the strategies they have already learned. When students reach the limits of their abilities, instructors can provide scaffolding by giving specific hints (prompts), for example, to use alternative search terms or other databases for the literature review.

Students’ motivation to perform a particular learning activity may depend on both intrinsic and extrinsic motivation (Deci and Ryan, 2000). Factors that influence these types of learning motivation should therefore be integrated into the learning environment. Such factors may include the needs for competence (e.g., feeling satisfied because of perceived progress in a specific competence), autonomy (e.g., being able to self-organize and self-regulate the learning process), and relatedness (e.g., relatedness to a group; Deci and Ryan, 2000).

At the level of social interaction, two elements can be integrated: instructors’ supervision of students and group work. Both support cognitive processing and also foster sustained learning motivation by creating a feeling of social inclusion (Wecker and Fischer, 2014). Instructors’ supervision of students represents the interaction between members of a community of practice with different levels of expertise: instructors are the experienced community members who promote students’ competence development (Reinmann and Mandl, 2006). Moreover, this interaction allows students to engage with the thought patterns, attitudes, and normative standards of this community of practice (Reinmann and Mandl, 2006; Nückles and Wittwer, 2014). Group work enables interaction between members of a community of practice with similar levels of expertise, such as teacher training students in an RLP. Group members can learn and develop their own understanding through social activities that promote learning, such as explaining (and thereby externalizing) their own thoughts to their fellow students (Wecker and Fischer, 2014).

Research-oriented teaching formats (Thiel and Böttcher, 2014; Böttcher and Thiel, 2018), such as the RLP format, are suitable for designing learning opportunities around authentic and realistic situations. Students learn to establish research by going through either individual phases of the research process or the entire process independently, and they develop the routines needed to meet the demands of this process. Instructors support and advise students throughout these phases. In addition, students regularly switch out of this active role into a receptive one, in which instructors impart knowledge about the specific phases of the research process. The learning environment is authentic and realistic because students either participate actively in research, for example, in a research internship, or go through the entire research process themselves as part of an RLP.

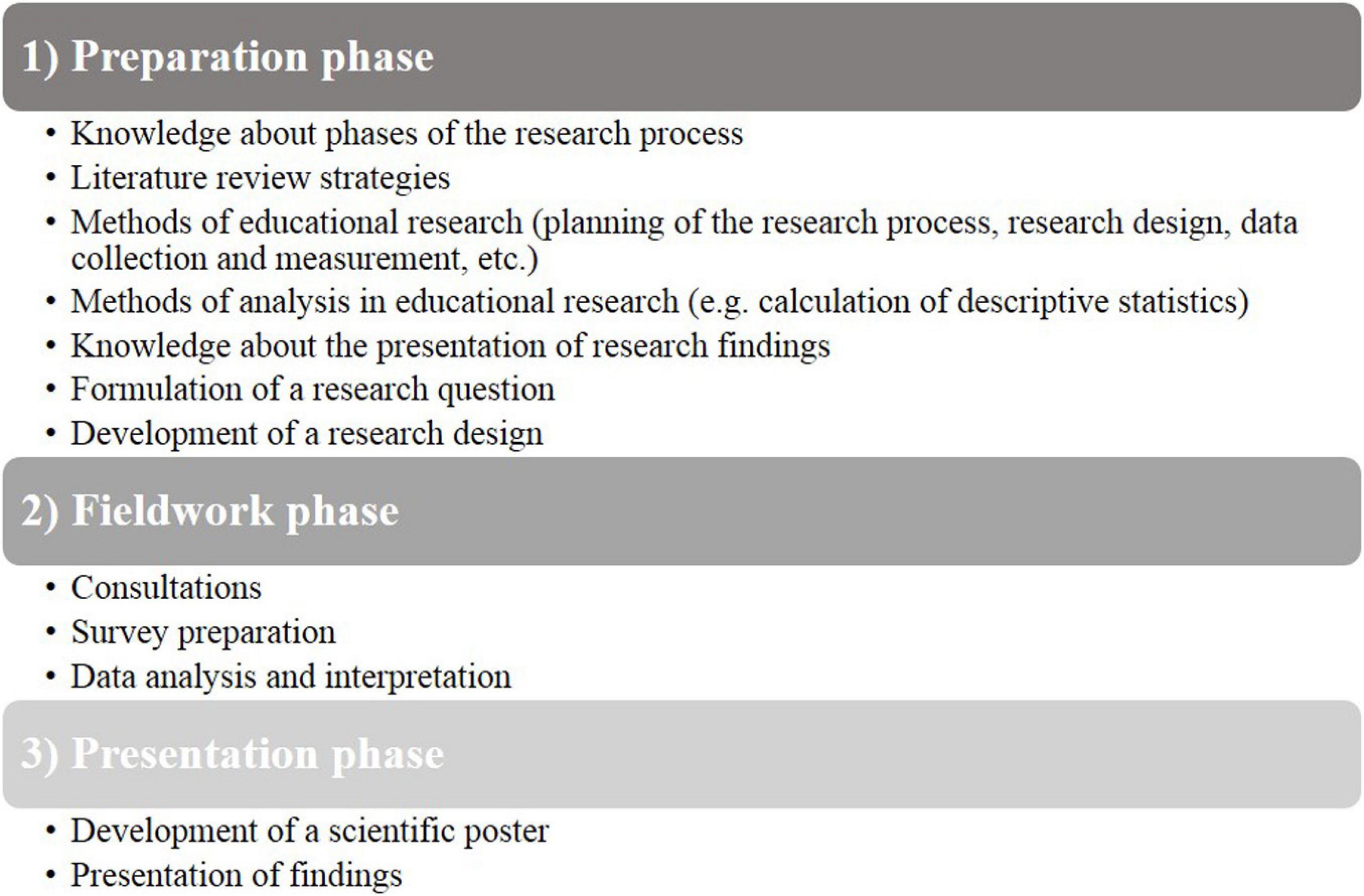

The RLP course concept is based on the aforementioned assumptions about the design of learning environments and research-oriented teaching formats. The aim is for teacher training students to be able to evaluate their own teaching quality on the basis of the EBP competencies they acquire. To reduce the complexity of the topic of teaching quality, students could choose from three subtopics: instructional quality, learning motivation, or classroom management. The course is divided into three phases: (1) preparation, (2) fieldwork, and (3) presentation (see Figure 2). During the first phase, preparation, instructors impart knowledge about the phases of the research process. These are the literature review, educational research methods¹ (planning of the research process, research design, data collection and measurement, etc.), analytical procedures (e.g., calculation of descriptive statistics), and the presentation of findings. Students form groups, choose one of the three subtopics, and start working on a literature review, formulating their research question, and developing an appropriate research design. In this phase, modeling promotes the development of Content Knowledge, Skills in Reviewing the State of Research, and Methodological Skills.

Figure 2. The three phases of a research-learning project (RLP).

In the second phase, fieldwork, students prepare their studies and carry out data analysis and interpretation. They come to the university for two consultation sessions in which instructors provide coaching and scaffolding to support their process. In this phase, instructors focus on the development of Methodological Skills and Skills in Reflecting on Research Findings.

In the third phase, presentation, students create a scientific poster and present their findings. In this phase, the emphasis is on the development of Communication Skills.

The following aspects foster the development of EBP competencies in RLP. The students go through the research process independently in an authentic and realistic field of action, here, the field of research. They receive advice (prompts) from instructors when they reach the limits of their abilities, which enables them to experience a feeling of competence. The feeling of autonomy is strengthened by the large degree of freedom students have in the research process (to independently formulate their research question, develop a research design, and select instruments). Relatedness is encouraged by having students go through the research process in groups with support from instructors. In this social context, students have the opportunity to be socialized into the scientific community of practice: first, through interaction with instructors, who represent experienced researchers, and second, through interaction with their fellow students.

With the introduction of an internship semester in 2016, EBP was anchored in the teacher training curriculum at Berlin universities. This implemented the requirement of the National Standards for Teacher Education (Kultusministerkonferenz, 2004, 2014) that teacher training students be prepared for EBP during their studies. Hence, the RLP format was introduced at Berlin universities. At Freie Universität Berlin, we then defined the competencies needed for EBP and developed and implemented an RLP learning environment to foster their acquisition. In this study, we evaluate whether teacher training students can acquire EBP competencies in the newly developed RLP format. The study design and findings are presented below.

Materials and Methods

Study Design and Sampling

In a longitudinal study, data were collected at Freie Universität Berlin in the winter semester of 2016/17: at the beginning of the semester, in October 2016, and at the end of the semester, in February 2017. From September 2016 to February 2017, teacher training students completed their internship². The goal of this internship is for teacher training students in the master’s program to gain their first teaching experience before becoming trainee teachers with greater responsibility in schools. During the winter semester, teacher training students attended university courses in parallel, including the RLP format described above.

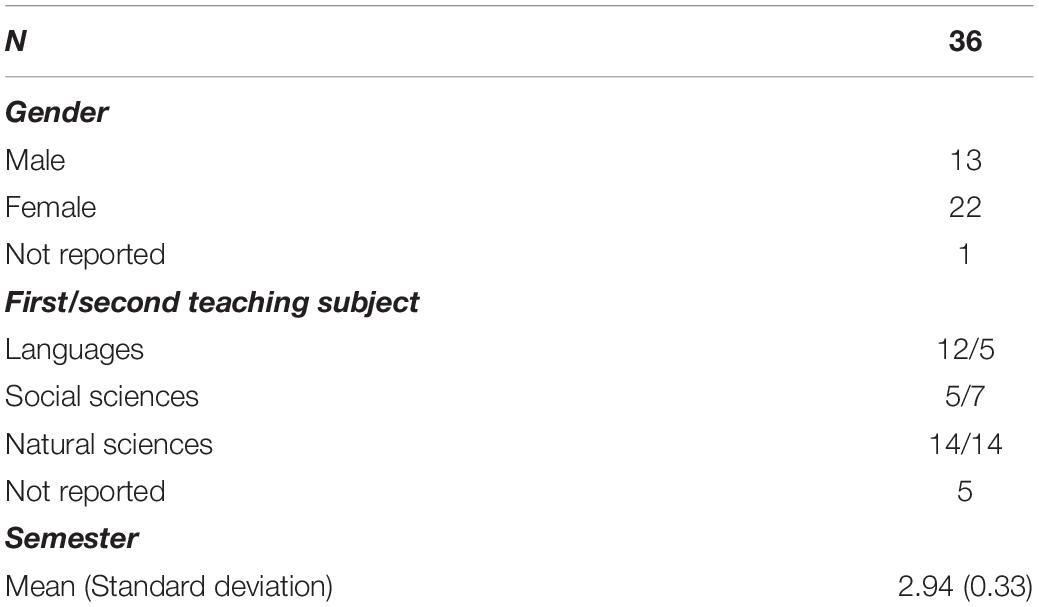

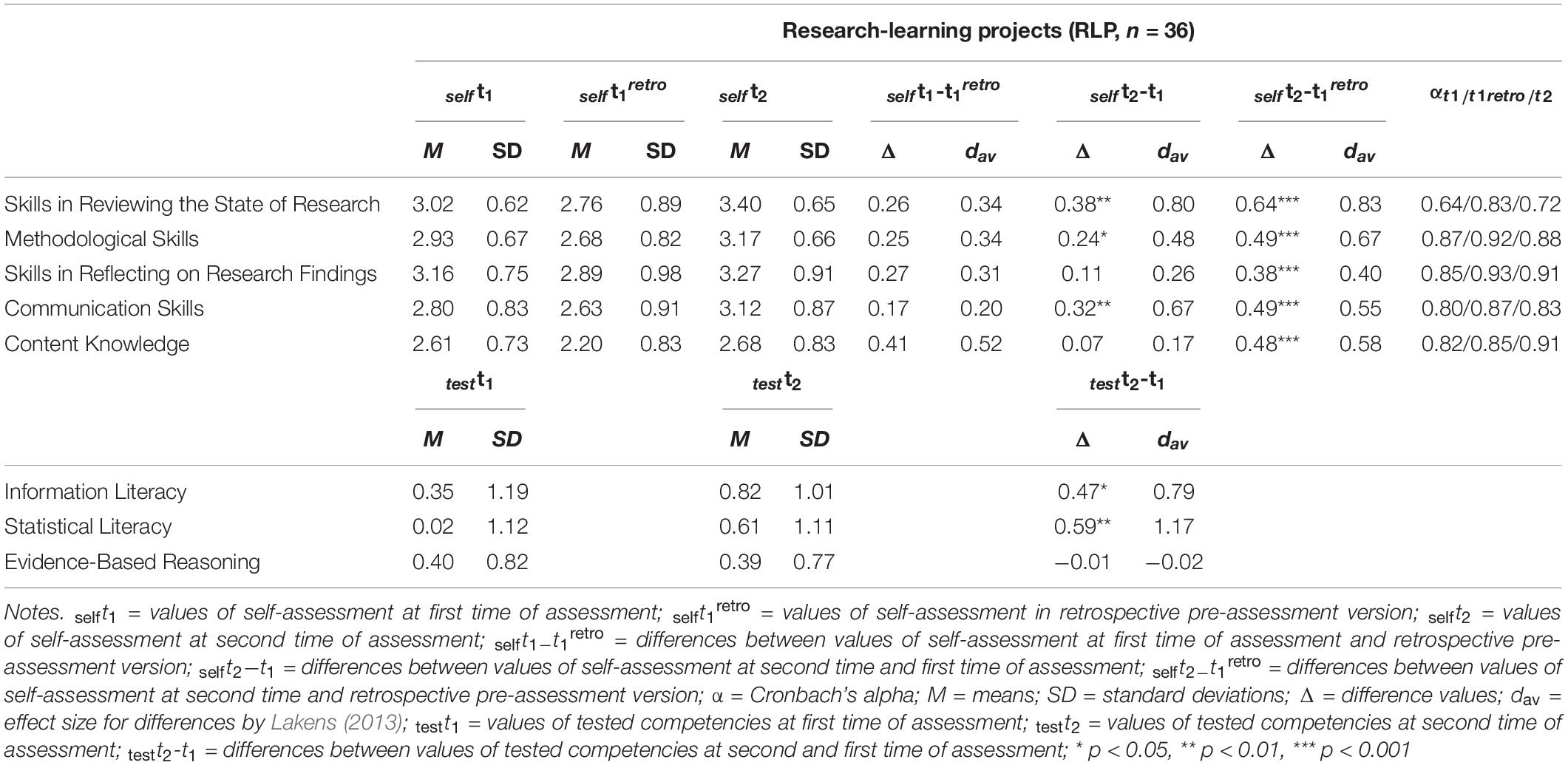

Ninety-seven teacher training students participated at the first point of assessment and 78 at the second. These students were distributed among nine seminars taught by five instructors. For the longitudinal analysis, we selected the students who had taken part in both waves of the survey (n = 36; see Table 1). During the internship semester, most RLP students were in the third semester of their master’s studies (M = 2.94, SD = 0.33).
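Selecting the longitudinal sample amounts to intersecting the two waves on a participant identifier. The sketch below is illustrative only. It assumes that each questionnaire carried an anonymized code that could be matched across waves; the article does not describe how matching was done. The IDs are synthetic and were constructed solely to reproduce the reported counts (97 at t1, 78 at t2, 36 in both).

```python
def longitudinal_sample(wave1_ids, wave2_ids):
    """Return the sorted IDs present in both waves and the share of
    wave-1 participants who also took part in wave 2."""
    wave1 = set(wave1_ids)
    both = sorted(wave1 & set(wave2_ids))
    retention = len(both) / len(wave1)
    return both, retention


# Synthetic, hypothetical anonymized codes (not real data):
# 97 codes at t1; at t2, 36 of those codes plus 42 codes without a t1 record.
wave1 = [f"S{i:03d}" for i in range(97)]
wave2 = [f"S{i:03d}" for i in range(61, 97)] + [f"N{i:03d}" for i in range(42)]

both, retention = longitudinal_sample(wave1, wave2)
print(len(wave2), len(both), f"{retention:.0%}")  # 78 36 37%
```

The ratio 36/97 ≈ 37% is the retention figure reported in the Missing Values section.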

Table 1. Characteristics of participants in the longitudinal sample.

It should be noted that the sample of teacher training students is subject to a certain degree of pre-selection bias, because students could choose to register for the RLP course on their own. Each seminar had a maximum of 15 places. Students were assigned first on the basis of their preferences and, if a seminar was overbooked, randomly to one of the remaining seminars. Hence, this is a non-randomized sample.
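The allocation rule (preference first, random placement on overbooking) can be sketched as a small procedure. The article states only the rule. The order in which students are processed, the single preferred seminar per student, and every name below (`allocate`, seminar labels, student codes) are hypothetical assumptions made for illustration.

```python
import random
from collections import Counter


def allocate(preferences, seminars, capacity=15, seed=0):
    """Hypothetical sketch of preference-first seminar allocation.

    preferences: dict mapping a student ID to a preferred seminar.
    Students are processed in random order (an assumption; the article does not
    say how competing requests were ordered). A student gets the preferred
    seminar if it still has a free place; otherwise the student is placed at
    random in one of the seminars that still have capacity.
    """
    rng = random.Random(seed)
    load = {s: 0 for s in seminars}
    assignment = {}
    students = list(preferences)
    rng.shuffle(students)
    for student in students:
        choice = preferences[student]
        if choice not in load or load[choice] >= capacity:
            open_seminars = [s for s in seminars if load[s] < capacity]
            if not open_seminars:
                raise ValueError("not enough seminar places for all students")
            choice = rng.choice(open_seminars)
        load[choice] += 1
        assignment[student] = choice
    return assignment


# Worst-case illustration: 97 students who all prefer the same seminar.
seminars = [f"Seminar {k}" for k in range(1, 10)]
prefs = {f"S{i:03d}": "Seminar 1" for i in range(97)}
result = allocate(prefs, seminars)
counts = Counter(result.values())
print(counts["Seminar 1"], max(counts.values()) <= 15)  # 15 True
```

Because students who are not placed by preference are assigned at random, the resulting groups are not a random sample. This is why the text calls the sample non-randomized.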

The studies involving human participants were reviewed and approved by the Ethikkommission der Freien Universität Berlin Fachbereich Erziehungswissenschaft und Psychologie (the Ethics Committee of Freie Universität Berlin Department of Education and Psychology, own translation). Written informed consent from the participants was not required to participate in this study in accordance with the national legislation and the institutional requirements.

Measures and Data Collection

To evaluate competence development, we combined a self-assessment of competencies with competence testing. Following Lucas and Baird (2006), we used the two methods together so that each compensates for the specific weaknesses of the other. Self-assessments are prone to misjudgments or tendencies toward bias (Lucas and Baird, 2006; Chevalier et al., 2009). Competence tests, because of time limitations, tend to focus solely on selected areas of knowledge (Cramer, 2010; Mertens and Gräsel, 2018).

Self-Assessment of Competencies

Participants self-assessed their competencies with the instrument for assessing student research competencies (R-Comp; Böttcher and Thiel, 2016, 2018). The R-Comp consists of 32 items (with a five-point response scale ranging from “1 – strongly disagree” to “5 – strongly agree”) on five scales: Skills in Reviewing the State of Research (four items; α = 0.87; e.g., “I am able to systematically review the state of research regarding a specific topic.”), Methodological Skills (eight items; α = 0.88; e.g., “I am able to decide which data/sources/materials I need to address my research question.”), Skills in Reflecting on Research Findings (six items; α = 0.92; e.g., “I am able to critically reflect on methodological limitations of my own research findings.”), Communication Skills (five items; α = 0.89; e.g., “I am able to write a publication in accordance with the standards of my discipline.”), and Content Knowledge [nine items; α = 0.88; e.g., “I am informed about the main (current) theories in my discipline.”]. The R-Comp thus measures the competencies necessary for the entire research process and therefore focuses on establishing research (Davies, 1999). However, the R-Comp is a cross-disciplinary instrument (Böttcher and Thiel, 2016, 2018). To address the research process in educational research specifically, the R-Comp included instructions asking students to answer the items with respect to their studies in education. This was intended to ensure that students self-assessed their research competencies in the specific area of educational research.

Self-assessment of competencies brings with it certain problems. These include overestimation and underestimation of competencies (Böttcher-Oschmann et al., 2019) and the phenomenon of response shift (“a change in the meaning of one’s self-evaluation of a target construct,” Schwartz and Sprangers, 1999, p. 1532; Schwartz and Sprangers, 2010; Piwowar and Thiel, 2014). Therefore, a retrospective pre-assessment version of the R-Comp (Schwartz and Sprangers, 1999, 2010) was additionally administered at the second point of assessment. In this version, the items were reformulated to ask students to rate their skills and knowledge as they had been “before the RLP.” First, this ensured that the same internal standards were used for both self-assessments completed at the second point of assessment (Schwartz and Sprangers, 2010), which prevents response shift from biasing the results. Second, the retrospective pre-assessment version gave students the opportunity to reflect on their own increase in competencies (Hill and Betz, 2005).

Competence Testing

We used the test instrument for assessing Educational Research Literacy (ERL; Groß Ophoff et al., 2014, 2017) to measure student competencies. Because this test was developed specifically for teacher training students, we were able to use it without any changes. It should be noted that the test measures only using research (Davies, 1999; Groß Ophoff et al., 2017). Two test booklets were used: a pretest version with 18 items (Information Literacy: 7 items; Statistical Literacy: 7 items; Evidence-Based Reasoning: 4 items) and a posttest version with 17 items (Information Literacy: 7 items; Statistical Literacy: 5 items; Evidence-Based Reasoning: 5 items). Information Literacy items addressed the use of research strategies, and Statistical Literacy items consisted of statistical/numerical tasks; both were mainly in multiple-choice format. Evidence-Based Reasoning items included, for example, two abstracts that had to be evaluated regarding the admissibility of several statements. Test items were selected from a pool of 193 items that had been standardized in a large-scale study (N_i = 1360; cf. Groß Ophoff et al., 2014). In selecting items, care was taken to ensure a broad spectrum of competencies and sufficient discriminatory power (M(r_it) = 0.31).
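The discrimination statistic M(r_it) is the mean of the items' item-total correlations. The article does not say whether the corrected form was used, in which each item is correlated with the sum of the other items. The sketch below assumes the corrected form. Its small response matrix is invented for illustration and has no connection to the ERL data.

```python
from statistics import mean


def pearson(x, y):
    """Pearson correlation of two equally long numeric sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5  # undefined if either variable is constant


def corrected_item_total(responses):
    """responses: one list of 0/1 item scores per person.
    Returns, for each item, its correlation with the sum of the remaining items."""
    n_items = len(responses[0])
    r_it = []
    for j in range(n_items):
        item = [person[j] for person in responses]
        rest = [sum(person) - person[j] for person in responses]
        r_it.append(pearson(item, rest))
    return r_it


# Invented responses of five persons to four dichotomous items.
data = [
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 1, 1],
    [0, 0, 0, 1],
    [0, 0, 0, 0],
]
r = corrected_item_total(data)
print([round(v, 2) for v in r], round(mean(r), 2))
```

Excluding the item from its own total avoids inflating r_it, which matters most for short scales such as the four- to seven-item ERL subscales.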

Self-assessment and competence testing were carried out before (t1) and after (t2) the RLP as a paper-and-pencil survey. At t2, the self-assessment was additionally administered in the retrospective pre-assessment version (t1retro). Before both surveys, students were informed about the study’s aims and the voluntary nature of participation, and their anonymity was guaranteed. At the end of each survey, students provided some personal data (gender, first and second teaching subject³, and number of semesters).

Statistical Characteristics

For each version of the R-Comp (first point of assessment: self t1; second point of assessment: self t2; retrospective pre-assessment version: self t1retro), five scale scores were calculated according to the five dimensions of competence in the RMRC-K model. The hierarchical structure of the empirical model (Böttcher and Thiel, 2018) was thus taken into account. Internal consistency for all R-Comp scales was evaluated using Cronbach’s α.
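Cronbach's α compares the sum of the item variances with the variance of the scale total: α = k/(k − 1) · (1 − Σ var(item) / var(total)). The sketch below computes it on complete cases. It also computes a per-person scale score as the mean of the answered items. That averaging rule is an assumption made here for illustration, since the article does not state how scale scores were formed. The two-item data are invented.

```python
from statistics import mean, variance


def cronbach_alpha(items_by_person):
    """Cronbach's alpha for k items, computed on complete cases.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total score),
    using sample variances throughout (the ratio is unaffected by that choice).
    """
    k = len(items_by_person[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_vars = [variance([person[j] for person in items_by_person]) for j in range(k)]
    total_var = variance([sum(person) for person in items_by_person])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def scale_score(responses):
    """Mean of the answered items of one scale; None marks an unanswered item.
    Averaging over answered items is an assumed scoring rule, not the authors'."""
    answered = [x for x in responses if x is not None]
    return mean(answered)


# Invented two-item example on the 1 to 5 response scale.
x = [[1, 2], [2, 1], [3, 4], [4, 3]]
print(round(cronbach_alpha(x), 2))   # 0.75
print(scale_score([4, 5, None, 3]))  # 4
```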

Test instrument for assessing Educational Research Literacy

Scale scores were computed using the three-dimensional ERL model (Information Literacy, Statistical Literacy, and Evidence-Based Reasoning), which is viable for course evaluation (cf. Groß Ophoff et al., 2017). Person measures for each sub-dimension were determined from the dichotomously scored items (“1 = correctly solved,” “0 = not correctly solved”) using the weighted likelihood estimator (WLE; Warm, 1989). The person measures were estimated from the manifest data using a maximum likelihood function (Hartig and Kühnbach, 2006; Strobl, 2010). Item difficulties were fixed (external anchor design; see Wright and Douglas, 1996; Mittelhaëuser et al., 2011) so that results from this study could be compared with those from the standardization study (Groß Ophoff et al., 2014).
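Warm's weighted likelihood estimator adds a bias-correcting term to the Rasch likelihood equation. Consider dichotomous Rasch items with fixed (anchored) difficulties b_i and P_i(θ) = 1 / (1 + exp(−(θ − b_i))). The WLE is then the θ that satisfies Σ(x_i − P_i) + J / (2I) = 0. Here I = Σ P_i(1 − P_i) is the test information and J = Σ P_i(1 − P_i)(1 − 2P_i) is its derivative with respect to θ. The sketch below finds this root by bisection, chosen for simplicity. The four difficulties are hypothetical and are not the ERL anchor values.

```python
import math


def rasch_p(theta, b):
    """Probability of a correct response under the dichotomous Rasch model."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def wle_equation(theta, responses, difficulties):
    """Left-hand side of Warm's (1989) estimating equation for the Rasch model:
    sum(x - P) + J / (2 * I), with I = sum P(1-P) and J = sum P(1-P)(1-2P)."""
    residual = info = j_term = 0.0
    for x, b in zip(responses, difficulties):
        p = rasch_p(theta, b)
        residual += x - p
        info += p * (1.0 - p)
        j_term += p * (1.0 - p) * (1.0 - 2.0 * p)
    return residual + j_term / (2.0 * info)


def wle(responses, difficulties, lo=-15.0, hi=15.0, tol=1e-10):
    """Bisection search for the WLE. For moderate difficulties the equation is
    positive at `lo` and negative at `hi`, so a root is bracketed."""
    f_lo = wle_equation(lo, responses, difficulties)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = wle_equation(mid, responses, difficulties)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


difficulties = [-1.5, -0.5, 0.5, 1.5]  # hypothetical anchored item difficulties
for pattern in ([0, 0, 0, 0], [1, 1, 0, 0], [1, 1, 1, 1]):
    print(pattern, round(wle(pattern, difficulties), 3))
```

Unlike the plain maximum likelihood estimate, the WLE stays finite for all-incorrect and all-correct response patterns. This is one reason the estimator suits short subscales. Because the difficulties are passed in rather than estimated, person measures land on the scale of whatever anchor values are supplied.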

Difference Values and Effect Sizes

To compare differences (Δ) between the points of assessment, the following values were calculated. For the self-assessment:

(a) Δself t2 – t1, to determine indirect differences in competencies;

(b) Δself t2 – t1retro, to determine quasi-indirect differences in competencies, adjusted for response shift via the retrospective assessment; and

(c) Δself t1 – t1retro, to identify response shift (difference values not equal to zero) and misjudgments. Positive difference scores indicate overestimation and negative difference scores indicate underestimation (Schwartz and Sprangers, 2010).

For the competence test, (d) Δtest t2 – t1 was calculated to determine direct differences in competencies.
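The three self-assessment differences are linked by an identity: (t2 − t1) = (t2 − t1retro) − (t1 − t1retro). A positive value of (c), indicating initial overestimation, therefore makes the indirect difference (a) smaller than the quasi-indirect difference (b). The sketch below computes (a) to (d) and the sign-based reading of (c). The input values are invented, and the function names are illustrative.

```python
def difference_values(self_t1, self_t1retro, self_t2, test_t1, test_t2):
    """Difference scores (a) to (d) for one person or one scale mean."""
    return {
        "a_indirect": self_t2 - self_t1,
        "b_quasi_indirect": self_t2 - self_t1retro,
        "c_shift_or_misjudgment": self_t1 - self_t1retro,
        "d_direct_test": test_t2 - test_t1,
    }


def judge(c, tolerance=0.0):
    """Read difference (c): positive suggests overestimation at t1,
    negative suggests underestimation, (near) zero suggests neither."""
    if c > tolerance:
        return "overestimation"
    if c < -tolerance:
        return "underestimation"
    return "no misjudgment"


# Invented scale means (1 to 5 scale) and test person measures, for illustration only.
d = difference_values(self_t1=3.5, self_t1retro=3.0, self_t2=4.0, test_t1=-0.25, test_t2=0.5)
print(d)
print(judge(d["c_shift_or_misjudgment"]))  # overestimation
```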

Effect sizes for the differences were calculated as d_av, following the recommendations of Lakens (2013). The effects were interpreted in line with Cohen’s (1992) benchmarks: ≥0.20 “small,” ≥0.50 “medium,” and ≥0.80 “large.”
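For a within-subject comparison, Lakens' (2013) d_av divides the mean difference by the average of the two standard deviations: d_av = (M2 − M1) / ((SD1 + SD2) / 2). The sketch below applies it to invented paired scores and labels the result with the benchmarks quoted above. Values below 0.20 are labelled "no effect" here to mirror the wording used in the results, which is an assumption about how such values were reported.

```python
from statistics import mean, stdev


def d_av(before, after):
    """Lakens' (2013) d_av for a within-subject comparison:
    mean difference divided by the average of the two standard deviations."""
    mean_diff = mean(after) - mean(before)
    sd_average = (stdev(before) + stdev(after)) / 2
    return mean_diff / sd_average


def cohen_label(d):
    """Cohen's (1992) benchmarks; values below 0.20 are reported as 'no effect'."""
    size = abs(d)
    if size >= 0.80:
        return "large"
    if size >= 0.50:
        return "medium"
    if size >= 0.20:
        return "small"
    return "no effect"


before = [1, 2, 3]  # invented paired scores
after = [2, 3, 4]
effect = d_av(before, after)
print(effect, cohen_label(effect))  # 1.0 large
```

Lakens (2013) also describes other within-subject variants, such as d_z, which standardizes by the standard deviation of the difference scores. The variants can differ noticeably when pre- and post-scores are highly correlated.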

Longitudinal Analyses

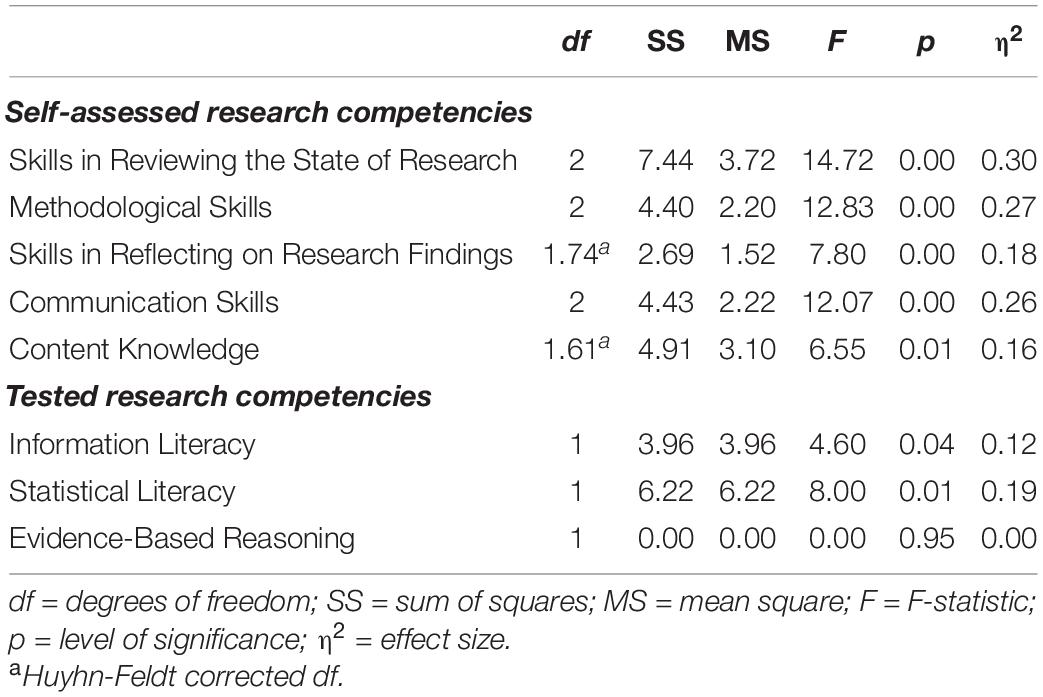

Multivariate analyses of variance (MANOVA) with repeated measures were conducted in SPSS 25. Because of the small sample size, the hierarchical structure of the data was not taken into account. MANOVA with repeated measures was performed according to the general linear model (GLM) with the factor time. If Mauchly’s test indicated that the sphericity assumption was violated, Huynh-Feldt corrected degrees of freedom were used (see Table 3; Field, 2009). Univariate analyses were then performed to identify main effects. Additionally, pairwise comparisons between factor levels were made with a Bonferroni correction to account for multiple comparisons. The overall significance level was set at p = 0.05. Effect sizes η² were interpreted according to Cohen’s (1988) benchmarks: ≥0.01 “small,” ≥0.06 “medium,” and ≥0.14 “large.”
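Two of the steps above reduce to simple arithmetic. The Bonferroni correction multiplies each p-value by the number of comparisons (capped at 1), or equivalently divides α by that number. An η² value can then be labelled with Cohen's benchmarks. SPSS's GLM procedure reports partial η², which can be recovered from an F ratio as F·df_effect / (F·df_effect + df_error). Whether the article's η² values are partial is not stated, so that conversion is an assumption here. All p-values and the F test below are hypothetical, and the Huynh-Feldt sphericity correction is not sketched.

```python
from itertools import combinations


def bonferroni(p_values):
    """Bonferroni-adjusted p-values: multiply by the number of comparisons, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return f_value * df_effect / (f_value * df_effect + df_error)


def eta_label(eta2):
    """Cohen's (1988) benchmarks for eta squared."""
    if eta2 >= 0.14:
        return "large"
    if eta2 >= 0.06:
        return "medium"
    if eta2 >= 0.01:
        return "small"
    return "below small"


# Three levels of the factor time in the self-assessment MANOVA give three pairwise comparisons.
pairs = list(combinations(["t1", "t1retro", "t2"], 2))
raw_p = [0.004, 0.020, 0.300]  # hypothetical unadjusted p-values, one per pair
for pair, p_adj in zip(pairs, bonferroni(raw_p)):
    print(pair, round(p_adj, 3), p_adj < 0.05)

eta2 = partial_eta_squared(f_value=4.0, df_effect=1, df_error=35)  # hypothetical F test
print(round(eta2, 3), eta_label(eta2))
```

In this invented example, the second comparison (raw p = 0.020) stops being significant after adjustment. This illustrates how the correction trades power for protection against false positives across the three pairwise tests.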

For the analyses of the self-assessment, the MANOVA included the time points of assessment t1, t1retro, and t2 for the five R-Comp scales. Although t1retro is a theoretical time point of assessment, it was included in the MANOVA to investigate the occurrence of response shift. For the analyses of the competence test, the MANOVA included test values for the three ERL scales at t1 and t2.

Missing Values

Non-response occurs when participants either do not take part at one of the time points or do not answer individual items (Sax et al., 2003). Only 37% of the students who took part at the first time point remained in the sample at the second time point. There was no systematic dropout by students’ personal characteristics or competencies at the first time point.⁴ In the self-assessment of competencies, no variable had more than two unanswered items at any (theoretical) time point of assessment, and these were not imputed or replaced. For the analyses of the competence test, omissions were not imputed or replaced but treated as missing values (Groß Ophoff et al., 2017). For the personal information, 9.7% of values were missing overall, most often for the first (10.2%) and second (22.0%) teaching subject.⁵

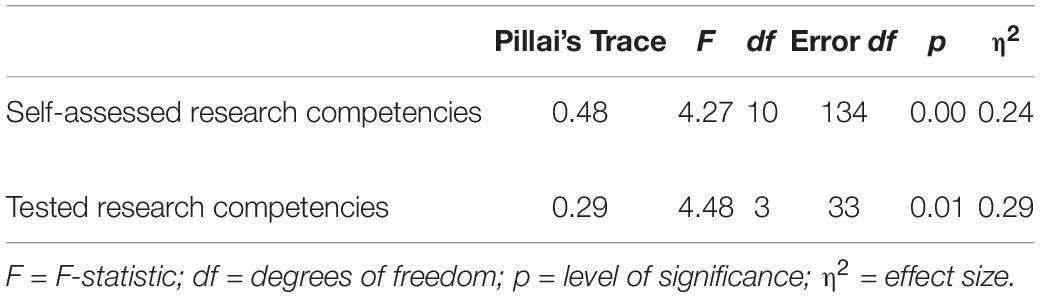

Self-Assessed Competencies

For self-assessed research competencies, MANOVA revealed a significant and large multivariate effect (Table 2). Univariate analyses indicated that this effect was driven by all five skill and knowledge dimensions, each showing a large effect (Table 3). To see how these effects are distributed across the individual time points of measurement, we consider the differences in mean values below. All mean values, standard deviations, differences in mean values, and effect sizes are given in Table 4.

Table 2. Multivariate effects for self-assessed and tested research competencies.

Indirect differences in competencies (Δself t2 – t1): Significant increases occurred in Skills in Reviewing the State of Research, Methodological Skills, and Communication Skills. Skills in Reviewing the State of Research showed a large effect, Communication Skills a medium effect, Methodological Skills and Skills in Reflecting on Research Findings a small positive effect, and Content Knowledge no effect.

Quasi-indirect differences in competencies (Δself t2 – t1retro): Significant increases occurred in all skill and knowledge scales. Skills in Reviewing the State of Research showed a large effect; Methodological Skills, Communication Skills, and Content Knowledge a medium effect; and Skills in Reflecting on Research Findings a small effect.

Differences indicating response shift and overestimation of competencies (Δself t1 – t1retro): Positive but non-significant differences occurred. Content Knowledge showed a medium effect, and all skill scales a small effect. All identified effects indicate initial overestimation.

Tested Competencies

For tested research competencies, MANOVA revealed a significant and large multivariate effect (Table 2). Univariate analyses indicated that this effect was driven by Information Literacy, with a medium effect, and Statistical Literacy, with a large effect (Table 3). These results are consistent with the differences in mean values (direct differences in competencies; Δtest t2 – t1). Significant increases occurred for Information Literacy and Statistical Literacy, both with large positive effects, whereas Evidence-Based Reasoning showed no effect.

Table 3. Univariate effects for self-assessed and tested research competencies.

Table 4. Mean values, standard deviations, differences in mean values, effect sizes, and Cronbach’s alpha.

The aim of this study was to develop, implement, and evaluate an RLP format to promote EBP in teacher training students, enabling them to acquire competencies for EBP in the context of their university studies. These competencies can be broken down into the categories of using research, which involves reflecting on and using evidence to solve problems in teaching practice, and establishing research, which involves investigating a research question independently by applying research methods. We conducted a longitudinal study to evaluate the increase in competencies based on a self-assessment of competencies (indirect measurement) focusing on establishing research, and a competence test (direct measurement) focusing on using research. We also added a retrospective pre-assessment version of the self-assessment (quasi-indirect measurement) to account for response shift and for overestimation or underestimation in self-assessments.

Overall, the results show increases in the competencies examined, albeit to varying degrees. The indirect measurement showed that teacher training students in the RLP perceived an increase in Skills in Reviewing the State of Research, Methodological Skills, and Communication Skills. Moreover, the quasi-indirect measurement indicated that students perceived an increase in all skill dimensions as well as in Content Knowledge. Thus, a difference between indirect and quasi-indirect increases in competencies became apparent, indicating the occurrence of a response shift. The direct measurement showed that students improved in Information Literacy and Statistical Literacy. The results of the competence test correspond to those of the self-assessment: Students showed increases in related dimensions, namely Information Literacy and Skills in Reviewing the State of Research, as well as Statistical Literacy and Methodological Skills. In general, students seemed to benefit from the RLP format in every aspect of competence except Skills in Reflecting on Research Findings and Evidence-Based Reasoning.

In summary, the RLP gave teacher training students the opportunity to go through the entire research process themselves. The similar increases found in the self-assessment and the competence test can be readily explained: While the self-assessment with R-Comp deals with establishing research, the competence test deals with using research, and thus with one facet of establishing research. This indicates that in the RLP, students learned not only to apply evidence but also to generate evidence themselves in order to use it in teaching practice. Structuring the seminar around the research process, with close supervision by instructors, seems to have contributed to students' acquisition of EBP competencies. Only in the areas of Interpreting Evidence and Drawing Conclusions for Practice did the students appear not to have benefited from the RLP. On the one hand, the RLP did not explicitly promote reasoning through targeted exercises; the interpretation of results and reflection on their implications were addressed only in consultations about students' concrete findings. Since people rate their competencies in a more differentiated way as their experience increases (Bach, 2013; Mertens and Gräsel, 2018), the students may not yet have been able to assess their competencies in this area accurately. On the other hand, reasoning is an extremely complex process, and the corresponding test items are therefore highly challenging (Groß Ophoff et al., 2014, 2017). In the future, we will adapt the RLP format to take this into account. It will include specific exercises on reflection and argumentation, on how to contextualize one's own findings in relation to current research, and on how to draw conclusions and implications for one's own practice. The work of Fischer et al. (2014) on scientific reasoning provides important insights in this respect.

The combination of self-assessments of competencies and a competence test allowed us to gain a comprehensive picture of the increase in competence. Each method has its own advantages and disadvantages, and the two therefore complement each other well (Lucas and Baird, 2006). Although self-assessments may be inaccurate due to misjudgment or bias (Lucas and Baird, 2006; Chevalier et al., 2009), they may still affect people's actions (Bach, 2013). Moreover, the quasi-indirect measurement of competence may have an impact on self-efficacy beliefs (Hill and Betz, 2005). As self-assessed research competencies are highly correlated with self-efficacy beliefs (Mertens and Gräsel, 2018; Böttcher-Oschmann et al., 2019), it would be desirable if both the self-evaluation of competence and the belief in having successfully completed the research process had positive effects on future EBP. Future studies could include a follow-up survey to examine whether these effects translate into practice.

Through the additional use of the retrospective pre-assessment version of R-Comp, differences in the increase in competence were identified between indirect and quasi-indirect measurement. These differences indicate that a response shift (Schwartz and Sprangers, 1999, 2010; Sprangers and Schwartz, 1999) and misjudgments (Kruger and Dunning, 1999; Lucas and Baird, 2006) occurred in the longitudinal measurement of self-assessed competencies. First, the fact that the difference between the first measurement point and the retrospective estimate is not equal to zero indicates a response shift. This assumption is supported by the fact that the quasi-indirect, adjusted difference values differ from the indirect difference values: In indirect measurement, two dimensions (Skills in Reflecting on Research Findings and Content Knowledge) did not change significantly over time, whereas in the quasi-indirect, adjusted measurement, an increase was found in all areas. It seems that the individuals' internal standards of measurement changed in these dimensions, that is, a recalibration response shift occurred (Schwartz and Sprangers, 1999, 2010; Sprangers and Schwartz, 1999). Second, students overestimated their competencies prior to the RLP. The reason for this initial overestimation may be the students' low level of experience (Mertens and Gräsel, 2018) at the beginning of the semester. Students might therefore have had only a vague idea of research competencies and misjudged themselves, although some of them achieved good test scores. By the end of the semester, students may have been better able to evaluate themselves in a differentiated way.
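The relation between the three difference scores can be made explicit with a simple identity on mean self-ratings. The notation is ours, not the authors': x̄ with subscript t₁, t₁ retro, or t₂ denotes the mean self-assessed score on one dimension at the first measurement point, in the retrospective pre-assessment, and at the second measurement point. The identity holds for raw mean differences only; standardized effect sizes use different denominators and need not decompose the same way.

```latex
\begin{aligned}
\Delta_{\text{indirect}} &= \bar{x}_{t_2} - \bar{x}_{t_1} \\
&= \left(\bar{x}_{t_2} - \bar{x}_{t_1}^{\text{retro}}\right) - \left(\bar{x}_{t_1} - \bar{x}_{t_1}^{\text{retro}}\right) \\
&= \Delta_{\text{quasi-indirect}} - \Delta_{\text{shift}}
\end{aligned}
```

Read this way, a positive Δshift (initial overestimation) makes the indirect difference smaller than the quasi-indirect one, which fits the pattern of dimensions that showed no significant indirect change but a quasi-indirect increase.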

Some limitations of our study need to be considered. First, the explanatory power is limited by the small sample size. This can be seen, for example, in the fact that large effects sometimes did not reach statistical significance. Although a larger sample was originally planned, the high dropout rate meant that only a small number of students could be reached at both time points. Students may have been fatigued by the other surveys that took place at the university at the same time (Sax et al., 2003).
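Why a large effect can miss significance in a small sample can be seen from the paired t statistic. This is an illustration under stated assumptions: it supposes that a mean difference is tested with a paired t-test and that the effect size is d_z (the mean difference divided by the standard deviation of the differences); the sample size of n = 8 is hypothetical and not the study's.

```latex
t = \frac{\bar{d}}{s_d / \sqrt{n}} = d_z \sqrt{n},
\qquad d_z = 0.8,\; n = 8:\quad t = 0.8 \times \sqrt{8} \approx 2.26 < t_{0.975}(7) \approx 2.365
```

With these numbers, a conventionally large effect would not reach p < .05 (two-tailed), because the test statistic grows only with the square root of the sample size.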

The interdisciplinary self-assessment and the domain-specific competence test are only partially compatible, as the two instruments cover different levels of EBP. A competence test that also covers establishing research would be desirable, although developing such a test would be challenging. Nevertheless, the combination of self-assessment and competence testing should be maintained in future studies.

The phenomenon of response shift could not be investigated sufficiently in this study. With the retrospective pre-assessment version of R-Comp, we were able to detect only a recalibration response shift. A reconceptualization response shift is also conceivable, since RLPs are explicitly designed to impart the skills and knowledge needed to complete the entire research process; however, this question cannot be answered with the available data. Measurement invariance testing would be necessary as a statistical approach (Meredith, 1993; Piwowar and Thiel, 2014), but such testing for response shift was not possible here because of the small sample size. It would be useful for comparing the results of our design-based approach with those of a statistical approach (Schwartz and Sprangers, 1999; Piwowar and Thiel, 2014). It should also be noted that other forms of bias may occur in retrospective measurement, such as social desirability, recall bias, and the implicit theory of change (Hill and Betz, 2005).

Further analyses are necessary to confirm the results reported here empirically. An implementation check should be carried out to take account of composition effects, teaching subject combinations, school type, and previous experience with the research process. Moreover, because of the small sample size, the hierarchical structure of the data could not be taken into account. To increase internal validity, and in particular to rule out that the results merely reflect a general increase in competencies from attending other courses, it would be advisable to include a control group that attended a seminar on research-based, practical approaches to teaching and learning. As environmental influences cannot be fully eliminated, studies should control for the influence of courses attended in parallel.

Our study provides evidence that teacher training students can be prepared for EBP through the RLP format. One strength of this study is its design: The longitudinal approach, implemented while students were in the field, provides good external validity. Another strength is the learning environment, which, based on international examples, offers students the opportunity to acquire the competencies needed for EBP in the course of their teacher training. The results show that through the RLP, teacher training students learn to apply methods of self-assessment and external assessment. If the competencies Interpreting Evidence and Drawing Conclusions for Practice could be strengthened more effectively, teacher training students should be able to develop and monitor the quality of their teaching practice by, first, reflecting on their own experiences and competencies, second, drawing conclusions from these reflections, and third, applying what they have learned to their professional practice. We recommend integrating research-oriented teaching formats such as the RLP format into other German master's degree programs to fulfill the requirement of the National Standards for Teacher Education (Kultusministerkonferenz, 2004, 2014) that teacher training students be prepared for EBP.

Data Availability Statement

The datasets presented in this article are not readily available because data privacy must be guaranteed. Requests to access the datasets should be directed to FB-O, [email protected].

Ethics Statement

The studies involving human participants were reviewed and approved by Ethikkommission der Freien Universität Berlin Fachbereich Erziehungswissenschaft und Psychologie (the Ethics Committee of Freie Universität Berlin Department of Education and Psychology, own translation). Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

Author Contributions

All authors contributed to the article and approved the submitted version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We acknowledge support from the Open Access Publication Fund of the Freie Universität Berlin. Parts of the content of this manuscript have been published in the thesis of Böttcher-Oschmann (2019).

- ^ The focus was primarily on quantitative research methods.

- ^ This is the second practical experience that accompanies the course of study. The first internship at the beginning of the bachelor’s degree program is a much shorter internship to get to know the teaching profession better.

- ^ After the study had begun, students were given the option to opt out of answering the question about their teaching subjects. Some students had been worried that they could be identified by their unusual combination of teaching subjects.

- ^ However, the teacher training students in our study were also the target group in two other surveys that took place at the same time.

- ^ These missing values probably occurred because students were given the option of not answering these items (see above).

Bach, A. (2013). Kompetenzentwicklung im Schulpraktikum: Ausmaß und Zeitliche Stabilität von Lerneffekten Hochschulischer Praxisphasen [Competence Development in School Internships: Extent and Temporal Stability of Learning Effects of Practical Phases in Higher Education, 1st Edn.]. Münster: Waxmann.


Borg, S. (2010). Language teacher research engagement. Lang. Teach. 43, 391–429. doi: 10.1017/S0261444810000170


Böttcher, F., and Thiel, F. (2016). "Der Fragebogen zur Erfassung studentischer Forschungskompetenzen - Ein Instrument auf der Grundlage des RMRKW-Modells zur Evaluation von Formaten forschungsorientierter Lehre [The questionnaire to assess university students' research competences – An instrument based on the RMRC-K model to evaluate research-oriented teaching and learning arrangements]," in Neues Handbuch Hochschullehre, eds B. Berendt, A. Fleischmann, N. Schaper, B. Szczyrba, and J. Wildt (Berlin: DUZ Medienhaus), 57–74.

Böttcher, F., and Thiel, F. (2018). Evaluating research-oriented teaching: a new instrument to assess university students’ research competences. Higher Educ. 75, 91–110. doi: 10.1007/s10734-017-0128-y