
Unit of Analysis: Definition, Types & Examples

The unit of analysis is the set of people or things whose qualities will be measured. It is an essential part of a research project: the main thing a researcher examines in the study.

A unit of analysis is the object about which you hope to have something to say at the end of your analysis, perhaps the major subject of your research.

In this blog, we will cover:

- Definition of “unit of analysis”

- Types of “unit of analysis”

What is a unit of analysis?

A unit of analysis is the thing you want to discuss after your research, probably what you would regard as the primary emphasis of your research.

The unit of analysis is the primary topic or object in the research on which the researcher plans to comment. The research question plays a significant role in determining it. Put simply, the unit of analysis is the “who” or “what” that the researcher is interested in investigating.

In his book “Man, the State, and War” (2001 edition), Kenneth Waltz divides the world into three distinct spheres of study: the individual, the state, and the international system.

Understanding the reasoning behind the choice of unit of analysis is vital, because research is more likely to be fruitful when the rationale is clear. Common units of analysis include an individual, a group, an organization, a nation, or a social phenomenon.


In business research, there are almost unlimited types of possible analytical units. Even though the most typical unit of analysis is the individual, many research questions can be answered more precisely by looking at other types of units. The main levels are described below.

Individual Level

The most prevalent unit of analysis in business research is the individual. The researcher may be interested in looking into:

- Employee actions

- Perceptions

- Attitudes or opinions

Employees may come from wealthy or low-income families, as well as from rural or metropolitan areas.

A researcher might investigate whether personnel from rural areas are more likely to arrive on time than those from urban areas. Additionally, the researcher can check whether workers from rural areas who come from poorer families arrive on time more often than rural workers who come from wealthy families.
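To make the individual level concrete, here is a minimal Python sketch, assuming each employee is stored as one record with hypothetical fields `area`, `family_income`, and `on_time`. The records are invented for illustration, not real data; the point is that each row is one individual, and every comparison is between subsets of individuals.

```python
from dataclasses import dataclass


@dataclass
class Employee:
    # One record per individual: the individual is the unit of analysis here.
    area: str           # hypothetical field: "rural" or "urban"
    family_income: str  # hypothetical field: "low" or "high"
    on_time: bool       # arrived on time on the observed day


def on_time_rate(employees, **criteria):
    """Share of employees matching every criterion who arrived on time (None if no match)."""
    matching = [e for e in employees
                if all(getattr(e, field) == value for field, value in criteria.items())]
    if not matching:
        return None
    return sum(e.on_time for e in matching) / len(matching)


# Hypothetical toy records, not real data.
staff = [
    Employee("rural", "low", True),
    Employee("rural", "low", True),
    Employee("rural", "high", False),
    Employee("rural", "high", True),
    Employee("urban", "low", False),
    Employee("urban", "high", True),
    Employee("urban", "high", False),
    Employee("urban", "low", False),
]

print(on_time_rate(staff, area="rural"))                        # 0.75
print(on_time_rate(staff, area="urban"))                        # 0.25
print(on_time_rate(staff, area="rural", family_income="low"))   # 1.0
print(on_time_rate(staff, area="rural", family_income="high"))  # 0.5
```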

In each case, the individual employee is the unit of analysis being described and explained. Using individuals as the unit of analysis can shed light on business issues, including customer and human resource behavior.

For example, employee work satisfaction and consumer purchasing patterns impact business, making research into these topics vital.

Psychologists typically concentrate on individuals. Research on individuals can significantly aid a firm's success, because their knowledge and experiences reveal vital information. This is why individuals are so heavily used in business research.

Aggregate Level

Individual people are not always the focus of social science research. By combining the responses of individuals, social scientists frequently describe and explain social interactions, communities, and groupings. They also study collectives of individuals, such as communities, groups, and countries.

Aggregate levels can be divided into two types: groups (with an ad hoc structure) and organizations (with a formal structure).

Groups

Groups of people make up the next level of the unit of analysis. A group is commonly defined as two or more individuals who interact, share common traits, and feel connected to one another.

Many definitions also emphasize interdependence or objective resemblance (Turner, 1982; Platow, Grace, & Smithson, 2011) and self-identification as a group member (Reicher, 1982).

Under these definitions, societies and gangs serve as examples of groups. According to Webster’s Online Dictionary (2012), such groups can resemble some clubs but be far less formal.

Studies of siblings, identical twins, families, and small-group functioning are examples of research in which the unit of analysis is made up of more than one person.

In such studies, a whole group might be compared to another. Families, gender-specific groups, friends, Facebook groups, and work departments can all be groups.

By analyzing groups, researchers can learn how they form and how age, experience, class, and gender affect them. When aggregated, individuals’ data describe the group to which they belong.


Sociologists, like economists, study groups. Businesspeople form teams to complete projects, so they are continually researching groups and group behavior.

Organizations

The next level of the unit of analysis is organizations: groups of people that are set up formally. Examples include businesses, religious groups, military units, colleges, academic departments, supermarkets, business groups, and so on.

Social organization includes characteristics such as sex composition, leadership styles, organizational structure, and communication systems (Susan & Wheelan, 2005; Chapais & Berman, 2004). According to Lim, Putnam, and Robert (2010), well-known social organizations and religious institutions are among such organizations.

Moody, White, and Douglas (2003) argue that social organizations are hierarchical. Hasmath, Hildebrandt, and Hsu (2016) note that social organizations can take different forms; for example, they can be formed by institutions such as schools or governments.

Sociology, economics, political science, psychology, management, and organizational communication (Douma & Schreuder, 2013) are some social science fields that study organizations.

Organizations differ from groups in that they are more formal and more structured. A researcher might study a company in order to generalize the results to the whole population of companies.

An organization can be described by its number of employees, net annual revenue, net assets, number of projects, and so on. A researcher might want to know whether big companies hire more or fewer women than small companies.
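A minimal Python sketch of the organization as the unit of analysis, assuming hypothetical company records and an assumed 250-employee cut-off between “big” and “small” companies. Each company contributes one value (its share of women among new hires), and those values are then averaged within each size class.

```python
from statistics import mean

# Hypothetical records, one per organization (the unit of analysis). Not real data.
companies = [
    {"name": "A", "employees": 1200, "hires": 200, "women_hired": 90},
    {"name": "B", "employees": 800, "hires": 100, "women_hired": 40},
    {"name": "C", "employees": 45, "hires": 10, "women_hired": 6},
    {"name": "D", "employees": 30, "hires": 8, "women_hired": 3},
]

LARGE_THRESHOLD = 250  # assumed cut-off between "big" and "small" companies


def size_class(company):
    """Classify a company as 'big' or 'small' by headcount."""
    return "big" if company["employees"] >= LARGE_THRESHOLD else "small"


def mean_share_women(rows):
    """Average, per size class, of each company's share of women among its hires."""
    shares_by_class = {}
    for company in rows:
        share = company["women_hired"] / company["hires"]
        shares_by_class.setdefault(size_class(company), []).append(share)
    return {cls: mean(shares) for cls, shares in shares_by_class.items()}


print(mean_share_women(companies))
```

Because the organization is the unit, each company counts once regardless of how many people it hires. Pooling all hires across the big companies instead (130 women out of 300 hires) would answer a slightly different question, one about individual hires rather than about companies.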

Organization researchers might be interested in how companies like Reliance, Amazon, and HCL affect our social and economic lives. People who work in business often study business organizations.

Social Level

The social level has two types:

Social Artifacts Level

Objects, not only people, can be studied. Social artifacts are human-made objects from diverse communities: items, representations, assemblages, institutions, knowledge, and conceptual frameworks used to convey, interpret, or achieve a goal (IGI Global, 2017).

Cultural artifacts are anything humans generate that reveals their culture (Watts, 1981).

Social artifacts include books, newspapers, advertising, websites, technical devices, films, photographs, paintings, clothes, poems, jokes, students’ excuses for being late, scientific breakthroughs, furniture, machines, structures, and so on. The list is practically endless.

Humans create social artifacts for social purposes. Just as individual people or groups imply a population in business research, each social artifact implies a class of similar items.

Items in the same class might include business books, magazines, articles, and case studies. In a research study, a business magazine might be characterized by its number of articles, publication frequency, price, content, and editor.

The population of related magazines might then be evaluated for description and explanation. Marx W. Wartofsky (1979) distinguished primary artifacts used in production (like a camera), secondary artifacts connected to primary artifacts (like a camera user manual), and tertiary artifacts related to representations of secondary artifacts (like a sculpture of a camera user manual).

Studying an artifact scientifically reveals something about its creators and users. A researcher studying artifacts may be interested in advertising, marketing, distribution, buying, and so on.

Social Interaction Level

Social interactions are also a kind of social artifact. Examples include:

- Eye contact with a coworker

- Buying something in a store

- Friendship decisions

- Road accidents

- Airline hijackings

- Professional counseling

- WhatsApp messaging

A researcher might study young employees’ smartphone addictions. Some addictions may involve social media, while others involve online games and movies that inhibit connection.

Here, smartphone addiction is examined as a social phenomenon, while the units of observation are probably individual employees.

Anthropologists typically study social artifacts. They may be interested in the social order. A researcher who examines social interactions may be interested in how broader societal structures and factors impact daily behavior, festivals, and weddings.


Even though there is no perfect way to do research, it is generally agreed that researchers should try to find a unit of analysis that keeps the context needed to make sense of the data.

Researchers should consider the details of their research when deciding on the unit of analysis.

They should keep in mind that consistent use of these units throughout the analysis process (from coding to developing categories and themes to interpreting the data) is essential to gaining insight from qualitative data and protecting the reliability of the results.


© Dovetail Research Pty. Ltd.

Unit of analysis: definition, types, examples, and more

Last updated

16 April 2023

Reviewed by

Cathy Heath


- What is a unit of analysis?

A unit of analysis is an object of study within a research project. It is the smallest unit a researcher can use to identify and describe a phenomenon—the 'what' or 'who' the researcher wants to study.

For example, suppose a consultancy firm is hired to train the sales team in a solar company that is struggling to meet its targets. To evaluate their performance after the training, the unit of analysis would be the sales team—it's the main focus of the study.

Different methods, such as surveys, interviews, or sales data analysis, can be used to evaluate the sales team's performance and determine the effectiveness of the training.

- Units of observation vs. units of analysis

A unit of observation refers to the actual items or units being measured or collected during the research. In contrast, a unit of analysis is the entity that a researcher can comment on or make conclusions about at the end of the study.

In the example of the solar company sales team, the unit of observation would be the individual sales transactions or deals made by the sales team members. In contrast, the unit of analysis would be the sales team as a whole.

The firm may observe and collect data on individual sales transactions, but the ultimate conclusion would be based on the sales team's overall performance, as this is the entity that the firm is hired to improve.

In some studies, the unit of observation may be the same as the unit of analysis, but researchers need to define both clearly to themselves and their audiences.
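The distinction in the solar company example can be sketched in a few lines of Python, assuming hypothetical transaction records tagged with whether they fall before or after the training. The rows are the units of observation; the function collapses them into one summary of the team per period, which is the level at which the firm would draw its conclusion.

```python
from collections import defaultdict

# Hypothetical transaction records (units of observation). Not real data.
transactions = [
    {"period": "before", "rep": "Ana", "amount": 1200.0},
    {"period": "before", "rep": "Ben", "amount": 800.0},
    {"period": "before", "rep": "Ana", "amount": 500.0},
    {"period": "after", "rep": "Ana", "amount": 2000.0},
    {"period": "after", "rep": "Ben", "amount": 300.0},
    {"period": "after", "rep": "Cleo", "amount": 900.0},
    {"period": "after", "rep": "Ben", "amount": 700.0},
]


def team_performance(rows):
    """Collapse transaction-level rows into one team-level summary per period."""
    summary = defaultdict(lambda: {"deals": 0, "revenue": 0.0})
    for row in rows:
        period = summary[row["period"]]
        period["deals"] += 1
        period["revenue"] += row["amount"]
    return dict(summary)


result = team_performance(transactions)
print(result["before"])  # {'deals': 3, 'revenue': 2500.0}
print(result["after"])   # {'deals': 4, 'revenue': 3900.0}
```

Individual reps appear in the data, but the summary deliberately discards them: the conclusion is about the team as a whole.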

- Unit of analysis types

Below are the main types of units of analysis:

Individuals – These are the smallest level of analysis.

Groups – These are people who interact with each other.

Artifacts – These are material objects created by humans that a researcher can study using empirical methods.

Geographical units – These are smaller than a nation and range from a province to a neighborhood.

Social interactions – These are formal or informal interactions between society members.

- Importance of selecting the correct unit of analysis in research

Selecting the correct unit of analysis helps reveal more about the subject you are studying and how to continue with the research. It also helps determine the information you should use in the study. For instance, if a researcher has a large sample, the unit of analysis will help decide whether to focus on the whole population or a subset of it.

- Examples of a unit of analysis

Here are examples of a unit of analysis:

Individuals – A person, an animal, etc.

Groups – Gangs, roommates, etc.

Artifacts – Phones, photos, books, etc.

Geographical units – Provinces, counties, states, or specific areas such as neighborhoods, city blocks, or townships

Social interaction – Friendships, romantic relationships, etc.

- Factors to consider when selecting a unit of analysis

The main things to consider when choosing a unit of analysis are:

Research questions and hypotheses

A research question can be descriptive if the study seeks to describe what exists or what is going on.

It can be relational if the study seeks to look at the relationship between variables. Or, it can be causal if the research aims at determining whether one or more variables affect or cause one or more outcome variables.

Your study's research question and hypothesis should guide you in choosing the correct unit of analysis.

Data availability and quality

Consider the nature of the data collected and the time spent observing each participant or studying their behavior. You should also consider the scale used to measure variables.

Some studies measure variables on a continuous scale, while others use variables with discrete values. All of these factors influence the selection of a unit of analysis.

Feasibility and practicality

Look at your study and think about the unit of analysis that would be feasible and practical.

Theoretical framework and research design

The theoretical framework is crucial in research as it introduces and describes the theory explaining why the problem under research exists. As a structure that supports the theory of a study, it is a critical consideration when choosing the unit of analysis. Moreover, consider the overall strategy for collecting responses to your research questions.

- Common mistakes when choosing a unit of analysis

Below are common errors that occur when selecting a unit of analysis:

Reductionism

This error occurs when a researcher uses data from a lower-level unit of analysis to make claims about a higher-level unit of analysis. This includes using individual-level data to make claims about groups.

For example, Rosa Parks’s 1955 refusal to give up her seat on a segregated bus in Montgomery, Alabama, was a pivotal moment in the US civil rights movement. However, claiming that Rosa Parks started the movement would be reductionist. There are other factors behind the rise and success of the US civil rights movement. These include the Supreme Court’s historic decision to desegregate schools, protests over legalized racial segregation, and the formation of groups such as the Student Nonviolent Coordinating Committee (SNCC). In short, the movement is attributable to various political, social, and economic factors.

Ecological fallacy

This mistake occurs when researchers use data from a higher-level unit of analysis to make claims about one lower-level unit of analysis. It usually occurs when only group-level data is collected, but the researcher makes claims about individuals.

For instance, let's say a study seeks to understand whether addictions to electronic gadgets are more common in certain universities than others.

The researcher obtains data on the percentage of gadget-addicted students at different universities around the country. Looking at the data, the researcher notes that universities with engineering programs have more cases of gadget addiction than campuses without such programs.

Concluding that engineering students are more likely to become addicted to their electronic gadgets would be inappropriate. The data available is only about gadget addiction rates by universities; thus, one can only make conclusions about institutions, not individual students at those universities.

Making claims about students while the data available is about the university puts the researcher at risk of committing an ecological fallacy.
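The risk can be illustrated with a small Python sketch using invented counts (hypothetical, chosen only to show the pattern). At the university level, campuses with engineering programs have the higher addiction rate, yet within this toy data engineering students are less addicted than other students, so inferring from institutions to individuals would give the wrong answer.

```python
from typing import NamedTuple


class Cohort(NamedTuple):
    university: str
    has_engineering: bool
    programme: str
    students: int
    addicted: int


# Hypothetical counts, invented to illustrate the fallacy. Not real data.
cohorts = [
    Cohort("Uni A", True, "engineering", 100, 10),
    Cohort("Uni A", True, "other", 100, 40),
    Cohort("Uni B", False, "other", 200, 30),
]


def addiction_rate(rows):
    """Share of students in the given cohorts who are gadget-addicted."""
    return sum(r.addicted for r in rows) / sum(r.students for r in rows)


# Institution-level view: the only data the researcher in the example has.
with_eng = addiction_rate([c for c in cohorts if c.has_engineering])         # 50 of 200
without_eng = addiction_rate([c for c in cohorts if not c.has_engineering])  # 30 of 200

# Student-level view: requires data broken down by programme.
eng_students = addiction_rate([c for c in cohorts if c.programme == "engineering"])  # 10 of 100
other_students = addiction_rate([c for c in cohorts if c.programme == "other"])      # 70 of 300

print(with_eng > without_eng)         # True: universities with engineering look worse
print(eng_students < other_students)  # True: yet engineering students are less addicted here
```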

- The lowdown

A unit of analysis is what you would consider the primary emphasis of your study. It is what you want to discuss after your study. Researchers should determine a unit of analysis that keeps the context required to make sense of the data. They should also keep the unit of analysis in mind throughout the analysis process to protect the reliability of the results.

What is the most common unit of analysis?

The individual is the most prevalent unit of analysis.

Can the unit of analysis and the unit of observation be one?

Some situations have the same unit of analysis and observation. For instance, let's say a tutor is hired to improve the oral French proficiency of a student who finds it difficult. A few months later, the tutor wants to evaluate the student's proficiency based on what they have taught over that period. In this case, the student is both the unit of analysis and the unit of observation.


The Unit of Analysis Explained

- By DiscoverPhDs

- October 3, 2020

The unit of analysis refers to the main parameter that you’re investigating in your research project or study. Examples of the different types of unit of analysis that may be used in a project include:

- Individual people

- Groups of people

- Objects such as photographs, newspapers and books

- Geographical units such as cities or counties

- Social events such as births, deaths, and divorces

The unit of analysis is named as such because the unit type is determined based on the actual data analysis that you perform in your project or study.

For example, if your research is based on exam grades for students at two different universities, then the unit of analysis is the individual student, because each student has an exam score associated with them.

Conversely, if your study is based on comparing noise level data between two different lecture halls full of students, then your unit of analysis is the collective group of students in each hall rather than any data associated with an individual student.

In the same research study involving the same students, you may perform different types of analysis and this will be reflected by having different units of analysis. In the example of student exam scores, if you’re comparing individual exam grades then the unit of analysis is the individual student.

On the other hand, if you’re comparing the average exam grade between two universities, then the unit of analysis is now the group of students as you’re comparing the average of the group rather than individual exam grades.
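Using hypothetical scores, the two analyses can be contrasted in Python: the same student-level records yield seven data points when the student is the unit of analysis, but only two when the university group is.

```python
from statistics import mean

# Hypothetical exam scores: one score per student, grouped by university. Not real data.
scores = {
    "University X": {"s1": 62, "s2": 75, "s3": 88},
    "University Y": {"s4": 70, "s5": 71, "s6": 72, "s7": 91},
}

# Unit of analysis = individual student: each student contributes one data point.
individual_points = [
    (university, student, score)
    for university, students in scores.items()
    for student, score in students.items()
]

# Unit of analysis = group of students: each university contributes one data point.
group_means = {university: mean(students.values()) for university, students in scores.items()}

print(len(individual_points))  # 7
print(group_means)
```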

Units of analysis can be nested in hierarchies with multiple levels (for example, students within universities), and these hierarchies can become complex. This complexity is addressed by a branch of statistical analysis commonly known as hierarchical (or multilevel) modelling.
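Hierarchical models themselves are beyond a short example, but the question they formalize (how much variation lies at each level) can be sketched in Python with a plain sum-of-squares decomposition. This is a descriptive calculation, not a multilevel model; the function name and toy data are hypothetical.

```python
from statistics import mean


def variance_decomposition(groups):
    """Split the total sum of squares of nested data into between- and within-group parts.

    groups maps a group label (e.g. a university) to the individual-level
    values measured in that group (e.g. students' exam scores).
    Returns (total_ss, between_ss, within_ss), where total_ss == between_ss + within_ss.
    """
    all_values = [v for values in groups.values() for v in values]
    grand_mean = mean(all_values)
    group_means = {g: mean(values) for g, values in groups.items()}

    total_ss = sum((v - grand_mean) ** 2 for v in all_values)
    between_ss = sum(len(values) * (group_means[g] - grand_mean) ** 2
                     for g, values in groups.items())
    within_ss = sum((v - group_means[g]) ** 2
                    for g, values in groups.items() for v in values)
    return total_ss, between_ss, within_ss


# Toy data: two hypothetical groups of three individuals each.
total, between, within = variance_decomposition({"A": [1, 2, 3], "B": [7, 8, 9]})
print(total, between, within)  # 58 54 4
print(between / total)         # share of the variation that lies between groups
```

When most of the variation sits between groups, as in this toy case, treating individuals as independent units would overlook the group level; that is the situation hierarchical modelling is designed to handle.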

As a researcher, you need to be clear on what your specific research question is. Based on this, you can define each data, observation or other variable and how they make up your dataset.

Clarity about your research question will help you identify your units of analysis and the sample size needed to obtain a meaningful result (and whether this is a random sample/sampling unit or something else).

In developing your research method, you need to consider whether you’ll need any repeated observation of each measurement. You also need to consider whether you’re working with qualitative data/qualitative research or if this is quantitative content analysis.

The unit of analysis of your study is the specific ‘who’ or ‘what’ that you’re analysing: for example, are you analysing the individual student, the group of students or even the whole university? You may have to consider a different unit of analysis based on the concept you’re considering, even if working with the same observation data set.


Unit of Analysis: Definition, Types & Examples

Introduction

A unit of analysis is the smallest level of analysis for a research project. It’s important to choose the right unit of analysis because it helps you make more accurate conclusions about your data.

What Is a Unit of Analysis?

A unit of analysis is the smallest element in a data set that can be used to identify and describe a phenomenon or the smallest unit that can be used to gather data about a subject. The unit of analysis will determine how you will define your variables, which are the things that you measure in your data.

If you want to understand why people buy a particular product, you should choose a unit of analysis that focuses on buying behavior. This means choosing a unit of analysis that is relevant to your research topic and question.

For example, if you want to study the needs of soldiers in a war zone, you will need to choose an appropriate unit of analysis for this study: soldiers or the war zone. In this case, choosing the right unit of analysis would be important because it could help you decide if your research design is appropriate for this particular subject and situation.

Why is Choosing the Right Unit of Analysis Important?

The unit of analysis is important because it helps you understand what you are trying to find out about your subject, and it also helps you to make decisions about how to proceed with your research.

Choosing the right unit of analysis is also important because it determines what information you’re going to use in your research. If you have a small sample, then you’ll have to choose whether to focus on the entire population or just a subset of it.

If you have a large sample, then you’ll be able to find out more about specific groups within your population.

Unit of Analysis vs Unit of Observation

Unit of analysis is a term used to refer to a particular part of a data set that can be analyzed. For example, in a survey, the unit of analysis is often the individual: the person who was selected to take part in the survey.

Unit of analysis is used in the social sciences to refer to the individuals or groups that have been studied. It is related to, but distinct from, the unit of observation.

Unit of observation refers to the specific person, group, or place that the researcher actually observes or measures. For example, researchers studying crime rates might record data for a particular town, census tract, state, or other geographical location.

Unit of analysis refers to the individual or group that the researcher draws conclusions about. For example, an entire town might be analyzed for its crime rate over time; in that case, the unit of observation and the unit of analysis coincide.

Types of “Unit of Analysis”

The unit of analysis is a way to understand and study a phenomenon. There are five main types of unit of analysis: individuals, groups, artifacts (books, photos, newspapers), geographical units (towns, census tracts, states), and social interactions.

- Individuals are the smallest level of analysis. For example, an individual may be a person or an animal. A group is a collection of individuals who interact with each other; for example, students who go to college together, or a family living under one roof.

- An artifact is anything that can be studied using empirical methods—including books and photos but also any physical object like knives or phones.

- A geographical unit is smaller than an entire country and can range from a province or state down to a neighborhood or city block.

- Social interactions include dyadic relations (such as friendships or romantic relationships), as well as events such as divorces and arrests.

Examples of Each Type of Unit of Analysis

- Individuals are the smallest unit of analysis. An individual is a person, animal, or thing. For example, an individual can be a person or a building.

- Artifacts are another type of unit of analysis. An artifact is something produced by human beings and is not alive. For example, a child’s toy is an artifact. Artifacts can include any material object that was produced by human activity and which has meaning to someone. Artifacts can be tangible or intangible and may be produced intentionally or accidentally.

- Geographical units are large geographic areas such as states, counties, provinces, etc. Geographical units may also refer to specific locations within these areas such as cities or townships.

- Social interaction refers to interactions between members of society (e.g., family members interacting with each other). Social interaction includes both formal interactions (such as attending school) and informal interactions (such as talking on the phone).

How Does a Social Scientist Choose a Unit of Analysis?

Social scientists choose a unit of analysis based on the purpose of their research, their research question, and the type of data they have. For example, if they are trying to understand the relationship between a person’s personality and their behavior, they would choose individuals as the unit of analysis and study their personality traits.

Similarly, if a researcher wanted to study the effects of legalizing marijuana on crime rates, they may choose to use administrative data from police departments. However, if they wanted to study how culture influences crime rates, they might use survey data from groups of people in different cultural settings (e.g., individuals living in different areas or countries).

Factors to Consider When Choosing a Unit of Analysis

The unit of analysis is the object or person that you are studying, and it determines what kind of data you are collecting and how you will analyze it.

Factors to consider when choosing a unit of analysis include:

- What is your purpose for studying this topic? Is it for a research paper or an article? If so, which type of paper do you want to write?

- What is the most appropriate unit for your study? If you are studying a specific event or period of time, this may be obvious. But if your focus is broader, such as all social sciences or all human development, you need to decide how broad your scope should be before beginning the research.

- How do other people define their units? This can be helpful when trying to understand what other people mean when they use certain terms like “social science” or “human development” because they may define those terms differently than what you would expect them to.

- The nature of the data collected. Is it quantitative or qualitative? If it’s qualitative, what kind of data is collected? How much time was spent observing each participant/examining their behavior?

- The scale used to measure variables. Is every variable measured on a continuous scale (like height or income)? Or do some variables only take on discrete values (like yes/no questions)?

The unit of analysis is the smallest part of a data set that you analyze. It’s important to remember that your data is made up of more than just one unit—you have lots of different units in your dataset, and each of those units has its own characteristics that you need to think about when you’re trying to analyze it.


- Olayemi Jemimah Aransiola


Want to create or adapt books like this? Learn more about how Pressbooks supports open publishing practices.

Chapter 4: Measurement and Units of Analysis

4.4 Units of Analysis and Units of Observation

Another point to consider when designing a research project, and which might differ slightly in qualitative and quantitative studies, has to do with units of analysis and units of observation. These two items concern what you, the researcher, actually observe in the course of your data collection and what you hope to be able to say about those observations. Table 4.1 provides a summary of the differences between units of analysis and observation.

Unit of Analysis

A unit of analysis is the entity that you wish to be able to say something about at the end of your study, probably what you would consider to be the main focus of your study.

Unit of Observation

A unit of observation is the item (or items) that you actually observe, measure, or collect in the course of trying to learn something about your unit of analysis. In a given study, the unit of observation might be the same as the unit of analysis, but the two are not required to be the same. What is required, however, is for researchers to be clear about how they define their units of analysis and observation, both to themselves and to their audiences. More specifically, your unit of analysis will be determined by your research question. Your unit of observation, on the other hand, is determined largely by the method of data collection that you use to answer that research question.

To demonstrate these differences, let us look at the topic of students’ addictions to their cell phones. We will consider first how different kinds of research questions about this topic will yield different units of analysis. Then we will think about how those questions might be answered and with what kinds of data. This leads us to a variety of units of observation.

If we were to ask, “Which students are most likely to be addicted to their cell phones?” our unit of analysis would be the individual. We might mail a survey to students on a university or college campus, with the aim of classifying individuals according to their membership in certain social classes and, in turn, seeing how membership in those classes correlates with addiction to cell phones. For example, we might find that students studying media, males, and students with high socioeconomic status are all more likely than other students to become addicted to their cell phones. Alternatively, we could ask, “How do students’ cell phone addictions differ and how are they similar?” In this case, we could conduct observations of addicted students and record when, where, why, and how they use their cell phones. In both cases, one using a survey and the other using observations, data are collected from individual students. Thus, the unit of observation in both examples is the individual. But the units of analysis differ in the two studies. In the first one, our aim is to describe the characteristics of individuals. We may then make generalizations about the populations to which these individuals belong, but our unit of analysis is still the individual. In the second study, we will observe individuals in order to describe some social phenomenon, in this case, types of cell phone addictions. Consequently, our unit of analysis would be the social phenomenon.

Another common unit of analysis in sociological inquiry is groups. Groups, of course, vary in size, and almost no group is too small or too large to be of interest to sociologists. Families, friendship groups, and street gangs make up some of the more common micro-level groups examined by sociologists. Employees in an organization, professionals in a particular domain (e.g., chefs, lawyers, sociologists), and members of clubs (e.g., Girl Guides, Rotary, Red Hat Society) are all meso-level groups that sociologists might study. Finally, at the macro level, sociologists sometimes examine citizens of entire nations or residents of different continents or other regions.

A study of student addictions to their cell phones at the group level might consider whether certain types of social clubs have more or fewer cell phone-addicted members than other sorts of clubs. Perhaps we would find that clubs that emphasize physical fitness, such as the rugby club and the scuba club, have fewer cell phone-addicted members than clubs that emphasize cerebral activity, such as the chess club and the sociology club. Our unit of analysis in this example is groups. If we had instead asked whether people who join cerebral clubs are more likely to be cell phone-addicted than those who join social clubs, then our unit of analysis would have been individuals. In either case, however, our unit of observation would be individuals.
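To make the contrast concrete, here is a minimal Python sketch using invented survey records (the club names, club types, and addiction flags are hypothetical, not data from any study). Every record describes one student, so the unit of observation is always the individual. Grouping by club produces one value per club (groups as the unit of analysis), while grouping by club type still counts each student once (individuals as the unit of analysis).

```python
from collections import defaultdict

# Hypothetical survey records: one row per student (the unit of observation).
# Club names, club types, and addiction flags are invented for illustration.
students = [
    {"id": 1, "club": "rugby", "club_type": "physical", "addicted": False},
    {"id": 2, "club": "rugby", "club_type": "physical", "addicted": True},
    {"id": 3, "club": "scuba", "club_type": "physical", "addicted": False},
    {"id": 4, "club": "scuba", "club_type": "physical", "addicted": False},
    {"id": 5, "club": "chess", "club_type": "cerebral", "addicted": True},
    {"id": 6, "club": "chess", "club_type": "cerebral", "addicted": True},
    {"id": 7, "club": "sociology", "club_type": "cerebral", "addicted": False},
    {"id": 8, "club": "sociology", "club_type": "cerebral", "addicted": True},
]


def share_addicted_by(records, key):
    """Return {value of key: share of records whose 'addicted' flag is True}."""
    totals = defaultdict(int)
    addicted = defaultdict(int)
    for r in records:
        totals[r[key]] += 1
        addicted[r[key]] += int(r["addicted"])
    return {k: addicted[k] / totals[k] for k in totals}


# Unit of analysis = the club (a group): one value per club.
club_rates = share_addicted_by(students, "club")

# Individual-level question: are members of cerebral clubs more often addicted?
# Each student is still counted once; club type is just one of their attributes.
type_rates = share_addicted_by(students, "club_type")
```

With these made-up numbers the club-level analysis has four units and the individual-level analysis has eight, even though both come from the same eight survey responses.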

Organizations are yet another potential unit of analysis that social scientists might wish to say something about. Organizations include entities like corporations, colleges and universities, and even night clubs. At the organization level, a study of students’ cell phone addictions might ask, “How do different colleges address the problem of cell phone addiction?” In this case, our interest lies not in the experience of individual students but instead in the campus-to-campus differences in confronting cell phone addictions. A researcher conducting a study of this type might examine schools’ written policies and procedures, so his unit of observation would be documents. However, because he ultimately wishes to describe differences across campuses, the college would be his unit of analysis.

Social phenomena are also a potential unit of analysis. Many sociologists study a variety of social interactions and social problems that fall under this category. Examples include social problems like murder or rape; interactions such as counselling sessions, Facebook chatting, or wrestling; and other social phenomena such as voting and even cell phone use or misuse. A researcher interested in students’ cell phone addictions could ask, “What are the various types of cell phone addictions that exist among students?” Perhaps the researcher will discover that some addictions are primarily centred on social media such as chat rooms, Facebook, or texting, while other addictions centre on single-player games that discourage interaction with others. The resultant typology of cell phone addictions would tell us something about the social phenomenon (unit of analysis) being studied. As in several of the preceding examples, however, the unit of observation would likely be individual people.

Finally, a number of social scientists examine policies and principles, the last type of unit of analysis we will consider here. Studies that analyze policies and principles typically rely on documents as the unit of observation. Perhaps a researcher has been hired by a college to help it write an effective policy against cell phone use in the classroom. In this case, the researcher might gather all previously written policies from campuses all over the country, and compare policies at campuses where the use of cell phones in the classroom is low to policies at campuses where the use of cell phones in the classroom is high.

In sum, there are many potential units of analysis that a sociologist might examine, but some of the most common units include the following:

- Individuals

- Groups

- Organizations

- Social phenomena

- Policies and principles

Table 4.1 Units of analysis and units of observation: A hypothetical study of students’ addictions to cell phones.

Research Methods for the Social Sciences: An Introduction Copyright © 2020 by Valerie Sheppard is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.


Research Methods Knowledge Base


Unit of Analysis


One of the most important ideas in a research project is the unit of analysis. The unit of analysis is the major entity that you are analyzing in your study. For instance, any of the following could be a unit of analysis in a study:

- individuals

- artifacts (books, photos, newspapers)

- geographical units (town, census tract, state)

- social interactions (dyadic relations, divorces, arrests)

Why is it called the ‘unit of analysis’ and not something else (like the unit of sampling)? Because it is the analysis you do in your study that determines what the unit is. For instance, if you are comparing the children in two classrooms on achievement test scores, the unit is the individual child because you have a score for each child. On the other hand, if you are comparing the two classes on classroom climate, your unit of analysis is the group, in this case the classroom, because you only have a classroom climate score for the class as a whole and not for each individual student. For different analyses in the same study you may have different units of analysis. If you decide to base an analysis on student scores, the individual is the unit. But you might decide to compare average classroom performance. In this case, since the data that goes into the analysis is the average itself (and not the individuals’ scores), the unit of analysis is actually the group. Even though you had data at the student level, you use aggregates in the analysis. In many areas of social research these hierarchies of analysis units have become particularly important and have spawned a whole area of statistical analysis sometimes referred to as hierarchical modeling. This is true in education, for instance, where we often compare classroom performance but collect achievement data at the individual student level.
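A short Python sketch of the classroom example, using made-up scores for two hypothetical classrooms, shows how the same student-level data yields different units, and different numbers of units, depending on the analysis. It is an illustration only and does not implement hierarchical modeling itself.

```python
from statistics import mean

# Hypothetical achievement scores, one per child, for two classrooms.
scores = {
    "classroom_A": [70, 80, 90],
    "classroom_B": [60, 65, 70, 85],
}

# Analysis based on student scores: the unit of analysis is the individual
# child, so every child contributes one observation.
student_level = [s for class_scores in scores.values() for s in class_scores]
student_mean = mean(student_level)

# Analysis based on classroom averages: the unit of analysis is the group,
# so each classroom contributes exactly one value, however many children it has.
class_level = {name: mean(vals) for name, vals in scores.items()}
class_mean = mean(list(class_level.values()))
```

Here the student-level analysis has seven units and a mean of about 74.3, while the classroom-level analysis has two units with a mean of 75, because each classroom counts once regardless of its size.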


6. Sampling

6.1. Units of Analysis

Learning Objectives

- Describe units of analysis.

- Discuss how we can study the same topic using different units of analysis.

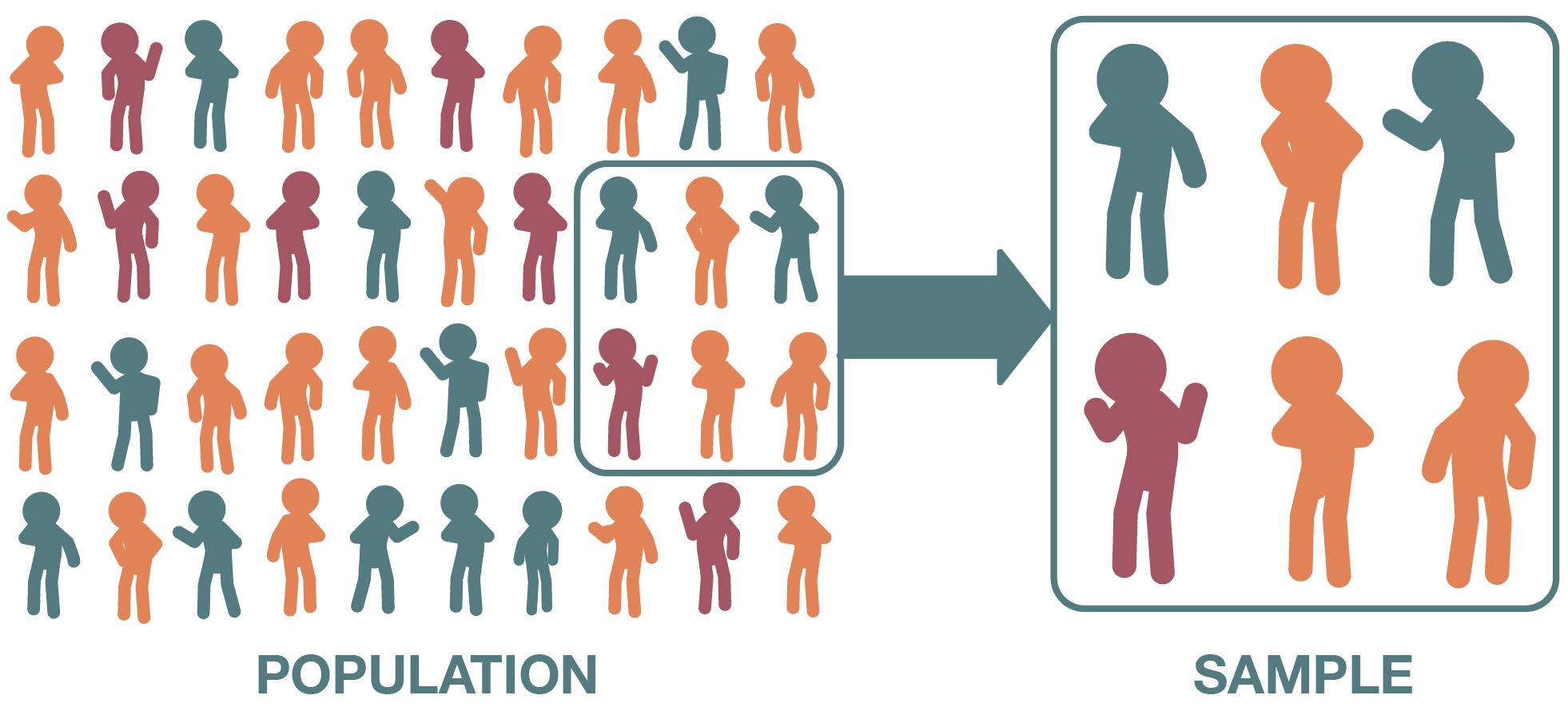

Before you can decide on a sampling strategy, you must define the unit of analysis of your scientific study. The unit of analysis refers to the person, collective, or object that you are focusing on and want to learn about through your research. As depicted in Figure 6.1, your unit of analysis would be the type of entity (say, an individual) you’re interested in. Your sample would be a group of such entities (say, a group of individuals you survey), which, collectively, stand in for the population you wish to study.

Typical units of analysis include individuals, groups, organizations, and countries. For instance, if we are interested in studying people’s shopping behavior, their learning outcomes, or their attitudes toward new technologies, then the unit of analysis is likely to be the individual. If we want to study characteristics of street gangs or teamwork in organizations, then the unit of analysis is probably the group. If our research is directed at understanding differences in national cultures, then our unit of analysis could be the country. In the latter two examples, even though specific individuals (the group’s or country’s leaders) may have a greater say over what these groups or countries do, for the sake of analysis, researchers typically think of those decisions as reflecting a collective decision rather than any one individual’s decision.

Even inanimate objects can serve as units of analysis. For instance, if we wish to study how two or more individuals engage with each other during social interactions, the unit of analysis might be each conversation, and not the individual speakers. If we wanted to track how depictions of people of color have changed in popular culture over time, we could focus on a film or television show as a unit of analysis.

Our choice of a particular unit of analysis will depend on our research question. For instance, if we wish to study why certain neighborhoods have high crime rates, then our unit of analysis becomes the neighborhood (not crimes or the criminals committing such crimes), because the object of our inquiry is the neighborhood and not the people living in it. If, however, we wish to compare the prevalence of different types of crimes (homicide versus robbery versus assault, for example) across neighborhoods, our unit of analysis could very well be the crime. If we wish to study why criminals engage in illegal activities, then the unit of analysis becomes the individual (i.e., the criminal).

Now let’s consider a completely different kind of sociological study. If we want to examine why some business innovations are more successful than others, then our unit of analysis is an innovation, such as the invention of a new method for charging phones. If, however, we wish to study how some tech companies develop innovative products more consistently than others, then the unit of analysis is the organization. As you can see, two related research questions within the same study may have entirely different units of analysis.

Determining the appropriate unit of analysis is important because it influences what type of data you should collect for your study and whom you collect it from. If your unit of analysis is the organization, then you usually will want to collect organizational-level data, that is, data that has to do with the organization, such as its size, personnel structure, or revenues. Data may come from a variety of sources, such as financial records or surveys of directors or executives, who are presumed to be representing their organization when they answer your survey questions. Meanwhile, if your unit of analysis is a website, you will want to collect data about different sites, such as how one kind of site compares to others in terms of traffic. We could use the term “site-level” data, just as we’d use the term “individual-level” data when individuals are the unit of analysis. We could also talk about “lower” and “higher” levels of analysis, with individual-level data existing on a lower level than group-level data, which may, in turn, be on a lower level than national data (see the discussion of micro, meso, and macro levels of analysis in Chapter 3: The Role of Theory in Research). It is important to note that “higher” does not imply “better” in this case. We’re just talking about whether we’re looking at smaller or larger groupings of data.

Frequently, the unit of analysis is what we observe in our research (the source of our data), but that is not always the case. In fact, sometimes we want to make a distinction between units of analysis and units of observation. The unit of analysis is what we really want to study, but sometimes we have to get at it indirectly, by observing something else. For example, surveys often ask questions about families to understand their family structure, income, and various aspects of their well-being, but they need to get information about the family through individuals, specifically the respondent who is answering survey questions on behalf of the family. In this case, the unit of analysis for the survey’s family-related questions would be the family, but the unit of observation would be the individual. Likewise, in our earlier examples, we talked about studying organizations and websites as our units of analysis, but doing so might involve talking to individuals: the directors of those organizations, or the users of those websites, respectively.

Analyzing multiple types of units of observation can give us a fuller picture of our unit of analysis. For example, if you are conducting research about what makes particular social media apps more addictive than others, then examining differences between the apps in terms of their functionality (app as the unit of observation) would tell you one thing, but surveying individuals about their usage of apps (user as the unit of observation) would clarify other aspects of that question. Furthermore, it is often a good idea to collect data from a lower level of analysis and sum up, or aggregate, that data, converting it into higher-level data. This can give you a bigger-picture perspective on your unit of analysis. For instance, to study teamwork in organizations, you can survey individuals in different teams and measure how much conflict or cohesion they perceive on their teams. You can then average their individual scores to create a “team-level” score on those particular ratings. Note, however, that issues can arise when we move in the opposite direction, from a higher to a lower level of analysis (see the sidebar Deeper Dive: Ecological Fallacies).
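The aggregation step described above can be sketched in Python. The team names, member names, and 1 to 5 cohesion ratings are hypothetical; averaging is used because the text mentions it, although other aggregates (such as the median) are also possible.

```python
from statistics import mean

# Hypothetical cohesion ratings (1 = low, 5 = high) given by individual team
# members. Each tuple is individual-level data: (team, member, rating).
ratings = [
    ("team_red", "ana", 4),
    ("team_red", "ben", 5),
    ("team_red", "cai", 3),
    ("team_blue", "dev", 2),
    ("team_blue", "eli", 3),
]


def aggregate_to_team(rows):
    """Average individual ratings into one team-level score per team."""
    by_team = {}
    for team, _member, rating in rows:
        by_team.setdefault(team, []).append(rating)
    return {team: mean(vals) for team, vals in by_team.items()}


team_scores = aggregate_to_team(ratings)
```

The reverse step is not possible: knowing only that team_blue scores 2.5 does not tell you how any individual member rated the team, which is why moving from a higher to a lower level calls for caution.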

Ultimately, the unit of analysis will help you determine both the population you are interested in and the sample that you will study to arrive at any conclusions about that population. So you need to choose it wisely. For example, let’s say you’re interested in the average pay of chief executive officers (CEOs) at companies across the nation. The unit of analysis would be the CEO, and the population would be all individuals in the country who work as company CEOs. But the unit of analysis would be different for a very similar research question: the average amount that U.S. companies pay their CEOs. In this case, the unit of analysis is actually the company because you are interested in how much companies pay their CEOs—not how much individuals are paid as CEOs. The difference is subtle, but the main point is that your unit of analysis is linked to whatever population you actually want to say something about—in this example, either individual CEOs, or companies that have CEOs.
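A small Python sketch, assuming hypothetical pay figures in which one company (labeled "C") has two co-CEOs, shows why the choice between the CEO and the company as the unit of analysis can change the answer even when the underlying records are identical.

```python
# Hypothetical CEO pay records in $ millions: (company, pay).
# Company "C" is assumed to have two co-CEOs, purely for illustration.
ceo_pay = [
    ("A", 10.0),
    ("B", 4.0),
    ("C", 3.0),
    ("C", 3.0),
]

# Unit of analysis = the CEO: average over individuals who work as CEOs.
avg_per_ceo = sum(pay for _, pay in ceo_pay) / len(ceo_pay)

# Unit of analysis = the company: first total what each company pays its
# CEO(s), then average over companies.
paid_by_company = {}
for company, pay in ceo_pay:
    paid_by_company[company] = paid_by_company.get(company, 0.0) + pay
avg_per_company = sum(paid_by_company.values()) / len(paid_by_company)
```

With these invented figures, the average per CEO is 5.0 while the average per company is about 6.67, because company C's two payments are combined into a single company-level value.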

Deeper Dive: Ecological Fallacies

A mismatch between the unit of analysis and the unit of observation can create issues for researchers. Let’s say you want to compare the residents of different states (your unit of analysis is the individual), but you only have access to state-level data (your unit of observation is the state). This is a problem because you generally do not want to make claims about a lower level of analysis based only on aggregated data at a higher level; in this example, that would mean drawing conclusions about individuals based on the states where they reside. For instance, the fact that the population of a state is, on average, wealthier than the rest of the country does not mean that residents of that state are more likely to be rich than the average American. It may be that a small contingent of superrich people have pulled up the average wealth of the state, but its many other residents actually tend to be poorer than the average American. (As you might know from your statistics classes, in this situation, mean wealth, the group’s average, differs dramatically from median wealth, the wealth of the person smack in the middle of the wealth distribution.) This logical error, making claims about the nature of individuals based on data from the groups they belong to, is called an ecological fallacy.
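The wealth example can be illustrated with a short Python sketch. All figures are hypothetical (in thousands of dollars), including the value used for the national average; the point is only that a high state mean can coexist with most residents falling below the national figure.

```python
from statistics import mean, median

# Hypothetical wealth of nine residents of one state, in $ thousands.
# Two superrich residents pull the state average far upward.
state_residents = [40, 50, 60, 70, 80, 90, 100, 50_000, 60_000]

# Hypothetical wealth of the average American, in $ thousands.
national_average = 150

state_mean = mean(state_residents)      # group-level (state) figure
state_median = median(state_residents)  # wealth of the middle resident
share_above_national = (
    sum(w > national_average for w in state_residents) / len(state_residents)
)
```

Here the state's mean is far above the hypothetical national average, yet its median resident (80) falls below it and only two of nine residents exceed it, so inferring individual wealth from the state average would be an ecological fallacy.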

Émile Durkheim’s classic study of suicide is often mentioned as an example of an ecological fallacy. One of the pioneers of the field of sociology, Durkheim argued in his 1897 book Suicide that societies in which individuals struggled to feel they belonged—that is, populations with low levels of social integration—would experience more suicide. Ideally, the unit of analysis for such a study would be the individual. Specifically, we would want to study individuals and the factors that contributed to their deaths by suicide. But Durkheim did not have individual-level data. Instead, he had higher-level data about the number of suicides in each country. To test his theory that social integration safeguarded individuals against suicide, Durkheim compared countries that were mostly Protestant to those that were mostly Catholic. The idea was that Protestantism was a more individualistic and unstructured faith than Catholicism, and so the two varieties of religious belief could stand in for less and more social integration, respectively.

Durkheim’s analysis concluded that suicide was indeed higher in Protestant-majority countries. The problem was that his data only allowed him to say that Protestant countries were more likely to have higher suicide rates—not that Protestant individuals were more likely to commit suicide. To conclude the latter would have been an ecological fallacy, and yet that was the question that Durkheim truly wanted to answer. To his credit, Durkheim also tested his theory by studying suicide rates across localities within countries—another level of analysis (Selvin 1958). (Replicating your analysis across different types of data is a good way to check the robustness of your findings, as we will discuss in later chapters.) Durkheim found the same pattern of higher levels of Protestant belief correlating with higher suicide rates within counties, giving further credence to his theory. Although flawed, Durkheim’s analysis made creative use of the data that was available to him at the time, and his work continues to inspire researchers, including those studying the growing rates of suicide among less educated Americans since 2000 (Case and Deaton 2020).

Key Takeaways

- A unit of analysis is a member of the larger group you wish to be able to say something about at the end of your study. A unit of observation is a member of the population that you actually observe.

- When researchers confuse their units of analysis and observation, they may commit an ecological fallacy, that is, make possibly inaccurate claims about the nature of individuals based on data from the groups they belong to.

The Craft of Sociological Research by Victor Tan Chen; Gabriela León-Pérez; Julie Honnold; and Volkan Aytar is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.


Unravelling the “Unit of Analysis”: A Comprehensive Guide to the 5 Key Aspects

Exploring the essential aspects of the “Unit of Analysis” in research

Introduction

Every research project starts with questions: What are we studying? How do we measure and categorize it? When faced with such questions, experts consistently rely on a pivotal component of research: the Unit of Analysis. This essential building block defines the main entity being analyzed in a study, be it individuals, groups, institutions, or social interactions. Understanding the Unit of Analysis is crucial, as it lays the foundation for the subsequent stages of the research process.

Unit of Analysis

The Unit of Analysis is a pivotal concept in the realm of research and data collection. In layman’s terms, it refers to the primary entity or subject under observation or study in any research endeavor: the ‘what’ or ‘who’ being studied and analyzed. For example, when studying a student’s academic performance, the student is the Unit of Analysis. Understanding and correctly identifying this unit is essential, as it affects the subsequent phases of research, from data collection to result interpretation.

Types of Units

A variety of entities can serve as the Unit of Analysis, including:

1. Individuals: People are often the most studied entities.

2. Groups: This could range from families and friend groups to companies.

3. Artefacts: Physical entities like books, photos, or tools.

4. Geographical Units: Regions, cities, or towns.

5. Social Interactions: Tweets, Facebook likes, or any form of social media interaction.

Importance in Research

The significance of correctly identifying the Unit of Analysis cannot be overstated. It’s akin to knowing the ingredients before baking a cake. It:

- Provides clarity regarding data collection methods.

- Helps in identifying relevant statistical techniques.

- Determines the scope of generalisations.

- Helps avoid the pitfalls of the ecological fallacy and reductionism.

Common Mistakes

There’s no beating around the bush here; even seasoned researchers can occasionally trip up:

- Ecological Fallacy: Incorrectly deducing individual behaviour from group data.

- Reductionism: Oversimplifying a complex process by ignoring certain variables.

Unit of Analysis vs. Unit of Observation

Although the two concepts appear similar, they differ. The Unit of Analysis is what is studied, and the Unit of Observation is the source of the data. For example, when researching the impact of workplace culture on employee morale, the company might be the unit of analysis, but the individual employees providing data are the units of observation.

Tips for Selection

Finding the right Unit of Analysis can be a formidable task, so:

- Start by clearly defining the research question.

- Determine the level at which you wish to generalise results.

- Keep in mind the availability of data.

Role in Different Fields

The role of Unit of Analysis varies across different disciplines:

In Sociology

Here, researchers often study social groups, institutions, and structures. They delve into topics like group dynamics, societal norms, and institutions’ influence on individuals.

In Economics

Economists can analyse a gamut of entities, from individual consumers or businesses to entire countries. They might study spending habits, company growth, or global trade patterns.

In Environmental Studies

Research in this area centers on particular ecosystems, species, or geographical locations. Researchers might examine the effects of pollution on aquatic organisms or the influence of urban development on air quality.

In Literature

Literary critics might analyse a particular genre, an author’s body of work, or even individual books or poems. They would study themes, narrative techniques, or cultural contexts.

In Political Science

This might involve studying political parties, government policies, or public opinion. Research could revolve around election patterns, policy impacts, or citizens’ political behaviour.

Applications in Modern Technology

The digital era has significantly expanded the boundaries of the Unit of Analysis.

In Digital Marketing

In the realm of digital engagements, marketers assess a wide range of online interactions, such as clicks, views, thumbs-ups, shares and even the timing and duration of the engagement.
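As a rough illustration, the Python sketch below uses a hypothetical engagement log (user IDs, session IDs, and actions are invented) to show that the same records can be analyzed at the level of the interaction, the session, or the user, and that a click rate depends on which of those is the unit of analysis.

```python
# Hypothetical engagement log: one row per interaction event.
events = [
    {"user": "u1", "session": "s1", "action": "view"},
    {"user": "u1", "session": "s1", "action": "click"},
    {"user": "u1", "session": "s2", "action": "share"},
    {"user": "u2", "session": "s3", "action": "view"},
    {"user": "u2", "session": "s3", "action": "click"},
    {"user": "u3", "session": "s4", "action": "view"},
]

# The same log yields a different number of units for each unit of analysis.
n_interactions = len(events)
n_sessions = len({e["session"] for e in events})
n_users = len({e["user"] for e in events})

# Click rate with the interaction as the unit of analysis.
clicks_per_interaction = sum(e["action"] == "click" for e in events) / n_interactions

# Click rate with the user as the unit of analysis.
users_who_clicked = {e["user"] for e in events if e["action"] == "click"}
share_users_clicking = len(users_who_clicked) / n_users
```

Counted per interaction, one in three events is a click; counted per user, two of the three users clicked. Neither figure is wrong, but they answer different questions.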

In Machine Learning

Datasets might comprise individual data points, clusters, or even entire databases. Analysts need to be spot-on with their units to train models effectively.

In E-commerce

From user reviews and product ratings to sales data, the e-commerce realm offers a myriad of Units of Analysis.

In Cybersecurity

Security experts examine potential threats, cyberattacks, and the attributes of potential hackers.

- What is the primary purpose of the Unit of Analysis in research?

It helps in specifying the focus of the study, ensuring clarity in data collection, and accuracy in result interpretation.

- How is the Unit of Analysis different from the Unit of Observation?

The former is what you study, and the latter is where you get your data from.

- Can a research study have multiple Units of Analysis?

Absolutely! A study can analyse multiple entities simultaneously, provided the research design supports it.

- Why is it crucial to correctly identify the Unit of Analysis?

Mistakes can lead to ecological fallacies or oversimplification, jeopardizing the study’s validity.

- How has the digital age influenced the concept of the Unit of Analysis?

It has expanded the scope, introducing new units like clicks, views, and digital interactions.

- Are there specific fields where the Unit of Analysis plays a more pivotal role?

Its importance is ubiquitous, but its nature might vary from fields like sociology to machine learning.

The Unit of Analysis is undeniably the cornerstone of any research study. From laying the groundwork to influencing data interpretation, it’s an element that demands attention, understanding, and precision. As the world of research evolves in this digital era, it becomes crucial for researchers to adjust and innovate, thus guaranteeing that the Unit of analysis integrates seamlessly with research goals. It’s a concept that, despite its intricacies, can truly elevate the quality of any research endeavor.


© 2023 Centilio Inc, All Rights Reserved.


7.3 Unit of analysis and unit of observation

Learning objectives.

- Define units of analysis and units of observation, and describe the two common errors people make when they confuse the two

Another point to consider when designing a research project, and which might differ slightly in qualitative and quantitative studies, has to do with units of analysis and units of observation. These two items concern what you, the researcher, actually observe in the course of your data collection and what you hope to be able to say about those observations. A unit of analysis is the entity that you wish to be able to say something about at the end of your study, probably what you’d consider to be the main focus of your study. A unit of observation is the item (or items) that you actually observe, measure, or collect in the course of trying to learn something about your unit of analysis.

In a given study, the unit of observation might be the same as the unit of analysis, but that is not always the case. For example, a study on electronic gadget addiction may interview undergraduate students (our unit of observation) for the purpose of saying something about undergraduate students (our unit of analysis) and their gadget addiction. Perhaps, if we were investigating gadget addiction in elementary school children (our unit of analysis), we might collect observations from teachers and parents (our units of observation) because younger children may not report their behavior accurately. In this case and many others, units of analysis are not the same as units of observation. What is required, however, is for researchers to be clear about how they define their units of analysis and observation, both to themselves and to their audiences.

More specifically, your unit of analysis will be determined by your research question. Your unit of observation, on the other hand, is determined largely by the method of data collection that you use to answer that research question. We’ll take a closer look at methods of data collection later on in the textbook. For now, let’s consider again a study addressing students’ addictions to electronic gadgets. We’ll consider first how different kinds of research questions about this topic will yield different units of analysis. Then, we’ll think about how those questions might be answered and with what kinds of data. This leads us to a variety of units of observation.

If we were to explore which students are most likely to be addicted to their electronic gadgets, our unit of analysis would be individual students. We might mail a survey to students on campus, and our aim would be to classify individuals according to their membership in certain social groups in order to see how membership in those classes correlated with gadget addiction. For example, we might find that majors in new media, men, and students with high socioeconomic status are all more likely than other students to become addicted to their electronic gadgets. Another possibility would be to explore how students’ gadget addictions differ and how they are similar. In this case, we could conduct observations of addicted students and record when, where, why, and how they use their gadgets. In both cases, one using a survey and the other using observations, data are collected from individual students. Thus, the unit of observation in both examples is the individual.

Another common unit of analysis in social science inquiry is groups. Groups of course vary in size, and almost no group is too small or too large to be of interest to social scientists. Families, friendship groups, and group therapy participants are some common examples of micro-level groups examined by social scientists. Employees in an organization, professionals in a particular domain (e.g., chefs, lawyers, social workers), and members of clubs (e.g., Girl Scouts, Rotary, Red Hat Society) are all meso-level groups that social scientists might study. Finally, at the macro-level, social scientists sometimes examine citizens of entire nations or residents of different continents or other regions.

A study of student addictions to their electronic gadgets at the group level might consider whether certain types of social clubs have more or fewer gadget-addicted members than other sorts of clubs. Perhaps we would find that clubs that emphasize physical fitness, such as the rugby club and the scuba club, have fewer gadget-addicted members than clubs that emphasize cerebral activity, such as the chess club and the women’s studies club. Our unit of analysis in this example is groups because groups are what we hope to say something about. If we had instead asked whether individuals who join cerebral clubs are more likely to be gadget-addicted than those who join physical fitness clubs, then our unit of analysis would have been individuals. In either case, however, our unit of observation would be individuals.
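To make the group-versus-individual distinction concrete, here is a minimal Python sketch. The club names come from the example above, but every record is invented for illustration, and it assumes each student belongs to exactly one club. Each record is one student (the unit of observation). The same records are then analyzed twice: once treating each club as a case (unit of analysis: groups) and once treating each student as a case (unit of analysis: individuals).

```python
from collections import defaultdict
from statistics import mean

# Hypothetical survey records, one per student (the unit of observation).
# Assumption: each student belongs to exactly one club.
students = [
    {"club": "rugby", "club_type": "physical", "addicted": False},
    {"club": "rugby", "club_type": "physical", "addicted": False},
    {"club": "rugby", "club_type": "physical", "addicted": True},
    {"club": "scuba", "club_type": "physical", "addicted": False},
    {"club": "scuba", "club_type": "physical", "addicted": False},
    {"club": "chess", "club_type": "cerebral", "addicted": True},
    {"club": "chess", "club_type": "cerebral", "addicted": True},
    {"club": "chess", "club_type": "cerebral", "addicted": False},
    {"club": "womens_studies", "club_type": "cerebral", "addicted": True},
    {"club": "womens_studies", "club_type": "cerebral", "addicted": False},
]


def rate_by(records, key):
    """Share of records flagged as addicted, grouped by the value of records[key]."""
    tallies = defaultdict(lambda: [0, 0])  # group value -> [addicted count, total count]
    for r in records:
        tallies[r[key]][0] += int(r["addicted"])
        tallies[r[key]][1] += 1
    return {group: hits / total for group, (hits, total) in tallies.items()}


# Unit of analysis = groups: compute one rate per club, then let each club count once.
club_rates = rate_by(students, "club")
club_type = {r["club"]: r["club_type"] for r in students}
group_level = {
    t: mean(rate for club, rate in club_rates.items() if club_type[club] == t)
    for t in ("physical", "cerebral")
}

# Unit of analysis = individuals: every student counts once.
individual_level = rate_by(students, "club_type")

print("club-level averages:", group_level)
print("individual-level shares:", individual_level)
```

With these made-up records, the club-level averages (about 0.17 for physical clubs and 0.58 for cerebral clubs) differ from the individual-level shares (0.2 and 0.6). The observations are identical in both analyses; what changes is which entity counts as a case, since a small club weighs as much as a large one when clubs are the unit of analysis.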

Organizations are yet another potential unit of analysis that social scientists might wish to say something about. Organizations include entities like corporations, colleges and universities, and even nightclubs. At the organization level, a study of students’ electronic gadget addictions might explore how different colleges address the problem of electronic gadget addiction. In this case, our interest lies not in the experience of individual students but instead in the campus-to-campus differences in confronting gadget addictions. A researcher conducting a study of this type might examine schools’ written policies and procedures, so her unit of observation would be documents. However, because she ultimately wishes to describe differences across campuses, the college would be her unit of analysis.

In sum, there are many potential units of analysis that a social worker might examine, but some of the most common units include the following:

- Individuals

- Groups

- Organizations

One common error people make when it comes to both causality and units of analysis is something called the ecological fallacy. This occurs when claims about one lower-level unit of analysis are made based on data from some higher-level unit of analysis. In many cases, this occurs when claims are made about individuals, but only group-level data have been gathered. For example, we might want to understand whether electronic gadget addictions are more common on certain campuses than on others. Perhaps different campuses around the country have provided us with their campus percentage of gadget-addicted students, and we learn from these data that electronic gadget addictions are more common on campuses that have business programs than on campuses without them. We then conclude that business students are more likely than non-business students to become addicted to their electronic gadgets. However, this would be an inappropriate conclusion to draw. Because we only have addiction rates by campus, we can only draw conclusions about campuses, not about the individual students on those campuses. Perhaps the social work majors on the business campuses are the ones who drove the addiction rates on those campuses so high. The point is we simply don’t know because we only have campus-level data. By drawing conclusions about students when our data are about campuses, we run the risk of committing the ecological fallacy.
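The campus example can be reproduced with a small Python sketch. All counts are invented. The breakdown by major is exactly the student-level information that the researcher in the example does not have; it is included here only to show how a campus-level comparison and an individual-level comparison can point in opposite directions.

```python
from statistics import mean

# Hypothetical counts: (campus, has_business_program, major, students, addicted).
# In the text's example, only the campus totals would actually be reported.
rows = [
    ("A", True, "business", 100, 10),
    ("A", True, "social_work", 100, 50),
    ("B", True, "business", 100, 10),
    ("B", True, "other", 100, 40),
    ("C", False, "social_work", 200, 30),
    ("D", False, "other", 200, 20),
]


def campus_rates(rows):
    """Collapse rows to one addiction rate per campus (campus-level data)."""
    totals = {}
    for campus, has_biz, _major, n, k in rows:
        _, students, addicted = totals.get(campus, (has_biz, 0, 0))
        totals[campus] = (has_biz, students + n, addicted + k)
    return {c: (biz, addicted / students) for c, (biz, students, addicted) in totals.items()}


def student_rate(rows, business):
    """Addiction rate among business (or non-business) students; needs student-level data."""
    selected = [(n, k) for _c, _b, major, n, k in rows if (major == "business") == business]
    return sum(k for _n, k in selected) / sum(n for n, _k in selected)


rates = campus_rates(rows)
# Campus-level comparison: each campus is one case.
biz_campuses = mean(rate for has_biz, rate in rates.values() if has_biz)
other_campuses = mean(rate for has_biz, rate in rates.values() if not has_biz)
# Individual-level comparison: each student is one case.
biz_students = student_rate(rows, business=True)
other_students = student_rate(rows, business=False)

print(f"Campuses with / without business programs: {biz_campuses:.1%} / {other_campuses:.1%}")
print(f"Business / non-business students: {biz_students:.1%} / {other_students:.1%}")
```

With these hypothetical counts, campuses with business programs average a 27.5% addiction rate versus 12.5% for campuses without them, yet business students themselves have a 10% rate compared with roughly 23% for non-business students. Only the first comparison can be computed from campus-level data, and it supports claims about campuses, not about students.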

On the other hand, another mistake to be aware of is reductionism. Reductionism occurs when claims about some higher-level unit of analysis are made based on data from some lower-level unit of analysis. In this case, claims about groups or macro-level phenomena are made based on individual-level data. An example of reductionism can be seen in some descriptions of the civil rights movement. On occasion, people have proclaimed that Rosa Parks started the civil rights movement in the United States by refusing to give up her seat to a white person while on a city bus in Montgomery, Alabama, in December 1955. Although it is true that Parks played an invaluable role in the movement, and that her act of civil disobedience gave others courage to stand up against racist policies, beliefs, and actions, to credit Parks with starting the movement is reductionist. Surely the confluence of many factors, from fights over legalized racial segregation to the Supreme Court’s historic decision to desegregate schools in 1954 to the creation of groups such as the Student Nonviolent Coordinating Committee (to name just a few), contributed to the rise and success of the American civil rights movement. In other words, the movement is attributable to many factors—some social, others political and others economic. Did Parks play a role? Of course she did—and a very important one at that. But did she cause the movement? To say yes would be reductionist.

It would be a mistake to conclude from the preceding discussion that researchers should avoid making any claims whatsoever about data or about relationships between levels of analysis. While it is important to be attentive to the possibility of error in causal reasoning about different levels of analysis, this warning should not prevent you from drawing well-reasoned analytic conclusions from your data. The point is to be cautious and conscientious in drawing conclusions across levels of analysis. Errors in analysis come from a lack of rigor and from deviating from the scientific method.

Key Takeaways

- A unit of analysis is the item you wish to be able to say something about at the end of your study while a unit of observation is the item that you actually observe.

- When researchers confuse their units of analysis and observation, they may be prone to committing either the ecological fallacy or reductionism.

- Ecological fallacy: when claims about some lower-level unit of analysis are made based on data from some higher-level unit of analysis

- Reductionism: when claims about some higher-level unit of analysis are made based on data from some lower-level unit of analysis

- Unit of analysis: the entity that a researcher wants to say something about at the end of her study

- Unit of observation: the item that a researcher actually observes, measures, or collects in the course of trying to learn something about her unit of analysis

Image attributions

Binoculars by nightowl CC-0

Scientific Inquiry in Social Work Copyright © 2018 by Matthew DeCarlo is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.


Choosing the Right Unit of Analysis for Your Research Project

Table of contents

- Understanding the Unit of Analysis in Research

- Factors to Consider When Selecting the Right Unit of Analysis

- Common Mistakes to Avoid

A research project is like setting out on a voyage through uncharted territory; the unit of analysis is your compass, guiding every decision from methodology to interpretation.

It’s the beating heart of your data collection and the lens through which you view your findings. Drawing on deep experience in research methodologies, we recognize that choosing an appropriate unit of analysis not only anchors your study but also illuminates paths toward meaningful conclusions.

The right choice empowers researchers to extract patterns, answer pivotal questions, and offer insights into complex phenomena. But tread carefully—selecting an ill-suited unit can distort results or obscure significant relationships within data.

Remember this: A well-chosen unit of analysis acts as a beacon for accuracy and relevance throughout your scholarly inquiry. Continue reading to unlock the strategies for selecting this cornerstone of research design with precision—your project’s success depends on it.

Engage with us as we delve deeper into this critical aspect of research mastery.

Key Takeaways

- Your research questions and hypotheses drive the choice of your unit of analysis, shaping how you collect and interpret data.

- Avoid common mistakes like reductionism, where claims about groups or other higher-level units are drawn from individual-level data, and the ecological fallacy, where group-level findings are wrongly applied to individuals.

- Consider the availability and quality of data when selecting your unit of analysis to ensure your research is feasible and conclusions are valid.

- Differentiate between units of analysis (what you’re analyzing) and units of observation (what or who you’re observing) for clarity in your study.

- Ensure that your chosen unit aligns with both the theoretical framework and practical considerations such as time and resources.

The unit of analysis in research refers to the level at which data are analyzed, that is, the entity about which the researcher ultimately draws conclusions. It is essential for researchers to understand the different types of units of analysis, as well as their significance in shaping the research process and outcomes.

Definition and Importance

With resonio, the unit of analysis you choose lays the groundwork for your market research focus. Whether it’s individuals, organizations, or specific events, resonio’s platform facilitates targeted data collection and analysis to address your unique research questions. Our tool simplifies this selection process, ensuring that you can efficiently zero in on the most relevant unit for insightful and actionable results.

This crucial component serves as a navigational aid for your market research. The market research tool guides you not only in data collection but also in selecting the most effective sampling methods and approaches to hypothesis testing, helping you obtain robust and reliable data so that your research is both effective and straightforward.

Choosing the right unit of analysis is crucial, as it defines your research’s direction. resonio makes this easier, ensuring your choice aligns with your theoretical approach and data collection methods, thereby enhancing the validity and reliability of your results.

Additionally, resonio helps you steer clear of errors like reductionism and the ecological fallacy, ensuring your conclusions match the data’s level of analysis.

Difference between Unit of Analysis and Unit of Observation