Where does research begin and end, or where should it?

For some time now, I have been pondering whether we need to change where research begins and ends. Traditionally, research starts with writing a grant and usually finishes with publishing a paper. It’s funny: I have never thought about the way we currently bookend our research with a bunch of writing to justify ourselves! But with all the discussion around translation and research impact, the goal posts are moving. Knowledge translation (KT) is the underpinning process that gives us a pathway to research impact, with impact being the ultimate thing we are trying to create and, if you ask funders, measure!

Is translation an add-on to the research process, or should it be embedded? The answer is yes, no and maybe!

Simply put, if we are to create impact, then we must do research that is relevant, meets a user’s needs and is delivered in a way that is appropriate to those needs and understanding. There are exceptions to this rule – cue happy sigh of the basic scientists. Indeed, in some cases, the use or user may not be known until the end of the process. However, KT still applies, only it will look a little different. You may recall the differences in integrated and end-of-grant KT as shown below.

Integrated KT

Involves collaboration between researchers and knowledge users at every stage of the research process – from shaping the research question, to interpreting the results, to disseminating the research findings into practice. This co-production of research increases the likelihood that the results of a project will be relevant to end-users, thereby improving the possibility of uptake and application.

End-of-grant KT

The dissemination of findings generated from research once a project is completed, depending on the extent to which there are mature findings appropriate for dissemination. Researchers who undertake traditional dissemination activities such as publishing in peer-reviewed journals and presenting their research at conferences and workshops are engaging in end-of-grant knowledge translation.

http://www.cihr-irsc.gc.ca/e/45321.html#a3

Relationships, interactions and dialogue between multidisciplinary research groups and stakeholder groups create buy-in and are crucial for successful KT. These interactions increase the likely uptake of your knowledge in some form, be it to change behaviour, guide discussions, or for more tangible changes. The inclusion of a variety of stakeholders, from policymakers, planners and managers to private sector industries and consumer groups within different areas of health care and health policy, helps to shape questions and solutions while representing the interests of research user groups (Sudsawad). Additionally, the engagement between researchers and research user groups facilitates an understanding of each other's environments, which helps the utilisation process (Mitton). This evidence points to the importance of early engagement and a more integrated process of translation, hence altering where the research process begins and ends.

Other types of research may not need consistent engagement, but I would argue that a well thought out KT plan would add significant value to any research endeavour, and this would be considered at the research development stage, hence the “maybe”. Additionally, we should be finding additional ways of sharing new knowledge from our research, be it sharing with an academic audience or a non-academic audience; this would require moving the finish line just a little.

I first started to ponder this question at the Medical Research Future Fund (MRFF) Public Forum held in Melbourne. It was explicitly stated that the fund was to be used to fund research and not translation. However, I think that translation should be considered an integral part of the process of research, and of how we share that work with others. The MRFF has an opportunity to move the goal posts and change where research begins and ends with its funding process, particularly if it is to meet its strategic objectives, all of which are pointed heavily toward translation and impact.

Research Design in Business and Management, pp 53–84

Writing up a Research Report

- Stefan Hunziker &

- Michael Blankenagel

- First Online: 04 January 2024

A research report is one big argument about how and why you came up with your conclusions. To make it a convincing argument, a typical guiding structure has developed. In the different chapters, there are distinct issues that need to be addressed to explain to the reader why your conclusions are valid. The governing principle for writing the report is full disclosure: to explain everything and ensure replicability by another researcher.

Author information

Authors and affiliations.

Wirtschaft/IFZ, Campus Zug-Rotkreuz, Hochschule Luzern, Zug-Rotkreuz, Zug, Switzerland

Stefan Hunziker & Michael Blankenagel

Corresponding author

Correspondence to Stefan Hunziker .

Copyright information

© 2024 Springer Fachmedien Wiesbaden GmbH, part of Springer Nature

About this chapter

Cite this chapter.

Hunziker, S., Blankenagel, M. (2024). Writing up a Research Report. In: Research Design in Business and Management. Springer Gabler, Wiesbaden. https://doi.org/10.1007/978-3-658-42739-9_4

DOI : https://doi.org/10.1007/978-3-658-42739-9_4

Published : 04 January 2024

Publisher Name : Springer Gabler, Wiesbaden

Print ISBN : 978-3-658-42738-2

Online ISBN : 978-3-658-42739-9

eBook Packages : Business and Management Business and Management (R0)

Princeton Correspondents on Undergraduate Research

Flexibility of Research: What to Do When You Feel like You’ve Hit a Dead End

Research can be a truly thrilling experience–interesting data, new findings, surprising collections. However, research can also be incredibly frustrating, namely when you feel like your work isn’t going anywhere.

If you’ve ever been really excited about a topic, done a load of research, and still found that you aren’t making forward progress, this post is for you. I’m talking about hitting tough obstacles in your research–walls you can’t seem to get over–reaching what seems like a dead end.

I’ve been there before (oh, more times than I would like to admit), and what I’ve found is that normally, this frustrating lack of a solution is not an indicator that your research is ‘wrong’ or isn’t worthwhile. In fact, it might actually mean that there is another question buried in your topic that needs to be addressed first.

I learned this after experiencing a dead-end feeling just last year as I wrote my R3 (that’s right! flashback to everyone’s favorite: Writing Sem!*). My original plan had been to interpret a modern still life (picture the abstract still lifes of Stuart Davis and Arthur Dove) using symbols and traditions from realistic still lifes (imagine a Renaissance painting of a fruit bowl and flowers). Specifically, I had selected Morris Graves’s August Still Life, which was then on display in the Princeton University Art Museum in the exhibit The Artist Sees Differently: Modern Still Lifes from the Phillips Collection. I hoped to use a change-over-time comparison between modern and Renaissance still lifes to discover how modern still lifes may or may not showcase the unique characteristics of the modern age.

It was a great plan, but as I dug further into my research, I quickly hit a roadblock: art historians don’t interpret still lifes.

Seriously! I had pulled almost every book in the Princeton library system on still life paintings; they were all focused only on the formal elements of the works (such as compositional balance, technique, color, etc.)! I could barely find any instruction on how to interpret a still life painting or any evidence that anyone ever had!

I thought back to the exhibit I had picked my painting from; it was an entire exhibit of still life paintings. I thought to myself, ‘Well, maybe the curator of this exhibit can tell me a little bit more about still lifes! He designed a whole exhibition of them! Surely there was some method to his interpretation of each work that helped him arrange the works.’

I arranged to meet with T. Barton Thurber, the Associate Director for Collections and Exhibitions, who had curated the exhibit I was curious about. We got together and discussed the genre. It turns out he had organized the gallery based on formal relationships and style because he too had worries about interpreting still lifes.

This was initially extremely disappointing, but now I had a new motive for my essay:

Art historians, including Mr. Thurber, were choosing not to engage in the interpretation of still lifes. I needed to explore why this is the case. I realized the problem wasn’t that art historians did not want to interpret still lifes; it was the lack of a method. As Thurber expressed, art historians didn’t trust that there was a method that wasn’t over-interpretive (making up meaning out of little evidence). By studying this “why,” I was better able to develop a method for interpreting these overlooked works of art that, in my opinion, addressed the concerns of art historians like Mr. Thurber.

My experience should remind us that research is a flexible process; you must follow where your evidence takes you. Research is variable; you cannot control the answers you will receive. Nonetheless, new information can always push you forward. If you are hitting a roadblock in your research, maybe that doesn’t mean you need to pick a new topic but rather that there is a different question, hidden in your topic, that you need to explore first!

— Raya Ward, Natural Sciences Correspondent

* If you haven’t taken writing sem yet, your R3 is your final research paper in the class where you have almost full flexibility to pick your topic.

- USC Libraries

- Research Guides

Organizing Your Social Sciences Research Paper

The Research Problem/Question

A research problem is a definite or clear expression [statement] about an area of concern, a condition to be improved upon, a difficulty to be eliminated, or a troubling question that exists in scholarly literature, in theory, or within existing practice that points to a need for meaningful understanding and deliberate investigation. A research problem does not state how to do something, offer a vague or broad proposition, or present a value question. In the social and behavioral sciences, studies are most often framed around examining a problem that needs to be understood and resolved in order to improve society and the human condition.

Bryman, Alan. “The Research Question in Social Research: What is its Role?” International Journal of Social Research Methodology 10 (2007): 5-20; Guba, Egon G., and Yvonna S. Lincoln. “Competing Paradigms in Qualitative Research.” In Handbook of Qualitative Research . Norman K. Denzin and Yvonna S. Lincoln, editors. (Thousand Oaks, CA: Sage, 1994), pp. 105-117; Pardede, Parlindungan. “Identifying and Formulating the Research Problem." Research in ELT: Module 4 (October 2018): 1-13; Li, Yanmei, and Sumei Zhang. "Identifying the Research Problem." In Applied Research Methods in Urban and Regional Planning . (Cham, Switzerland: Springer International Publishing, 2022), pp. 13-21.

Importance of...

The purpose of a problem statement is to:

- Introduce the reader to the importance of the topic being studied. The reader is oriented to the significance of the study.

- Anchor the research questions, hypotheses, or assumptions to follow. It offers a concise statement about the purpose of your paper.

- Place the topic into a particular context that defines the parameters of what is to be investigated.

- Provide the framework for reporting the results and indicate what is probably necessary to conduct the study and explain how the findings will present this information.

In the social sciences, the research problem establishes the means by which you must answer the "So What?" question. This declarative question refers to a research problem surviving the relevancy test [the quality of a measurement procedure that provides repeatability and accuracy]. Note that answering the "So What?" question requires a commitment on your part to not only show that you have reviewed the literature, but that you have thoroughly considered the significance of the research problem and its implications applied to creating new knowledge and understanding or informing practice.

To survive the "So What" question, problem statements should possess the following attributes:

- Clarity and precision [a well-written statement does not make sweeping generalizations and irresponsible pronouncements; it also does not include unspecific determinates like "very" or "giant"],

- Demonstration of a researchable topic or issue [i.e., feasibility of conducting the study is based upon access to information that can be effectively acquired, gathered, interpreted, synthesized, and understood],

- Identification of what would be studied, while avoiding the use of value-laden words and terms,

- Identification of an overarching question or small set of questions accompanied by key factors or variables,

- Identification of key concepts and terms,

- Articulation of the study's conceptual boundaries or parameters or limitations,

- Some generalizability in regard to applicability and bringing results into general use,

- Conveyance of the study's importance, benefits, and justification [i.e., regardless of the type of research, it is important to demonstrate that the research is not trivial],

- Absence of unnecessary jargon or overly complex sentence constructions; and,

- Conveyance of more than the mere gathering of descriptive data providing only a snapshot of the issue or phenomenon under investigation.

Bryman, Alan. “The Research Question in Social Research: What is its Role?” International Journal of Social Research Methodology 10 (2007): 5-20; Brown, Perry J., Allen Dyer, and Ross S. Whaley. "Recreation Research—So What?" Journal of Leisure Research 5 (1973): 16-24; Castellanos, Susie. Critical Writing and Thinking. The Writing Center. Dean of the College. Brown University; Ellis, Timothy J. and Yair Levy Nova. "Framework of Problem-Based Research: A Guide for Novice Researchers on the Development of a Research-Worthy Problem." Informing Science: the International Journal of an Emerging Transdiscipline 11 (2008); Thesis and Purpose Statements. The Writer’s Handbook. Writing Center. University of Wisconsin, Madison; Thesis Statements. The Writing Center. University of North Carolina; Tips and Examples for Writing Thesis Statements. The Writing Lab and The OWL. Purdue University; Selwyn, Neil. "‘So What?’…A Question that Every Journal Article Needs to Answer." Learning, Media, and Technology 39 (2014): 1-5; Shoket, Mohd. "Research Problem: Identification and Formulation." International Journal of Research 1 (May 2014): 512-518.

Structure and Writing Style

I. Types and Content

There are four general conceptualizations of a research problem in the social sciences:

- Casuist Research Problem -- this type of problem relates to the determination of right and wrong in questions of conduct or conscience by analyzing moral dilemmas through the application of general rules and the careful distinction of special cases.

- Difference Research Problem -- typically asks the question, “Is there a difference between two or more groups or treatments?” This type of problem statement is used when the researcher compares or contrasts two or more phenomena. This is a common approach to defining a problem in the clinical social sciences or behavioral sciences.

- Descriptive Research Problem -- typically asks the question, "what is...?" with the underlying purpose to describe the significance of a situation, state, or existence of a specific phenomenon. This problem is often associated with revealing hidden or understudied issues.

- Relational Research Problem -- suggests a relationship of some sort between two or more variables to be investigated. The underlying purpose is to investigate specific qualities or characteristics that may be connected in some way.

A problem statement in the social sciences should contain:

- A lead-in that helps ensure the reader will maintain interest over the study,

- A declaration of originality [e.g., mentioning a knowledge void or a lack of clarity about a topic that will be revealed in the literature review of prior research],

- An indication of the central focus of the study [establishing the boundaries of analysis], and

- An explanation of the study's significance or the benefits to be derived from investigating the research problem.

NOTE : A statement describing the research problem of your paper should not be viewed as a thesis statement that you may be familiar with from high school. Given the content listed above, a description of the research problem is usually a short paragraph in length.

II. Sources of Problems for Investigation

The identification of a problem to study can be challenging, not because there's a lack of issues that could be investigated, but due to the challenge of formulating an academically relevant and researchable problem which is unique and does not simply duplicate the work of others. To facilitate how you might select a problem from which to build a research study, consider these sources of inspiration:

Deductions from Theory This relates to deductions made from social philosophy or generalizations embodied in life and in society that the researcher is familiar with. These deductions from human behavior are then placed within an empirical frame of reference through research. From a theory, the researcher can formulate a research problem or hypothesis stating the expected findings in certain empirical situations. The research asks the question: “What relationship between variables will be observed if theory aptly summarizes the state of affairs?” One can then design and carry out a systematic investigation to assess whether empirical data confirm or reject the hypothesis, and hence, the theory.

Interdisciplinary Perspectives Identifying a problem that forms the basis for a research study can come from academic movements and scholarship originating in disciplines outside of your primary area of study. This can be an intellectually stimulating exercise. A review of pertinent literature should include examining research from related disciplines that can reveal new avenues of exploration and analysis. An interdisciplinary approach to selecting a research problem offers an opportunity to construct a more comprehensive understanding of a very complex issue than any single discipline may be able to provide.

Interviewing Practitioners The identification of research problems about particular topics can arise from formal interviews or informal discussions with practitioners who provide insight into new directions for future research and how to make research findings more relevant to practice. Discussions with experts in the field, such as teachers, social workers, health care providers, lawyers, business leaders, etc., offer the chance to identify practical, “real world” problems that may be understudied or ignored within academic circles. This approach also provides some practical knowledge which may help in the process of designing and conducting your study.

Personal Experience Don't undervalue your everyday experiences or encounters as worthwhile problems for investigation. Think critically about your own experiences and/or frustrations with an issue facing society or related to your community, your neighborhood, your family, or your personal life. This can be derived, for example, from deliberate observations of certain relationships for which there is no clear explanation or witnessing an event that appears harmful to a person or group or that is out of the ordinary.

Relevant Literature The selection of a research problem can be derived from a thorough review of pertinent research associated with your overall area of interest. This may reveal where gaps exist in understanding a topic or where an issue has been understudied. Research may be conducted to: 1) fill such gaps in knowledge; 2) evaluate if the methodologies employed in prior studies can be adapted to solve other problems; or, 3) determine if a similar study could be conducted in a different subject area or applied in a different context or to a different study sample [i.e., different setting or different group of people]. Also, authors frequently conclude their studies by noting implications for further research; read the conclusion of pertinent studies because statements about further research can be a valuable source for identifying new problems to investigate. The fact that a researcher has identified a topic worthy of further exploration validates the fact it is worth pursuing.

III. What Makes a Good Research Statement?

A good problem statement begins by introducing the broad area in which your research is centered, gradually leading the reader to the more specific issues you are investigating. The statement need not be lengthy, but a good research problem should incorporate the following features:

1. Compelling Topic The problem chosen should be one that motivates you to address it, but simple curiosity is not a good enough reason to pursue a research study because this does not indicate significance. The problem that you choose to explore must be important to you, but it must also be viewed as important by your readers and by the larger academic and/or social community that could be impacted by the results of your study.

2. Supports Multiple Perspectives The problem must be phrased in a way that avoids dichotomies and instead supports the generation and exploration of multiple perspectives. A general rule of thumb in the social sciences is that a good research problem is one that would generate a variety of viewpoints from a composite audience made up of reasonable people.

3. Researchability This isn't a real word but it represents an important aspect of creating a good research statement. It seems a bit obvious, but you don't want to find yourself in the midst of investigating a complex research project and realize that you don't have enough prior research to draw from for your analysis. There's nothing inherently wrong with original research, but you must choose research problems that can be supported, in some way, by the resources available to you. If you are not sure if something is researchable, don't assume that it isn't if you don't find information right away--seek help from a librarian!

NOTE: Do not confuse a research problem with a research topic. A topic is something to read and obtain information about, whereas a problem is something to be solved or framed as a question raised for inquiry, consideration, or solution, or explained as a source of perplexity, distress, or vexation. In short, a research topic is something to be understood; a research problem is something that needs to be investigated.

IV. Asking Analytical Questions about the Research Problem

Research problems in the social and behavioral sciences are often analyzed around critical questions that must be investigated. These questions can be explicitly listed in the introduction [i.e., "This study addresses three research questions about women's psychological recovery from domestic abuse in multi-generational home settings..."], or, the questions are implied in the text as specific areas of study related to the research problem. Explicitly listing your research questions at the end of your introduction can help in designing a clear roadmap of what you plan to address in your study, whereas, implicitly integrating them into the text of the introduction allows you to create a more compelling narrative around the key issues under investigation. Either approach is appropriate.

The number of questions you attempt to address should be based on the complexity of the problem you are investigating and what areas of inquiry you find most critical to study. Practical considerations, such as the length of the paper you are writing or the availability of resources to analyze the issue, can also factor in how many questions to ask. In general, however, there should be no more than four research questions underpinning a single research problem.

Given this, well-developed analytical questions can focus on any of the following:

- Highlights a genuine dilemma, area of ambiguity, or point of confusion about a topic open to interpretation by your readers;

- Yields an answer that is unexpected and not obvious rather than inevitable and self-evident;

- Provokes meaningful thought or discussion;

- Raises the visibility of the key ideas or concepts that may be understudied or hidden;

- Suggests the need for complex analysis or argument rather than a basic description or summary; and,

- Offers a specific path of inquiry that avoids eliciting generalizations about the problem.

NOTE: Questions of how and why concerning a research problem often require more analysis than questions about who, what, where, and when. You should still ask yourself these latter questions, however. Thinking introspectively about the who, what, where, and when of a research problem can help ensure that you have thoroughly considered all aspects of the problem under investigation and helps define the scope of the study in relation to the problem.

V. Mistakes to Avoid

Beware of circular reasoning! Do not state the research problem as simply the absence of the thing you are suggesting. For example, if you propose the following, "The problem in this community is that there is no hospital," this only leads to a research problem where:

- The need is for a hospital

- The objective is to create a hospital

- The method is to plan for building a hospital, and

- The evaluation is to measure if there is a hospital or not.

This is an example of a research problem that fails the "So What?" test. In this example, the problem does not reveal the relevance of why you are investigating the fact there is no hospital in the community [e.g., perhaps there's a hospital in the community ten miles away]; it does not elucidate the significance of why one should study the fact there is no hospital in the community [e.g., that hospital in the community ten miles away has no emergency room]; the research problem does not offer an intellectual pathway towards adding new knowledge or clarifying prior knowledge [e.g., the county in which there is no hospital already conducted a study about the need for a hospital, but it was conducted ten years ago]; and, the problem does not offer meaningful outcomes that lead to recommendations that can be generalized for other situations or that could suggest areas for further research [e.g., the challenges of building a new hospital serve as a case study for other communities].

Alvesson, Mats and Jörgen Sandberg. “Generating Research Questions Through Problematization.” Academy of Management Review 36 (April 2011): 247-271 ; Choosing and Refining Topics. Writing@CSU. Colorado State University; D'Souza, Victor S. "Use of Induction and Deduction in Research in Social Sciences: An Illustration." Journal of the Indian Law Institute 24 (1982): 655-661; Ellis, Timothy J. and Yair Levy Nova. "Framework of Problem-Based Research: A Guide for Novice Researchers on the Development of a Research-Worthy Problem." Informing Science: the International Journal of an Emerging Transdiscipline 11 (2008); How to Write a Research Question. The Writing Center. George Mason University; Invention: Developing a Thesis Statement. The Reading/Writing Center. Hunter College; Problem Statements PowerPoint Presentation. The Writing Lab and The OWL. Purdue University; Procter, Margaret. Using Thesis Statements. University College Writing Centre. University of Toronto; Shoket, Mohd. "Research Problem: Identification and Formulation." International Journal of Research 1 (May 2014): 512-518; Trochim, William M.K. Problem Formulation. Research Methods Knowledge Base. 2006; Thesis and Purpose Statements. The Writer’s Handbook. Writing Center. University of Wisconsin, Madison; Thesis Statements. The Writing Center. University of North Carolina; Tips and Examples for Writing Thesis Statements. The Writing Lab and The OWL. Purdue University; Pardede, Parlindungan. “Identifying and Formulating the Research Problem." Research in ELT: Module 4 (October 2018): 1-13; Walk, Kerry. Asking an Analytical Question. [Class handout or worksheet]. Princeton University; White, Patrick. Developing Research Questions: A Guide for Social Scientists . New York: Palgrave McMillan, 2009; Li, Yanmei, and Sumei Zhang. "Identifying the Research Problem." In Applied Research Methods in Urban and Regional Planning . (Cham, Switzerland: Springer International Publishing, 2022), pp. 13-21.

- Last Updated: Apr 19, 2024 11:16 AM

- URL: https://libguides.usc.edu/writingguide

- 6 Moments of Research “Failure” and How to Deal With Them

- Coates Library Blog

“The artist, perhaps more than most people, inhabits failure, degrees of failure and accommodation and compromise: but the terms of his failure are generally secret” observes Joyce Carol Oates in her essay, “Notes on Failure” (231).

Substitute “researcher” for “artist” and Oates’ description still rings true. Yet while the failures and secrets of the research process are par for the course, we often overlook this fact and think that our own particular failures are unique and even shamefully so.

For this reason, for all the summer researchers taking on bigger projects than they have experienced in their classes so far, for all the incoming first-years who will soon receive more rigorous research assignments than they have previously encountered, I hope this brief catalog of common research “failures” might, if nothing else, do away with some of the unnecessary secrecy.

1. Procrastination

Having trouble getting started can feel like failure. If this is you, you may find this NYTimes article helpful. Journalist Charlotte Lieberman interviews several psychology researchers who describe procrastination not as a task or productivity problem, but an emotional one.

If you are struggling to get started, it might be worth taking a minute to ask yourself what exactly you’re afraid to face. Are you unsure of what you’re supposed to do and scared to ask? Worried you’re not smart enough to do the research? Bored or uninterested and therefore avoiding the fact that you don’t like whatever it is you now have to spend the whole summer working on?

Facing these feelings or even talking to your professor about them may help you break through your slump.

2. Finding sources

In doing library or archival research, sometimes it can feel like a failure if the sources you hoped to find don’t immediately appear. In this case, there is one very easy step you can take: meet with a librarian to see if your assessment of the situation is true!

Of course, it’s also possible that what you wanted to find does not exist. In this case, a librarian or professor can still help, by confirming the gap or absence you have noted, and also in helping you figure out what to do with that information (hint: the lack of information/attention on a topic is itself a finding, and as such a starting point, not an ending).

3. Staying focused

If you are anything like me or a number of other Trinity students, this is a common challenge. I often cast my research net wider and wider until it becomes impossible to pull in and sort through. For me, one way to mitigate this tendency is to involve others in my process.

Try talking through your ideas and questions with someone else, such as a friend, professor, or librarian. See if the connections and project scope you see in your mind actually make sense when you say them out loud. Just the act of talking through your ideas may help you make sense of them yourself! Alternatively, talking to someone else can also help you determine whether different components of the project could stand as separate projects in their own right.

Writing can be useful at this stage, too. Sometimes the urge to keep researching is really about something else—like avoiding writing and committing your thoughts to paper.

4. Having something to say

This, I think, can be an especially secretive secret. Who wants to admit they don’t have anything compelling to say about their research subject?

However, especially in assigned research or research under a time constraint, you may not always have an immediate or clear response to your research. Or perhaps your findings are not as striking as you hoped, or your hypothesis is not confirmed.

Remember, even the lack of a dramatic finding is still a finding; your research is not, in the end, only about you. It’s also about the community and conversation around your subject. What may seem like a small contribution to you is still part of shaping that community and future research.

5. Organizing and documenting

The failure to organize your research—the process, sources, methods, etc.—can sneak up on you. At least it does for me!

It might be small things, like not writing down the exact way you came up with a particular result, or getting a little sloppy with your citations. And yet, these little details matter. They are what gives your work polish and credibility.

You don’t have to be a “Type A” personality in order to become more organized. Rather, think about organization in research as an ongoing practice. By paying attention to where you’ve missed the mark this time, you can pinpoint small ways to improve the next time.

6. Sharing your work

Usually in the form of writing or presentation, this stage can be laden with anxiety. It also raises the possibility of public failure: what if you submit your work and it’s rejected? What if you bomb your presentation, or you don’t get the grade you hoped for on your paper?

It is difficult not to interpret rejection or an undesirable grade as failure. After all, if success is about meeting a specific expectation, and you do not meet it—that is one definition of failure. And yet, the story you tell yourself about that failure may do more damage than the failure itself.

My own story

Especially when the stakes are high, it’s easy to interpret failure as an ending. As I learned, though, it isn’t. While some failures may indeed mark the end of things going “the way they’re supposed to” or “according to plan,” they are never the final word. In my experience, and in the experiences of many students I’ve worked with on research projects, moments of doubt, fear, and failure often give way to later feelings of accomplishment, pride, and growth. In fact, this pattern is so common that information science researchers have documented it.

Engaging in research will no doubt bring you face to face with some form of failure. But you don’t have to live in that experience alone, in secret. Failure is a normal part of research, learning, and growing as a person.

At Trinity, there are always people ready to help you figure out how to get to the next part of the story. Failure is not an ending.

Kuhlthau, Carol Collier. “Information Search Process.” Rutgers.edu. 5 June 2019, http://wp.comminfo.rutgers.edu/ckuhlthau/information-search-process/

Lieberman, Charlotte. “Why You Procrastinate (It Has Nothing to Do With Self-Control).” New York Times, 25 March 2019, https://www.nytimes.com/2019/03/25/smarter-living/why-you-procrastinate-it-has-nothing-to-do-with-self-control.html

Oates, Joyce Carol. “Notes on Failure.” The Hudson Review, vol. 35, no. 2, 1982, pp. 231–245. JSTOR, www.jstor.org/stable/3850783.


IResearchNet

Self-Defeating Behavior

Self-Defeating Behavior Definition

For social psychologists, a self-defeating behavior is any behavior that normally leads to a result the person doing it does not want. If you are trying to accomplish some goal, and something you do makes it less likely that you will reach that goal, then that is a self-defeating behavior. If the goal is reached, but the ways you used to reach the goal cause more bad things to happen than the positive things you get from achieving the goal, that is also self-defeating behavior. Social psychologists have been studying self-defeating behaviors for at least 30 years. And although they have identified several things that seem to lead to self-defeating behaviors, much more can be learned about what self-defeating behaviors have in common, and how to get people to reduce the impact of these behaviors in their lives.

Self-Defeating Behavior Background and History


The group revising the DSM in the 1980s wanted to include a disorder where people showed “a pervasive pattern of self-defeating behaviors.” Some people didn’t want this to be included because they said that there wasn’t enough research to show that a disorder like this really existed; some people didn’t want it to be included because they said that the behaviors that supposedly made up the self-defeating personality disorder were really parts of other personality disorders; and finally, some people didn’t want it to be included because they were afraid that the disorder would be biased against women and would excuse spouse abusers, blaming their victims by claiming that the victims had self-defeating personality disorder.

In the edition of the DSM published in 1987 (called the DSM-III-R), self-defeating personality disorder was included in an appendix and was not considered an official diagnosis. More recent editions of the DSM do not mention the self-defeating personality disorder at all.

Even though social psychologists were inspired by this controversy, they are interested in studying behaviors of normal people, not those of people who are mentally ill. Although some psychiatrists believe that all humans are driven to harm themselves, most people are not motivated in this way. Most humans are interested in accomplishing their goals, not in harming themselves.

Types of Self-Defeating Behavior

Social psychologists have divided self-defeating behaviors into two types. One type is called counterproductive behaviors. A counterproductive behavior happens when people try to get something they want, but the way they try to get it ends up not being a good one. One type of counterproductive behavior occurs when people persevere at something beyond the time that it is realistic for them to achieve the desired outcome. For example, students taking a class, and doing very poorly, sometimes refuse to drop the class. They think that if they stick it out, they will be able to pull their grades up and pass the class. But, it may just be too late for some, or they may not have the ability to really pass the class. Most students’ goals are to get a degree with as high a grade point average as possible, so refusing to drop the class is a self-defeating behavior. Counterproductive behaviors usually happen because the person has a wrong idea either about himself or herself or about the situation the person is in. The students have an incorrect idea about their own abilities; they think they can succeed, but they can’t.

The second type of self-defeating behavior is called trade-offs. We make trade-offs in our behavior all the time. For example, you may decide not to go to a party so you can study for an exam. This is a trade-off: You are trading the fun you will have at the party for the benefit you will get from studying (a better grade).

This example of a trade-off is not self-defeating. You are probably going to come out a winner: The benefit of studying will, in the end, outweigh the benefit of going to the party. But, some kinds of trade-offs are self-defeating: The cost that you have to accept is greater than the benefit that you end up getting. One example is neglecting to take care of yourself physically. When people don’t exercise, go to the dentist, or follow the doctor’s orders, they are risking their health to avoid some short-term pain or discomfort (such as the discomfort of exercise or the anxiety that the dentist causes).

Another example of a self-defeating trade-off is called self-handicapping. Self-handicapping is when people do something to make their success on a task less likely to happen. People do this so that they will have a built-in excuse if they fail. For example, students may get drunk the night before a big exam. If they do poorly on the exam, they have a built-in excuse: They didn’t study and they were hungover. This way they avoid thinking that they don’t have the ability to do well in the class.

Some common self-defeating behaviors represent a combination of counterproductive behaviors and trade-offs. Procrastination is a familiar example. When you think about why people procrastinate, you probably think about it as a trade-off. People want to do something more fun, or something that is less difficult, or something that allows them to grow or develop more, instead of the thing they are putting off. But, sometimes people explain why they procrastinate in another way: That they do better work if they wait until the last minute. If this is really the reason people procrastinate (instead of something people just say to justify their procrastination), then it is a counterproductive strategy; they believe that they will do better work if they wait until the last minute, but that is not usually the case. (Research shows that college students who procrastinate get worse grades, have more stress, and are more likely to get sick.)

Alcohol or drug abuse is another self-defeating behavior. Many people use alcohol and drugs responsibly, and do it to gain pleasure or pain relief. But for addicts, and in some situations for anyone, substance use is surely self-defeating. Substance use may be a trade-off: A person trades the costs of using drugs or alcohol (health risks, addiction, embarrassing or dangerous behavior, legal problems) for benefits (feeling good, not having to think about one’s inadequacies). Usually over the long run, however, the costs are much greater than the benefits.

Even suicide can be looked at as either a self-defeating trade-off or counterproductive behavior. People who commit suicide are trying to escape from negative things in their life. They are trading off the fear of death, and the good things in life, because they think the benefit of no longer feeling the way they do will be greater than what they are giving up. But, suicide can also be thought of as a counterproductive behavior. People may think that taking their life will allow them to reach a certain goal (not having problems).

Causes and Consequences of Self-Defeating Behavior

Causes of different self-defeating behaviors vary; however, most self-defeating behaviors have some things in common. People who engage in self-defeating behaviors often feel a threat to their egos or self-esteem; there is usually some element of bad mood involved in self-defeating behaviors. And, people who engage in self-defeating behaviors often focus on the short-term consequences of their behavior, and ignore or underestimate the long-term consequences.

Procrastination is an example that combines all three of these factors. One reason people procrastinate is that they are afraid that when they do the thing they are putting off, it will show that they are not as good or competent as they want to be or believe they are (threat to self). Also, people procrastinate because the thing they put off causes anxiety (a negative emotion). Finally, people who procrastinate are focusing on the short-term effects of their behavior (it will feel good right now to watch TV instead of do my homework), but they are ignoring the long-term consequences (if I put off my homework, either I’ll get an F or I will have to pull an all-nighter to get it done).

These three common causes are all related to each other. If you have a goal for yourself, or if other people expect certain things from you, and you fail or think you will fail to meet the goal, this is a threat to your self-esteem or ego. That will usually make you feel bad (negative mood). So, ego-threats make you have negative moods.

But, negative moods also can lead to ego threats. When people are in negative moods, they set higher standards or goals for themselves. So, this will make them more likely to fail. Here is a vicious cycle: Failing to meet your goals is a threat to your ego, which leads to negative emotion, which leads you to set higher standards, which makes you fail more. Negative moods also can lead you to think more about the immediate consequences of your actions, instead of the long-term consequences. This, too, can make people do something self-defeating.

References:

- Baumeister, R. F. (1997). Esteem threat, self-regulatory breakdown, and emotional distress as factors in self-defeating behavior. Review of General Psychology, 1, 145-174.

- Baumeister, R. F., & Scher, S. J. (1988). Self-defeating behavior patterns among normal individuals: Review and analysis of common self-destructive tendencies. Psychological Bulletin, 104, 3-22.

- Curtis, R. C. (Ed.). (1989). Self-defeating behaviors: Experimental research, clinical impressions, and practical implications. New York: Plenum.

- Fiester, S. J. (1995). Self-defeating personality disorder. In W. J. Livesley (Ed.), The DSM-IV personality disorders (pp. 341-358). New York: Guilford Press.

- Widiger, T. A. (1995). Deletion of self-defeating and sadistic personality disorders. In W. J. Livesley (Ed.), The DSM-IV personality disorders (pp. 359-373). New York: Guilford Press.


Evaluating Sources

- Domain Endings

Domain endings are the end part of a URL (.com, .org, .edu, etc.). Sometimes the domain ending can give you a clue to a website's purpose. While domain endings can give you some ideas about a website, they should not be the only way you determine if a website is credible or not.
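As a small illustration of what a domain ending is, the sketch below pulls the final label out of a URL's host name using Python's standard `urllib.parse`. The function name `domain_ending` is made up for this example, and the result is only a first clue about a site, not a credibility check.

```python
from urllib.parse import urlparse


def domain_ending(url: str) -> str:
    """Return the last dot-separated label of the host, e.g. '.edu'.

    Hypothetical helper for illustration only. It expects a full URL
    with a scheme (https://...) and returns '' when no host is found.
    """
    host = urlparse(url).hostname or ""
    if "." not in host:
        return ""
    return "." + host.rsplit(".", 1)[-1]


if __name__ == "__main__":
    for url in (
        "https://libguides.sccsc.edu/evaluatingsources",
        "https://www.nytimes.com/",
    ):
        print(url, "->", domain_ending(url))
```

Knowing that a site ends in .edu or .org tells you nothing yet about who published the page, so the ending is best treated as a prompt for further checking.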

.com stands for commercial sites, but can really be anything. Below are some examples of .com sites you are probably familiar with:

- Huffington Post: generally considered to have a liberal slant, but does have articles with legitimate information and credible sources.

- NBC: official television network with information about news and current events; not always guaranteed to be accurate, but in general a credible .com website.

- The Atlantic: considered to have a moderate worldview, their news articles are considered to be of a higher quality.

- Biography: a website for the television channel Biography. Often a good source for newer figures who have not had time to be printed in more formal biographical publications.

- Glamour: may have occasional articles that are relevant for specific topics. It depends on the context and the assignment needs. If a student is preparing a speech on a popular culture topic, Glamour may be an acceptable source of information.

.org websites should be organizations, but again, they can really be anything, since the purchase of .org domain names is not restricted. The Modern Language Association, American Cancer Society, and the American Welding Society are all examples of respected, well-known organizations, and each is considered to be "the" organization that drives its discipline.

- NPR: an example of a well-known, respected news organization.

- Wikipedia: often students are allowed to use .org websites, but not Wikipedia. This creates a little conflict in the information relayed to students. While Wikipedia is a .org website, it can technically be edited by anyone, which is why it is not always the most credible source to use for an assignment.

- Martin Luther King: sounds respectable. It is not obvious at first glance, but if you look into the publisher of the website, you will find that the group behind it, Stormfront, is a white nationalist organization with a very biased opinion of Dr. King.

- Institute for Historical Review: this site looks and sounds official. The article, "Context and Perspective in the 'Holocaust' Controversy," is published in a journal with the biography of the author provided. But what do we know about the organization? The journal is actually published by a well-known Holocaust denial group. And if you look at the author's credibility, you find that he is not a historian, but a professor of electrical engineering. To further raise suspicions, most of the citations in the article are to other works by the author.

.edu websites should contain credible materials; at least, that is our expectation. The .edu domain is restricted for purchase, although a few sites that are not legitimate schools have been grandfathered in. Also keep in mind that schools often offer web space to students for student work. Many schools also offer a digital commons to share student and faculty work that has not been peer-reviewed. The sites look professional, so it is important to understand what type of information you are looking at.

- "Drug Shortages: The Problem of Inadequate Profits": this is a student paper located in the Harvard Digital Commons. The paper looks very similar to any other article you might locate in a database, meaning it may be difficult to determine this is another student's work.

- "Researcher Dispels Myth of Dioxins and Plastic Water Bottles": This article on the Johns Hopkins website is written by a professor who has a PhD in environmental science and works in the Department of Public Health at the university. There is a measure of credibility to this article, but it has not been formally published or peer-reviewed. You will need to consider whether the information is authoritative for your information need.

- "Gender Bias in Microlending: Do Opposite Attract?": this example is a student thesis for a master's program. There should be some oversight by the student's professors, but the authority of the information would be dependent on how the student is using it. Is a master's thesis acceptable material for your assignment?

Miscellaneous Domain

Miscellaneous domain endings have a lot of variety, so it is important to look closely at the source itself, and not the domain ending on its own:

- .int: stands for international. The NATO website and the World Health Organization (WHO) are great examples of credible websites ending with this domain.

- .uk: the country code for the United Kingdom. The official website of the UK Parliament uses this domain.

- .net: could be any type of website, like the Institute of War and Peace Reporting, a charitable foundation that reports on the safety and events occurring in countries in upheaval around the world.


- Last Updated: Jan 17, 2024 9:39 AM

- URL: https://libguides.sccsc.edu/evaluatingsources



The Research-Backed Benefits of Daily Rituals

- Michael I. Norton

A survey of more than 130 HBR readers asked how they use rituals to start their days, psych themselves up for stressful challenges, and transition when the workday is done.

While some may cringe at forced corporate rituals, research shows that personal and team rituals can actually benefit the way we work. Drawing on the authors’ decade of work on the topic, plus a survey of nearly 140 HBR readers, this article explores the ways rituals can set us up for success before work, get us psyched up for important presentations, foster a strong team culture, and help us wind down at the end of the day.

“Give me a W! Give me an A! Give me an L! Give me a squiggly! Give me an M! Give me an A! Give me an R! Give me a T!”

- Michael I. Norton is the Harold M. Brierley Professor of Business Administration at the Harvard Business School. He is the author of The Ritual Effect and co-author of Happy Money: The Science of Happier Spending. His research focuses on happiness, well-being, rituals, and inequality.


What the data says about abortion in the U.S.

Pew Research Center has conducted many surveys about abortion over the years, providing a lens into Americans’ views on whether the procedure should be legal, among a host of other questions.

In a Center survey conducted nearly a year after the Supreme Court’s June 2022 decision that ended the constitutional right to abortion, 62% of U.S. adults said the practice should be legal in all or most cases, while 36% said it should be illegal in all or most cases. Another survey conducted a few months before the decision showed that relatively few Americans take an absolutist view on the issue.

Find answers to common questions about abortion in America, based on data from the Centers for Disease Control and Prevention (CDC) and the Guttmacher Institute, which have tracked these patterns for several decades:

- How many abortions are there in the U.S. each year?

- How has the number of abortions in the U.S. changed over time?

- What is the abortion rate among women in the U.S.? How has it changed over time?

- What are the most common types of abortion?

- How many abortion providers are there in the U.S., and how has that number changed?

- What percentage of abortions are for women who live in a different state from the abortion provider?

- What are the demographics of women who have had abortions?

- When during pregnancy do most abortions occur?

- How often are there medical complications from abortion?

This compilation of data on abortion in the United States draws mainly from two sources: the Centers for Disease Control and Prevention (CDC) and the Guttmacher Institute, both of which have regularly compiled national abortion data for approximately half a century, and which collect their data in different ways.

The CDC data that is highlighted in this post comes from the agency’s “abortion surveillance” reports, which have been published annually since 1974 (and which have included data from 1969). Its figures from 1973 through 1996 include data from all 50 states, the District of Columbia and New York City – 52 “reporting areas” in all. Since 1997, the CDC’s totals have lacked data from some states (most notably California) for the years that those states did not report data to the agency. The four reporting areas that did not submit data to the CDC in 2021 – California, Maryland, New Hampshire and New Jersey – accounted for approximately 25% of all legal induced abortions in the U.S. in 2020, according to Guttmacher’s data. Most states, though, do have data in the reports, and the figures for the vast majority of them came from each state’s central health agency, while for some states, the figures came from hospitals and other medical facilities.

Discussion of CDC abortion data involving women’s state of residence, marital status, race, ethnicity, age, abortion history and the number of previous live births excludes the low share of abortions where that information was not supplied. Read the methodology for the CDC’s latest abortion surveillance report, which includes data from 2021, for more details. Previous reports can be found at stacks.cdc.gov by entering “abortion surveillance” into the search box.

For the numbers of deaths caused by induced abortions in 1963 and 1965, this analysis looks at reports by the then-U.S. Department of Health, Education and Welfare, a precursor to the Department of Health and Human Services. In computing those figures, we excluded abortions listed in the report under the categories “spontaneous or unspecified” or as “other.” (“Spontaneous abortion” is another way of referring to miscarriages.)

Guttmacher data in this post comes from national surveys of abortion providers that Guttmacher has conducted 19 times since 1973. Guttmacher compiles its figures after contacting every known provider of abortions – clinics, hospitals and physicians’ offices – in the country. It uses questionnaires and health department data, and it provides estimates for abortion providers that don’t respond to its inquiries. (In 2020, the last year for which it has released data on the number of abortions in the U.S., it used estimates for 12% of abortions.) For most of the 2000s, Guttmacher has conducted these national surveys every three years, each time getting abortion data for the prior two years. For each interim year, Guttmacher has calculated estimates based on trends from its own figures and from other data.

The latest full summary of Guttmacher data came in the institute’s report titled “Abortion Incidence and Service Availability in the United States, 2020.” It includes figures for 2020 and 2019 and estimates for 2018. The report includes a methods section.

In addition, this post uses data from StatPearls, an online health care resource, on complications from abortion.

How many abortions are there in the U.S. each year?

An exact answer is hard to come by. The CDC and the Guttmacher Institute have each tried to measure this for around half a century, but they use different methods and publish different figures.

The last year for which the CDC reported a yearly national total for abortions is 2021. It found there were 625,978 abortions in the District of Columbia and the 46 states with available data that year, up from 597,355 in those states and D.C. in 2020. The corresponding figure for 2019 was 607,720.

The last year for which Guttmacher reported a yearly national total was 2020. It said there were 930,160 abortions that year in all 50 states and the District of Columbia, compared with 916,460 in 2019.

- How the CDC gets its data: It compiles figures that are voluntarily reported by states’ central health agencies, including separate figures for New York City and the District of Columbia. Its latest totals do not include figures from California, Maryland, New Hampshire or New Jersey, which did not report data to the CDC. (Read the methodology from the latest CDC report.)

- How Guttmacher gets its data: It compiles its figures after contacting every known abortion provider – clinics, hospitals and physicians’ offices – in the country. It uses questionnaires and health department data, then provides estimates for abortion providers that don’t respond. Guttmacher’s figures are higher than the CDC’s in part because they include data (and in some instances, estimates) from all 50 states. (Read the institute’s latest full report and methodology.)

While the Guttmacher Institute supports abortion rights, its empirical data on abortions in the U.S. has been widely cited by groups and publications across the political spectrum, including by a number of those that disagree with its positions.

These estimates from Guttmacher and the CDC are results of multiyear efforts to collect data on abortion across the U.S. Last year, Guttmacher also began publishing less precise estimates every few months, based on a much smaller sample of providers.

The figures reported by these organizations include only legal induced abortions conducted by clinics, hospitals or physicians’ offices, or those that make use of abortion pills dispensed from certified facilities such as clinics or physicians’ offices. They do not account for the use of abortion pills that were obtained outside of clinical settings.


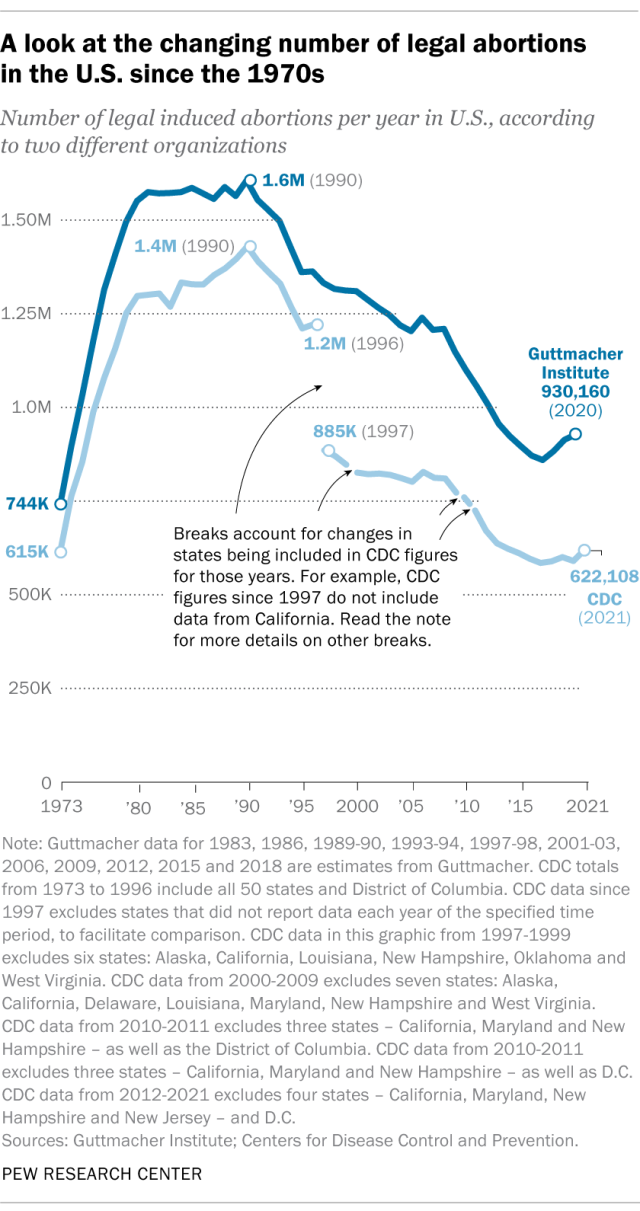

The annual number of U.S. abortions rose for years after Roe v. Wade legalized the procedure in 1973, reaching its highest levels around the late 1980s and early 1990s, according to both the CDC and Guttmacher. Since then, abortions have generally decreased at what a CDC analysis called “a slow yet steady pace.”

Guttmacher says the number of abortions occurring in the U.S. in 2020 was 40% lower than it was in 1991. According to the CDC, the number was 36% lower in 2021 than in 1991, looking just at the District of Columbia and the 46 states that reported both of those years.

(The corresponding line graph shows the long-term trend in the number of legal abortions reported by both organizations. To allow for consistent comparisons over time, the CDC figures in the chart have been adjusted to ensure that the same states are counted from one year to the next. Using that approach, the CDC figure for 2021 is 622,108 legal abortions.)

There have been occasional breaks in this long-term pattern of decline – during the middle of the first decade of the 2000s, and then again in the late 2010s. The CDC reported modest 1% and 2% increases in abortions in 2018 and 2019, and then, after a 2% decrease in 2020, a 5% increase in 2021. Guttmacher reported an 8% increase over the three-year period from 2017 to 2020.
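The year-over-year percentages can be roughly checked against the national totals quoted earlier in this article. The sketch below is only an illustration: the `percent_change` helper and the dictionary names are assumptions made for this example, and the CDC totals used are the unadjusted ones for D.C. and the 46 reporting states, so the results may differ slightly from the rounded percentages the CDC itself published.

```python
def percent_change(old: float, new: float) -> float:
    """Return the change from old to new as a percentage of old."""
    return (new - old) / old * 100


# Totals as quoted in this article (CDC: D.C. plus 46 reporting states;
# Guttmacher: all 50 states plus D.C.).
cdc_totals = {2019: 607_720, 2020: 597_355, 2021: 625_978}
guttmacher_totals = {2019: 916_460, 2020: 930_160}

for source, totals in (("CDC", cdc_totals), ("Guttmacher", guttmacher_totals)):
    years = sorted(totals)
    # Compare each year with the one immediately before it.
    for prev, curr in zip(years, years[1:]):
        change = percent_change(totals[prev], totals[curr])
        print(f"{source} {prev} to {curr}: {change:+.1f}%")
```

With these inputs the CDC totals show a decrease of about 1.7% in 2020 and an increase of about 4.8% in 2021, in line with the rounded 2% and 5% figures cited above.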

As noted above, these figures do not include abortions that use pills obtained outside of clinical settings.

Guttmacher says that in 2020 there were 14.4 abortions in the U.S. per 1,000 women ages 15 to 44. Its data shows that the rate of abortions among women has generally been declining in the U.S. since 1981, when it reported there were 29.3 abortions per 1,000 women in that age range.

The CDC says that in 2021, there were 11.6 abortions in the U.S. per 1,000 women ages 15 to 44. (That figure excludes data from California, the District of Columbia, Maryland, New Hampshire and New Jersey.) Like Guttmacher’s data, the CDC’s figures also suggest a general decline in the abortion rate over time. In 1980, when the CDC reported on all 50 states and D.C., it said there were 25 abortions per 1,000 women ages 15 to 44.

That said, both Guttmacher and the CDC say there were slight increases in the rate of abortions during the late 2010s and early 2020s. Guttmacher says the abortion rate per 1,000 women ages 15 to 44 rose from 13.5 in 2017 to 14.4 in 2020. The CDC says it rose from 11.2 per 1,000 in 2017 to 11.4 in 2019, before falling back to 11.1 in 2020 and then rising again to 11.6 in 2021. (The CDC’s figures for those years exclude data from California, D.C., Maryland, New Hampshire and New Jersey.)

The CDC broadly divides abortions into two categories: surgical abortions and medication abortions, which involve pills. Since the Food and Drug Administration first approved abortion pills in 2000, their use has increased over time as a share of abortions nationally, according to both the CDC and Guttmacher.

The majority of abortions in the U.S. now involve pills, according to both the CDC and Guttmacher. The CDC says 56% of U.S. abortions in 2021 involved pills, up from 53% in 2020 and 44% in 2019. Its figures for 2021 include the District of Columbia and 44 states that provided this data; its figures for 2020 include D.C. and 44 states (though not all of the same states as in 2021), and its figures for 2019 include D.C. and 45 states.

Guttmacher, which measures this every three years, says 53% of U.S. abortions involved pills in 2020, up from 39% in 2017.

Two pills commonly used together for medication abortions are mifepristone, which, taken first, blocks hormones that support a pregnancy, and misoprostol, which then causes the uterus to empty. According to the FDA, medication abortions are safe until 10 weeks into pregnancy.

Surgical abortions conducted during the first trimester of pregnancy typically use a suction process, while the relatively few surgical abortions that occur during the second trimester of a pregnancy typically use a process called dilation and evacuation, according to the UCLA School of Medicine.

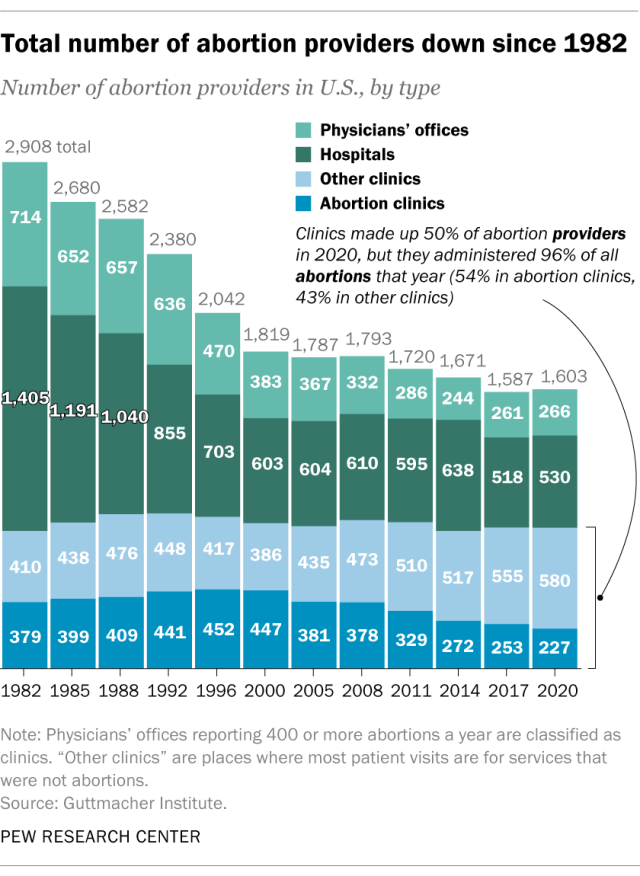

In 2020, there were 1,603 facilities in the U.S. that provided abortions, according to Guttmacher. This included 807 clinics, 530 hospitals and 266 physicians’ offices.

While clinics make up half of the facilities that provide abortions, they are the sites where the vast majority (96%) of abortions are administered, either through procedures or the distribution of pills, according to Guttmacher’s 2020 data. (This includes 54% of abortions that are administered at specialized abortion clinics and 43% at nonspecialized clinics.) Hospitals made up 33% of the facilities that provided abortions in 2020 but accounted for only 3% of abortions that year, while just 1% of abortions were conducted by physicians’ offices.

Looking just at clinics – that is, the total number of specialized abortion clinics and nonspecialized clinics in the U.S. – Guttmacher found the total virtually unchanged between 2017 (808 clinics) and 2020 (807 clinics). However, there were regional differences. In the Midwest, the number of clinics that provide abortions increased by 11% during those years, and in the West by 6%. The number of clinics decreased during those years by 9% in the Northeast and 3% in the South.

The total number of abortion providers has declined dramatically since the 1980s. In 1982, according to Guttmacher, there were 2,908 facilities providing abortions in the U.S., including 789 clinics, 1,405 hospitals and 714 physicians’ offices.
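The facility shares and the long-term decline follow directly from the Guttmacher counts quoted above. The following is a minimal sketch of that arithmetic; the variable names are chosen for this example and are not part of any Guttmacher dataset or tool.

```python
# Facility counts as quoted in this article (source: Guttmacher).
facilities_2020 = {"clinics": 807, "hospitals": 530, "physicians' offices": 266}
facilities_1982 = {"clinics": 789, "hospitals": 1_405, "physicians' offices": 714}

total_2020 = sum(facilities_2020.values())
total_1982 = sum(facilities_1982.values())

# Share of all 2020 facilities made up by each facility type, in percent.
shares_2020 = {kind: count / total_2020 * 100 for kind, count in facilities_2020.items()}

# Overall change in the number of facilities between 1982 and 2020, in percent.
decline = (total_2020 - total_1982) / total_1982 * 100

for kind, share in shares_2020.items():
    print(f"{kind}: {share:.0f}% of facilities in 2020")
print(f"Change in total facilities, 1982 to 2020: {decline:.0f}%")
```

By this calculation clinics are about 50% of facilities and hospitals about 33%, and the total number of facilities fell by roughly 45% between 1982 and 2020.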

The CDC does not track the number of abortion providers.

In the District of Columbia and the 46 states that provided abortion and residency information to the CDC in 2021, 10.9% of all abortions were performed on women known to live outside the state where the abortion occurred – slightly higher than the percentage in 2020 (9.7%). In 2020, D.C. and 46 states (though not the same ones as in 2021) reported abortion and residency data. (The total number of abortions used in these calculations included figures for women with both known and unknown residential status.)

The share of reported abortions performed on women outside their state of residence was much higher before the 1973 Roe decision that stopped states from banning abortion. In 1972, 41% of all abortions in D.C. and the 20 states that provided this information to the CDC that year were performed on women outside their state of residence. In 1973, the corresponding figure was 21% in the District of Columbia and the 41 states that provided this information, and in 1974 it was 11% in D.C. and the 43 states that provided data.

In the District of Columbia and the 46 states that reported age data to the CDC in 2021, the majority of women who had abortions (57%) were in their 20s, while about three-in-ten (31%) were in their 30s. Teens ages 13 to 19 accounted for 8% of those who had abortions, while women ages 40 to 44 accounted for about 4%.

The vast majority of women who had abortions in 2021 were unmarried (87%), while married women accounted for 13%, according to the CDC, which had data on this from 37 states.

In the District of Columbia, New York City (but not the rest of New York) and the 31 states that reported racial and ethnic data on abortion to the CDC, 42% of all women who had abortions in 2021 were non-Hispanic Black, while 30% were non-Hispanic White, 22% were Hispanic and 6% were of other races.

Looking at abortion rates among those ages 15 to 44, there were 28.6 abortions per 1,000 non-Hispanic Black women in 2021; 12.3 abortions per 1,000 Hispanic women; 6.4 abortions per 1,000 non-Hispanic White women; and 9.2 abortions per 1,000 women of other races, the CDC reported from those same 31 states, D.C. and New York City.

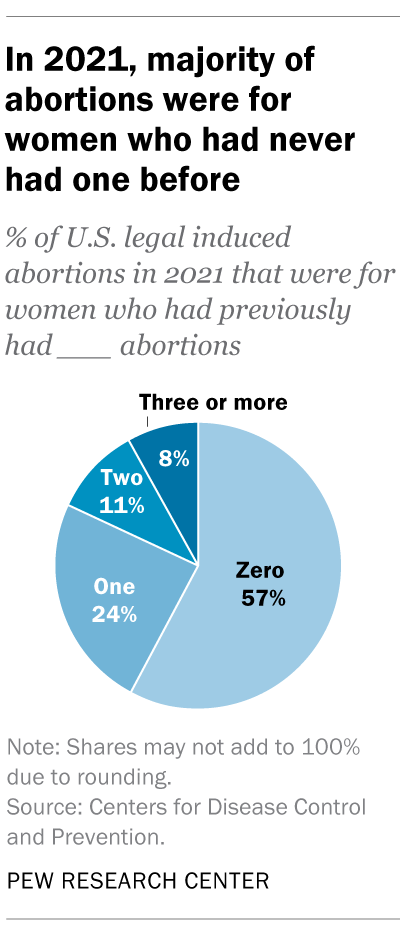

For 57% of U.S. women who had induced abortions in 2021, it was the first time they had ever had one, according to the CDC. For nearly a quarter (24%), it was their second abortion. For 11% of women who had an abortion that year, it was their third, and for 8% it was their fourth or more. These CDC figures include data from 41 states and New York City, but not the rest of New York.

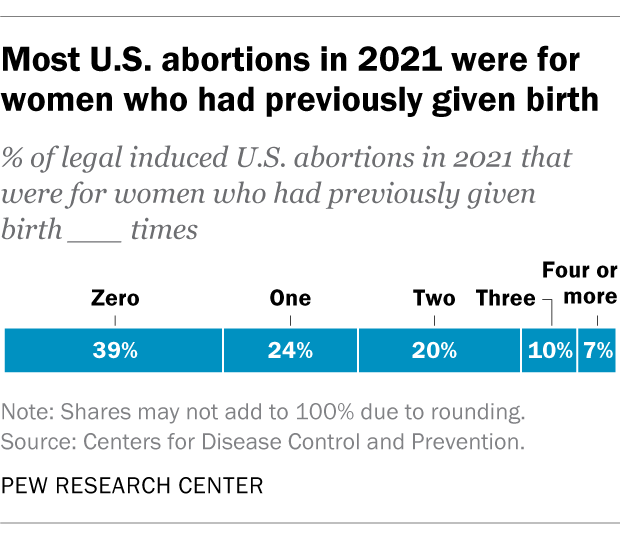

Nearly four-in-ten women who had abortions in 2021 (39%) had no previous live births at the time they had an abortion, according to the CDC. Almost a quarter (24%) of women who had abortions in 2021 had one previous live birth, 20% had two previous live births, 10% had three, and 7% had four or more previous live births. These CDC figures include data from 41 states and New York City, but not the rest of New York.

The vast majority of abortions occur during the first trimester of a pregnancy. In 2021, 93% of abortions occurred during the first trimester – that is, at or before 13 weeks of gestation, according to the CDC. An additional 6% occurred between 14 and 20 weeks of pregnancy, and about 1% were performed at 21 weeks or more of gestation. These CDC figures include data from 40 states and New York City, but not the rest of New York.

About 2% of all abortions in the U.S. involve some type of complication for the woman, according to an article in StatPearls, an online health care resource. “Most complications are considered minor such as pain, bleeding, infection and post-anesthesia complications,” according to the article.

The CDC calculates case-fatality rates for women from induced abortions – that is, how many women die from abortion-related complications, for every 100,000 legal abortions that occur in the U.S. The rate was lowest during the most recent period examined by the agency (2013 to 2020), when there were 0.45 deaths to women per 100,000 legal induced abortions. The case-fatality rate reported by the CDC was highest during the first period examined by the agency (1973 to 1977), when it was 2.09 deaths to women per 100,000 legal induced abortions. During the five-year periods in between, the figure ranged from 0.52 (from 1993 to 1997) to 0.78 (from 1978 to 1982).

The CDC calculates death rates by five-year and seven-year periods because of year-to-year fluctuation in the numbers and due to the relatively low number of women who die from legal induced abortions.
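To make the "per 100,000" definition concrete, here is a small sketch of how such a rate is computed from pooled counts. The function name and the input counts are hypothetical and chosen only so the arithmetic is easy to follow; they are not CDC data, and the CDC's own procedure may involve steps not described in this article.

```python
def case_fatality_rate(deaths: int, abortions: int) -> float:
    """Deaths per 100,000 legal induced abortions over a pooled period."""
    if abortions <= 0:
        raise ValueError("abortions must be a positive count")
    return deaths / abortions * 100_000


# Hypothetical pooled counts for a multiyear period (NOT actual CDC figures).
hypothetical_deaths = 9
hypothetical_abortions = 2_000_000

rate = case_fatality_rate(hypothetical_deaths, hypothetical_abortions)
print(f"{rate:.2f} deaths per 100,000 legal induced abortions")
```

Pooling several years into one period, as the CDC does, increases both counts, so a single additional death in one year moves the rate much less than it would in a one-year calculation.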

In 2020, the last year for which the CDC has information, six women in the U.S. died due to complications from induced abortions. Four women died in this way in 2019, two in 2018, and three in 2017. (These deaths all followed legal abortions.) Since 1990, the annual number of deaths among women due to legal induced abortion has ranged from two to 12.

The annual number of reported deaths from induced abortions (legal and illegal) tended to be higher in the 1980s, when it ranged from nine to 16, and from 1972 to 1979, when it ranged from 13 to 63. One driver of the decline was the drop in deaths from illegal abortions. There were 39 deaths from illegal abortions in 1972, the last full year before Roe v. Wade. The total fell to 19 in 1973 and to single digits or zero every year after that. (The number of deaths from legal abortions has also declined since then, though with some slight variation over time.)

The number of deaths from induced abortions was considerably higher in the 1960s than afterward. For instance, there were 119 deaths from induced abortions in 1963 and 99 in 1965, according to reports by the then-U.S. Department of Health, Education and Welfare, a precursor to the Department of Health and Human Services. The CDC is a division of Health and Human Services.

Note: This is an update of a post originally published May 27, 2022, and first updated June 24, 2022.


ABOUT PEW RESEARCH CENTER Pew Research Center is a nonpartisan fact tank that informs the public about the issues, attitudes and trends shaping the world. It conducts public opinion polling, demographic research, media content analysis and other empirical social science research. Pew Research Center does not take policy positions. It is a subsidiary of The Pew Charitable Trusts.

Copyright 2024 Pew Research Center


Drug shortages, now normal in UK, made worse by Brexit, report warns

Some shortages are so serious they are imperilling the health and even lives of patients with serious illnesses, pharmacy bosses say