Chapter 9 Survey Research

Survey research is a research method involving the use of standardized questionnaires or interviews to collect data about people and their preferences, thoughts, and behaviors in a systematic manner. Although census surveys were conducted as early as Ancient Egypt, survey as a formal research method was pioneered in the 1930s and 1940s by sociologist Paul Lazarsfeld to examine the effects of radio on political opinion formation in the United States. This method has since become very popular for quantitative research in the social sciences.

The survey method can be used for descriptive, exploratory, or explanatory research. This method is best suited for studies that have individual people as the unit of analysis. Although other units of analysis, such as groups, organizations, or dyads (pairs of organizations, such as buyers and sellers), are also studied using surveys, such studies often use a specific person from each unit as a “key informant” or a “proxy” for that unit. Such surveys may be subject to respondent bias if the chosen informant lacks adequate knowledge of, or has a biased opinion about, the phenomenon of interest. For instance, Chief Executive Officers may not adequately know employees’ perceptions of teamwork in their own companies, and may therefore be the wrong informants for studies of team dynamics or employee self-esteem.

Survey research has several inherent strengths compared to other research methods. First, surveys are an excellent vehicle for measuring a wide variety of unobservable data, such as people’s preferences (e.g., political orientation), traits (e.g., self-esteem), attitudes (e.g., toward immigrants), beliefs (e.g., about a new law), behaviors (e.g., smoking or drinking behavior), or factual information (e.g., income). Second, survey research is ideally suited for remotely collecting data about a population that is too large to observe directly. A large area, such as an entire country, can be covered using mail-in, electronic mail, or telephone surveys, with meticulous sampling to ensure that the population is adequately represented in a small sample. Third, due to their unobtrusive nature and the ability to respond at one’s convenience, questionnaire surveys are preferred by some respondents. Fourth, interviews may be the only way of reaching certain population groups, such as the homeless or illegal immigrants, for whom no sampling frame is available. Fifth, large-sample surveys may allow detection of small effects even while analyzing multiple variables, and depending on the survey design, may also allow comparative analysis of population subgroups (i.e., within-group and between-group analysis). Sixth, survey research is more economical in terms of researcher time, effort, and cost than most other methods, such as experimental research and case research. At the same time, survey research also has some unique disadvantages. It is subject to a large number of biases, such as non-response bias, sampling bias, social desirability bias, and recall bias, as discussed in the last section of this chapter.
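The second and fifth strengths above, that a small sample can represent a large population and that large samples can detect small effects, both rest on how sampling error shrinks as the sample grows. The sketch below is a minimal illustration, assuming a simple random sample and the usual normal approximation; the function name and the 95% default are choices made for this example, not part of the original text.

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Approximate margin of error for a sample proportion.

    Assumes a simple random sample of size n from a population much larger
    than n, and uses the normal approximation (z = 1.96 for ~95% confidence).
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n <= 0:
        raise ValueError("n must be a positive integer")
    return z * math.sqrt(p * (1.0 - p) / n)


# Quadrupling the sample size halves the margin of error.
for n in (100, 400, 1600):
    print(f"n = {n:>5}: +/- {margin_of_error(0.5, n):.3f}")
```

With p = 0.5 and n = 1,000, the margin is about ±0.031 (roughly three percentage points), and under these assumptions the population size barely matters once it is much larger than the sample. Non-random or clustered samples have larger errors than this formula suggests.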

Depending on how the data is collected, survey research can be divided into two broad categories: questionnaire surveys (which may be mail-in, group-administered, or online surveys), and interview surveys (which may be personal, telephone, or focus group interviews). Questionnaires are instruments that are completed in writing by respondents, while interviews are completed by the interviewer based on verbal responses provided by respondents. As discussed below, each type has its own strengths and weaknesses in terms of cost, coverage of the target population, and the researcher’s flexibility in asking questions.

Questionnaire Surveys

Invented by Sir Francis Galton, a questionnaire is a research instrument consisting of a set of questions (items) intended to capture responses from respondents in a standardized manner. Questions may be unstructured or structured. Unstructured questions ask respondents to provide a response in their own words, while structured questions ask respondents to select an answer from a given set of choices. Subjects’ responses to individual questions (items) on a structured questionnaire may be aggregated into a composite scale or index for statistical analysis. Questions should be designed such that respondents are able to read, understand, and respond to them in a meaningful way, and hence the survey method may not be appropriate or practical for certain demographic groups such as children or the illiterate.
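The aggregation of structured item responses into a composite can be sketched as follows. This is a minimal illustration, assuming hypothetical item names (q1 to q3) and complete responses; averaging (a scale score) and summing (an index) are two common ways to form a composite, and which one a study uses is a design decision.

```python
from statistics import mean


def composite_scores(respondents: list[dict[str, int]], items: list[str],
                     method: str = "mean") -> list[float]:
    """Aggregate each respondent's item responses into one composite score.

    method="mean" gives an averaged scale score; method="sum" gives an
    additive index. Every respondent must have answered every listed item.
    """
    if method not in ("mean", "sum"):
        raise ValueError("method must be 'mean' or 'sum'")
    scores = []
    for answers in respondents:
        values = [answers[item] for item in items]  # KeyError if an item is missing
        scores.append(mean(values) if method == "mean" else sum(values))
    return scores


# Hypothetical 5-point items (1 = strongly disagree ... 5 = strongly agree).
data = [
    {"q1": 4, "q2": 5, "q3": 3},
    {"q1": 2, "q2": 1, "q3": 3},
]
print(composite_scores(data, ["q1", "q2", "q3"]))         # averaged scale
print(composite_scores(data, ["q1", "q2", "q3"], "sum"))  # additive index
```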

Most questionnaire surveys tend to be self-administered mail surveys, where the same questionnaire is mailed to a large number of people, and willing respondents can complete the survey at their convenience and return it in postage-prepaid envelopes. Mail surveys are advantageous in that they are unobtrusive and inexpensive to administer, since bulk postage is cheap in most countries. However, response rates from mail surveys tend to be quite low, since most people ignore survey requests. There may also be long delays (several months) before respondents complete and return the survey (or they may simply lose it). Hence, the researcher must continuously monitor responses as they are returned, and track and send repeated reminders to non-respondents (two or three reminders at intervals of one to 1.5 months is ideal). Questionnaire surveys are also not well suited for issues that require clarification on the part of the respondent or those that require detailed written responses. Longitudinal designs can be used to survey the same set of respondents at different times, but response rates tend to fall precipitously from one survey to the next.
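The monitoring described above amounts to two small bookkeeping tasks: working out when reminders are due and finding who has not yet responded. This is a sketch under assumptions: 30 days stands in for “one month” (45 days would correspond to 1.5 months), and the respondent IDs are hypothetical.

```python
from datetime import date, timedelta


def reminder_dates(mailed_on: date, count: int = 3, interval_days: int = 30) -> list[date]:
    """Follow-up dates spaced interval_days apart after the initial mailing."""
    if count < 0 or interval_days <= 0:
        raise ValueError("count must be >= 0 and interval_days must be > 0")
    return [mailed_on + timedelta(days=interval_days * k) for k in range(1, count + 1)]


def still_to_remind(sampled_ids: set[str], returned_ids: set[str]) -> set[str]:
    """Sampled respondents whose questionnaires have not come back yet."""
    return sampled_ids - returned_ids


schedule = reminder_dates(date(2024, 1, 15), count=2, interval_days=30)
pending = still_to_remind({"R001", "R002", "R003", "R004"}, {"R002"})
print(schedule)
print(sorted(pending))
```

Recomputing the pending set before each reminder keeps late returns from receiving unnecessary follow-ups.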

A second type of survey is the group-administered questionnaire. A sample of respondents is brought together at a common place and time, and each respondent is asked to complete the survey questionnaire while in that room. Respondents enter their responses independently without interacting with each other. This format is convenient for the researcher, and a high response rate is assured. If respondents do not understand any specific question, they can ask for clarification. In many organizations, it is relatively easy to assemble a group of employees in a conference room or lunch room, especially if the survey is approved by corporate executives.

A more recent type of questionnaire survey is an online or web survey. These surveys are administered over the Internet using interactive forms. Respondents may receive an electronic mail request for participation in the survey with a link to an online website where the survey may be completed. Alternatively, the survey may be embedded into an e-mail, and can be completed and returned via e-mail. These surveys are very inexpensive to administer, results are instantly recorded in an online database, and the survey can be easily modified if needed. However, if the survey website is not password-protected or designed to prevent multiple submissions, the responses can be easily compromised. Furthermore, sampling bias may be a significant issue since the survey cannot reach people who do not have computer or Internet access, such as many of the poor, senior, and minority groups, and the respondent sample is skewed toward a younger demographic who are online much of the time and have the time and ability to complete such surveys. Computing the response rate may be problematic if the survey link is posted on listservs or bulletin boards instead of being e-mailed directly to targeted respondents. For these reasons, many researchers prefer dual-media surveys (e.g., mail survey and online survey), allowing respondents to select their preferred method of response.
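The response-rate problem with publicly posted links can be seen in the simplest version of the calculation. This is a minimal sketch, assuming the rate is defined as completed questionnaires over people invited; formal definitions also adjust the denominator for ineligible or unreachable cases, which this sketch ignores.

```python
from typing import Optional


def response_rate(completed: int, invited: Optional[int]) -> Optional[float]:
    """Completed questionnaires divided by the number of people invited.

    Returns None when the number invited is unknown, as happens when a
    survey link is posted publicly rather than sent to a defined list.
    """
    if invited is None:
        return None
    if invited <= 0:
        raise ValueError("invited must be positive")
    if not 0 <= completed <= invited:
        raise ValueError("completed must be between 0 and invited")
    return completed / invited


print(response_rate(180, 1000))  # e-mailed to a known list of 1,000 people
print(response_rate(250, None))  # link posted on a bulletin board
```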

Constructing a survey questionnaire is an art. Numerous decisions must be made about the content of questions, their wording, format, and sequencing, all of which can have important consequences for the survey responses.

Response formats. Survey questions may be structured or unstructured. Responses to structured questions are captured using one of the following response formats:

- Dichotomous response, where respondents are asked to select one of two possible choices, such as true/false, yes/no, or agree/disagree. An example of such a question is: Do you think that the death penalty is justified under some circumstances (circle one): yes / no.

- Nominal response, where respondents are presented with more than two unordered options, such as: What is your industry of employment: manufacturing / consumer services / retail / education / healthcare / tourism & hospitality / other.

- Ordinal response, where respondents have more than two ordered options, such as: What is your highest level of education: high school / college degree / graduate studies.

- Interval-level response, where respondents are presented with a 5-point or 7-point Likert scale, semantic differential scale, or Guttman scale. Each of these scale types was discussed in a previous chapter.

- Continuous response, where respondents enter a continuous (ratio-scaled) value with a meaningful zero point, such as their age or tenure in a firm. These responses generally tend to be of the fill-in-the-blank type.
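One way to see how these response formats differ is to look at how each is coded for analysis. The sketch below is a hypothetical representation (the class and field names are illustrative, not taken from any survey package) that converts raw answers into numbers and rejects answers that do not fit the question’s format.

```python
from dataclasses import dataclass
from enum import Enum


class ResponseFormat(Enum):
    DICHOTOMOUS = "dichotomous"
    NOMINAL = "nominal"
    ORDINAL = "ordinal"
    INTERVAL = "interval"
    CONTINUOUS = "continuous"


@dataclass(frozen=True)
class Question:
    text: str
    fmt: ResponseFormat
    options: tuple[str, ...] = ()  # used by dichotomous, nominal, ordinal
    scale_points: int = 0          # used by interval, e.g. 5 or 7

    def code(self, answer: str) -> float:
        """Convert a raw answer into a number for analysis, rejecting invalid input."""
        if self.fmt in (ResponseFormat.DICHOTOMOUS, ResponseFormat.NOMINAL,
                        ResponseFormat.ORDINAL):
            if answer not in self.options:
                raise ValueError(f"{answer!r} is not one of {self.options}")
            # Position in the option list. The order carries meaning only for
            # ordinal items; for nominal items the number is just a label.
            return self.options.index(answer)
        if self.fmt is ResponseFormat.INTERVAL:
            value = int(answer)
            if not 1 <= value <= self.scale_points:
                raise ValueError(f"expected 1..{self.scale_points}, got {value}")
            return value
        value = float(answer)  # continuous: ratio scale with a true zero
        if value < 0:
            raise ValueError("this sketch rejects negative values (e.g., age, tenure)")
        return value


education = Question("What is your highest level of education?", ResponseFormat.ORDINAL,
                     ("high school", "college degree", "graduate studies"))
print(education.code("college degree"))
```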

Question content and wording. Responses obtained in survey research are very sensitive to the types of questions asked. Poorly framed or ambiguous questions will likely result in meaningless responses with very little value. Dillman (1978) recommends several rules for creating good survey questions. Every single question in a survey should be carefully scrutinized for the following issues:

- Is the question clear and understandable: Survey questions should be stated in very simple language, preferably in active voice, and without complicated words or jargon that may not be understood by a typical respondent. All questions in the questionnaire should be worded in a similar manner to make it easy for respondents to read and understand them. The only exception is if your survey is targeted at a specialized group of respondents, such as doctors, lawyers, and researchers, who use such jargon in their everyday environment.

- Is the question worded in a negative manner: Negatively worded questions, such as “Should your local government not raise taxes?”, tend to confuse many respondents and lead to inaccurate responses. Such questions should be avoided; in all cases, avoid double negatives.

- Is the question ambiguous: Survey questions should not use words or expressions that may be interpreted differently by different respondents (e.g., words like “any” or “just”). For instance, if you ask a respondent “What is your annual income?”, it is unclear whether you are referring to salary/wages only or also to dividend, rental, and other income, and whether you are referring to personal income, family income (including a spouse’s wages), or personal and business income. Different interpretations by different respondents will lead to incomparable responses that cannot be interpreted correctly.

- Does the question have biased or value-laden words: Bias refers to any property of a question that encourages subjects to answer in a certain way. Kenneth Rasinski (1989) examined several studies on people’s attitudes toward government spending, and observed that respondents tend to indicate stronger support for “assistance to the poor” and less for “welfare”, even though both terms had the same meaning. In this study, more support was also observed for “halting rising crime rate” (and less for “law enforcement”), “solving problems of big cities” (and less for “assistance to big cities”), and “dealing with drug addiction” (and less for “drug rehabilitation”). Biased language or tone tends to skew observed responses. It is often difficult to anticipate biasing wording in advance, but to the greatest extent possible, survey questions should be carefully scrutinized to avoid biased language.

- Is the question double-barreled: Double-barreled questions are those that combine two or more issues in a single question, so that a respondent may have a different answer for each part. For example, are you satisfied with the hardware and software provided for your work? In this example, how should a respondent answer if he/she is satisfied with the hardware but not with the software, or vice versa? It is always advisable to separate double-barreled questions into separate questions: (1) are you satisfied with the hardware provided for your work, and (2) are you satisfied with the software provided for your work. Another example: does your family favor public television? Some people may favor public TV for themselves, but favor certain cable TV programs such as Sesame Street for their children.

- Is the question too general: Sometimes, questions that are too general may not accurately convey respondents’ perceptions. If you ask someone how they liked a certain book and provide a response scale ranging from “not at all” to “extremely well”, and that person selects “extremely well”, what does he/she mean? Instead, ask more specific behavioral questions, such as will you recommend this book to others, or do you plan to read other books by the same author? Likewise, instead of asking how big your firm is (which may be interpreted differently by respondents), ask how many people work for your firm and/or what the annual revenues of your firm are, which are both measures of firm size.

- Is the question too detailed: Avoid unnecessarily detailed questions that serve no specific research purpose. For instance, do you need the age of each child in a household, or is just the number of children in the household acceptable? However, if unsure, it is better to err on the side of detail than generality.

- Is the question presumptuous: If you ask “What do you see as the benefits of a tax cut?”, you are presuming that the respondent sees the tax cut as beneficial. But many people may not view tax cuts as beneficial, because tax cuts can lead to less funding for public schools, larger class sizes, and fewer public services such as police, ambulance, and fire service. Avoid questions with built-in presumptions.

- Is the question imaginary: A popular question in many television game shows is “If you won a million dollars on this show, how would you plan to spend it?” Most respondents have never been faced with such an amount of money and have never thought about it (most don’t even know that after taxes, they will get only about $640,000 or so in the United States, and in many cases, that amount is spread over a 20-year period, so that its net present value is even less), and so their answers tend to be quite random, such as take a tour around the world, buy a restaurant or bar, spend on education, save for retirement, help parents or children, or have a lavish wedding. Imaginary questions have imaginary answers, which cannot be used for making scientific inferences.

- Do respondents have the information needed to correctly answer the question: Often, we assume that subjects have the necessary information to answer a question when, in reality, they do not. Even if a response is obtained in such cases, it tends to be inaccurate, given the respondent’s lack of knowledge about the question being asked. For instance, we should not ask the CEO of a company about day-to-day operational details that he/she may not be aware of, ask teachers how much their students are learning, or ask high-schoolers “Do you think the US Government acted appropriately in the Bay of Pigs crisis?”
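A few of the wording problems listed above leave traces in surface words, so they can be screened for mechanically before a questionnaire goes to pretest. The sketch below is a deliberately crude heuristic under stated assumptions: the word lists are illustrative, it will miss problems that need human judgment (bias, presumption, imaginary scenarios), and it will raise false alarms (not every “and” makes a question double-barreled).

```python
import re

# Illustrative word lists; extend or replace them for a real questionnaire.
AMBIGUOUS_WORDS = {"any", "just"}
NEGATIONS = {"not", "no", "never", "don't", "doesn't", "isn't", "shouldn't", "won't"}


def flag_question(text: str) -> list[str]:
    """Return warnings for a few wording problems that surface words can reveal."""
    words = re.findall(r"[a-z']+", text.lower())
    warnings = []
    negations = [w for w in words if w in NEGATIONS]
    if len(negations) >= 2:
        warnings.append("possible double negative")
    elif negations:
        warnings.append("negatively worded")
    if "and" in words or "or" in words:
        warnings.append("possibly double-barreled (contains 'and'/'or')")
    for word in sorted(AMBIGUOUS_WORDS.intersection(words)):
        warnings.append(f"ambiguous word: {word!r}")
    return warnings


for q in [
    "Should your local government not raise taxes?",
    "Are you satisfied with the hardware and software provided for your work?",
    "How many people work for your firm?",
]:
    print(q, "->", flag_question(q) or "no flags")
```

Flags from a screen like this are prompts for a closer look, not verdicts; the pretest recommended later in this section remains the real check.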

Question sequencing. In general, questions should flow logically from one to the next. To achieve the best response rates, questions should flow from the least sensitive to the most sensitive, from the factual and behavioral to the attitudinal, and from the more general to the more specific. Some general rules for question sequencing:

- Start with easy non-threatening questions that can be easily recalled. Good options are demographics (age, gender, education level) for individual-level surveys and firmographics (employee count, annual revenues, industry) for firm-level surveys.

- Never start with an open-ended question.

- If following a historical sequence of events, follow a chronological order from earliest to latest.

- Ask about one topic at a time. When switching topics, use a transition, such as “The next section examines your opinions about …”

- Use filter or contingency questions as needed, such as: “If you answered ‘yes’ to question 5, please proceed to Section 2. If you answered ‘no’, go to Section 3.”
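In an online or computer-assisted questionnaire, a filter question like the one above is typically implemented as skip logic. A minimal sketch, assuming a hypothetical routing table keyed by question number and normalized answer; it encodes only the rule quoted in the last bullet.

```python
# Hypothetical routing table: (question number, normalized answer) -> next section.
ROUTING: dict[tuple[int, str], str] = {
    (5, "yes"): "Section 2",
    (5, "no"): "Section 3",
}


def next_section(question: int, answer: str,
                 routing: dict[tuple[int, str], str] = ROUTING) -> str:
    """Return where a respondent should go next after answering a filter question."""
    key = (question, answer.strip().lower())
    if key not in routing:
        raise ValueError(f"no routing rule for question {question} with answer {answer!r}")
    return routing[key]


print(next_section(5, "Yes"))
print(next_section(5, "no"))
```

Keeping the rules in a table rather than in scattered if-statements makes it easy to review every branch during pretesting.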

Other golden rules. Do unto your respondents what you would have them do unto you. Be attentive and appreciative of respondents’ time, attention, and trust, and protect the confidentiality of their personal information. Always practice the following strategies for all survey research:

- People’s time is valuable. Be respectful of their time. Keep your survey as short as possible and limit it to what is absolutely necessary. Respondents do not like spending more than 10-15 minutes on any survey, no matter how important it is. Longer surveys tend to dramatically lower response rates.

- Always assure respondents about the confidentiality of their responses, and how you will use their data (e.g., for academic research) and how the results will be reported (usually, in the aggregate).

- For organizational surveys, assure respondents that you will send them a copy of the final results, and make sure that you follow through on your promise.

- Thank your respondents for their participation in your study.

- Finally, always pretest your questionnaire, at least using a convenience sample, before administering it to respondents in a field setting. Such pretesting may uncover ambiguity, lack of clarity, or biases in question wording, which should be eliminated before administering to the intended sample.

Interview Surveys

Interviews are a more personalized form of data collection than questionnaires, and are conducted by trained interviewers using the same research protocol as questionnaire surveys (i.e., a standardized set of questions). However, unlike a questionnaire, the interview script may contain special instructions for the interviewer that are not seen by respondents, and may include space for the interviewer to record personal observations and comments. In addition, unlike in mail surveys, the interviewer has the opportunity to clarify any issues raised by the respondent or ask probing or follow-up questions. However, interviews are time-consuming and resource-intensive. Special interviewing skills are needed on the part of the interviewer. The interviewer is also considered to be part of the measurement instrument, and must proactively strive not to artificially bias the observed responses.

The most typical form of interview is the personal or face-to-face interview, where the interviewer works directly with the respondent to ask questions and record their responses.

Personal interviews may be conducted at the respondent’s home or office location. This approach may even be favored by some respondents, while others may feel uncomfortable allowing a stranger into their homes. However, skilled interviewers can persuade respondents to cooperate, dramatically improving response rates.

A variation of the personal interview is a group interview, also called a focus group. In this technique, a small group of respondents (usually 6-10 respondents) is interviewed together in a common location. The interviewer is essentially a facilitator whose job is to lead the discussion and ensure that every person has an opportunity to respond. Focus groups allow deeper examination of complex issues than other forms of survey research, because when people hear others talk, it often triggers responses or ideas that they had not thought about before. However, focus group discussion may be dominated by a single strong personality, and some individuals may be reluctant to voice their opinions in front of their peers or superiors, especially while dealing with a sensitive issue such as employee underperformance or office politics. Because of their small sample size, focus groups are usually used for exploratory research rather than descriptive or explanatory research.

A third type of interview survey is the telephone interview. In this technique, interviewers contact potential respondents over the phone, typically based on a random selection of people from a telephone directory, to ask a standard set of survey questions. A more recent and technologically advanced approach is computer-assisted telephone interviewing (CATI), which is increasingly being used by academic, government, and commercial survey researchers. In CATI, the interviewer is a telephone operator who is guided through the interview process by a computer program that displays instructions and the questions to be asked on a computer screen. The system also selects respondents randomly using a random digit dialing technique, and records responses using voice capture technology. Once respondents are on the phone, higher response rates can be obtained. This technique is not ideal for rural areas where telephone density is low, and it also cannot be used for communicating non-audio information such as graphics or product demonstrations.
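Random digit dialing can be sketched in a few lines. This assumes North American style numbers, where a three-digit area code and a three-digit exchange are followed by four digits, and the prefixes used are hypothetical; production RDD designs add refinements (such as screening out non-working number blocks) that are omitted here.

```python
import random
from typing import Optional


def random_digit_dial(prefixes: list[str], n: int, seed: Optional[int] = None) -> list[str]:
    """Draw n distinct phone numbers by appending four random digits to
    randomly chosen area-code/exchange prefixes such as "813-555".

    Because numbers are generated rather than copied from a directory,
    unlisted numbers within those prefixes can be reached too.
    """
    unique_prefixes = sorted(set(prefixes))  # sorted so a fixed seed is reproducible
    if n > len(unique_prefixes) * 10_000:
        raise ValueError("not enough possible numbers for the requested sample")
    rng = random.Random(seed)
    numbers: set[str] = set()
    while len(numbers) < n:
        prefix = rng.choice(unique_prefixes)
        numbers.add(f"{prefix}-{rng.randrange(10_000):04d}")
    return sorted(numbers)


print(random_digit_dial(["813-555", "727-555"], n=5, seed=1))
```

Generating numbers instead of sampling a directory is what lets this approach reach the unlisted numbers that directory-based sampling misses.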

Role of interviewer. The interviewer has a complex and multi-faceted role in the interview process, which includes the following tasks:

- Prepare for the interview: Since the interviewer is at the forefront of the data collection effort, the quality of data collected depends heavily on how well the interviewer is trained to do the job. The interviewer must be trained in the interview process and the survey method, and also be familiar with the purpose of the study, how responses will be stored and used, and sources of interviewer bias. He/she should also rehearse and time the interview prior to the formal study.

- Locate and enlist the cooperation of respondents: Particularly in personal, in-home surveys, the interviewer must locate specific addresses and work around respondents’ schedules, sometimes at undesirable times such as weekends. They should also act like a salesperson, selling the idea of participating in the study.

- Motivate respondents: Respondents often feed off the motivation of the interviewer. If the interviewer is uninterested or inattentive, respondents won’t be motivated to provide useful or informative responses either. The interviewer must demonstrate enthusiasm about the study, communicate the importance of the research to respondents, and be attentive to respondents’ needs throughout the interview.

- Clarify any confusion or concerns: Interviewers must be able to think on their feet and address unanticipated concerns or objections raised by respondents to the respondents’ satisfaction. Additionally, they should ask probing questions as necessary even if such questions are not in the script.

- Observe quality of response: The interviewer is in the best position to judge the quality of information collected, and may supplement responses obtained using personal observations of gestures or body language as appropriate.

Conducting the interview. Before the interview, the interviewer should prepare a kit to carry to the interview session, consisting of a cover letter from the principal investigator or sponsor, enough copies of the survey instrument, photo identification, and a telephone number for respondents to call to verify the interviewer’s authenticity. The interviewer should also try to call respondents ahead of time to set up an appointment if possible. To start the interview, he/she should speak in an imperative and confident tone, such as “I’d like to take a few minutes of your time to interview you for a very important study,” instead of “May I come in to do an interview?” He/she should introduce himself/herself, present personal credentials, explain the purpose of the study in 1-2 sentences, and assure respondents of the confidentiality of their comments and the voluntariness of their participation, all in less than a minute. No big words or jargon should be used, and no details should be provided unless specifically requested. If the interviewer wishes to tape-record the interview, he/she should ask for the respondent’s explicit permission before doing so. Even if the interview is recorded, the interviewer must take notes on key issues, probes, or verbatim phrases.

During the interview, the interviewer should follow the questionnaire script and ask questions exactly as written, and not change the words to make the question sound friendlier. They should also not change the order of questions or skip any question that may have been answered earlier. Any issues with the questions should be discussed during rehearsal prior to the actual interview sessions. The interviewer should not finish the respondent’s sentences. If the respondent gives a brief cursory answer, the interviewer should probe the respondent to elicit a more thoughtful, thorough response. Some useful probing techniques are:

- The silent probe: Just pausing and waiting (without going on to the next question) may suggest to respondents that the interviewer is waiting for a more detailed response.

- Overt encouragement: An occasional “uh-huh” or “okay” may encourage the respondent to go into greater detail. However, the interviewer must not express approval or disapproval of what was said by the respondent.

- Ask for elaboration: Such as “can you elaborate on that?” or “A minute ago, you were talking about an experience you had in high school. Can you tell me more about that?”

- Reflection: The interviewer can try the psychotherapist’s trick of repeating what the respondent said. For instance, “What I’m hearing is that you found that experience very traumatic” and then pause and wait for the respondent to elaborate.

After the interview is completed, the interviewer should thank respondents for their time, tell them when to expect the results, and not leave hastily. Immediately after leaving, they should write down any notes or key observations that may help interpret the respondent’s comments better.

Biases in Survey Research

Despite all of its strengths and advantages, survey research is often tainted with systematic biases that may invalidate some of the inferences derived from such surveys. Five such biases are the non-response bias, sampling bias, social desirability bias, recall bias, and common method bias.

Non-response bias. Survey research is generally notorious for its low response rates. A response rate of 15-20% is typical in a mail survey, even after two or three reminders. If the majority of the targeted respondents fail to respond to a survey, then a legitimate concern is whether non-respondents are failing to respond for a systematic reason, which may raise questions about the validity of the study’s results. For instance, dissatisfied customers tend to be more vocal about their experience than satisfied customers, and are therefore more likely to respond to questionnaire surveys or interview requests than satisfied customers. Hence, any respondent sample is likely to have a higher proportion of dissatisfied customers than the underlying population from which it is drawn. In this instance, not only will the results lack generalizability, but the observed outcomes may also be an artifact of the biased sample. Several strategies may be employed to improve response rates:

- Advance notification: A short letter sent in advance to the targeted respondents soliciting their participation in an upcoming survey can prepare them in advance and improve their propensity to respond. The letter should state the purpose and importance of the study, mode of data collection (e.g., via a phone call, a survey form in the mail, etc.), and appreciation for their cooperation. A variation of this technique may request the respondent to return a postage-paid postcard indicating whether or not they are willing to participate in the study.

- Relevance of content: If a survey examines issues of relevance or importance to respondents, then they are more likely to respond than to surveys that don’t matter to them.

- Respondent-friendly questionnaire: Shorter survey questionnaires tend to elicit higher response rates than longer questionnaires. Furthermore, questions that are clear, non-offensive, and easy to respond to tend to attract higher response rates.

- Endorsement: For organizational surveys, it helps to gain endorsement from a senior executive attesting to the importance of the study to the organization. Such endorsement can be in the form of a cover letter or a letter of introduction, which can improve the researcher’s credibility in the eyes of the respondents.

- Follow-up requests: Multiple follow-up requests may coax some non-respondents to respond, even if their responses are late.

- Interviewer training: Response rates for interviews can be improved with skilled interviewers trained on how to request interviews, use computerized dialing techniques to identify potential respondents, and schedule callbacks for respondents who could not be reached.

- Incentives: Response rates, at least with certain populations, may increase with the use of incentives in the form of cash or gift cards, giveaways such as pens or stress balls, entry into a lottery, draw or contest, discount coupons, promise of contribution to charity, and so forth.

- Non-monetary incentives: Businesses, in particular, are more prone to respond to non-monetary incentives than financial incentives. An example of such a non-monetary incentive is a benchmarking report comparing the business’s individual response against the aggregate of all responses to a survey.

- Confidentiality and privacy: Finally, assurances that respondents’ private data or responses will not fall into the hands of any third party may help improve response rates.
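The dissatisfied-customer example in the non-response paragraph can be made concrete with a little arithmetic. This sketch assumes just two groups with fixed, known response propensities, and the numbers in the example are hypothetical, chosen only to illustrate the mechanism.

```python
def respondent_mix(pop_share: float, rate_group: float, rate_rest: float) -> tuple[float, float]:
    """Expected (overall response rate, group's share of respondents) when one
    group makes up pop_share of those surveyed and responds at rate_group,
    while everyone else responds at rate_rest."""
    for value in (pop_share, rate_group, rate_rest):
        if not 0.0 <= value <= 1.0:
            raise ValueError("shares and rates must be between 0 and 1")
    from_group = pop_share * rate_group
    from_rest = (1.0 - pop_share) * rate_rest
    overall = from_group + from_rest
    if overall == 0.0:
        raise ValueError("nobody responds, so the respondent mix is undefined")
    return overall, from_group / overall


# Hypothetical: 30% of customers are dissatisfied; they respond at 30%,
# satisfied customers at 10%.
overall, dissatisfied_share = respondent_mix(0.30, 0.30, 0.10)
print(f"response rate {overall:.0%}, dissatisfied among respondents {dissatisfied_share:.0%}")
```

Under these made-up numbers the overall response rate is 16%, within the typical range quoted above, yet dissatisfied customers make up about 56% of respondents instead of 30%. If both groups responded at the same rate, the respondent mix would match the population; it is the difference in response rates between groups, not the low rate by itself, that creates the bias.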

Sampling bias. Telephone surveys conducted by calling a random sample of publicly available telephone numbers will systematically exclude people with unlisted telephone numbers or mobile phone numbers, and people who are unable to answer the phone when the survey is being conducted (for instance, because they are at work). They will also include a disproportionate number of respondents who have land-line telephone service with listed phone numbers and people who stay home during much of the day, such as the unemployed, the disabled, and the elderly. Likewise, online surveys tend to include a disproportionate number of students and younger people who are constantly on the Internet, and systematically exclude people with limited or no access to computers or the Internet, such as the poor and the elderly. Similarly, questionnaire surveys tend to exclude children and the illiterate, who are unable to read, understand, or meaningfully respond to the questionnaire. A different kind of sampling bias relates to sampling the wrong population, such as asking teachers (or parents) about the academic learning of their students (or children), or asking CEOs about operational details in their company. Such biases make the respondent sample unrepresentative of the intended population and hurt generalizability claims about inferences drawn from the biased sample.

Social desirability bias. Many respondents tend to avoid negative opinions or embarrassing comments about themselves, their employers, family, or friends. With negative questions such as do you think that your project team is dysfunctional, is there a lot of office politics in your workplace, or have you ever illegally downloaded music files from the Internet, the researcher may not get truthful responses. This tendency among respondents to “spin the truth” in order to portray themselves in a socially desirable manner is called the “social desirability bias”, which hurts the validity of responses obtained from survey research. There is practically no way of overcoming the social desirability bias in a questionnaire survey, but in an interview setting, an astute interviewer may be able to spot inconsistent answers and ask probing questions or use personal observations to supplement respondents’ comments.

Recall bias. Responses to survey questions often depend on subjects’ motivation, memory, and ability to respond. Particularly when dealing with events that happened in the distant past, respondents may not adequately remember their own motivations or behaviors, or their memory of such events may have changed over time and may no longer be retrievable. For instance, if a respondent is asked to describe his/her utilization of computer technology one year ago, or even memorable childhood events like birthdays, the response may not be accurate due to difficulties with recall. One possible way of overcoming the recall bias is by anchoring respondents’ memory in specific events as they happened, rather than asking them to recall their perceptions and motivations from memory.

Common method bias. Common method bias refers to the amount of spurious covariance shared between independent and dependent variables that are measured at the same point in time, such as in a cross-sectional survey, and using the same instrument, such as a questionnaire. In such cases, the phenomenon under investigation may not be adequately separated from measurement artifacts. Standard statistical tests are available to test for common method bias, such as Harman’s single-factor test (Podsakoff et al. 2003), Lindell and Whitney’s (2001) marker variable technique, and so forth. This bias can potentially be avoided if the independent and dependent variables are measured at different points in time, using a longitudinal survey design, or if these variables are measured using different methods, such as computerized recording of the dependent variable versus questionnaire-based self-rating of independent variables.
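
Harman’s single-factor test is usually run by loading every survey item into one unrotated factor analysis and checking how much of the total variance the first factor absorbs. The sketch below approximates that with the first principal component of the item correlation matrix, found by power iteration in plain Python. The function names are hypothetical, and the “more than half of the variance” threshold in the docstring is a common rule of thumb, not a fixed standard; a dedicated factor-analysis package would be the usual choice for real data.

```python
import math


def correlation_matrix(columns):
    """Pearson correlation matrix for a list of equal-length item columns."""
    centered = []
    for col in columns:
        mean = sum(col) / len(col)
        centered.append([v - mean for v in col])
    norms = [math.sqrt(sum(v * v for v in col)) for col in centered]
    p = len(columns)
    return [
        [
            sum(a * b for a, b in zip(centered[i], centered[j])) / (norms[i] * norms[j])
            for j in range(p)
        ]
        for i in range(p)
    ]


def first_factor_share(columns, iterations=500):
    """Share of total item variance captured by the first principal component.

    The largest eigenvalue of the correlation matrix is found by power
    iteration. The trace of a correlation matrix equals the number of items,
    so dividing by that number gives a proportion of variance.
    Rule of thumb (assumption): a share above 0.5 hints at common method bias.
    """
    r = correlation_matrix(columns)
    p = len(r)
    # A non-uniform start vector lowers the chance of starting orthogonal
    # to the leading eigenvector.
    vec = [1.0 + 0.1 * i for i in range(p)]
    eigenvalue = 0.0
    for _ in range(iterations):
        product = [sum(r[i][j] * vec[j] for j in range(p)) for i in range(p)]
        norm = math.sqrt(sum(v * v for v in product))
        vec = [v / norm for v in product]
        # Rayleigh quotient of the current unit vector estimates the eigenvalue.
        eigenvalue = sum(
            vec[i] * sum(r[i][j] * vec[j] for j in range(p)) for i in range(p)
        )
    return eigenvalue / p
```

Perfectly correlated items give a share of 1.0, while mutually uncorrelated items spread the variance evenly (1/p each), which is the contrast the test relies on.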

- Social Science Research: Principles, Methods, and Practices. Authored by: Anol Bhattacherjee. Provided by: University of South Florida. Located at: http://scholarcommons.usf.edu/oa_textbooks/3/ . License: CC BY-NC-SA: Attribution-NonCommercial-ShareAlike


How to Analyze Survey Data for Hypothesis Tests

Traditionally, researchers analyze survey data to estimate population parameters. But very similar analytical techniques can also be applied to test hypotheses.

In this lesson, we describe how to analyze survey data to test statistical hypotheses.

The Logic of the Analysis

In a big-picture sense, the analysis of survey sampling data is easy. When you use sample data to test a hypothesis, the analysis includes the same seven steps:

- Estimate a population parameter.

- Estimate population variance.

- Compute standard error.

- Set the significance level.

- Find the critical value (often a z-score or a t-score).

- Define the upper limit of the region of acceptance.

- Define the lower limit of the region of acceptance.

It doesn't matter whether the sampling method is simple random sampling, stratified sampling, or cluster sampling. And it doesn't matter whether the parameter of interest is a mean score, a proportion, or a total score. The analysis of survey sampling data always includes the same seven steps.

However, formulas used in the first three steps of the analysis can differ, based on the sampling method and the parameter of interest. In the next section, we'll list the formulas to use for each step. By the end of the lesson, you'll know how to test hypotheses about mean scores, proportions, and total scores using data from simple random samples, stratified samples, and cluster samples.

Data Analysis for Hypothesis Testing

Now, let's look in a little more detail at the seven steps required to conduct a hypothesis test, when you are working with data from a survey sample.

$$\text{Sample mean} = \bar{x} = \frac{\sum x}{n}$$

where $\bar{x}$ is a sample estimate of the population mean, $\sum x$ is the sum of all the sample observations, and $n$ is the number of sample observations.

$$\text{Population total} = t = N \bar{x}$$

where $N$ is the number of observations in the population, and $\bar{x}$ is the sample mean.

Or, if we know the sample proportion, we can estimate the population total (t) as:

$$\text{Population total} = t = N p$$

where t is an estimate of the number of elements in the population that have a specified attribute, N is the number of observations in the population, and p is the sample proportion.
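
The simple-random-sampling estimators above translate directly into code. This is a minimal sketch with hypothetical function names; the population size `N` is assumed to be known, as the formulas require.

```python
def srs_mean(sample):
    """Sample mean: x-bar = (sum of the observations) / n."""
    return sum(sample) / len(sample)


def srs_total_from_mean(population_size, sample_mean):
    """Estimated population total: t = N * x-bar."""
    return population_size * sample_mean


def srs_total_from_proportion(population_size, sample_proportion):
    """Estimated number of population elements with the attribute: t = N * p."""
    return population_size * sample_proportion


# Made-up example: four observations from a population of 1,000.
sample = [4, 6, 5, 5]
x_bar = srs_mean(sample)
print(x_bar, srs_total_from_mean(1000, x_bar))  # 5.0 5000.0
```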

$$\text{Sample mean} = \bar{x} = \sum_h \frac{N_h}{N} \bar{x}_h$$

where $N_h$ is the number of observations in stratum $h$ of the population, $N$ is the number of observations in the population, and $\bar{x}_h$ is the mean score from the sample in stratum $h$.

$$\text{Sample proportion} = p = \sum_h \frac{N_h}{N} p_h$$

where $N_h$ is the number of observations in stratum $h$ of the population, $N$ is the number of observations in the population, and $p_h$ is the sample proportion in stratum $h$.

$$\text{Population total} = t = \sum_h N_h \bar{x}_h$$

where $N_h$ is the number of observations in the population from stratum $h$, and $\bar{x}_h$ is the sample mean from stratum $h$.

Or, if we know the sample proportion in each stratum, we can use this formula to estimate a population total:

$$\text{Population total} = t = \sum_h N_h p_h$$

where $t$ is an estimate of the number of observations in the population that have a specified attribute, $N_h$ is the number of observations from stratum $h$ in the population, and $p_h$ is the sample proportion from stratum $h$.
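
The stratified formulas are weighted sums over strata. A minimal sketch, assuming the strata partition the population so that $N$ is the sum of the $N_h$; the function names and the example stratum sizes are hypothetical. The same function handles a mean (pass stratum means) or a proportion (pass stratum proportions).

```python
def stratified_estimate(stratum_sizes, stratum_estimates):
    """Mean or proportion: sum over h of (N_h / N) * estimate_h.

    N is taken as the sum of the N_h, i.e. the strata are assumed to
    partition the population.
    """
    population_size = sum(stratum_sizes)
    weighted = sum(N_h * e_h for N_h, e_h in zip(stratum_sizes, stratum_estimates))
    return weighted / population_size


def stratified_total(stratum_sizes, stratum_estimates):
    """Population total: t = sum over h of N_h * x-bar_h (or N_h * p_h)."""
    return sum(N_h * e_h for N_h, e_h in zip(stratum_sizes, stratum_estimates))


sizes = [600, 400]  # hypothetical N_h values
print(stratified_estimate(sizes, [10, 20]), stratified_total(sizes, [10, 20]))  # 14.0 14000
```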

$$\text{Sample mean} = \bar{x} = \frac{N}{n M} \sum_h M_h \bar{x}_h$$

where $N$ is the number of clusters in the population, $n$ is the number of clusters in the sample, $M$ is the number of observations in the population, $M_h$ is the number of observations in cluster $h$, and $\bar{x}_h$ is the mean score from the sample in cluster $h$.

$$\text{Sample proportion} = p = \frac{N}{n M} \sum_h M_h p_h$$

where $N$ is the number of clusters in the population, $n$ is the number of clusters in the sample, $M$ is the number of observations in the population, $M_h$ is the number of observations in cluster $h$, and $p_h$ is the proportion from the sample in cluster $h$.

$$\text{Population total} = t = \frac{N}{n} \sum_h M_h \bar{x}_h$$

where $N$ is the number of clusters in the population, $n$ is the number of clusters in the sample, $M_h$ is the number of observations in the population from cluster $h$, and $\bar{x}_h$ is the sample mean from cluster $h$.

And, if we know the sample proportion for each cluster, we can estimate a population total:

$$\text{Population total} = t = \frac{N}{n} \sum_h M_h p_h$$

where $t$ is an estimate of the number of elements in the population that have a specified attribute, $N$ is the number of clusters in the population, $n$ is the number of clusters in the sample, $M_h$ is the number of observations from cluster $h$ in the population, and $p_h$ is the sample proportion from cluster $h$.
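
For cluster samples, each sampled cluster contributes $M_h$ times its estimate, scaled up by the ratio of clusters in the population to clusters in the sample. A minimal sketch with hypothetical names; `n` is taken to be the number of sampled clusters passed in, and the example data are made up.

```python
def cluster_estimate(num_clusters, population_size, cluster_sizes, cluster_estimates):
    """Mean or proportion: [N / (n * M)] * sum of M_h * estimate_h.

    num_clusters is N (clusters in the population), population_size is M
    (observations in the population), and n = len(cluster_sizes) is the
    number of sampled clusters.
    """
    n = len(cluster_sizes)
    weighted = sum(M_h * e_h for M_h, e_h in zip(cluster_sizes, cluster_estimates))
    return num_clusters / (n * population_size) * weighted


def cluster_total(num_clusters, cluster_sizes, cluster_estimates):
    """Population total: t = (N / n) * sum of M_h * x-bar_h (or M_h * p_h)."""
    n = len(cluster_sizes)
    weighted = sum(M_h * e_h for M_h, e_h in zip(cluster_sizes, cluster_estimates))
    return num_clusters / n * weighted


# Hypothetical data: 2 of 10 clusters sampled, 1,000 observations in the population.
print(cluster_estimate(10, 1000, [100, 120], [5, 6]), cluster_total(10, [100, 120], [5, 6]))
```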

$$s^2 = P(1 - P)$$

where $s^2$ is an estimate of population variance, and $P$ is the value of the proportion in the null hypothesis.

$$s^2 = \frac{\sum (x_i - \bar{x})^2}{n - 1}$$

where $s^2$ is a sample estimate of population variance, $\bar{x}$ is the sample mean, $x_i$ is the $i$th element from the sample, and $n$ is the number of elements in the sample.

$$s_h^2 = \frac{\sum (x_{ih} - \bar{x}_h)^2}{n_h - 1}$$

where $s_h^2$ is a sample estimate of population variance in stratum $h$, $x_{ih}$ is the value of the $i$th element from stratum $h$, $\bar{x}_h$ is the sample mean from stratum $h$, and $n_h$ is the number of sample observations from stratum $h$.

$$s_h^2 = \frac{\sum (x_{ih} - \bar{x}_h)^2}{m_h - 1}$$

where $s_h^2$ is a sample estimate of population variance in cluster $h$, $x_{ih}$ is the value of the $i$th element from cluster $h$, $\bar{x}_h$ is the sample mean from cluster $h$, and $m_h$ is the number of observations sampled from cluster $h$.

$$s_b^2 = \frac{\sum (t_h - t/N)^2}{n - 1}$$

where $s_b^2$ is a sample estimate of the variance between sampled clusters, $t_h$ is the total from cluster $h$, $t$ is the sample estimate of the population total, $N$ is the number of clusters in the population, and $n$ is the number of clusters in the sample.

You can estimate the population total ($t$) from the following formula:

$$t = \frac{N}{n} \sum_h M_h \bar{x}_h$$

where $M_h$ is the number of observations in the population from cluster $h$, and $\bar{x}_h$ is the sample mean from cluster $h$.
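
The variance estimators above can be sketched as three small functions (hypothetical names). The within-stratum and within-cluster variances $s_h^2$ are the ordinary $n - 1$ sample variance applied to one stratum's or one cluster's observations, so one function covers both. When $t$ is estimated as $(N/n)$ times the sum of the sampled cluster totals, $t/N$ is just the mean of those totals, so $s_b^2$ equals the ordinary sample variance of the cluster totals; the example below checks both results against Python's `statistics.variance`.

```python
from statistics import variance


def proportion_variance(null_proportion):
    """s^2 = P * (1 - P), using the proportion P from the null hypothesis."""
    return null_proportion * (1 - null_proportion)


def sample_variance(values):
    """s^2 = sum of (x_i - x-bar)^2 / (n - 1).

    Applied to the observations from one stratum (or one cluster), this gives
    s_h^2 with n_h - 1 (or m_h - 1) in the denominator.
    """
    mean = sum(values) / len(values)
    return sum((x - mean) ** 2 for x in values) / (len(values) - 1)


def between_cluster_variance(cluster_totals, estimated_total, num_clusters):
    """s_b^2 = sum of (t_h - t/N)^2 / (n - 1) over the n sampled clusters."""
    n = len(cluster_totals)
    mean_total = estimated_total / num_clusters
    return sum((t_h - mean_total) ** 2 for t_h in cluster_totals) / (n - 1)


data = [2, 4, 4, 4, 5, 5, 7, 9]
# The hand-written formula should agree with the standard library's n - 1 variance.
print(sample_variance(data), variance(data))
```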

$$SE = \sqrt{\left(1 - \frac{n}{N}\right) \frac{s^2}{n}}$$

where $n$ is the sample size, $N$ is the population size, and $s$ is a sample estimate of the population standard deviation.

$$SE = \sqrt{N^2 \left(1 - \frac{n}{N}\right) \frac{s^2}{n}}$$

where $N$ is the population size, $n$ is the sample size, and $s^2$ is a sample estimate of the population variance.

$$SE = \frac{1}{N} \sqrt{\sum_h N_h^2 \left(1 - \frac{n_h}{N_h}\right) \frac{s_h^2}{n_h}}$$

where $n_h$ is the number of sample observations from stratum $h$, $N_h$ is the number of elements from stratum $h$ in the population, $N$ is the number of elements in the population, and $s_h^2$ is a sample estimate of the population variance in stratum $h$.

$$SE = \sqrt{\sum_h N_h^2 \left(1 - \frac{n_h}{N_h}\right) \frac{s_h^2}{n_h}}$$

where $N_h$ is the number of elements from stratum $h$ in the population, $n_h$ is the number of sample observations from stratum $h$, and $s_h^2$ is a sample estimate of the population variance in stratum $h$.
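
The four standard-error formulas for simple random and stratified samples can be sketched as below. Stratum inputs are passed as hypothetical `(N_h, n_h, s2_h)` tuples, and $N$ is assumed to equal the sum of the stratum sizes. The usage line reuses the lawn-mower numbers worked out later in this lesson ($s = 20$, $n = 50$, $N = 100{,}000$).

```python
import math


def se_srs_mean(s2, n, N):
    """SE of a mean or proportion from a simple random sample."""
    return math.sqrt((1 - n / N) * s2 / n)


def se_srs_total(s2, n, N):
    """SE of an estimated total from a simple random sample."""
    return math.sqrt(N ** 2 * (1 - n / N) * s2 / n)


def _stratum_sum(strata):
    # strata: iterable of (N_h, n_h, s2_h) tuples
    return sum(N_h ** 2 * (1 - n_h / N_h) * s2_h / n_h for N_h, n_h, s2_h in strata)


def se_stratified_mean(strata):
    """SE of a mean or proportion from a stratified sample; N is the sum of N_h."""
    population_size = sum(N_h for N_h, _, _ in strata)
    return math.sqrt(_stratum_sum(strata)) / population_size


def se_stratified_total(strata):
    """SE of an estimated total from a stratified sample."""
    return math.sqrt(_stratum_sum(strata))


print(round(se_srs_mean(20 ** 2, 50, 100_000), 3))  # 2.828
```

With a single stratum, the stratified formulas reduce to the simple-random-sample ones, which is a handy sanity check.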

where $M$ is the number of observations in the population, $N$ is the number of clusters in the population, $n$ is the number of clusters in the sample, $M_h$ is the number of elements from cluster $h$ in the population, $m_h$ is the number of elements from cluster $h$ in the sample, $\bar{x}_h$ is the sample mean from cluster $h$, $s_h^2$ is a sample estimate of the population variance in cluster $h$, and $t$ is a sample estimate of the population total. For the equation above, use the following formula to estimate the population total.

$$t = \frac{N}{n} \sum_h M_h \bar{x}_h$$

With one-stage cluster sampling, the formula for the standard error reduces to:

where $M$ is the number of observations in the population, $N$ is the number of clusters in the population, $n$ is the number of clusters in the sample, $M_h$ is the number of elements from cluster $h$ in the population, $m_h$ is the number of elements from cluster $h$ in the sample, $p_h$ is the value of the proportion from cluster $h$, and $t$ is a sample estimate of the population total. For the equation above, use the following formula to estimate the population total.

$$t = \frac{N}{n} \sum_h M_h p_h$$

where $N$ is the number of clusters in the population, $n$ is the number of clusters in the sample, $s_b^2$ is a sample estimate of the variance between clusters, $m_h$ is the number of elements from cluster $h$ in the sample, $M_h$ is the number of elements from cluster $h$ in the population, and $s_h^2$ is a sample estimate of the population variance in cluster $h$.

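With one-stage cluster sampling, the standard error of the estimated population total reduces to:

$$SE = N \sqrt{\left(1 - \frac{n}{N}\right) \frac{s_b^2}{n}}$$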

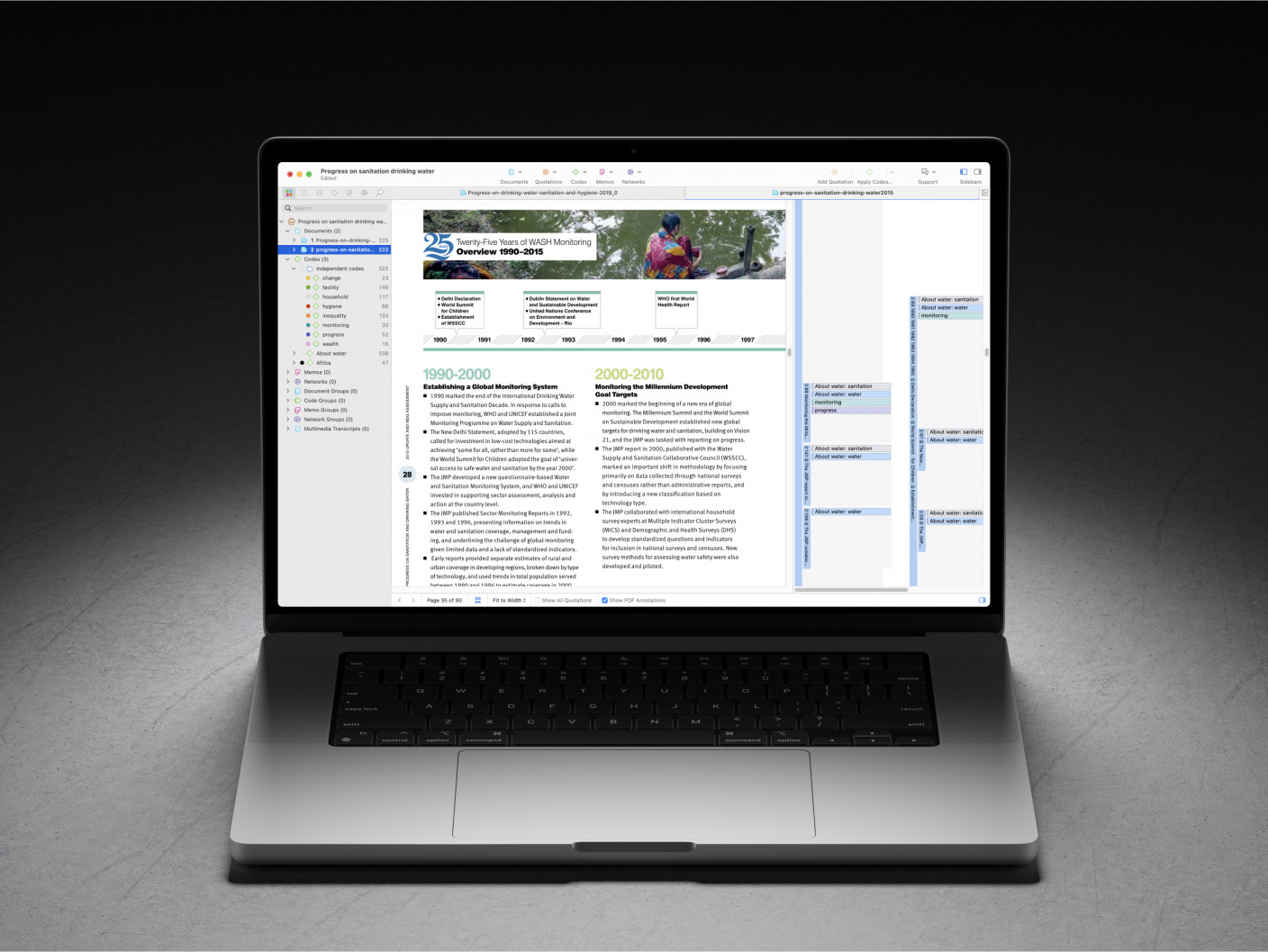

- Choose a significance level. The significance level (denoted by α) is the probability of committing a Type I error. Researchers often set the significance level equal to 0.05 or 0.01.

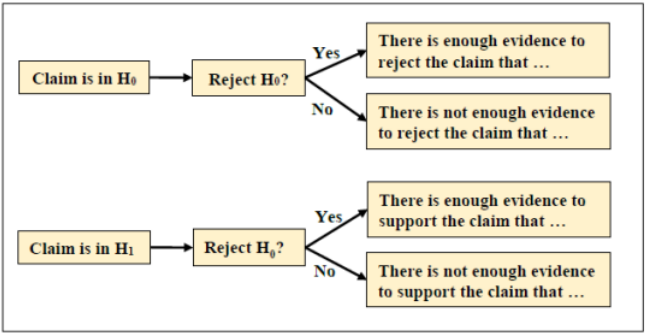

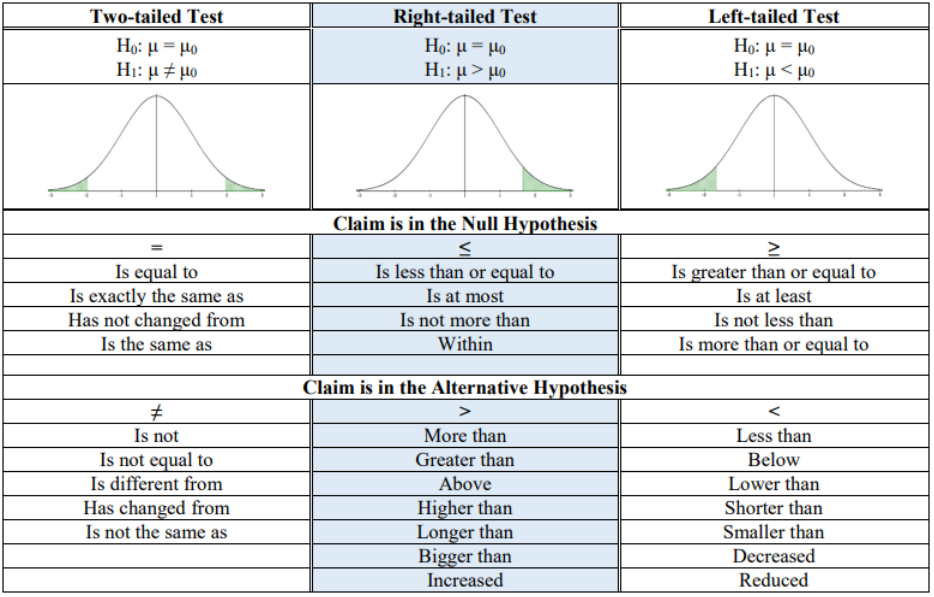

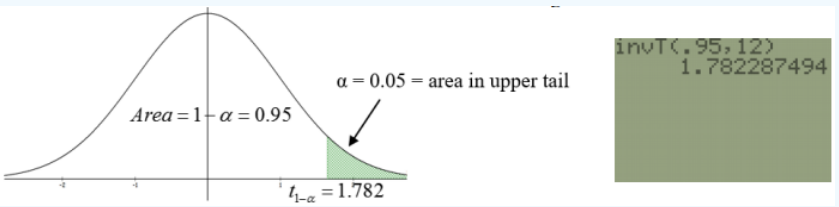

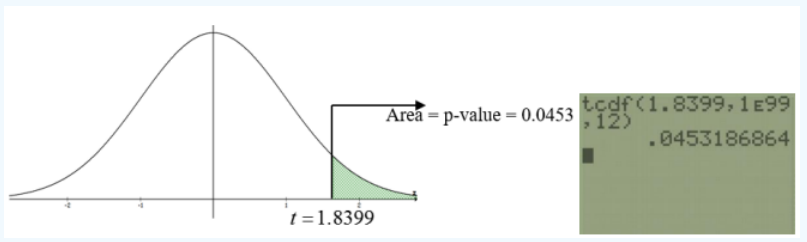

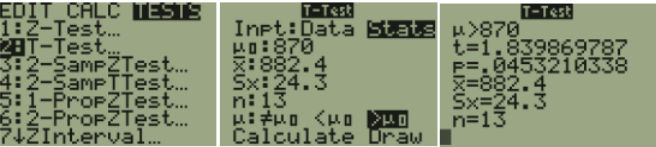

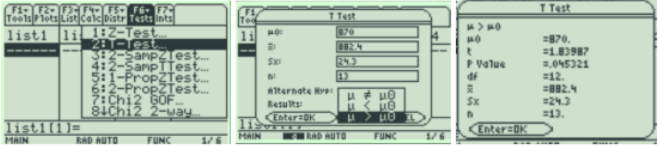

When the null hypothesis is two-tailed, the critical value is the z-score or t-score that has a cumulative probability equal to 1 - α/2. When the null hypothesis is one-tailed, the critical value has a cumulative probability equal to 1 - α.

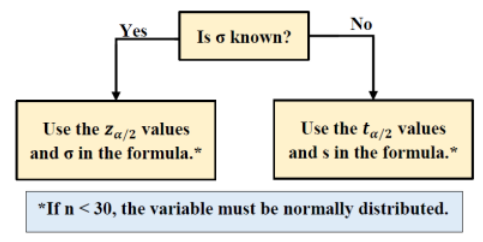

Researchers use a t-score when the sample size is small and a z-score when it is large (at least 30). You can use the Normal Distribution Calculator to find the critical z-score, and the t Distribution Calculator to find the critical t-score.

If you use a t-score, you will have to find the degrees of freedom (df). With simple random samples, df is often equal to the sample size minus one.

Note: The critical value for a one-tailed hypothesis does not equal the critical value for a two-tailed hypothesis. At the same significance level, the critical value for a one-tailed hypothesis is smaller.
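
As an alternative to an online calculator, Python's standard-library `statistics.NormalDist` can find critical z-scores from the cumulative probabilities described above. This is a minimal sketch with a hypothetical function name. The standard library has no t quantile function; `scipy.stats.t.ppf(1 - alpha / 2, df)` is one option outside it for critical t-scores.

```python
from statistics import NormalDist


def critical_z(alpha, two_tailed=True):
    """Critical z-score: cumulative probability 1 - alpha/2 (two-tailed) or 1 - alpha (one-tailed)."""
    probability = 1 - alpha / 2 if two_tailed else 1 - alpha
    return NormalDist().inv_cdf(probability)


print(round(critical_z(0.05), 2), round(critical_z(0.05, two_tailed=False), 3))  # 1.96 1.645
```

The output also illustrates the note above: at α = 0.05 the one-tailed critical value (1.645) is smaller than the two-tailed one (1.96).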

UL = M + SE * CV

- If the null hypothesis is μ > M: The theoretical upper limit of the region of acceptance is plus infinity, unless the parameter in the null hypothesis is a proportion or a percentage. The upper limit is 1 for a proportion, and 100 for a percentage.

LL = M - SE * CV

- If the null hypothesis is μ < M: The theoretical lower limit of the region of acceptance is minus infinity, unless the parameter in the null hypothesis is a proportion or a percentage. The lower limit for a proportion or a percentage is zero.

The region of acceptance is the range of values between LL and UL. If the sample estimate of the population parameter falls outside the region of acceptance, the researcher rejects the null hypothesis. If the sample estimate falls within the region of acceptance, the researcher does not reject the null hypothesis.

By following the steps outlined above, you define the region of acceptance in such a way that the chance of making a Type I error is equal to the significance level.
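
The last two steps and the decision rule can be sketched as one small helper (hypothetical names). The `null_form` string is an assumption of this sketch: `"="` for a two-tailed null, `">"` for a null of the form μ > M (upper limit unbounded), and `"<"` for μ < M (lower limit unbounded). For a proportion, pass bounds of 0 and 1 so the open side is capped as the text describes.

```python
import math


def region_of_acceptance(null_value, se, cv, null_form="=",
                         lower_bound=-math.inf, upper_bound=math.inf):
    """Return (LL, UL) for a null hypothesis about a parameter M.

    "=" : two-tailed, LL = M - SE*CV and UL = M + SE*CV.
    ">" : null says the parameter exceeds M, so UL is the theoretical bound.
    "<" : null says the parameter is below M, so LL is the theoretical bound.
    """
    if null_form == "=":
        return null_value - se * cv, null_value + se * cv
    if null_form == ">":
        return null_value - se * cv, upper_bound
    if null_form == "<":
        return lower_bound, null_value + se * cv
    raise ValueError("null_form must be '=', '>' or '<'")


def reject_null(estimate, region):
    """Reject the null hypothesis when the estimate falls outside [LL, UL]."""
    lower, upper = region
    return not (lower <= estimate <= upper)


region = region_of_acceptance(300, 2.828, 1.96)
print(region, reject_null(295, region))
```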

Test Your Understanding

In this section, two hypothesis testing examples illustrate how to define the region of acceptance. The first problem shows a two-tailed test with a mean score; and the second problem, a one-tailed test with a proportion.


Problem 1 An inventor has developed a new, energy-efficient lawn mower engine. He claims that the engine will run continuously for 5 hours (300 minutes) on a single ounce of regular gasoline. Suppose a random sample of 50 engines is tested. The engines run for an average of 295 minutes, with a standard deviation of 20 minutes.

Consider the null hypothesis that the mean run time is 300 minutes against the alternative hypothesis that the mean run time is not 300 minutes. Use a 0.05 level of significance. Find the region of acceptance. Based on the region of acceptance, would you reject the null hypothesis?

Solution: The analysis of survey data to test a hypothesis takes seven steps. We work through those steps below:

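- Estimate a population parameter. The sample mean (295 minutes) is given in the problem. However, if we had to compute the sample mean from raw data, we could do it using the following formula:

$$\bar{x} = \frac{\sum x}{n}$$

where $\sum x$ is the sum of all the sample observations, and $n$ is the number of sample observations.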

- Estimate population variance. The sample standard deviation (20 minutes) is given in the problem. If we hadn't been given it, we could have computed it from the raw sample data, using the following formula:

$$s = \sqrt{\frac{\sum (x_i - \bar{x})^2}{n - 1}}$$

- Compute standard error. For a mean from a simple random sample, the standard error is $SE = \sqrt{(1 - n/N)\, s^2 / n}$. For this problem, we know that the sample size is 50, and the standard deviation is 20. The population size is not stated explicitly; but, in theory, the manufacturer could produce an infinite number of engines. Therefore, the population size is a very large number. For the purpose of the analysis, we'll assume that the population size is 100,000. Plugging those values into the formula, we find that the standard error is:

$$SE = \sqrt{\left(1 - \frac{50}{100{,}000}\right) \frac{20^2}{50}}$$

$$SE = \sqrt{0.9995 \times 8} = 2.828$$

- Choose a significance level. The significance level (α) is chosen for us in the problem. It is 0.05. (Researchers often set the significance level equal to 0.05 or 0.01.)

When the null hypothesis is two-tailed, the critical value has a cumulative probability equal to 1 - α/2. When the null hypothesis is one-tailed, the critical value has a cumulative probability equal to 1 - α.

For this problem, the null hypothesis and the alternative hypothesis can be expressed as:

$$H_0: \mu = 300 \qquad H_a: \mu \neq 300$$

Since this problem deals with a two-tailed hypothesis, the critical value will be the z-score that has a cumulative probability equal to 1 - α/2. Here, the significance level (α) is 0.05, so the critical value will be the z-score that has a cumulative probability equal to 0.975.

We use the Normal Distribution Calculator to find that the z-score with a cumulative probability of 0.975 is 1.96. Thus, the critical value is 1.96.

- Find the lower limit of the region of acceptance. The lower limit is LL = M - SE * CV, where M is the parameter value in the null hypothesis, SE is the standard error, and CV is the critical value. So, for this problem, we compute the lower limit of the region of acceptance as:

LL = 300 - 2.828 * 1.96

LL = 300 - 5.54

LL = 294.46

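- Find the upper limit of the region of acceptance. The upper limit is UL = M + SE * CV, so for this problem we compute:

UL = 300 + 2.828 * 1.96

UL = 300 + 5.54

UL = 305.54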

Thus, given a significance level of 0.05, the region of acceptance is the range of values between 294.46 and 305.54. In the tests, the engines ran for an average of 295 minutes. That value is within the region of acceptance, so we cannot reject the null hypothesis that the engines run for 300 minutes on an ounce of fuel.
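
The whole lawn-mower calculation can be reproduced in a few lines of standard-library Python, as a check on the hand arithmetic. The population size of 100,000 is the same assumption made in the solution; the critical value is computed with `statistics.NormalDist` rather than read from a calculator.

```python
import math
from statistics import NormalDist

n, N = 50, 100_000           # sample size; population size assumed in the solution
sample_mean, s = 295, 20     # sample mean and standard deviation (minutes)
null_mean, alpha = 300, 0.05

se = math.sqrt((1 - n / N) * s ** 2 / n)           # standard error of the mean
cv = NormalDist().inv_cdf(1 - alpha / 2)           # two-tailed critical z-score
ll, ul = null_mean - se * cv, null_mean + se * cv  # region of acceptance
reject = not (ll <= sample_mean <= ul)
print(f"SE={se:.3f} CV={cv:.2f} region=({ll:.2f}, {ul:.2f}) reject={reject}")
# SE=2.828 CV=1.96 region=(294.46, 305.54) reject=False
```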

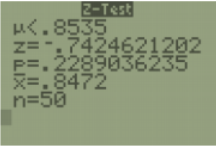

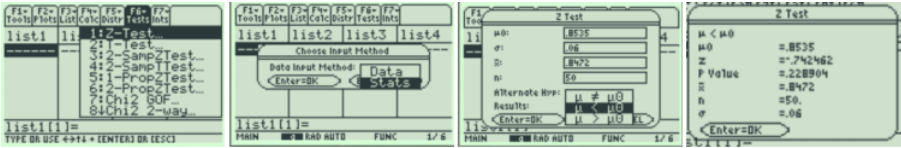

Problem 2 Suppose the CEO of a large software company claims that at least 80 percent of the company's 1,000,000 customers are very satisfied. A survey of 100 randomly sampled customers finds that 73 percent are very satisfied. To test the CEO's hypothesis, find the region of acceptance. Assume a significance level of 0.05.

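- Estimate a population parameter. The sample proportion (0.73) is given in the problem. However, if we had to compute the sample proportion ($p$) from raw data, we could do it by dividing the number of sampled customers who are very satisfied by the sample size $n$.

- Estimate population variance. For a proportion, the population variance can be estimated as:

$$s^2 = P(1 - P)$$

where $s^2$ is the population variance when the true population proportion is $P$, and $P$ is the value of the proportion in the null hypothesis.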

For the purpose of estimating population variance, we assume the null hypothesis is true. In this problem, the null hypothesis states that the true proportion of satisfied customers is 0.8. Therefore, to estimate population variance, we insert that value in the formula:

$$s^2 = 0.8 \times (1 - 0.8)$$

$$s^2 = 0.8 \times 0.2 = 0.16$$

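- Compute standard error. For a proportion from a simple random sample, the standard error is $SE = \sqrt{(1 - n/N)\, s^2 / n}$. For this problem, we know that the sample size is 100, the variance ($s^2$) is 0.16, and the population size is 1,000,000. Plugging those values into the formula, we find that the standard error is: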

SE = sqrt [ (1 - 100/1,000,000) * 0.16 / 100 ]

SE = sqrt(0.9999 * 0.0016) = 0.04

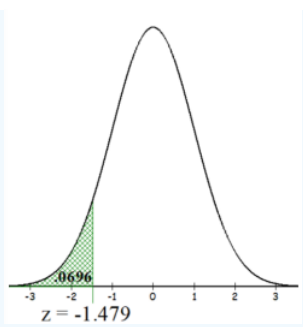

Since this problem deals with a one-tailed hypothesis, the critical value will be the z-score that has a cumulative probability equal to 1 - α. Here, the significance level (α) is 0.05, so the critical value will be the z-score that has a cumulative probability equal to 0.95.

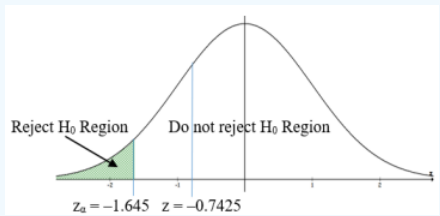

We use the Normal Distribution Calculator to find that the z-score with a cumulative probability of 0.95 is 1.645. Thus, the critical value is 1.645.

LL = 0.8 - 0.04 * 1.645

LL = 0.8 - 0.0658 = 0.7342

- Find the upper limit of the region of acceptance. For this type of one-tailed hypothesis, the theoretical upper limit of the region of acceptance is 1, since any proportion greater than 0.8 is consistent with the null hypothesis, and 1 is the largest value that a proportion can have.

Thus, given a significance level of 0.05, the region of acceptance is the range of values between 0.7342 and 1.0. In the sample survey, the proportion of satisfied customers was 0.73. That value is outside the region of acceptance, so the null hypothesis must be rejected.
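
The customer-satisfaction test can be checked the same way. This sketch follows the solution's assumptions: variance is estimated from the null proportion (0.8), the critical value is one-tailed, and the upper limit is fixed at 1 because the parameter is a proportion.

```python
import math
from statistics import NormalDist

n, N = 100, 1_000_000              # sample size and number of customers
p_hat, null_p, alpha = 0.73, 0.80, 0.05

s2 = null_p * (1 - null_p)                 # variance under the null hypothesis
se = math.sqrt((1 - n / N) * s2 / n)       # standard error of the proportion
cv = NormalDist().inv_cdf(1 - alpha)       # one-tailed critical z-score
ll, ul = null_p - se * cv, 1.0             # upper limit capped at 1 for a proportion
reject = not (ll <= p_hat <= ul)
print(f"SE={se:.4f} CV={cv:.3f} region=({ll:.4f}, {ul:.1f}) reject={reject}")
# SE=0.0400 CV=1.645 region=(0.7342, 1.0) reject=True
```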


J Korean Med Sci, v.37(16); 2022 Apr 25

A Practical Guide to Writing Quantitative and Qualitative Research Questions and Hypotheses in Scholarly Articles

Edward Barroga

1 Department of General Education, Graduate School of Nursing Science, St. Luke’s International University, Tokyo, Japan.

Glafera Janet Matanguihan

2 Department of Biological Sciences, Messiah University, Mechanicsburg, PA, USA.

The development of research questions and the subsequent hypotheses are prerequisites to defining the main research purpose and specific objectives of a study. Consequently, these objectives determine the study design and research outcome. The development of research questions is a process based on knowledge of current trends, cutting-edge studies, and technological advances in the research field. Excellent research questions are focused and require a comprehensive literature search and in-depth understanding of the problem being investigated. Initially, research questions may be written as descriptive questions which could be developed into inferential questions. These questions must be specific and concise to provide a clear foundation for developing hypotheses. Hypotheses are more formal predictions about the research outcomes. These specify the possible results that may or may not be expected regarding the relationship between groups. Thus, research questions and hypotheses clarify the main purpose and specific objectives of the study, which in turn dictate the design of the study, its direction, and outcome. Studies developed from good research questions and hypotheses will have trustworthy outcomes with wide-ranging social and health implications.

INTRODUCTION

Scientific research is usually initiated by posing evidence-based research questions, which are then explicitly restated as hypotheses.[1,2] The hypotheses provide directions to guide the study, solutions, explanations, and expected results.[3,4] Both research questions and hypotheses are essentially formulated based on conventional theories and real-world processes, which allow the inception of novel studies and the ethical testing of ideas.[5,6]

It is crucial to have knowledge of both quantitative and qualitative research,[2] as both types of research involve writing research questions and hypotheses.[7] However, these crucial elements of research are sometimes overlooked; if not overlooked, then framed without the forethought and meticulous attention they need. Planning and careful consideration are needed when developing quantitative or qualitative research, particularly when conceptualizing research questions and hypotheses.[4]

There is a continuing need to support researchers in the creation of innovative research questions and hypotheses, as well as for journal articles that carefully review these elements.[1] When research questions and hypotheses are not carefully thought out, unethical studies and poor outcomes usually ensue. Carefully formulated research questions and hypotheses define well-founded objectives, which in turn determine the appropriate design, course, and outcome of the study. This article therefore aims to discuss in detail the various aspects of crafting research questions and hypotheses, with the goal of guiding researchers as they develop their own. Examples from the authors and peer-reviewed scientific articles in the healthcare field are provided to illustrate key points.

DEFINITIONS AND RELATIONSHIP OF RESEARCH QUESTIONS AND HYPOTHESES

A research question is what a study aims to answer after data analysis and interpretation. The answer is written at length in the discussion section of the paper. Thus, the research question gives a preview of the different parts and variables of the study meant to address the problem posed in the research question.[1] An excellent research question clarifies the research writing while facilitating understanding of the research topic, objective, scope, and limitations of the study.[5]

On the other hand, a research hypothesis is an educated statement of an expected outcome. This statement is based on background research and current knowledge.[8,9] The research hypothesis makes a specific prediction about a new phenomenon[10] or a formal statement on the expected relationship between an independent variable and a dependent variable.[3,11] It provides a tentative answer to the research question to be tested or explored.[4]

Hypotheses employ reasoning to predict a theory-based outcome.[10] These can also be developed from theories by focusing on components of theories that have not yet been observed.[10] The validity of hypotheses is often based on the testability of the prediction made in a reproducible experiment.[8]

Conversely, hypotheses can also be rephrased as research questions. Several hypotheses based on existing theories and knowledge may be needed to answer a research question. Developing ethical research questions and hypotheses creates a research design that has logical relationships among variables. These relationships serve as a solid foundation for the conduct of the study.[4,11] Haphazardly constructed research questions can result in poorly formulated hypotheses and improper study designs, leading to unreliable results. Thus, formulating relevant research questions and verifiable hypotheses is crucial when beginning research.[12]

CHARACTERISTICS OF GOOD RESEARCH QUESTIONS AND HYPOTHESES

Excellent research questions are specific and focused. These integrate collective data and observations to confirm or refute the subsequent hypotheses. Well-constructed hypotheses are based on previous reports and verify the research context. These are realistic, in-depth, sufficiently complex, and reproducible. More importantly, these hypotheses can be addressed and tested.[13]

There are several characteristics of well-developed hypotheses. Good hypotheses are 1) empirically testable[7,10,11,13]; 2) backed by preliminary evidence[9]; 3) testable by ethical research[7,9]; 4) based on original ideas[9]; 5) supported by evidence-based logical reasoning[10]; and 6) predictable.[11] Good hypotheses can infer ethical and positive implications, indicating the presence of a relationship or effect relevant to the research theme.[7,11] These are initially developed from a general theory and branch into specific hypotheses by deductive reasoning. In the absence of a theory on which to base the hypotheses, inductive reasoning from specific observations or findings is used to form more general hypotheses.[10]

TYPES OF RESEARCH QUESTIONS AND HYPOTHESES

Research questions and hypotheses are developed according to the type of research, which can be broadly classified into quantitative and qualitative research. We provide a summary of the types of research questions and hypotheses under quantitative and qualitative research categories in Table 1.

Research questions in quantitative research

In quantitative research, research questions inquire about the relationships among variables being investigated and are usually framed at the start of the study. These are precise and typically linked to the subject population, dependent and independent variables, and research design.[1] Research questions may also attempt to describe the behavior of a population in relation to one or more variables, or describe the characteristics of variables to be measured (descriptive research questions).[1,5,14] These questions may also aim to discover differences between groups within the context of an outcome variable (comparative research questions),[1,5,14] or elucidate trends and interactions among variables (relationship research questions).[1,5] We provide examples of descriptive, comparative, and relationship research questions in quantitative research in Table 2.

Hypotheses in quantitative research

In quantitative research, hypotheses predict the expected relationships among variables.[15] Relationships among variables that can be predicted include 1) between a single dependent variable and a single independent variable (simple hypothesis) or 2) between two or more independent and dependent variables (complex hypothesis).[4,11] Hypotheses may also specify the expected direction to be followed and imply an intellectual commitment to a particular outcome (directional hypothesis).[4] On the other hand, hypotheses may not predict the exact direction and are used in the absence of a theory, or when findings contradict previous studies (non-directional hypothesis).[4] In addition, hypotheses can 1) define interdependency between variables (associative hypothesis),[4] 2) propose an effect on the dependent variable from manipulation of the independent variable (causal hypothesis),[4] 3) state that there is no relationship between two variables (null hypothesis),[4,11,15] 4) replace the working hypothesis if rejected (alternative hypothesis),[15] 5) explain the relationship of phenomena to possibly generate a theory (working hypothesis),[11] 6) involve quantifiable variables that can be tested statistically (statistical hypothesis),[11] or 7) express a relationship whose interlinks can be verified logically (logical hypothesis).[11] We provide examples of simple, complex, directional, non-directional, associative, causal, null, alternative, working, statistical, and logical hypotheses in quantitative research, as well as the definition of quantitative hypothesis-testing research in Table 3.

Research questions in qualitative research

Unlike research questions in quantitative research, research questions in qualitative research are usually continuously reviewed and reformulated. The central question and associated subquestions are stated more often than hypotheses.[15] The central question broadly explores a complex set of factors surrounding the central phenomenon, aiming to present the varied perspectives of participants.[15]

There are varied goals for which qualitative research questions are developed. These questions can function in several ways, such as to 1) identify and describe existing conditions (contextual research questions); 2) describe a phenomenon (descriptive research questions); 3) assess the effectiveness of existing methods, protocols, theories, or procedures (evaluation research questions); 4) examine a phenomenon or analyze the reasons or relationships between subjects or phenomena (explanatory research questions); or 5) focus on unknown aspects of a particular topic (exploratory research questions).[5] In addition, some qualitative research questions provide new ideas for the development of theories and actions (generative research questions) or advance specific ideologies of a position (ideological research questions).[1] Other qualitative research questions may build on a body of existing literature and become working guidelines (ethnographic research questions). Research questions may also be broadly stated without specific reference to the existing literature or a typology of questions (phenomenological research questions), may be directed towards generating a theory of some process (grounded theory questions), or may address a description of the case and the emerging themes (qualitative case study questions).[15] We provide examples of contextual, descriptive, evaluation, explanatory, exploratory, generative, ideological, ethnographic, phenomenological, grounded theory, and qualitative case study research questions in qualitative research in Table 4, and the definition of qualitative hypothesis-generating research in Table 5.

Qualitative studies usually pose at least one central research question and several subquestions starting with How or What. These research questions use exploratory verbs such as explore or describe. These also focus on one central phenomenon of interest, and may mention the participants and research site.[15]

Hypotheses in qualitative research

Hypotheses in qualitative research are stated in the form of a clear statement concerning the problem to be investigated. Unlike in quantitative research, where hypotheses are usually developed to be tested, qualitative research can lead to both hypothesis-testing and hypothesis-generating outcomes.[2] When studies require both quantitative and qualitative research questions, this suggests an integrative process between both research methods wherein a single mixed-methods research question can be developed.[1]

FRAMEWORKS FOR DEVELOPING RESEARCH QUESTIONS AND HYPOTHESES

Research questions followed by hypotheses should be developed before the start of the study.[1,12,14] It is crucial to develop feasible research questions on a topic that is interesting to both the researcher and the scientific community. This can be achieved by a meticulous review of previous and current studies to establish a novel topic. Specific areas are subsequently focused on to generate ethical research questions. The relevance of the research questions is evaluated in terms of clarity of the resulting data, specificity of the methodology, objectivity of the outcome, depth of the research, and impact of the study.[1,5] These aspects constitute the FINER criteria (i.e., Feasible, Interesting, Novel, Ethical, and Relevant).[1] Clarity and effectiveness are achieved if research questions meet the FINER criteria. In addition to the FINER criteria, Ratan et al. described focus, complexity, novelty, feasibility, and measurability for evaluating the effectiveness of research questions.[14]

The PICOT and PEO frameworks are also used when developing research questions. 1 The PICOT framework addresses the following elements: P, population/patients/problem; I, intervention or indicator being studied; C, comparison group; O, outcome of interest; and T, timeframe of the study. The PEO framework addresses P, population being studied; E, exposure to preexisting conditions; and O, outcome of interest. 1 Research questions are also considered good if they meet the “FINERMAPS” framework: Feasible, Interesting, Novel, Ethical, Relevant, Manageable, Appropriate, Potential value/publishable, and Systematic. 14

As we indicated earlier, research questions and hypotheses that are not carefully formulated can result in unethical studies or poor outcomes. To illustrate this, we provide examples of ambiguous research questions and hypotheses that result in unclear and weak research objectives in quantitative research (Table 6) 16 and qualitative research (Table 7), 17 and show how to transform these ambiguous research questions and hypotheses into clear and good statements.

Table 6 notes: a These statements were composed for comparison and illustrative purposes only.

b These statements are direct quotes from Higashihara and Horiuchi. 16

Table 7 notes: a This statement is a direct quote from Shimoda et al. 17

The other statements were composed for comparison and illustrative purposes only.

CONSTRUCTING RESEARCH QUESTIONS AND HYPOTHESES

To construct effective research questions and hypotheses, it is very important to 1) clarify the background and 2) identify the research problem at the outset of the research, within a specific timeframe. 9 Then, 3) review or conduct preliminary research to collect all available knowledge about the possible research questions by studying theories and previous studies. 18 Afterwards, 4) construct research questions to investigate the research problem. Identify the variables to be assessed from the research questions 4 and make operational definitions of constructs from the research problem and questions. Thereafter, 5) construct specific deductive or inductive predictions in the form of hypotheses. 4 Finally, 6) state the study aims. This general flow for constructing effective research questions and hypotheses prior to conducting research is shown in Fig. 1.

Research questions are used more frequently than objectives or hypotheses in qualitative research. 3 These questions seek to discover, understand, explore, or describe experiences by asking “What” or “How.” The questions are open-ended to elicit a description rather than to relate variables or compare groups. The questions are continually reviewed, reformulated, and changed during the qualitative study. 3 In quantitative research, survey projects likewise tend to use research questions, whereas experiments more often use hypotheses, to compare variables and their relationships.

Hypotheses are constructed from the identified variables and stated as if-then statements, following the template ‘If a specific action is taken, then a certain outcome is expected.’ At this stage, the researcher must form some expectations about the outcome of the planned research. 18 Then, the variables to be manipulated (independent) and influenced (dependent) are defined. 4 Thereafter, the hypothesis is stated and refined, and reproducible data tailored to the hypothesis are identified, collected, and analyzed. 4 The hypotheses must be testable and specific, 18 and should describe the variables and their relationships, the specific group being studied, and the predicted research outcome. 18 Hypothesis construction involves deducing a testable proposition from theory and separating the independent and dependent variables so that each can be measured separately. 3 Therefore, good hypotheses must be based on good research questions constructed at the start of a study or trial. 12

In summary, research questions are constructed after establishing the background of the study. Hypotheses are then developed based on the research questions. Thus, it is crucial to have excellent research questions to generate superior hypotheses. In turn, these would determine the research objectives and the design of the study, and ultimately, the outcome of the research. 12 Algorithms for building research questions and hypotheses are shown in Fig. 2 for quantitative research and in Fig. 3 for qualitative research.

EXAMPLES OF RESEARCH QUESTIONS FROM PUBLISHED ARTICLES

- EXAMPLE 1. Descriptive research question (quantitative research)

- - Presents research variables to be assessed (distinct phenotypes and subphenotypes)

- “BACKGROUND: Since COVID-19 was identified, its clinical and biological heterogeneity has been recognized. Identifying COVID-19 phenotypes might help guide basic, clinical, and translational research efforts.

- RESEARCH QUESTION: Does the clinical spectrum of patients with COVID-19 contain distinct phenotypes and subphenotypes?” 19

- EXAMPLE 2. Relationship research question (quantitative research)

- - Shows interactions between the dependent variable (static postural control) and the independent variable (peripheral visual field loss)

- “Background: Integration of visual, vestibular, and proprioceptive sensations contributes to postural control. People with peripheral visual field loss have serious postural instability. However, the directional specificity of postural stability and sensory reweighting caused by gradual peripheral visual field loss remain unclear.

- Research question: What are the effects of peripheral visual field loss on static postural control?” 20

- EXAMPLE 3. Comparative research question (quantitative research)

- - Clarifies the difference between groups with an outcome variable (patients enrolled in COMPERA with moderate or severe PH in COPD) and another group without the outcome variable (patients with idiopathic pulmonary arterial hypertension [IPAH])

- “BACKGROUND: Pulmonary hypertension (PH) in COPD is a poorly investigated clinical condition.

- RESEARCH QUESTION: Which factors determine the outcome of PH in COPD?

- STUDY DESIGN AND METHODS: We analyzed the characteristics and outcome of patients enrolled in the Comparative, Prospective Registry of Newly Initiated Therapies for Pulmonary Hypertension (COMPERA) with moderate or severe PH in COPD as defined during the 6th PH World Symposium who received medical therapy for PH and compared them with patients with idiopathic pulmonary arterial hypertension (IPAH).” 21

- EXAMPLE 4. Exploratory research question (qualitative research)

- - Explores areas that have not been fully investigated (perspectives of families and children who receive care in clinic-based child obesity treatment) to have a deeper understanding of the research problem

- “Problem: Interventions for children with obesity lead to only modest improvements in BMI and long-term outcomes, and data are limited on the perspectives of families of children with obesity in clinic-based treatment. This scoping review seeks to answer the question: What is known about the perspectives of families and children who receive care in clinic-based child obesity treatment? This review aims to explore the scope of perspectives reported by families of children with obesity who have received individualized outpatient clinic-based obesity treatment.” 22

- EXAMPLE 5. Relationship research question (quantitative research)

- - Defines interactions between the dependent variable (use of ankle strategies) and the independent variable (changes in muscle tone)

- “Background: To maintain an upright standing posture against external disturbances, the human body mainly employs two types of postural control strategies: “ankle strategy” and “hip strategy.” While it has been reported that the magnitude of the disturbance alters the use of postural control strategies, it has not been elucidated how the level of muscle tone, one of the crucial parameters of bodily function, determines the use of each strategy. We have previously confirmed using forward dynamics simulations of human musculoskeletal models that an increased muscle tone promotes the use of ankle strategies. The objective of the present study was to experimentally evaluate a hypothesis: an increased muscle tone promotes the use of ankle strategies. Research question: Do changes in the muscle tone affect the use of ankle strategies?” 23

EXAMPLES OF HYPOTHESES IN PUBLISHED ARTICLES

- EXAMPLE 1. Working hypothesis (quantitative research)

- - A hypothesis that is initially accepted for further research to produce a feasible theory

- “As fever may have benefit in shortening the duration of viral illness, it is plausible to hypothesize that the antipyretic efficacy of ibuprofen may be hindering the benefits of a fever response when taken during the early stages of COVID-19 illness.” 24

- “In conclusion, it is plausible to hypothesize that the antipyretic efficacy of ibuprofen may be hindering the benefits of a fever response. The difference in perceived safety of these agents in COVID-19 illness could be related to the more potent efficacy to reduce fever with ibuprofen compared to acetaminophen. Compelling data on the benefit of fever warrant further research and review to determine when to treat or withhold ibuprofen for early stage fever for COVID-19 and other related viral illnesses.” 24

- EXAMPLE 2. Exploratory hypothesis (qualitative research)

- - Explores particular areas deeper to clarify subjective experience and develop a formal hypothesis potentially testable in a future quantitative approach

- “We hypothesized that when thinking about a past experience of help-seeking, a self distancing prompt would cause increased help-seeking intentions and more favorable help-seeking outcome expectations.” 25

- “Conclusion

- Although a priori hypotheses were not supported, further research is warranted as results indicate the potential for using self-distancing approaches to increasing help-seeking among some people with depressive symptomatology.” 25

- EXAMPLE 3. Hypothesis-generating research to establish a framework for hypothesis testing (qualitative research)

- “We hypothesize that compassionate care is beneficial for patients (better outcomes), healthcare systems and payers (lower costs), and healthcare providers (lower burnout).” 26

- “Compassionomics is the branch of knowledge and scientific study of the effects of compassionate healthcare. Our main hypotheses are that compassionate healthcare is beneficial for (1) patients, by improving clinical outcomes, (2) healthcare systems and payers, by supporting financial sustainability, and (3) HCPs, by lowering burnout and promoting resilience and well-being. The purpose of this paper is to establish a scientific framework for testing the hypotheses above. If these hypotheses are confirmed through rigorous research, compassionomics will belong in the science of evidence-based medicine, with major implications for all healthcare domains.” 26

- EXAMPLE 4. Statistical hypothesis (quantitative research)

- - An assumption is made about the relationship among several population characteristics (gender differences in sociodemographic and clinical characteristics of adults with ADHD). Validity is tested by statistical experiment or analysis (chi-square test, Student's t-test, and logistic regression analysis)

- “Our research investigated gender differences in sociodemographic and clinical characteristics of adults with ADHD in a Japanese clinical sample. Due to unique Japanese cultural ideals and expectations of women's behavior that are in opposition to ADHD symptoms, we hypothesized that women with ADHD experience more difficulties and present more dysfunctions than men . We tested the following hypotheses: first, women with ADHD have more comorbidities than men with ADHD; second, women with ADHD experience more social hardships than men, such as having less full-time employment and being more likely to be divorced.” 27

- “Statistical Analysis

- (text omitted) Between-gender comparisons were made using the chi-squared test for categorical variables and Students t-test for continuous variables…(text omitted). A logistic regression analysis was performed for employment status, marital status, and comorbidity to evaluate the independent effects of gender on these dependent variables.” 27
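The chi-squared test named in this example can be illustrated with a minimal sketch. It assumes a hypothetical 2×2 table (gender by presence or absence of a comorbidity) whose counts are invented for illustration and are not data from the cited study. The code computes Pearson's chi-squared statistic without continuity correction and compares it with the 5% critical value for one degree of freedom (approximately 3.841); the function and variable names are illustrative only.

```python
# Minimal sketch: Pearson's chi-squared statistic for a 2x2 contingency table.
# The counts below are HYPOTHETICAL and invented for illustration only;
# they are not data from the study quoted above.

CRITICAL_VALUE_DF1_ALPHA05 = 3.841  # chi-squared critical value, df = 1, alpha = 0.05


def chi_square_2x2(table):
    """Return Pearson's chi-squared statistic (no Yates correction) for a 2x2 table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed_count in enumerate(row):
            # Expected count under the null hypothesis that gender and comorbidity are independent
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed_count - expected) ** 2 / expected
    return stat


# Rows: women, men; columns: with comorbidity, without comorbidity (hypothetical counts)
observed = [[30, 20],
            [20, 30]]
stat = chi_square_2x2(observed)
print(f"chi-squared = {stat:.2f}")  # chi-squared = 4.00
print("reject H0 at 5%:", stat > CRITICAL_VALUE_DF1_ALPHA05)  # reject H0 at 5%: True
```

In practice, a statistics library such as SciPy's `scipy.stats.chi2_contingency` would typically be used instead; note that it applies Yates' continuity correction to 2×2 tables by default, so its statistic will differ from this uncorrected value.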

EXAMPLES OF HYPOTHESIS AS WRITTEN IN PUBLISHED ARTICLES IN RELATION TO OTHER PARTS

- EXAMPLE 1. Background, hypotheses, and aims are provided

- “Pregnant women need skilled care during pregnancy and childbirth, but that skilled care is often delayed in some countries…(text omitted). The focused antenatal care (FANC) model of WHO recommends that nurses provide information or counseling to all pregnant women…(text omitted). Job aids are visual support materials that provide the right kind of information using graphics and words in a simple and yet effective manner. When nurses are not highly trained or have many work details to attend to, these job aids can serve as a content reminder for the nurses and can be used for educating their patients (Jennings, Yebadokpo, Affo, & Agbogbe, 2010) (text omitted). Importantly, additional evidence is needed to confirm how job aids can further improve the quality of ANC counseling by health workers in maternal care…(text omitted)” 28

- “This has led us to hypothesize that the quality of ANC counseling would be better if supported by job aids. Consequently, a better quality of ANC counseling is expected to produce higher levels of awareness concerning the danger signs of pregnancy and a more favorable impression of the caring behavior of nurses.” 28

- “This study aimed to examine the differences in the responses of pregnant women to a job aid-supported intervention during ANC visit in terms of 1) their understanding of the danger signs of pregnancy and 2) their impression of the caring behaviors of nurses to pregnant women in rural Tanzania.” 28

- EXAMPLE 2. Background, hypotheses, and aims are provided